- Find Answers

- :

- Using Splunk

- :

- Dashboards & Visualizations

- :

- Re: Mysterious data spike

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Mysterious data spike

I have a plethora of survey data from several thousand video conference calls.

After each call, users are asked to fill out a survey.

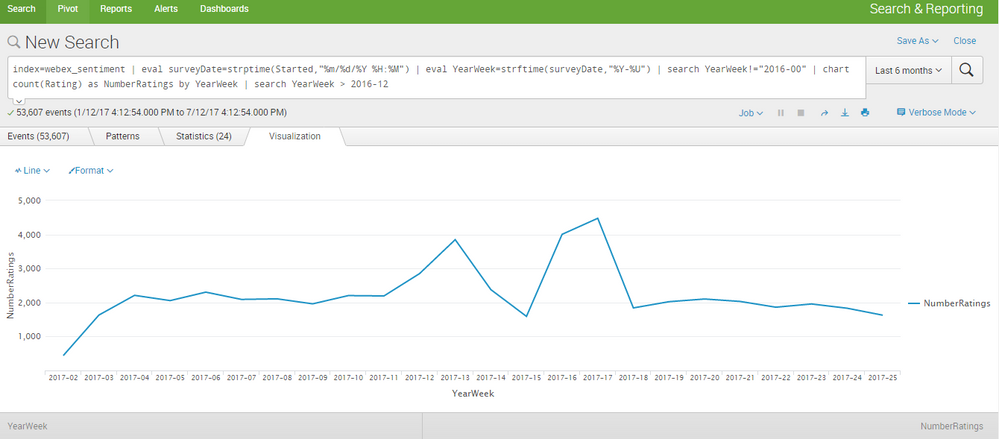

I have found a mysterious spike in a number of survey results during the month of April that is skewing my visualizations.

The spike can be seen here:

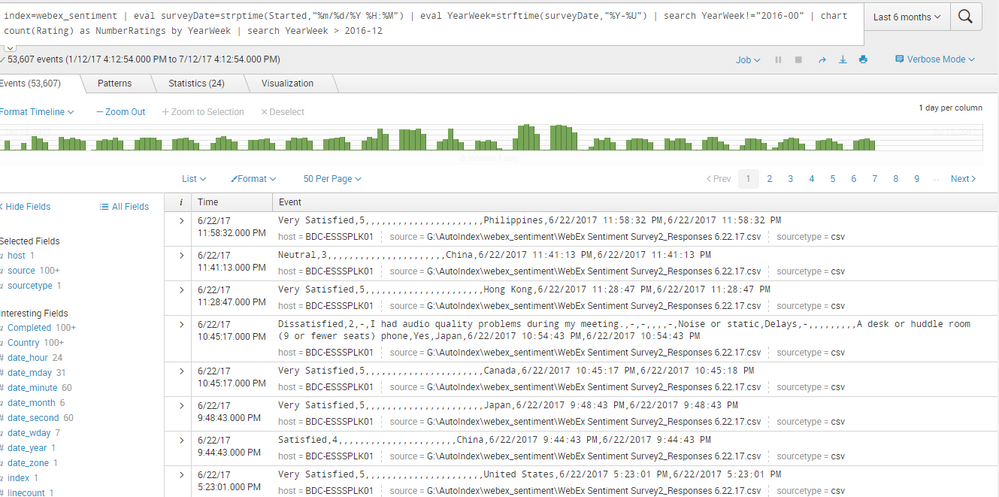

Here is a sample of the event data:

This makes no sense, as there was no surge in number of calls during this time. My data is corrupt, but I'm not sure how/why.

Please advise.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@mmodestino

Thank you so much!!

Here are the first eight events in list form:

7/10/17

12:40:28.000 AM

Very Satisfied,5,,,,,,,,,,,,,,,,,,,,,,China,7/4/17 0:56,7/4/17 0:56

host = BDC-ESSSPLK01 source = G:\AutoIndex\webex_sentiment\WebEx Sentiment Survey2_Responses 7.4.17.csv sourcetype = csv

7/10/17

12:40:28.000 AM

Very Satisfied,5,,,,,,,,,,,,,,,,,,,,,,China,7/4/17 0:04,7/4/17 0:04

host = BDC-ESSSPLK01 source = G:\AutoIndex\webex_sentiment\WebEx Sentiment Survey2_Responses 7.4.17.csv sourcetype = csv

7/10/17

12:37:39.000 AM

Very Satisfied,5,,,,,,,,,,,,,,,,,,,,,,Philippines,6/29/17 0:32,6/29/17 0:32

host = BDC-ESSSPLK01 source = G:\AutoIndex\webex_sentiment\WebEx Sentiment Survey2_Responses 6.29.17.csv sourcetype = csv

7/10/17

12:37:39.000 AM

Very Satisfied,5,,,,,,,,,,,,,,,,,,,,,,Philippines,6/29/17 0:10,6/29/17 0:10

host = BDC-ESSSPLK01 source = G:\AutoIndex\webex_sentiment\WebEx Sentiment Survey2_Responses 6.29.17.csv sourcetype = csv

7/10/17

12:37:34.000 AM

Very Satisfied,5,,,,,,,,,,,,,,,,,,,,,,China,6/28/17 0:45,6/28/17 0:45

host = BDC-ESSSPLK01 source = G:\AutoIndex\webex_sentiment\WebEx Sentiment Survey2_Responses 6.28.17.csv sourcetype = csv

7/10/17

12:37:34.000 AM

Very Satisfied,5,,,,,,,,,,,,,,,,,,,,,,Japan,6/28/17 0:36,6/28/17 0:36

host = BDC-ESSSPLK01 source = G:\AutoIndex\webex_sentiment\WebEx Sentiment Survey2_Responses 6.28.17.csv sourcetype = csv

7/10/17

12:37:34.000 AM

Very Satisfied,5,,,,,,,,,,,,,,,,,,,,,,China,6/28/17 0:08,6/28/17 0:08

host = BDC-ESSSPLK01 source = G:\AutoIndex\webex_sentiment\WebEx Sentiment Survey2_Responses 6.28.17.csv sourcetype = csv

7/10/17

12:37:28.000 AM

Very Satisfied,5,,,,,,,,,,,,,,,,,,,,,,Taiwan,6/27/17 0:08,6/27/17 0:08

host = BDC-ESSSPLK01 source = G:\AutoIndex\webex_sentiment\WebEx Sentiment Survey2_Responses 6.27.17.csv sourcetype = csv

Here are those same eight events in raw form:

Very Satisfied,5,,,,,,,,,,,,,,,,,,,,,,China,7/4/17 0:56,7/4/17 0:56

Very Satisfied,5,,,,,,,,,,,,,,,,,,,,,,China,7/4/17 0:04,7/4/17 0:04

Very Satisfied,5,,,,,,,,,,,,,,,,,,,,,,Philippines,6/29/17 0:32,6/29/17 0:32

Very Satisfied,5,,,,,,,,,,,,,,,,,,,,,,Philippines,6/29/17 0:10,6/29/17 0:10

Very Satisfied,5,,,,,,,,,,,,,,,,,,,,,,China,6/28/17 0:45,6/28/17 0:45

Very Satisfied,5,,,,,,,,,,,,,,,,,,,,,,Japan,6/28/17 0:36,6/28/17 0:36

Very Satisfied,5,,,,,,,,,,,,,,,,,,,,,,China,6/28/17 0:08,6/28/17 0:08

Very Satisfied,5,,,,,,,,,,,,,,,,,,,,,,Taiwan,6/27/17 0:08,6/27/17 0:08

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

ah also, can you provide the header values from the csv? I could just make one up, but better we get you sorted the whole way

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Where do I find that?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

In one of the raw csv files, or they were hardcoded in your props.conf when the data was onboarded

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I must apologize for my lack of knowledge; I'm very new to Splunk.

Where can I find the raw csv files or the props.conf?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

no problem! I just fudged it.

I came up with this props with your data, (i used the field_names just to fudge the headers, you likely dont need that as I bet you monitor is ingesting files with headers):

[ webex_sentiment_csv ]

CHARSET=UTF-8

INDEXED_EXTRACTIONS=csv

KV_MODE=none

SHOULD_LINEMERGE=false

category=Structured

description=Webex Sentiment Comma-separated value format. Set header and other settings in "Delimited Settings"

disabled=false

pulldown_type=true

TIME_FORMAT=%m/%d/%y %k:%M

TIMESTAMP_FIELDS=field25

FIELD_NAMES=field1,field2,field3,field4,field5,field6,field7,field8,field9,field10,field11,field12,field13,field14,field15,field16,field17,field18,field19,field20,field21,field22,field23,field24,field25,field26

TZ=UTC

TRUNCATE=1000

Here is a good visualization of what went wrong:

As you can see the time with the single hour digit caused Splunk to be unable to parse the timestamp

All you need to do is work with your admin and make sure the that props is set up to read the headers, then point to the header field that had your timestamp in in and properly parse it

The add data wiz makes these issues easy to avoid and validate

good reference for props.conf:

http://docs.splunk.com/Documentation/Splunk/6.6.2/Data/Whysourcetypesmatter

Are you responsible for ingesting the data into splunk or do you have an admin?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@mmodestino I am so grateful for your help!

We have a Splunk application owner who manages the solution, but I do have admin capabilities.

I believe this particular data set is ingested constantly through forwarders.

Where do I need to input the props code you created?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You will need it on the forwarders, I'd put it on the indexers too for good measure.

Don't put exactly what I provided as you likely don't need the field_names.

the main thing for you will be

TIME_FORMAT=%m/%d/%y %k:%M

TIMESTAMP_FIELDS=<yourTimestampField>

Ask your data owner to get you the raw file you are ingesting so you can see the headers, then I could provide the exact config

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@mmodestino Thanks again for your help. I've made some good progress.

I believe the problem is not with all single hour digits, but rather just with the zero.

This screenshot shows correctly ingested data at 5 AM: http://imgur.com/a/3ABDc

I was able to find the raw csv files, and here are screenshots of the headers: http://imgur.com/a/GQoc9

Please let me know the config changes I need to make 🙂

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

this should do it. Needs to be on the forwarders...id probably put it in an app and push to ufs and idx for good measure.

[ webex_sentiment_csv ]

CHARSET=UTF-8

INDEXED_EXTRACTIONS=csv

KV_MODE=none

SHOULD_LINEMERGE=false

category=Structured

description=Webex Sentiment CSVs

disabled=false

pulldown_type=true

TIME_FORMAT=%m/%d/%y %k:%M

TZ=UTC

TIMESTAMP_FIELDS=<set this to "Started or Completed" whatever field makes sense to you>

HEADER_FIELD_LINE_NUMBER=1

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@mmodestino

Back with more questions 🙂

I emailed our Splunk admin and this was his response:

However, this was never set up to have the timestamp correspond with the survey time. The timestamp was initially being taken on when the data was ingested into the index. We used the time fields from the survey for when a survey was taken vs the timestamp for the record.

I'm not sure that I really understand what he is saying. And there still must be a problem because the data is incorrect.

Any chance you can interpret this for me? Will changing the props.conf fix things?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The admin is advising that the _time field in Splunk is not a representation of anything other than when the data was ingested. Thus your events can't really be looked at over _time to provide any insight other than how may events were ingested at any given time....you must use fields extracted from the events as your time series. (which you were trying)

This can work, but I would still confirm the props.conf to confirm we aren't letting Splunk automatically detect the date at all. The format and timezone should always be explicitly set to ensure the timestamps are interpreted correctly.

TIME_FORMAT=%m/%d/%y %k:%M

TZ=UTC

Also, if we are going to use the time fields from the survey anyways, might as well use it as timestamp, as the ingest time is useless in analysis of this data, not to mention it takes you like 4 pipes to get the data prepped for charting.

Anyways, I'll play with the strftime extractions you are being forced to do and see if theres any weirdness there...but yeah...i still say you clean up the on boarding to set the timestamp as one of the fields from the data, rather than a useless timestamp for when the data was ingested...unless thats useful for someone??

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

ok so a ton of places this can go wrong after playing with this...

In the events in your original screenshot, the strptime is extracting against the "Started" field and is expecting the full year "2017" - "%m/%d/%Y %H:%M" - note the captial %Y

In the events you posted here, and the ones I ingested, the Year in the "Started" field is not 2017 it is 17. Thus the strfptime eval is invalid for these events and needs to be: "%m/%d/%y %H:%M"....are you receiving 2 different "Started" field time formats???

I think this adventure mainly points back at this data needing to be revisited in the onboarding phase, to iron out these gotchas and ensure uniform ingestion and prep before analysis.

Thats how mysterious results occur 😉

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks again. I completely agree that the survey time fields should be the time stamp, and I will try to work with the admin to accomplish that...

I don't understand why it showed up as 2017 then as 17 - I don't think I'm receiving different "Started" field time formats.

Here is the original screenshot and CSV data: http://imgur.com/a/SjJpd

Here is the screenshot and CSV data that I posted later: http://imgur.com/a/gCsGX

Perhaps my query in the first screenshot caused the different date format?

Perhaps the single/double hour time digit change is a contributing factor?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Check your imgur photos from when you were zooming in on the spike. You can see the mixed timestamps there. You will need to account for this before making any changes, as setting the field as the timestamp will be affected

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Could you clarify what you mean by account for the mixed time stamps?

Can this be fixed by changing the forwarders to this code you wrote?

[ webex_sentiment_csv ]

CHARSET=UTF-8

INDEXED_EXTRACTIONS=csv

KV_MODE=none

SHOULD_LINEMERGE=false

category=Structured

description=Webex Sentiment CSVs

disabled=false

pulldown_type=true

TIME_FORMAT=%m/%d/%y %k:%M

TZ=UTC

TIMESTAMP_FIELDS=<set this to "Started or Completed" whatever field makes sense to you>

HEADER_FIELD_LINE_NUMBER=1

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Check out the results in this screenshot

You have dates in the "Started" field that look like 4/26/2017 10:54 (two of those from Brazil) then a third copy that looks like 4/26/17 10:54

so I think what @dalJeanis and I have both concluded in different ways, is that there are some serious data integrity issues that need to be ironed out before any tangible analysis can be done.

It definitely looks like there are duplicate events, and it most certainly looks like the data ingestion of these files needs a good long look.

I would start by reviewing how you get this data, and from where. I am almost thinking you have 2 forwarders monitoring active/standby machines or something....you are getting two copies of identical data, then one copy with a different TIME_FORMAT

The props configuration above is only suitable for the events with the year as a two digit number. It just needs to be set to a %Y if you clean up the timestamps to all reflect 2017 instead. So mostly the code is good.

Sounds like you have a capable admin. I would suggest a working session to review the data integrity of this data source...it may be that this is a known issue that they neve went back and cleaned up cause someone only started looking now 😉

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @mmodestino

I'm still having difficulty getting the timestamp to parse correctly.

Here is my props.conf with your code: http://imgur.com/a/9xtrJ

I've looked through the CSV files and the incoming data is correct. The problem is in the way Splunk is ingesting the timestamp.

Here is a picture for reference of the incorrect timestamping: http://imgur.com/a/gB2na

Here is the raw data coming in from the csv: http://imgur.com/a/MPFrJ

I'm not sure if this matters, but those # signs change to numbers when the field is expanded: http://imgur.com/a/IWGr6

I believe I need to change the props.conf, but I'm not sure to what exactly.

Here is the timestamp parsing occurring incorrectly in the Add Data Wizard: http://imgur.com/a/hYBEQ

I hope you are able to solve the problem with that information. Thanks again for all your help 🙂

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hey Matt,

Sorry for the delay in getting back to you.

The data is looking good now!

The timestamp is being extracted properly and is Splunk is converting the timestamp to the local timezone you have set under your account settings (which appears to be UTC -5).

So we are almost there....2 things:

1) Can you confirm the webex timestamps are UTC?

2) Confirm your account settings are UTC-5 and that Splunk is correctly translating the original UTC timestamp to your local time in the _time field

Also, once this is done, please remember this will only affect the data that is ingested AFTER the changes. Any old data will need to be re-indexed to correct the issues.

Also, have your admin keep in mind, these props.conf need to be placed on the forwarder as you are dealing with indexed_extractions (i put it on the UF and IDX for continuity sake)

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@mmodestino_splunk - I upvoted your answer (because it deserves it) and also 3 of your comments to pass along the 50 points that the OP gave me, which were probably meant for you.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Have you ruled out a change in response rate? Perhaps the April calls encouraged more people to answer the surveys.

If this reply helps you, Karma would be appreciated.