traffic fails regularly

Hello all

I have a problem with my Splunk deployment.

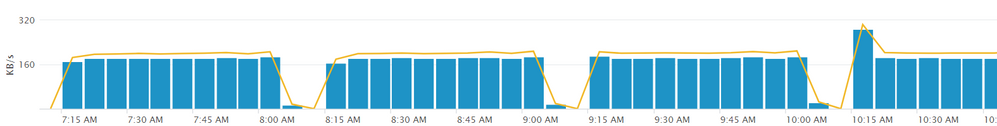

The Monitoring Console shows that traffic forwarded from the forwarders to the indexer fails regularly every hour, exactly from x:05 to x:15. Why does this happen, and how can I prevent it?

Thank you @amitm05

Recently, there have been spikes on some forwarders' connections.

I don't know how to check for data loss. I can only say that there are no records in the failure interval, and all records have an index_time outside the failure interval.

The receiver port 9997 is up and accessible during the failure interval.

The logs provided above show error messages where the read operation is failing on the indexer. I am referring to the following messages in the log file:

04-21-2018 13:14:52.368 -0400 ERROR TcpInputProc - Error encountered for connection from src=x.x.x.1:43534. Read Timeout Timed out after 600 seconds.

04-21-2018 13:14:53.378 -0400 ERROR TcpInputProc - Error encountered for connection from src=x.x.x.2:63327. Read Timeout Timed out after 600 seconds.

04-21-2018 13:14:57.592 -0400 ERROR TcpInputProc - Error encountered for connection from src=x.x.x.3:60907. Read

These messages report a read timeout of 600 seconds (10 minutes), which matches the length of time for which the traffic fails.
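To make the timing explicit, here is a minimal Python sketch that parses a `TcpInputProc` read-timeout line of the form quoted above and subtracts the reported timeout from the log timestamp. The function name `silence_window` and the regular expression are illustrative assumptions, not part of Splunk; the pattern is written only against the lines shown in this thread.

```python
import re
from datetime import datetime, timedelta

# Pattern written against the TcpInputProc lines quoted in this thread;
# other splunkd.log variants may need a different expression.
LINE_RE = re.compile(
    r"^(?P<ts>\d{2}-\d{2}-\d{4} \d{2}:\d{2}:\d{2}\.\d{3}) (?P<tz>[+-]\d{4}) "
    r"ERROR TcpInputProc - .*src=(?P<src>\S+?)\. "
    r"Read Timeout Timed out after (?P<secs>\d+) seconds"
)


def silence_window(line):
    """Return (src, start, end) for a read-timeout line, or None if no match.

    `end` is the time the error was logged; `start` is `end` minus the
    reported timeout, i.e. roughly when the connection went quiet.
    """
    m = LINE_RE.match(line)
    if m is None:
        return None  # truncated or unrelated line
    end = datetime.strptime(m["ts"] + " " + m["tz"], "%m-%d-%Y %H:%M:%S.%f %z")
    start = end - timedelta(seconds=int(m["secs"]))
    return m["src"], start, end
```

For the first quoted line, the error is logged at 13:14:52 and the timeout is 600 seconds, so the connection went quiet at about 13:04:52, which is consistent with the x:05 to x:15 window reported in the question.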

However, I have a question for you, @ 920087764.

Are we talking about data loss here, or just an indexing gap? If the missed events are actually received after those 10 minutes, then it is not data loss but only an indexing delay for that short duration. The absence of a spike after indexing resumes suggests that this is probably a data loss scenario, but I still want to confirm this with you.
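The distinction between loss and delay can be sketched in Python. This is only an illustration, assuming you have already exported pairs of event time and index time (in Splunk these would come from the `_time` and `_indextime` fields); the function name `classify_gap` and its return labels are hypothetical.

```python
from datetime import datetime


def classify_gap(events, gap_start, gap_end):
    """Classify what happened to events whose timestamps fall in the gap.

    events: iterable of (event_time, index_time) datetime pairs.
    Returns one of three illustrative labels.
    """
    in_gap = [(e, i) for e, i in events if gap_start <= e < gap_end]
    if not in_gap:
        # Nothing carries a timestamp inside the gap: either the events
        # were lost or the sources produced nothing in that interval.
        return "no events for gap (possible loss)"
    if all(i >= gap_end for _, i in in_gap):
        # Events exist but were all indexed after the gap ended.
        return "delayed indexing"
    return "indexed during gap"
```

An empty result for the gap does not prove loss on its own, since a quiet source gives the same picture, so it is worth comparing against what the source itself logged during that interval.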

Also, the connection errors during that time can have many possible causes, such as an antivirus or host scan, or any other network activity that blocks external connections to the indexer.

One thing to try is to telnet to the receiver port 9997 of your indexer during that time and see whether it fails.

I hope this leads you towards the solution!

Please respond with any updates you may have.

Usually there is a spike after indexing resumes.

Port 9997 is up during that time.

I need help.

The above pattern is continuing. 😞

OK, so let's start from the beginning (heh, beginning, but in only vague order!)

- Confirm there are no problems on the networking side. This may take wireshark/tcpdump on a few clients to confirm.

- Also on a few forwarders, check their system usage (cpu, network, etc...) at the time when they drop out.

- Check your servers' health. Go to Settings, Monitoring Console, open the "Health Check" tab, and click "Start" in the upper right. If nothing shows up, check through the other MC pages for high resource consumption or errors.

- You say "all search are disabled". What does this mean? Are you using "disabled" as "I have disabled them" or are you saying that you try to run searches "but they don't work"?

I think that's a good start. My guess is you have networking or client issues you are unaware of (not particularly likely) or you have hardware/system problems on your Splunk indexers (more likely).

Take good notes of things you see that look "interesting". Screenshots help, and especially accurate descriptions of what it is you are doing.

I honestly think that you may be best off calling Support and having them help you through this. Something's going on and I have an inkling that you may have a problem with a server or two. But who knows? Might be something really simple you just haven't noticed yet.

We need WAY more information. What is the URL for your MC visualization (where are you in MC)? Which panel? What search is driving that panel?

All searches are disabled. The problem affects only data forwarded from the forwarders to the receiver. I will attach the picture in the next post.

Check your splunkd.log and see what messages you are getting then.

04-21-2018 13:00:03.980 -0400 ERROR KVStorageProvider - An error occurred during the last operation ('saveBatchData', domain: '11', code: '22'): Cannot do an empty bulk write

04-21-2018 13:04:10.885 -0400 ERROR TsidxStats - sid:searchparsetmp_552825949 Inexact lispy query: [ AND action app dest dm src token user ]

04-21-2018 13:04:10.885 -0400 ERROR TsidxStats - WHERE clause is not an exact query

04-21-2018 13:04:29.677 -0400 WARN DispatchSearchMetadata - could not read metadata file: /$Splunk_HOME/var/run/splunk/dispatch/ansarian_adminSplunkEnterpriseSecuritySuiteRMD5d73b432578a81d_at_152452269_75089/metadata.csv

04-21-2018 13:04:31.282 -0400 WARN DispatchSearchMetadata - could not read metadata file: /$Splunk_HOME/var/run/splunk/dispatch/ansarianadminSplunkEnterpriseSecuritySuite_RMD54f6d0fghmfeb66d6_at_1524560271_75092/metadata.csv

04-21-2018 13:05:44.670 -0400 INFO WatchedFile - Checksum for seekptr didn't match, will re-read entire file='/$Splunk_HOME/var/log/splunk/audit.log'.

04-21-2018 13:05:44.670 -0400 INFO WatchedFile - Will begin reading at offset=0 for file='/$Splunk_HOME/var/log/splunk/audit.log'.

04-21-2018 13:14:52.368 -0400 ERROR TcpInputProc - Error encountered for connection from src=x.x.x.1:43534. Read Timeout Timed out after 600 seconds.

04-21-2018 13:14:53.378 -0400 ERROR TcpInputProc - Error encountered for connection from src=x.x.x.2:63327. Read Timeout Timed out after 600 seconds.

04-21-2018 13:14:57.592 -0400 ERROR TcpInputProc - Error encountered for connection from src=x.x.x.3:60907. Read