Where do I start with troubleshooting full queues?

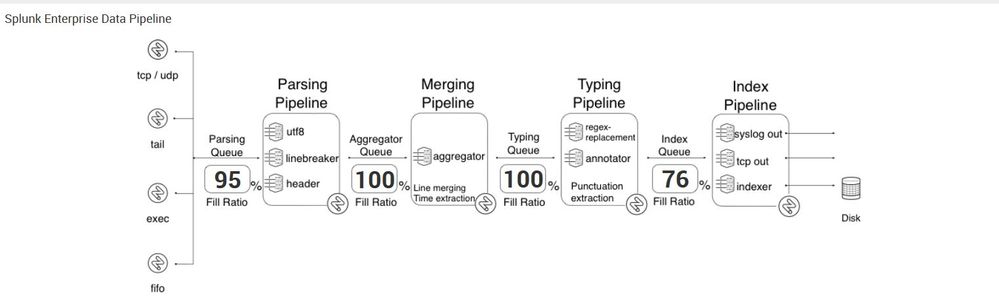

So, there's this...

Looking for documentation/advice on where to start.

Check your index-time field extractions and the regexes in your transforms.conf file. The best practice for troubleshooting blocked queues is to check the rightmost full queue, which in this case is your typing queue. Something in the typing queue is taking too long to complete, and that is backing up the rest of the system. Make sure your regexes aren't too greedy and that you aren't doing too many index-time field extractions.
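To make "rightmost full queue" concrete, here is a minimal Python sketch, not an official Splunk tool. It assumes metrics.log lines of the form `group=queue, name=..., max_size_kb=..., current_size_kb=...` and a pipeline order of parsing, aggregation (merging), typing, index. The sample lines, the 90% threshold, and the function names are all hypothetical.

```python
import re

# Assumed pipeline order, upstream (left) to downstream (right).
PIPELINE_ORDER = ["parsingqueue", "aggqueue", "typingqueue", "indexqueue"]

# Matches key=value pairs in a metrics.log line (assumed format).
KV = re.compile(r"(\w+)=([^,\s]+)")

# Hypothetical sample lines, trimmed to the fields the sketch uses.
SAMPLE = [
    "INFO Metrics - group=queue, name=parsingqueue, max_size_kb=512, current_size_kb=511",
    "INFO Metrics - group=queue, name=aggqueue, max_size_kb=1024, current_size_kb=1023",
    "INFO Metrics - group=queue, name=typingqueue, max_size_kb=500, current_size_kb=499",
    "INFO Metrics - group=queue, name=indexqueue, max_size_kb=500, current_size_kb=40",
]


def queue_fill_ratios(lines):
    """Return {queue_name: highest fill ratio seen} for group=queue lines."""
    ratios = {}
    for line in lines:
        fields = dict(KV.findall(line))
        if fields.get("group") != "queue":
            continue
        try:
            name = fields["name"]
            ratio = float(fields["current_size_kb"]) / float(fields["max_size_kb"])
        except (KeyError, ValueError, ZeroDivisionError):
            continue  # skip lines without usable name/size fields
        ratios[name] = max(ratios.get(name, 0.0), ratio)
    return ratios


def rightmost_full_queue(ratios, threshold=0.9):
    """Return the most downstream queue at or above the threshold, or None."""
    for name in reversed(PIPELINE_ORDER):
        if ratios.get(name, 0.0) >= threshold:
            return name
    return None
```

With the sample above, the parsing, aggregation, and typing queues are all nearly full while the index queue is not, so `rightmost_full_queue(queue_fill_ratios(SAMPLE))` picks the typing queue as the place to look first.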

So it sounds like the place to start is the typing queue, which, according to https://wiki.splunk.com/Community:HowIndexingWorks, handles the regex processing. Does anyone have good ideas on how to correlate regex performance back to a given sourcetype/stanza in the conf files? That would save @a212830 some grunt work.
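One rough way to approach that correlation offline is to time each candidate regex against sample events, keyed by the stanza it came from. The Python sketch below does that. The stanza labels, patterns, and events are hypothetical, and Python's `re` module is not the regex engine Splunk uses, so treat the timings as relative hints only.

```python
import re
import time

# Hypothetical patterns, keyed by the transforms.conf stanza they would come from.
PATTERNS = {
    "mask_card": r"\d{4}-\d{4}-\d{4}-(\d{4})",
    "greedy_kv": r".*user=(.*).*status=(.*)",
}

# Hypothetical sample events for one sourcetype.
EVENTS = [
    "2016-01-01 12:00:00 user=alice status=200 card=1111-2222-3333-4444",
    "2016-01-01 12:00:01 user=bob status=500 path=/checkout",
]


def rank_regex_cost(patterns, sample_events, repeats=50):
    """Time each regex over the sample events.

    Returns a list of (label, seconds) tuples, slowest first.
    """
    timings = []
    for label, pattern in patterns.items():
        compiled = re.compile(pattern)
        start = time.perf_counter()
        for _ in range(repeats):
            for event in sample_events:
                compiled.search(event)
        timings.append((label, time.perf_counter() - start))
    return sorted(timings, key=lambda item: item[1], reverse=True)
```

Calling `rank_regex_cost(PATTERNS, EVENTS)` returns the stanzas ordered by measured cost, which gives a shortlist of regexes to review by hand.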

In addition, look here: http://wiki.splunk.com/Community:TroubleshootingBlockedQueues

A blocked typing queue is usually a sign of complex or inefficient regex replacement in props/transforms. The merging and parsing pipelines upstream are suffering as a result.

The indexing pipeline also looks a little busier than it should, especially given that the typing pipeline is slowing things down. Slow-ish storage?

My first guess is that this is down to excessive or inefficient regex operations during parsing. It could be index-time extractions or even field-replacement/masking operations. Another possibility is a lack of up-front sourcetyping at the input layer: if Splunk doesn't know what sourcetype to apply to the data, it will try to figure it out itself on each event, which is obviously not a good idea.

I would review all inputs, props and transforms entries to see if you can spot any outliers or operations that have been applied to most or all of the incoming data. Alternatively there’s this app on Splunkbase – Data Curator: https://splunkbase.splunk.com/app/1848/ - which will provide efficiency scores on all sourcetypes based on *.conf file entries. It hasn’t been updated in a while so still says it’s for 6.2, but I expect it to work on 6.4.x as well.
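As a small aid for that review, the Python sketch below lists the stanzas in an inputs.conf that never set `sourcetype`. It is a simplification under stated assumptions: it reads a single file's text with the standard `configparser` module, and it ignores Splunk's `[default]` inheritance and the layering of conf files across apps. The sample stanzas and paths are hypothetical.

```python
import configparser

# Hypothetical inputs.conf content.
SAMPLE_INPUTS = "\n".join([
    "[monitor:///var/log/messages]",
    "sourcetype = syslog",
    "",
    "[monitor:///opt/app/logs/*.log]",
    "index = main",
])


def inputs_missing_sourcetype(conf_text):
    """Return the stanza names that do not set `sourcetype`."""
    parser = configparser.ConfigParser(
        strict=False,         # tolerate repeated stanzas and keys
        interpolation=None,   # treat % characters literally
        allow_no_value=True,  # tolerate keys written without a value
    )
    parser.optionxform = str  # keep key names exactly as written
    parser.read_string(conf_text)
    return [
        name
        for name in parser.sections()
        if name != "default" and not parser.has_option(name, "sourcetype")
    ]
```

Running `inputs_missing_sourcetype(SAMPLE_INPUTS)` flags the second monitor stanza, which is the kind of input where Splunk would have to guess the sourcetype.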

Not really an answer but these are good resources to start:

- http://conf.splunk.com/speakers/2015.html#search=splunkd&

- Docs and wiki searches for what actions are performed in the different queues.

I believe I attended one of those sessions and may have listened to the replay of the other. As I recall, they go into this a little. I'll also reach out to peers to get more eyes on this question.

Asynchronous comment from a peer: you troubleshoot queuing issues by starting at the first blocking queue and then stepping upstream.

Admittedly, this is a hack and we haven't identified the root cause but it got us going and got Splunk back to a healthy status.

We had an issue where Splunk appeared to be trying to write to an index directory that no longer existed. The index still existed, but the bucket had been removed (maybe the retention configuration rolled it off before the Splunk processes could complete their tasks). We determined this by running 'htop' on the indexer and identifying the process id of the splunkd process that was spiking so high. Then we ran 'strace' on that process, wrote the output to a local file, and found the error. Lastly, we created the directory it couldn't find, and the problem resolved itself. Again, a hack, but we're still working to identify the root cause.

So, more specifically, we logged onto the indexer that was congested (a Linux box) and executed the following steps:

- Execute 'htop' command

- Identify the process id of the splunk process that's 'spiking'

- Execute 'strace -p process_id -o strace.out' (NOTE: process_id is the process id identified in the previous step and strace.out is just an arbitrary filename to store the output. strace writes its trace to stderr, so a plain '>' redirect would not capture it; '-o' does.)

- You will see some limited action, but let this run for 5 seconds or so and then kill it (Ctrl-C)

- Open strace.out and grep for 'No such file or directory' (strace reports this error as ENOENT)

- Once you've identified the directory, make the directory it couldn't find

- Execute 'htop'

- If that was the only directory it couldn't find, the processing utilization for the splunk process(es) should decrease
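The search through strace.out can be sketched in Python as follows. This assumes strace lines of the usual shape `syscall("path", ...) = -1 ENOENT (No such file or directory)`. The sample lines and paths are hypothetical, and the sketch only reports missing paths; it does not create anything.

```python
import re
from collections import Counter

# Assumed line shape: syscall("path", ...) = -1 ENOENT (No such file or directory)
ENOENT_LINE = re.compile(r'"([^"]+)".*=\s*-1\s+ENOENT')

# Hypothetical strace output lines.
SAMPLE_TRACE = [
    'stat("/opt/splunk/var/lib/splunk/example/db/hot_v1_7", 0x7ffc1a2b3c40) = -1 ENOENT (No such file or directory)',
    'open("/opt/splunk/var/lib/splunk/example/db/hot_v1_7/rawdata", O_RDONLY) = -1 ENOENT (No such file or directory)',
    'stat("/opt/splunk/var/lib/splunk/example/db/hot_v1_7", 0x7ffc1a2b3c40) = -1 ENOENT (No such file or directory)',
    'read(12, "abc", 4096) = 3',
]


def missing_paths(strace_lines):
    """Count the paths that syscalls failed to find, most frequent first."""
    counts = Counter()
    for line in strace_lines:
        match = ENOENT_LINE.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts.most_common()
```

On a real capture, something like `missing_paths(open("strace.out"))` would list the paths to check. A path that repeats many times within a few seconds is the likeliest candidate for the directory Splunk keeps retrying.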