Tail Read error

I have a server where logs are generated daily in this format:

/ABC/DEF/XYZ/xyz17012022.zip /ABC/DEF/XYZ/xyz16012022.zip /ABC/DEF/XYZ/xyz15012022.zip

OR

/ABC/DEF/RST/rst17012022.gz /ABC/DEF/RST/rst16012022.gz /ABC/DEF/RST/rst15012022.gz

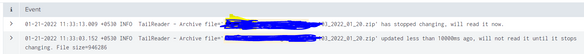

I get this error every time I index a .gz, .tar, or .zip file: "updated less than 10000ms ago, will not read it until it stops changing ; has stopped changing , will read it now."

This problem was earlier addressed in this post,

As suggested there, I have set "crcSalt = <SOURCE>", but I am still facing similar errors.

inputs.conf:

[monitor:///ABC/DEF/XYZ/xyz*.zip]

index = log_critical

disabled = false

sourcetype = Critical_XYZ

ignoreOlderThan = 2d

crcSalt = <SOURCE>

I am getting this event in the internal logs while ingesting the log file

Those are not errors. They're informational messages and can be ignored safely.

If this reply helps you, Karma would be appreciated.

I am not able to pick up these compressed files, and they are not getting indexed. Is there anything wrong with my configuration?

Handling compressed files requires special care from Splunk.

With a normal text file you can usually just read the file, remember your position within it, and later read from that point on, possibly combining the newly read data with an unprocessed chunk left over from the previous read. Relatively easy.
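To illustrate the idea, here is a rough Python sketch of that kind of "remember the offset" reading. It is not how Splunk actually implements it; `read_new_lines` and its parameters are made-up names used only for this example.

```python
def read_new_lines(path, offset, leftover=b""):
    """Read whatever was appended to `path` since byte `offset`.

    Returns (complete_lines, new_offset, new_leftover), where
    new_leftover is a trailing partial line to carry into the next call.
    """
    with open(path, "rb") as f:
        f.seek(offset)          # resume where the previous read stopped
        chunk = f.read()        # everything appended since then

    # Prepend the unfinished line from the previous read, then split.
    data = leftover + chunk
    lines = data.split(b"\n")

    # The last element is either b"" (data ended with a newline)
    # or an incomplete line that must wait for the next read.
    new_leftover = lines.pop()

    decoded = [line.decode("utf-8", errors="replace") for line in lines]
    return decoded, offset + len(chunk), new_leftover
```

The caller keeps `offset` and `leftover` between calls and passes them back in on the next poll, which is all the state a plain-text tail needs.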

With compressed files it's not that easy, especially with zipped files. I won't go too deep into details (especially since I don't know exactly how Splunk does it ;-)), but in general: if the file is constantly changing, there's no point in uncompressing it, because it is probably still in the middle of the compression process and an unpack attempt might give you erroneous data. (De)compression is also a somewhat CPU-intensive operation. So Splunk waits until the file "seems finished".
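As a sketch of that "wait until it stops changing" logic (again, only my guess at the general approach, not Splunk's code): the function names are hypothetical, and the 10-second quiet period is an assumption taken from the 10000ms in the log message.

```python
import gzip
import os
import time

# Assumed quiet period, mirroring the "10000ms" in the log message.
QUIET_PERIOD_S = 10.0


def is_stable(path, quiet_period_s=QUIET_PERIOD_S, now=None):
    """True if the file was last modified at least quiet_period_s ago."""
    now = time.time() if now is None else now
    return now - os.path.getmtime(path) >= quiet_period_s


def read_gzip_when_stable(path, quiet_period_s=QUIET_PERIOD_S, now=None):
    """Return the decompressed lines, or None if the file changed too recently.

    A None result corresponds to "will not read it until it stops changing";
    a list corresponds to "has stopped changing, will read it now".
    """
    if not is_stable(path, quiet_period_s, now):
        return None
    with gzip.open(path, "rt", encoding="utf-8", errors="replace") as f:
        return f.read().splitlines()
```

Seen this way, the two messages are just the two branches of the same check, which is why seeing both for one file is normal.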

However, the second message ("has stopped changing , will read it now") suggests that Splunk had already decided the file was OK to read and started processing it, so if you're not getting any data from it, the misconfiguration might be elsewhere.