- Find Answers

- :

- Using Splunk

- :

- Dashboards & Visualizations

- :

- Re: Mysterious data spike

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Mysterious data spike

I have a plethora of survey data from several thousand video conference calls.

After each call, users are asked to fill out a survey.

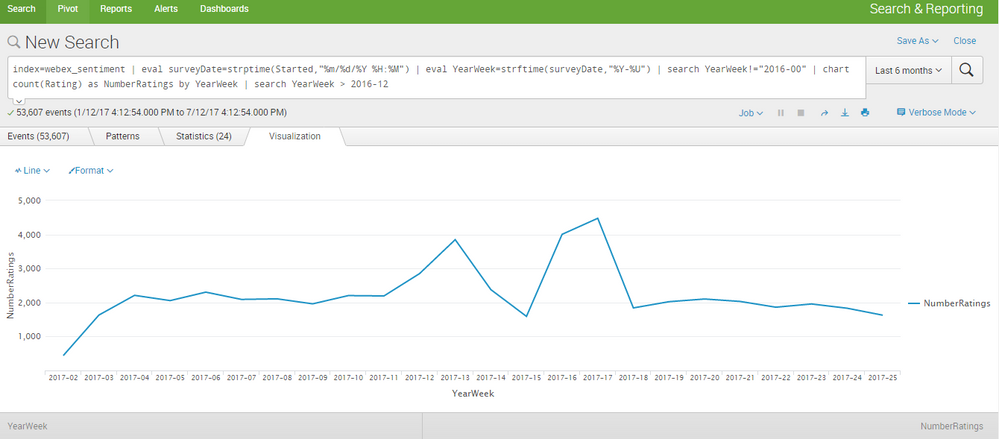

I have found a mysterious spike in a number of survey results during the month of April that is skewing my visualizations.

The spike can be seen here:

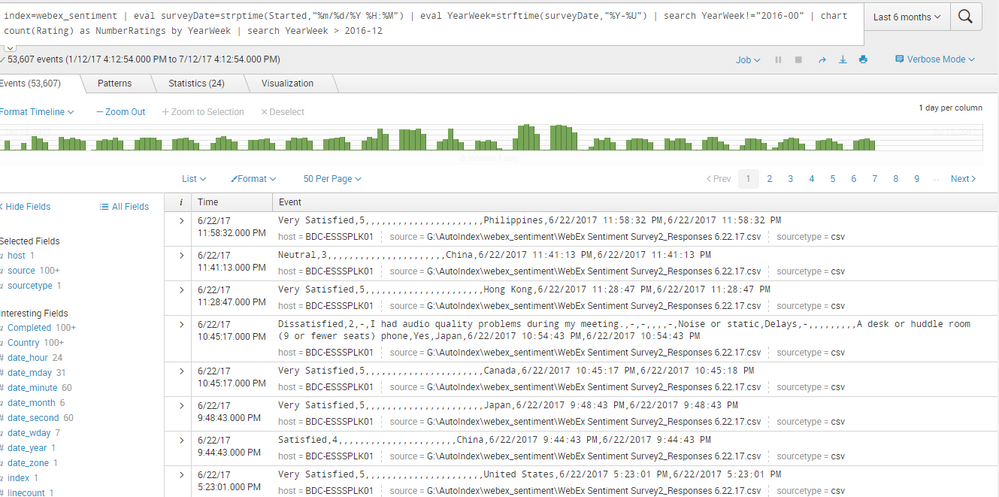

Here is a sample of the event data:

This makes no sense, as there was no surge in number of calls during this time. My data is corrupt, but I'm not sure how/why.

Please advise.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

did you precisely checked the peak time information. Manually checking for the results will really help. Devices work as they are made for and events generate, as we use them.

We can work together to resolve this issue..

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@anand_singh17 What do you propose? I believe the problem is duplicate events

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@mhtedford:

Please check one most important thing at your source, which is your inputs.conf, the very reason, the duplicacy may occur again and reaching to root is important. Two possibilities would have happened.

- Inputs.conf is added with this additional configuration made to read data 'ignoreEarlierThan=xdays' and,

- Splunkd service at the source would have been stopped or restarted with some reason/cause. Because of this pause of UF or HF, it will pick up data, as there is no method in Splunk yet to compare or verify, if the data is already indexed for so and so and so, matches or criterias.

As action,

1. You can verify inputs.conf

2. delete this additional record[caution], but please [imp] take help from splunk administrator, even though you have privileges. Any mistake, may lead to corrupt bucket or create issue in index.

Looking for your update,

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Ummm. Okay, so suppose there were no surge in calls, but the calls had more participants than usual?

That surge would be completely accurate and non-mysterious.

How can you verify that in the data you have access to?

Another way this could be investigated is by chewing it up in pieces - for instance, try this,,,

your search before the chart command

| bin _time span=1d

| stats count as daycount by country _time

| eventstats avg(daycount) as avgcount stdev(daycount) as stdevcount by country

| where daycount> avgcount + 3*stdevcount

...which will tell you which countries spiked which days. If it's a simple subset, then it gives you information to track down.

Now, the other thing I don't understand about the data is that there doesn't seem to be an indication of which call it was on (unless the field is alphabetically after "linecount"). I guess that's okay as anonymous feedback goes, but it kind of hamstrings you as far as analysis goes. If there is such a field, then do a count by "webconferencenumber" and date, and then check for outliers in that. But, if that field existed, I would have expected you to have already done that.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@mhtedford - thanks for the points. Has your problem been solved, was that an accident, or what?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@DalJeanis was just thanking you for your help - still trying to figure things out, but I'm much closer.

Here is a screenshot of the available data fields: http://imgur.com/a/GQoc9

Looks like there is not a field which uniquely identifies each conference call, outside of the time stamp.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

By the way, I'm pretty sure that mmodestino got it right - when you fix the ingestion for the timestamps around midnight, the data should properly smooth out.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@DalJeanis

I also believe that mmodestino is on the right track - just need to work with my admin to get things sorted out with his help.

I input your query with the correct capitalization:

index = webex_sentiment

| eval surveyDate = strptime(Started,"%m/%d/%Y %H:%M")

| eval YearWeek=strftime(surveyDate,"%Y-%U")

| bin _time span=1d

| stats count as daycount by Country _time

| eventstats avg(daycount) as avgcount stdev(daycount) as stdevcount by Country

| where daycount> avgcount + 3*stdevcount

This is the events list: http://imgur.com/a/SpnJP

This is the country visualization: http://imgur.com/a/MH0KM

The yellow spike on the far right is calls in the United States, which makes sense as our company is based here.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Since your spike is on the order of several hundred, we can ignore any Country records that are under 100. We can also ignore any records that aren't on the dates in question. Given what we see, the issue is either broad-based or only in the US, that up-down-up spike on the far right.

However, the Germany and Hungary results that do show up look a little suspicious to me, since the repeated numbers jump out and blink at me - 108/90/108 and 15/15/15. THAT made me scan back at the other numbers.

WAY too many of your daycount numbers are divisible by 3. Multiples of 3 should be about a third in any large natural process (23-43% is fine) but these are over 90%.

I checked the code and WE didn't cause that during analysis, you and I.

Conclusion - That is not real data. Chances are pretty good that some file(s) got processed not twice, but three times. And, because the dups are on all different days all across the globe, chances are pretty good it's baked into your system and happens every day. (Which makes me wonder about the 3 in 55 that have +1. What wrong with THEM?)

Let's see if I'm right. There is this highly technical procedure, that I am amazingly adept at, called "poking around".

Pull just the detail events for those days for Hungary 15/15/15. If they look like triple dups, then pull Germany 108/90/108 and check them. Should be the same.

I'm guessing that your post procedure on the web form is sending three copies with different timestamps, but that's total conjecture based solely on there being extra records and on the start and end times being the same. Since THAT dual-timestamp is wrong and I noticed it and wondered about it, I'm connecting those two dots until I know otherwise.

(I believe the form posts a copy with the start and end times set to the start time, then later posts one with the start and end times set to the end time. Who knows where the other copy comes from? The Hungary data should tell you.)

FYI, my total conjecture is about 80% spot on and 15% close enough to spit. But, you always could be somewhere in the other 10-50%, so don't take any bets more than a buck.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm not sure how you figured all that out, but I'm extremely impressed.

I ran this code for Hungary on those days:

index = webex_sentiment

Country=Hungary

| eval surveyDate = strptime(Started,"%m/%d/%Y %H:%M")

| eval YearWeek=strftime(surveyDate,"%Y-%U")

| bin _time span=1d

Results: http://imgur.com/a/IDYFD

I ran this code for Germany on those days:

index = webex_sentiment

Country=Germany

| eval surveyDate = strptime(Started,"%m/%d/%Y %H:%M")

| eval YearWeek=strftime(surveyDate,"%Y-%U")

| bin _time span=1d

Results: http://imgur.com/a/TAdA5

It seems there are duplicated events in a series of three...

What does this mean? How do I go about fixing it?

Is this tied to the strptime extractions that mmodestino pointed out?

Thanks so much for all your help!

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It means your process is screwed up. (As an aside, this is part of the 15% of near miss.)

The good news is, there is a principled way to pull the accurate data here. You shouldn't just dedup by Start Time, because you might lose some non-dup events that accidentally coincided. However, there's a third field with the exact same time value present in one of the three events, but not in the other two. Kill the events without that field, and you have accurately deduped your data.

Assuming that third time field is called MyTime, just insert MyTime=* into the query, and you're done.

Then, we go back to debugging the spike, running the same sort of queries, with numbers at 1/3 the original.

If the dedup kills ONLY your spike, then you've probably detected a software update that screwed up the posting of the results, and has since been fixed.

On the other hand -- and more likely -- if the dedup gets rid of 2/3 of your data ALL THE WAY back, then you have filtered an ongoing software glitch, which is still in place, and you are back where you started, relatively, but with a more accurate count.

Either way, you will need to check the monthly file that is being uploaded and verify what data is there. If the dups are present in the file, then the errant process is before or during the creation of that file. If the dups are NOT in the file -- which I believe unlikely - then the errant process occurs during ingestion.

Technically, any error before ingestion is not your responsibility, and you probably have little control over it other than reporting the issue, so if you can't get anyone to solve the real problem, then you may want to change ingestion to send any records without that third time field to the null queue.

Either way, you'll want to keep that test for non-null third time field in your queries for the foreseeable future.

Up to you. Let us know what you find.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

What/where is this third time field?

I input this query, but received no results:

index = webex_sentiment MyTime=*

| eval surveyDate = strptime(Started,"%m/%d/%Y %H:%M")

| eval YearWeek=strftime(surveyDate,"%Y-%U")

| chart count(Rating) as NumberRatings by YearWeek

Picture for reference: http://imgur.com/a/s8p8k

I began digging into the original CSV files before they are uploaded into Splunk.

Here is the picture again of the duplicated Hungary events in Splunk: http://imgur.com/a/IDYFD

Looking at these events, it seems there are three duplicates at 10:57 on April 28th.

However, the raw CSV for April 28th has three events at 10:57, but only one is from Hungary: http://imgur.com/a/KC63m

Here is the entire raw CSV file for April 28th (the time fields are on the far right):

https://app.box.com/s/tpljxe8500aegkdasgx1ghhcl3rao5sa

I'm not sure what this means - perhaps the countries are being read incorrectly?

For reference, here is the raw CSV file for April 19, the highest outlier in the data spike with 1,500 surveys in a single day: https://app.box.com/s/82uz69pbg8nxn3cpdfae39ytszh9t23o

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Oh, very good poking around. That's a good clue.

In answer to your question about the third time field, for instance, the third transaction in this image - http://imgur.com/a/TAdA5 has a bunch of commas then...

,,,Germany,4/26/2017 11:37,4/26/2017 11:37,,,4/26/2017 11:37

But it doesn't look like your CSV data actually has that, which together with YOUR clue makes it very likely that your ingestion process is buggy.

Please post your conf file settings for this sourcetype.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Aha I've found the files!

Here you can see the index.conf for the webex_sentiment:

http://imgur.com/a/K3Yb4

I believe I need to delete all the data that exists in the index currently, then re-upload with this new sourcetype:

http://imgur.com/a/NbHIU

This new timestamp will correct for the 17 to 2017 problem.

However, with yours and @mmodestino's help I've realized there is a bigger problem with data integrity.

Though the headers appear in the right place in the CSV format, when opened in Notepad I think there are some extra commas or something.

This screenshot shows the problem when Splunk tries to ingest the data: http://imgur.com/a/jFv7Z

The error for event 33 reads:

Could not use strptime to parse timestamp from "Yes." Failed to parse timestamp. Defaulting to timestamp of previous event (Wed Apr 26 03:37:00 2017).

There is text that should not be there appearing in the time fields...

I believe the problem is with the data we are receiving from Cisco.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Time stamp might be workable, if it goes down to the second.... Hmmm. No, from the data, start and stop are the same time, which makes it highly unlikely that the timestamp has to do with the call. It's probably the timestamp for submission of the questionnaire.

So, your data is pretty useless as far as triaging individual calls.

On the plus side, Country is there in the data, capitalized. You can run the query again with the required capitalization and maybe you'll be able to see something. Kill the last two lines of the country query until you've seen if you have an actual spike that's Country related.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@DalJeanis

Thanks for the response.

I input your suggestion like this:

index = webex_sentiment

| eval surveyDate = strptime(Started,"%m/%d/%Y %H:%M")

| eval YearWeek=strftime(surveyDate,"%Y-%U")

| search YearWeek!="2016-00"

| bin _time span=1d

| stats count as daycount by country _time

| eventstats avg(daycount) as avgcount stdev(daycount) as stdevcount by country

| where daycount> avgcount + 3*stdevcount

| chart count(Rating) as NumberRatings by YearWeek

| search YearWeek > 2016-12

I received these events back: http://imgur.com/a/8XYvW

However, there is no subset by country visualization: http://imgur.com/a/pNIuu

I'm unclear what exactly you mean by a field that displays which call it was on. Could you clarify?

Best,

Matthew

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Sure. People have just left a video conference call, and are being asked to fill out a survey about their experience. Isn't there a data field which identifies WHICH conference call they are responding to, so that you can associate the feedback with the actual call?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

eyeballing timeline in the events tab, it indeed appears there is a spike in events...if u click "format timeline" and change it to full, makes it easier to analyze, it does look like there were double the events in the middle of the timeline.

My hunch would be duplicate events or perhaps timestamping challenges or line breaking or something. any reason u didnt use timechart instead? looks like you're extracting time accurately...

Try this to validate the spike in records:

index=webex_sentiment | timechart span=1h count

set your time picker to the week that shows the spike, any hints?

If the data appears clean on those days with spikes, then there simply must have been more responses.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@mmodestino

I followed your instructions and I believe that the issue is duplicate events.

Here is my query with "Format Timeline" : http://imgur.com/a/MEJJv

With this visual, you can see the truly see the data spike: http://imgur.com/a/Q4DxY

April 5 has 1,239 events

April 18 has 1,418 events

April 19 has 1,500 events

April 20 has 1,380 events

April 26 has 1,398 events

April 27 has 1,329 events

April 28 has 1,029 events

Outside of these few weeks in April, there are no days that come anywhere near to 1,000 events.

I drilled down to the individual hours per your suggestion, and found what appears to be a multitude of duplicate events: http://imgur.com/a/laaWD

What should be my next steps from here?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

actually it appears to be timestamp related. In other words we need to clean up your props.conf to ensure we extract the timestamp properly...for example, in your second screenshot, we can see that the timestamp in the event, is not the same as the _time field.

As you can see, the event appears to have a timestamp field of july 10th? it can be tough for splunk to determine the date, Splunk is extracting the date as july 10 (mod time?) rather than july 4 or June 28, cause the auto extract failed.

What we need to do is to help Splunk with the time format, as the auto extraction is letting you down.

Can you paste a few of these raw events from this screenshot here so I can run them through the Add data wizard for you and help you build a better timestamp extraction?

Ideally we will use timestamp_fields and a time_format like %m/%d/%y %k:%M

This demonstrates a best practice that states we should always build our props to define how to extract where to find the timestamp, how to process it's format, ,among other golden rules. The add data wiz is really great for that.