fields.conf TOKENIZER breaks my event completely

I'm trying to get a new sourcetype (NetApp user-level audit logs, exported as XML) to work, and I think my fields.conf tokenizer is breaking things. But I'm not really sure how, or why, or what to do about it.

The raw data is XML, but I'm not using KV_MODE=xml because that doesn't properly handle all the attributes. So, I've got a bunch of custom regular expressions, the true backbone of all enterprise software. 🙂 Here's a single sample event (but you can probably disregard most of it, it's just here for completeness):

<Event><System><Provider Name="NetApp-Security-Auditing" Guid="{guid-edited}"/><EventID>4656</EventID><EventName>Open Object</EventName><Version>101.3</Version><Source>CIFS</Source><Level>0</Level><Opcode>0</Opcode><Keywords>0x8020000000000000</Keywords><Result>Audit Success</Result><TimeCreated SystemTime="2022-01-12T15:42:41.096809000Z"/><Correlation/><Channel>Security</Channel><Computer>server-name-edited</Computer><ComputerUUID>guid-edited</ComputerUUID><Security/></System><EventData><Data Name="SubjectIP" IPVersion="4">1.2.3.4</Data><Data Name="SubjectUnix" Uid="1234" Gid="1234" Local="false"></Data><Data Name="SubjectUserSid">S-1-5-21-3579272529-1234567890-2280984729-123456</Data><Data Name="SubjectUserIsLocal">false</Data><Data Name="SubjectDomainName">ACCOUNTS</Data><Data Name="SubjectUserName">davidsmith</Data><Data Name="ObjectServer">Security</Data><Data Name="ObjectType">Directory</Data><Data Name="HandleID">00000000000444;00;002a62a7;0d3d88a4</Data><Data Name="ObjectName">(Shares);/LogTestActivity/dsmith/wordpress-shared/plugins-shared</Data><Data Name="AccessList">%%4416 %%4423 </Data><Data Name="AccessMask">81</Data><Data Name="DesiredAccess">Read Data; List Directory; Read Attributes; </Data><Data Name="Attributes">Open a directory; </Data></EventData></Event>

My custom app's props.conf has a couple dozen lines like this, for each element I want to be able to search on:

EXTRACT-DesiredAccess = <Data Name="DesiredAccess">(?<DesiredAccess>.*?)<\/Data>

EXTRACT-HandleID = <Data Name="HandleID">(?<HandleID>.*?)<\/Data>

EXTRACT-InformationRequested = <Data Name="InformationRequested">(?<InformationRequested>.*?)<\/Data>

This works as you'd expect, except for a couple of fields whose values are composites. This is most noticeable in the DesiredAccess element, which in our example looks like:

<Data Name="DesiredAccess">Read Data; List Directory; Read Attributes; </Data>

Thus you get a single field with "Read Data; List Directory; Read Attributes; " and if you only need to look for, say, "List Directory," you have to get clever with your searches.

So, I added a fields.conf file with this in it:

[DesiredAccess]

TOKENIZER = \s?(.*?);

When I paste the 'raw' contents of that field, and that regex, into a tool like regex101.com, it works and returns the expected results. Similarly, it also works if I remove it from fields.conf, and put it in as a makemv command:

index=nonprod_pe | makemv tokenizer="\s?(.*?);" DesiredAccess

With the TOKENIZER element in fields.conf, the DesiredAccess attribute just doesn't populate, period. So I assume it's the problem.

(Since this is in an app, the app's metadata does contain explicit "export = system" lines for both [props] and [fields]. And the app is on indexers and search heads. Probably doesn't need to be in both places, but hey I'm still learning...)

So, what am I doing wrong with my fields.conf tokenizer, that's caused it to fail completely to identify any elements?

Have you tried using transforms? You might want to give this a try:

transforms.conf

[extract_xml_data_atribute_as_field]

REGEX=<Data Name="([^"]*)"[^>]*>([^<]*)

FORMAT=$1::$2

[extract_xml_data_values_list_as_mv]

SOURCE_KEY = DesiredAccess

REGEX = (?<DesiredAccessList>[^;]*);

MV_ADD=true

props.conf

[<your_sourcetype>]

REPORT-xml_data_to_field = extract_xml_data_atribute_as_field, extract_xml_data_values_list_as_mv
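To see roughly what those two stanzas would extract, here is a minimal Python sketch. It is only an approximation: Splunk uses PCRE and this uses Python's `re`, the named group is spelled `(?P<...>)` instead of `(?<...>)`, and `SAMPLE`, `PAIR_RE` and `LIST_RE` are names made up for the illustration. `FORMAT=$1::$2` is modeled as a dict and `MV_ADD=true` as a list of every match.

```python
import re

# Shortened copy of the <EventData> section of the sample event in the question.
SAMPLE = (
    '<EventData>'
    '<Data Name="SubjectUnix" Uid="1234" Gid="1234" Local="false"></Data>'
    '<Data Name="SubjectUserName">davidsmith</Data>'
    '<Data Name="DesiredAccess">Read Data; List Directory; Read Attributes; </Data>'
    '</EventData>'
)

# Same pattern as REGEX in the first stanza.
# FORMAT=$1::$2 means: group 1 is the field name, group 2 is its value.
PAIR_RE = re.compile(r'<Data Name="([^"]*)"[^>]*>([^<]*)')
fields = {name: value for name, value in PAIR_RE.findall(SAMPLE)}

# Same pattern as the second stanza, run against the DesiredAccess value
# (SOURCE_KEY = DesiredAccess). Collecting every match stands in for MV_ADD=true.
LIST_RE = re.compile(r'(?P<DesiredAccessList>[^;]*);')
desired_access_list = LIST_RE.findall(fields["DesiredAccess"])

print(fields["SubjectUserName"])   # davidsmith
print(desired_access_list)         # ['Read Data', ' List Directory', ' Read Attributes']
```

Note that in this sketch the second and third values keep their leading space, because `[^;]*` also matches the space after each semicolon.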

Hope I was able to help you. If so, some karma would be appreciated.

What benefits would there be to a transforms.conf approach over fields.conf? I'm still fairly new to Splunk, and definitely new to this sort of data massaging, so I don't deeply understand the pros and cons of each.

In your last reply you said that the other solution you ended up with was close enough. 🙂 This solution is better than close enough. It also saves you the extra makemv command in your search, because with these transforms the field is already extracted as a multivalue field.

Hope I was able to help you. If so, some karma would be appreciated.

It is inadvisable to handle structured data with custom regexes, because that approach is fraught with pitfalls. It is better to focus on why KV_MODE=xml "doesn't properly handle all the attributes." Generally speaking, there is no reason why a vendor's tested built-in function cannot handle conformant data.

Can you illustrate, with cleansed data, where the indexer/spath isn't handling it correctly?

KV_MODE=xml doesn't handle most of the <Data Name="fieldname">value</Data> elements in the way that I would hope/expect. You'll get an attribute named literally "Name" but not something named "fieldname" with a value of "value". The most egregious example in terms of practicality is probably:

<Data Name="SubjectUserName">davidsmith</Data>

In the above, I would like an event attribute named "SubjectUserName" with a value of "davidsmith". (Yes, I want user names in my audit logs...) But neither KV_MODE=xml, nor |xmlkv in a search, handle this case properly. (Or at least "the way I want them to," which may or may not be "properly.")

NetApp's particular flavor of XML has been an issue for years:

Not that this is relevant, because the specific elements I'm asking about in this topic, such as DesiredAccess, aren't parsed properly either. 🙂

I'm primarily interested in understanding why my fields.conf tokenizers aren't working, not so much in debugging Splunk's internal XML parser.

KV_MODE=xml is perhaps the wrong option for this problem. On the other hand, the spath command can put attributes into field names with the {@attrib} notation, so you don't get a field name like "Name"; instead, you get a scalar facsimile of the vectorial attribute space, like Event.EventData.Data{@Name}, Event.System.Provider{@Name}, and so on. Like any reduction of dimensions, spath ends up losing some information. (Another problem, which I consider a bug, is that spath does not handle empty values correctly.) But because XML follows an application-specific DTD, you can usually compensate with application-specific handling, like the following:

| rex mode=sed "s/><\/Data/>()<\//g" ``` compensate for spath's inability to handle empty values ```

| spath

| rename Event.EventData.Data{@*} as EventData*, Event.EventData.Data as EventDataData ``` most eval functions cannot handle {} notation ```

| eval EventDataName=mvmap(EventDataName, case(EventDataName == "SubjectUnix", "SubjectUnix <Uid:" . EventDataUid . ", Gid:" . EventDataGid . ", Local:" . EventDataLocal . ">", EventDataName == "SubjectIP", "SubjectIP<" . EventDataIPVersion . ">", true(), EventDataName)) ``` application-specific mapping ```

| eval Combo = mvzip(EventDataName, EventDataData, "=")

(See inline comments) Output from your sample data is

| Field | Value |
| Combo | SubjectIP<4>=1.2.3.4 SubjectUnix <Uid:1234, Gid:1234, Local:false>=() SubjectUserSid=S-1-5-21-3579272529-1234567890-2280984729-123456 SubjectUserIsLocal=false SubjectDomainName=ACCOUNTS SubjectUserName=davidsmith ObjectServer=Security ObjectType=Directory HandleID=00000000000444;00;002a62a7;0d3d88a4 ObjectName=(Shares);/LogTestActivity/dsmith/wordpress-shared/plugins-shared AccessList=%%4416 %%4423 AccessMask=81 DesiredAccess=Read Data; List Directory; Read Attributes; Attributes=Open a directory; |
| Event.System.Channel | Security |
| Event.System.Computer | server-name-edited |
| Event.System.ComputerUUID | guid-edited |
| Event.System.EventID | 4656 |
| Event.System.EventName | Open Object |
| Event.System.Keywords | 0x8020000000000000 |
| Event.System.Level | 0 |
| Event.System.Opcode | 0 |
| Event.System.Provider{@Guid} | {guid-edited} |
| Event.System.Provider{@Name} | NetApp-Security-Auditing |
| Event.System.Result | Audit Success |
| Event.System.Source | CIFS |
| Event.System.TimeCreated{@SystemTime} | 2022-01-12T15:42:41.096809000Z |
| Event.System.Version | 101.3 |
| EventDataData | 1.2.3.4 () S-1-5-21-3579272529-1234567890-2280984729-123456 false ACCOUNTS davidsmith Security Directory 00000000000444;00;002a62a7;0d3d88a4 (Shares);/LogTestActivity/dsmith/wordpress-shared/plugins-shared %%4416 %%4423 81 Read Data; List Directory; Read Attributes; Open a directory; |
| EventDataGid | 1234 |
| EventDataIPVersion | 4 |
| EventDataLocal | false |
| EventDataName | SubjectIP<4> SubjectUnix <Uid:1234, Gid:1234, Local:false> SubjectUserSid SubjectUserIsLocal SubjectDomainName SubjectUserName ObjectServer ObjectType HandleID ObjectName AccessList AccessMask DesiredAccess Attributes |
| EventDataUid | 1234 |
| _raw | <Event><System><Provider Name="NetApp-Security-Auditing" Guid="{guid-edited}"/><EventID>4656</EventID><EventName>Open Object</EventName><Version>101.3</Version><Source>CIFS</Source><Level>0</Level><Opcode>0</Opcode><Keywords>0x8020000000000000</Keywords><Result>Audit Success</Result><TimeCreated SystemTime="2022-01-12T15:42:41.096809000Z"/><Correlation/><Channel>Security</Channel><Computer>server-name-edited</Computer><ComputerUUID>guid-edited</ComputerUUID><Security/></System><EventData><Data Name="SubjectIP" IPVersion="4">1.2.3.4</Data><Data Name="SubjectUnix" Uid="1234" Gid="1234" Local="false">()</><Data Name="SubjectUserSid">S-1-5-21-3579272529-1234567890-2280984729-123456</Data><Data Name="SubjectUserIsLocal">false</Data><Data Name="SubjectDomainName">ACCOUNTS</Data><Data Name="SubjectUserName">davidsmith</Data><Data Name="ObjectServer">Security</Data><Data Name="ObjectType">Directory</Data><Data Name="HandleID">00000000000444;00;002a62a7;0d3d88a4</Data><Data Name="ObjectName">(Shares);/LogTestActivity/dsmith/wordpress-shared/plugins-shared</Data><Data Name="AccessList">%%4416 %%4423 </Data><Data Name="AccessMask">81</Data><Data Name="DesiredAccess">Read Data; List Directory; Read Attributes; </Data><Data Name="Attributes">Open a directory; </Data></EventData></Event> |
| _time | 2022-01-12T15:42:41 |

@yuanliu wrote: KV_MODE=xml is perhaps the wrong option for this problem. On the other hand, spath command

I didn't look deeply enough. In fact, KV_MODE=XML performs spath just like in explicit SPL. It could have worked if not for the want of a placeholder value when Event.EventData.Data contains null values. In explicit spath, I try to fix this bug with "s/><\/Data/>()<\//g" before running spath. But there is no way to fix implicit output.

Which is great but doesn't address the question I'm asking. Note that the DesiredAccess attribute still is shown as a single text item, and isn't being tokenized into its individual components.

Assume for the sake of this discussion that I'm going to stick with regexes for now. I have working regular expressions for the fields I care about, and as long as I don't also have a tokenizer for those fields, the field extraction works. But when I add fields.conf the fields named therein aren't extracted, period. Any suggestions on what I'm doing wrong there?

The spath code is just to illustrate how to clean up. Key-value pairs in Combo can be extracted using extract command (aka kv).

| spath

| rename Event.EventData.Data{@*} as EventData*, Event.EventData.Data as EventDataData ``` most eval functions cannot handle {} notation ```

| eval EventDataName=mvmap(EventDataName, case(EventDataName == "SubjectUnix", "SubjectUnix <Uid:" . EventDataUid . ", Gid:" . EventDataGid . ", Local:" . EventDataLocal . ">", EventDataName == "SubjectIP", "SubjectIP<" . EventDataIPVersion . ">", true(), EventDataName)) ``` application-specific mapping ```

| eval Combo = mvzip(EventDataName, EventDataData, "=\"")

| rename Combo as _raw

| rex mode=sed "s/$/\"/"

| kv kvdelim="=" ``` extract key-value pairs from Combo ```

| fields - Event*, _raw

| makemv delim=";" DesiredAccess

| makemv delim=";" Attributes

| makemv delim=";" HandleID

| makemv delim=";" ObjectName

Sample output is like

| AccessList | AccessMask | Attributes | DesiredAccess | HandleID | ObjectName | ObjectServer | ObjectType | SubjectDomainName | SubjectUserIsLocal | SubjectUserName | SubjectUserSid | _time |

| %%4416 %%4423 | 81 | Open a directory | Read Data List Directory Read Attributes | 00000000000444 00 002a62a7 0d3d88a4 00;002a62a7;0d3d88a4 | (Shares) /LogTestActivity/dsmith/wordpress-shared/plugins-shared | Security | Directory | ACCOUNTS | false | davidsmith | S-1-5-21-3579272529-1234567890-2280984729-123456 | 2022-01-12T15:42:41 |

The main point is that structured data are best handled with conformant tested code. In addition, complex, custom index-time extraction makes maintenance difficult. Search-time prowess is Splunk's very strength. Why not use it?

Meantime, the error in fields.conf is that TOKENIZER does not accept extra characters outside the token itself. This should work:

[DesiredAccess]

TOKENIZER = (\b[^;]+)
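As a quick sanity check of that pattern outside Splunk, here is a small Python sketch. It assumes TOKENIZER keeps the first capture group of every match, and it uses Python's `re` rather than Splunk's PCRE, so treat it as an approximation. The helper `tokenize` is a made-up name for this illustration, not a Splunk function.

```python
import re
from typing import List


def tokenize(pattern: str, value: str) -> List[str]:
    """Return group 1 of every match (assumed model of TOKENIZER)."""
    return [m.group(1) for m in re.finditer(pattern, value)]


# Field values copied from the sample event.
desired_access = "Read Data; List Directory; Read Attributes; "
handle_id = "00000000000444;00;002a62a7;0d3d88a4"

# \b forces each token to start at a word boundary, which skips the space
# after each semicolon; [^;]+ then runs up to the next semicolon.
print(tokenize(r"(\b[^;]+)", desired_access))
# ['Read Data', 'List Directory', 'Read Attributes']
print(tokenize(r"(\b[^;]+)", handle_id))
# ['00000000000444', '00', '002a62a7', '0d3d88a4']
```

The whole match sits inside the capture group here, so nothing is consumed outside the token.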

Well, at least that updated tokenizer breaks things in a different way...

I edited the fields.conf I'm pushing out to my search heads thusly:

[DesiredAccess]

# TOKENIZER = \s?(.*?);

TOKENIZER = (\b[^;]+)

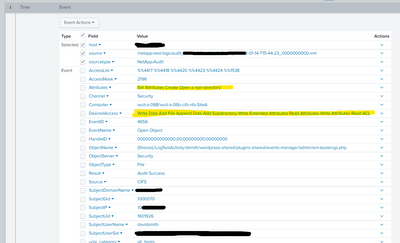

The contents of the fields so tokenized (is that a word?) at least show up when I expand a given search result now. They're a single line, with the semicolons removed. (I highlighted multiple lines because there are actually about a half-dozen such fields that I'm extracting, I limited it to a single instance for this thread because the solution for one should be identical to all the others.)

Your regex works correctly in online tools like regex101.com, but then again so did mine. (Yours is cleaner and faster, though, so thank you for that.) I wish Splunk had more and better examples of how to use TOKENIZER in the docs.

Dumb Newbie Question of the day: The fields are split correctly if I remove the tokenizer, and add | makemv delim=";" FieldNameHere to a search. Is there a way to add that to a config file? (i.e. "every time you search this sourcetype, do this" or similar) Part of my goal here is to make life easier for users that aren't deeply familiar with Splunk field commands, and asking these users to add a half-dozen makemv commands to every search isn't exactly convenient for anyone involved.

The contents of the fields so tokenized (is that a word?) at least show up when I expand a given search result now. They're a single line, with the semicolons removed. (I highlighted multiple lines because there are actually about a half-dozen such fields that I'm extracting, I limited it to a single instance for this thread because the solution for one should be identical to all the others.)

Screenshot of a single event, with the improperly-extracted fields highlighted.

Maybe you can elaborate on "breaks things in a different way..." You are correct that the values look like they are on a single line if you just expand the event view. But that appearance by itself doesn't mean much. Based on your original question, your intention is to break DesiredAccess, etc., into a multivalue field instead of a semicolon-separated single string. The proposed TOKENIZER does exactly that. How is this broken? You can count the number of values in DesiredAccess like this:

| eval AccessCount=mvcount(DesiredAccess)

You'll see that the count is > 1. I ingested your sample data, then used the following props.conf

[xml-too_small]

EXTRACT-DesiredAccess = <Data Name="DesiredAccess">(?<DesiredAccess>.*?)<\/Data>

EXTRACT-HandleID = <Data Name="HandleID">(?<HandleID>.*?)<\/Data>

EXTRACT-InformationRequested = <Data Name="InformationRequested">(?<InformationRequested>.*?)<\/Data>

EXTRACT-Attributes = <Data Name="Attributes">(?<Attributes>[^<]*)<\/Data>

and fields.conf

[DesiredAccess]

TOKENIZER = (\b[^;]+)

[ObjectName]

TOKENIZER = (\b[^;]+)

[InformationRequested]

TOKENIZER = (\b[^;]+)

[Attributes]

TOKENIZER = (\b[^;]+)

[HandleID]

TOKENIZER = (\b[^;]+)

When I perform this search

index="tests" source="netapptest.xml"

| table DesiredAccess Attributes HandleID ObjectName

| eval AccessCount=mvcount(DesiredAccess)

| eval ObjectCount=mvcount(ObjectName)

| eval HandleCount=mvcount(HandleID)

it gives

DesiredAccess | Attributes | HandleID | ObjectName | AccessCount | HandleCount | ObjectCount |

Read Data List Directory Read Attributes | Open a directory | 00000000000444 00 002a62a7 0d3d88a4 | Shares) LogTestActivity/dsmith/wordpress-shared/plugins-shared | 3 | 4 | 2 |

So, even though the expanded event view displays these fields in a single line, they are really multivalue fields now; DesiredAccess, for example, is made of 3 distinct values. (Do not test this in verbose mode. That mode can interact strangely.) This is exactly what TOKENIZER does, and I believe that this is what you originally wanted.

The "clipping" of the opening parenthesis in ObjectName highlights the reason why I strongly recommend using vendor-provided commands like spath. You can fine-tune that TOKENIZER to get around this one problem, but there may be other data values that break it.
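The clipping can be reproduced outside Splunk with a short Python sketch (Python's `re` standing in for PCRE, and assuming TOKENIZER keeps group 1 of each match). The `ALTERNATIVE` pattern is only a hypothetical fine-tuning for illustration; I have not tested it in fields.conf.

```python
import re

# Values copied from the sample event.
object_name = "(Shares);/LogTestActivity/dsmith/wordpress-shared/plugins-shared"
desired_access = "Read Data; List Directory; Read Attributes; "

# The tokenizer used in this thread: \b cannot match before "(" or "/",
# so each token starts at the first word character instead.
CURRENT = r"(\b[^;]+)"
clipped = re.findall(CURRENT, object_name)
print(clipped)
# ['Shares)', 'LogTestActivity/dsmith/wordpress-shared/plugins-shared']

# Hypothetical alternative: start a token at any character that is neither
# a semicolon nor whitespace, then run up to the next semicolon.
ALTERNATIVE = r"([^;\s][^;]*)"
kept = re.findall(ALTERNATIVE, object_name)
print(kept)
# ['(Shares)', '/LogTestActivity/dsmith/wordpress-shared/plugins-shared']
print(re.findall(ALTERNATIVE, desired_access))
# ['Read Data', 'List Directory', 'Read Attributes']
```

Even this variant would leave trailing whitespace inside a value untouched, which is the kind of data-dependent surprise I mean.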

So, I refined the spath method to eliminate glitches when there are multiple attributes in one property:

| rex mode=sed "s/><\/Data/>()<\//g" ``` compensate for spath's inability to handle empty values ```

| spath

| rename Event.EventData.Data{@*} as EventData*, Event.EventData.Data as EventDataData ``` most eval functions cannot handle {} notation ```

| eval EventDataName=mvmap(EventDataName, case(EventDataName == "SubjectUnix", "SubjectUnix <Uid:" . EventDataUid . ", Gid:" . EventDataGid . ", Local:" . EventDataLocal . ">", EventDataName == "SubjectIP", "SubjectIP<" . EventDataIPVersion . ">", true(), EventDataName)) ``` application-specific mapping ```

| eval Combo = mvzip(EventDataName, EventDataData, "=\"")

| eval Combo = mvmap(Combo, replace(Combo, "<(.+)>=\"", "=\"<\1>")) ``` handle multi-attribute properties ```

| rename Combo as _raw

| rex mode=sed "s/$/\"/"

| kv kvdelim="=" ``` extract key-value pairs from Combo ```

| fields - Event*, _raw

| makemv delim=";" DesiredAccess

| makemv delim=";" Attributes

| makemv delim=";" HandleID

| makemv delim=";" ObjectName

(Note: The above will not work correctly when that custom TOKENIZER exists.) This is a lot more generic in terms of which parts of XML turn into fields. The output of the above for your sample data is

| _time | AccessList | AccessMask | Attributes | DesiredAccess | HandleID | ObjectName | ObjectServer | ObjectType | SubjectDomainName | SubjectIP | SubjectUnix | SubjectUserIsLocal | SubjectUserName | SubjectUserSid |

| 2022-01-21 19:38:25 | %%4416 %%4423 | 81 | Open a directory | Read Data List Directory Read Attributes | 00000000000444 00 002a62a7 0d3d88a4 | (Shares) /LogTestActivity/dsmith/wordpress-shared/plugins-shared | Security | Directory | ACCOUNTS | <4>1.2.3.4 | <Uid:1234, Gid:1234, Local:false>() | false | davidsmith | S-1-5-21-3579272529-1234567890-2280984729-123456 |

I replaced the tokenizer for my desired fields with

TOKENIZER = (\s?(.*?);)

It's close enough for my case. The tokenized values still include the semicolon, but I can live with that for now. (I tried (\s?(.*?)); but then all the values were empty strings.)
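For anyone wondering where the semicolons come from, a rough Python sketch of the capture groups shows it, assuming Splunk keeps group 1 of each match (Python's `re` is used here, not Splunk's PCRE, and `groups` is just an illustrative name).

```python
import re

value = "Read Data; List Directory; Read Attributes; "

# In (\s?(.*?);) the outer parenthesis opens first, so it is group 1 and
# covers the optional leading space and the semicolon. The bare text is group 2.
groups = [(m.group(1), m.group(2))
          for m in re.finditer(r"(\s?(.*?);)", value)]

for outer, inner in groups:
    print(repr(outer), repr(inner))
# 'Read Data;' 'Read Data'
# ' List Directory;' 'List Directory'
# ' Read Attributes;' 'Read Attributes'
```

This sketch does not reproduce the empty strings I saw with (\s?(.*?)); since Python's `re` returns non-empty values for group 1 of that pattern, so that part looks specific to how Splunk applies the tokenizer.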