
can we use transaction command in summary indexing

I have a task to calculate detailed information from logs, so I decided to build one table with all the information and later build a summary index from that report. The problem is that I used the transaction command in the report.

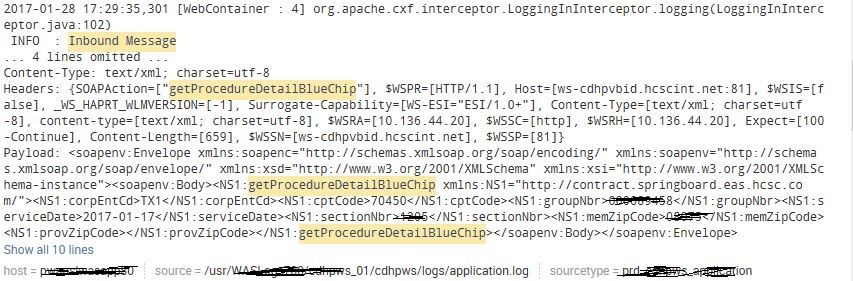

For every request I have 4 events (in, account number, assignzip, out).

Multiple requests are processed at the same time.

I don't have a unique ID for each request.

When I group by source as well, I still get some duplicate ID numbers, so I also separated the transactions by the number of the web container in which they executed.

That is why I used transaction id,source,no.

Could you please tell me whether there is any other way to calculate this without the transaction command?

I have attached 2 screenshots showing the 4 events that every request has.

Could you also tell me whether we can use the transaction command in summary indexing, and if so, how?

I ask because I used the same query for the summary index, but some events were missing.

Thanks in advance.


Your search got munged so I cannot give you the complete answer but replace your transaction id,source,no with either this:

... | stats min(_time) AS _time values(*) AS * BY id source no

Or this

... | stats min(_time) AS _time list(_raw) AS raw BY id source no

Switch min for max if you want _time to mark the end of each request instead of the start.
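As a rough sketch of how the first suggestion could feed a summary index: the search below groups by the same three fields, keeps a count of events per group, and writes the result with collect. The field names end_time, elapsed and event_count and the index name hypothetical_summary are assumptions for illustration, not something from the original search, and the extractions that produce id, source and no are assumed to run earlier in the pipeline.

```spl
... | stats min(_time) AS _time max(_time) AS end_time count AS event_count values(*) AS * BY id source no
| eval elapsed = end_time - _time
| collect index=hypothetical_summary
```

Since every request is said to produce 4 events, a row with event_count below 4 would point to a request whose events were split across two runs of the scheduled search, which is one possible reason for events going missing in the summary.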


For us to help, we need detailed information about how the data is structured. For instance, if you posted a set of dummy data for one transaction, then we could help you work out how to extract your information in the most useful way.

Also, post the code that you used to extract the information.

The transaction command is useful, in certain circumstances, for clearing up your data. However, it is not the only way to handle information that is spread across multiple events which are bound by a transaction code... even if that transaction code might be reused over time.
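One possible way to handle a reused code without transaction is to number each reuse and group on that number. The sketch below assumes that every request starts with an event containing "Inbound Message" and that id, source and no are already extracted; is_start, request_seq and end_time are hypothetical field names introduced only for this example.

```spl
... | sort 0 _time
| eval is_start = if(like(_raw, "%Inbound Message%"), 1, 0)
| streamstats sum(is_start) AS request_seq BY id source no
| stats min(_time) AS _time max(_time) AS end_time values(*) AS * BY id source no request_seq
```

The running sum goes up by one at each start event, so events that share an id but belong to a later request land in a separate group.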


This is my query:

index=ccsp_prd_was source="/usr/WASLogs700/cdhpws_*/cdhpws/logs/application.log" "getProcedureDetailBlueChip" OR "getProcedureDetailBlueChipResponse" AND "Inbound Message" OR "Outbound Message" OR "getProcedureDetailBlueChip response time returning procedure details" OR "memZipCode assigned to zipCode" OR "provZipCode assigned to zipCode" OR "bnftAgrmtNbr" | rex "WebContainer :(?..)" | rex "ID:(?.*)" | rex "(?Inbound|Outbound)" **| transaction id,source,no** | rex "(?m)\(?.*)" | rex "(?m)\(?.*)" | rex "(?m)\(?.*)" | rex "(?.*)" | rex "(?.*)" | rex "(?.*)" | rex "(?.*)" | rex "(?.*)" | rex "(?.*)" | rex "(?.*)" | rex "(?.*)" | rex "(?.*)" | rex "(?.*)" | rex "provZipCode assigned to zipCode:(?.*)" | rex "memZipCode assigned to zipCode:(?.*)" | stats min(_time) as startTime,max(_time) as endTime,values(info) as Info,values(duration) as duration,values(StatusCode) as StatusCode,values(message) as StatusMessage,values(CorpEntCd) as corpEntCd,values(costlvlpctl) as Costlvlpctl,values(CptCode) as cptCode,values(GroupNbr) as GroupNbr,values(MemZipCode) as memZipCode,values(procdchrgamt) as ProcChrgamt,values(ProvZipCode) as ProvZipCode,values(SectionNbr) as SectionNbr,values(ServiceDate) as ServiceDate,values(tretcatcd) as TretCatCd,values(tretcatname) as TretCatName,values(bnftAgrmtNbr) as bnftAgrmtNbr,values(acctNbr) as acctNbr,values(provassignZip) as provassignZip,values(memzipassignzip) as memzipassignzip by id,source | eval endTime=startTime+duration | eval _time=startTime | eval StartTime=strftime(startTime,"%Y-%m-%d %H:%M:%S,%3N") | eval EndTime=strftime(endTime,"%Y-%m-%d %H:%M:%S,%3N") | sort -_time | table _time,id,Info,StartTime,EndTime,duration,StatusCode,StatusMessage,source,corpEntCd,Costlvlpctl,cptCode,GroupNbr,memZipCode,ProcChrgamt,ProvZipCode,SectionNbr,ServiceDate,TretCatCd,TretCatName,bnftAgrmtNbr,acctNbr,provassignZip,memzipassignzip