- Re: Splunk Extraction

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I have Splunk events like the ones below and would like to extract the reason for failure from each.

Event 1 :

FILE_READER[1]: TT19472 Fatal data error processing file '/default/folder/ingest/amr_ca_sf_items_658721_US.out'.

Field length overflow(s) in record 2355, field 17, 'COUNT_DESC'. Expected 300 bytes, field contained 307 bytes.

FILE_READER[1]: TT19015 TPT Exit code set to 12.

Event 2 :

$FILE_READER<1>: DataConnector Producer operator Instances: 1

$FILE_READER<1>: ECI operator ID: '$FILE_READER-18808'

$FILE_READER<1>: Operator instance 1 processing file '/default/folder/ingest/amr_ca_sf_items_658721_US.out'.

$FILE_READER<1>: TT19472 Fatal data error processing file '/default/folder/ingest/amr_ca_sf_items_658721_US.out'.

Field length overflow(s) in record 1, field 1, '"ORDER"'. Expected 20 bytes, field contained 841 bytes.

$FILE_READER<1>: TT19015 TPT Exit code set to 12.

Event 3 :

FILE_READER<1>: TT19434 pmAttach failed. General failure (34): '!ERROR! dlopen failed: /default/folder/installations/lib/axm.so: cannot open shared object file: No such file or directory'

FILE_READER<1>: TT19302 Fatal error loading access module.

FILE_READER<1>: TT19015 TPT Exit code set to 12.

Event 4 :

FILE_READER<1>: TT19134 !ERROR! Fatal data error processing file '/default/folder/ingest/rpv0410_12123_1.out.gz'. Delimited Data Parsing error: Too many columns in row 246.

FILE_READER<1>: TT19015 TPT Exit code set to 12.

Event 5 :

FILE_WRITER<1>: TT19434 pmWrite failed. General failure (34): 'pmunxWBuf: fwrite byte count error (No space left on device)'

FILE_WRITER<1>: TT19306 Fatal error writing data.

FILE_WRITER<1>: TT19015 TPT Exit code set to 12.

The extracted reason for failure should look like this:

1: Field length overflow(s) in record 2355, field 17, 'COUNT_DESC'. Expected 300 bytes, field contained 307 bytes.

2: Field length overflow(s) in record 1, field 1, '"ORDER"'. Expected 20 bytes, field contained 841 bytes.

3: Fatal error loading access module or '!ERROR! dlopen failed: /default/folder/installations/lib/axm.so: cannot open shared object file: No such file or directory'

4: Parsing error: Too many columns in row 246.

5: Fatal error writing data or General failure (34): 'WBuf: fwrite byte count error (No space left on device)'

If someone can point me to a way to extract this, it would be very helpful.

Thanks.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

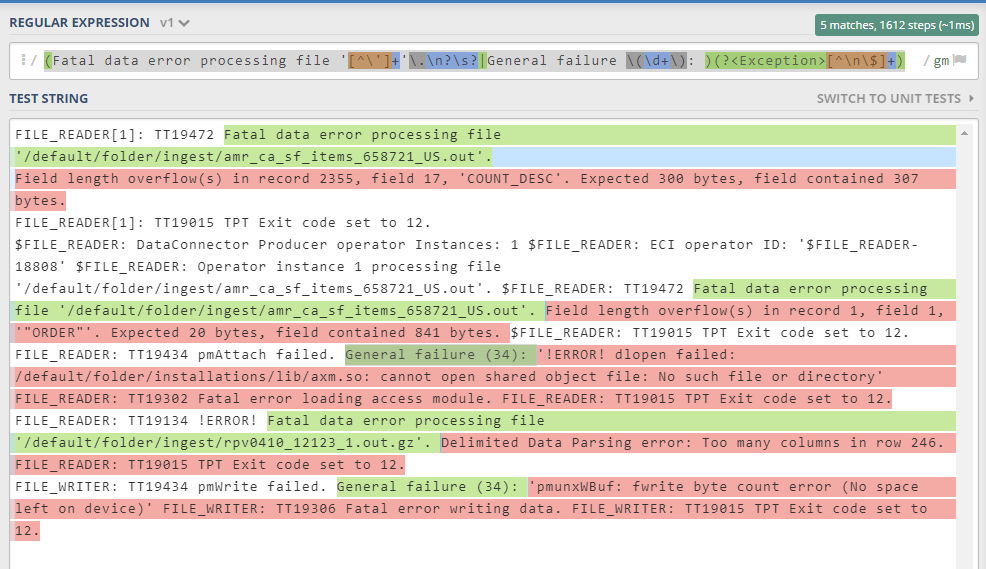

This regex captured all of your use cases when I tested it on regex101:

| rex "(Fatal data error processing file '[^\']+'\.\n?\s?|General failure \(\d+\): )(?<Exception>[^\n\$]+)"

Here's the link to the regex101 test example: https://regex101.com/r/0V46z8/1

On your last three examples it captures a little more than you want. You can trim the excess by adding this after the extraction:

| rex mode=sed field=Exception "s/FILE_(READER|WRITER)[^\e]+//g"

There's probably a cleaner way to avoid capturing that trailing FILE_READER/WRITER text. Let me know if this works for you.
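If you want to sanity-check the two steps outside Splunk, here is a rough Python sketch of the same idea. It is not SPL and only approximates what rex does: Python needs `(?P<Exception>...)` instead of `(?<Exception>...)`, it has no `\e` escape so `\x1b` stands in for it, and the final `rstrip()` is my own addition to drop trailing spaces. The helper name `extract_reason` and the way the sample events are joined (newlines versus spaces) are assumptions for illustration.

```python
import re
from typing import Optional

# Step 1: same pattern as the rex above, with Python's named-group syntax.
EXTRACT = re.compile(
    r"(Fatal data error processing file '[^']+'\.\n?\s?|General failure \(\d+\): )"
    r"(?P<Exception>[^\n$]+)"
)

# Step 2: same idea as the sed expression; \x1b replaces \e, which Python's re lacks.
TRIM = re.compile(r"FILE_(READER|WRITER)[^\x1b]+")


def extract_reason(event: str) -> Optional[str]:
    """Return the failure reason from one event, or None if nothing matches."""
    match = EXTRACT.search(event)
    if match is None:
        return None
    trimmed = TRIM.sub("", match.group("Exception"))
    # rstrip() is an extra step that the SPL version does not perform.
    return trimmed.rstrip()


# Event 1, assuming the lines are separated by newlines.
event_1 = (
    "FILE_READER[1]: TT19472 Fatal data error processing file "
    "'/default/folder/ingest/amr_ca_sf_items_658721_US.out'.\n"
    "Field length overflow(s) in record 2355, field 17, 'COUNT_DESC'. "
    "Expected 300 bytes, field contained 307 bytes.\n"
    "FILE_READER[1]: TT19015 TPT Exit code set to 12."
)

# Event 2 (last two lines), assuming the lines are joined by spaces.
event_2 = (
    "$FILE_READER<1>: TT19472 Fatal data error processing file "
    "'/default/folder/ingest/amr_ca_sf_items_658721_US.out'. "
    "Field length overflow(s) in record 1, field 1, '\"ORDER\"'. "
    "Expected 20 bytes, field contained 841 bytes. "
    "$FILE_READER<1>: TT19015 TPT Exit code set to 12."
)

# Event 5, assuming the lines are joined by spaces, so the trim step matters.
event_5 = (
    "FILE_WRITER<1>: TT19434 pmWrite failed. General failure (34): "
    "'pmunxWBuf: fwrite byte count error (No space left on device)' "
    "FILE_WRITER<1>: TT19306 Fatal error writing data. "
    "FILE_WRITER<1>: TT19015 TPT Exit code set to 12."
)

if __name__ == "__main__":
    for event in (event_1, event_2, event_5):
        print(extract_reason(event))
```

When the event lines are separated by newlines, the capture already stops at the end of the line and the trim step has nothing to remove. It only matters when the lines run together, as in the space-joined event 5 here.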


Thank you @dmarling