
Re: Individual Records are merging in Single event...


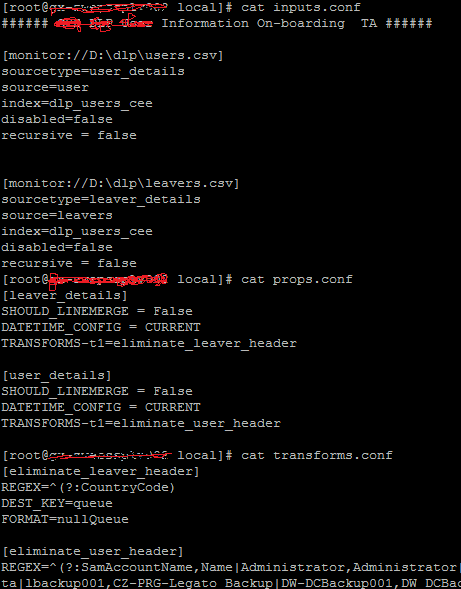

I am facing a bizarre problem with CSV file monitoring. I am monitoring a CSV file on a server path, and the records are being indexed. But sometimes, some records of the CSV file are merged into a single event. Attached is a screenshot of the config files that I used to onboard this data.

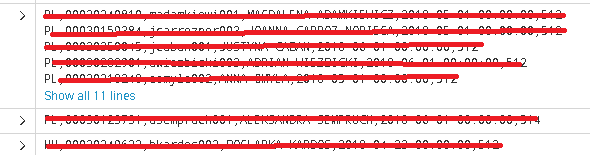

Below is a screenshot of the output I'm getting. The first event is a combination of multiple records, which is incorrect. The next two are fine.

Could anyone please help me resolve this so that I get a single event for each record?

Thanks in advance.


Thank you, everybody, for your comments. I found the answer, and it's working.

In my case, the monitored file is on a server where the Universal Forwarder (UF) is installed, and I am onboarding the data through that UF. So I had deployed all the configuration files to that server, i.e. to the UF. But parsing doesn't happen on the UF; it happens only on the Heavy Forwarder (HF) or the indexer. That's why, in my case, props.conf and transforms.conf were not being used at all.

What I needed to do was create an app for the HF containing props.conf and transforms.conf, and deploy that app to the HF, through which the data flows from the UF server to the indexer.
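The OP's actual props.conf and transforms.conf are only shown in a screenshot, so the following is a minimal sketch rather than their configuration: a props.conf for the HF app, using the line-breaking settings mentioned elsewhere in this thread. The app name `my_csv_hf_ta` and the sourcetype `my_csv` are hypothetical; the stanza name must match the sourcetype that the UF assigns in inputs.conf. transforms.conf is omitted because its contents are not shown.

```ini
# $SPLUNK_HOME/etc/apps/my_csv_hf_ta/local/props.conf
# (hypothetical app name; deployed to the heavy forwarder, where UF data is parsed)
[my_csv]
# Do not merge consecutive lines into multi-line events.
SHOULD_LINEMERGE = false
# Break the stream at one or more CR/LF characters; the captured newlines are discarded.
LINE_BREAKER = ([\r\n]+)
```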

So I changed my original TA to keep only the inputs.conf file and deployed it to the UF server. Then I created another TA with the props.conf and transforms.conf files and deployed this TA to the HF.
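A minimal sketch of the UF-side TA, which now carries only inputs.conf. The monitored path, index, app name, and sourcetype are hypothetical placeholders; the sourcetype has to match the props.conf stanza on the HF for the HF to apply its parsing settings.

```ini
# $SPLUNK_HOME/etc/apps/my_csv_uf_ta/local/inputs.conf
# (hypothetical app name; deployed to the universal forwarder that can read the file)
# Path, index, and sourcetype below are placeholders.
[monitor:///opt/data/records.csv]
index = main
sourcetype = my_csv
disabled = false
```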

Now indexing happens properly.

In short:

- UF TA: inputs.conf only
- HF TA: props.conf and transforms.conf


In props.conf, set

SHOULD_LINEMERGE = false

and also specify LINE_BREAKER so that the events are broken properly.

Alternatively, specify

INDEXED_EXTRACTIONS = CSV

The props.conf specification describes this setting as follows:

INDEXED_EXTRACTIONS = < CSV|W3C|TSV|PSV|JSON >

* Tells Splunk the type of file and the extraction and/or parsing method Splunk should use on the file.
  - CSV - Comma separated value format
  - TSV - Tab-separated value format
  - PSV - pipe "|" separated value format
  - W3C - W3C Extended Log File Format
  - JSON - JavaScript Object Notation format
* These settings default the values of the remaining settings to the appropriate values for these known formats.
* Defaults to unset.
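A minimal sketch of the INDEXED_EXTRACTIONS alternative, with a hypothetical sourcetype name. One difference from the SHOULD_LINEMERGE/LINE_BREAKER approach: structured-data parsing with INDEXED_EXTRACTIONS is performed by the forwarder that reads the file, so this stanza belongs in props.conf on the UF that monitors the CSV, not on the HF or indexer.

```ini
# props.conf on the universal forwarder that monitors the file
# (INDEXED_EXTRACTIONS is applied where the file is read; sourcetype name is hypothetical)
[my_csv]
INDEXED_EXTRACTIONS = CSV
```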


I have used the SHOULD_LINEMERGE=False and LINE_BREAKER=([\r\n]+) attributes, but it's not working.