- How to use streamstats and identify consecutive ev...

Hi,

We have applications Availability data in splunk.

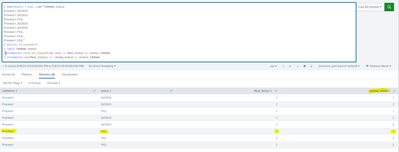

With below SPL, I got this data.

Base_SPL..| streamstats reset_on_change=true count as Real_Status by status,JonName

The challenge is to identify, if 2 or more successive failure have happened.

Only show ALL Fail events, if 2 or more successive failures.

In the below table, only the contents highlighted in RED needs to be shown. (Ignore Failure, highlighted in GREEN)

If i filter with below SPL, then i will miss the 1st FAIL event (Highlighted in RED)

where Real_Status > 1

So how do I solve this challenge?

| JobName | status | Real_Status |
|---|---|---|
| Process1 | SUCCESS | 1 |
| Process1 | SUCCESS | 2 |
| Process1 | FAIL | 1 |
| Process1 | SUCCESS | 1 |
| Process1 | FAIL | 1 |
| Process1 | FAIL | 2 |
| Process1 | FAIL | 3 |
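
To make the problem concrete, here is a small Python sketch that emulates the run counter and the filter from the question. It is not Splunk code: `run_count` is a hypothetical helper that only approximates what `streamstats reset_on_change=true count` does for a single job, and the rows are the seven events from the table above. The filter is restricted to FAIL rows for clarity.

```python
# Rows from the question's table: (JobName, status), in event order.
rows = [
    ("Process1", "SUCCESS"),
    ("Process1", "SUCCESS"),
    ("Process1", "FAIL"),
    ("Process1", "SUCCESS"),
    ("Process1", "FAIL"),
    ("Process1", "FAIL"),
    ("Process1", "FAIL"),
]

def run_count(keys):
    """Hypothetical stand-in for a resetting count: restart at 1 whenever the key changes."""
    counts, prev, n = [], None, 0
    for key in keys:
        n = n + 1 if key == prev else 1
        counts.append(n)
        prev = key
    return counts

real_status = run_count(rows)

# Emulates "where Real_Status > 1", limited to FAIL rows.
kept = [i for i, (row, n) in enumerate(zip(rows, real_status))
        if row[1] == "FAIL" and n > 1]

print(real_status)  # per-row counter
print(kept)         # zero-based positions of the rows that survive the filter
```

The run of three failures occupies positions 4, 5 and 6, but position 4 carries a counter of 1, so a filter on the counter alone cannot keep it. The filter needs to know the final length of the run, not the running count.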


Building on solutions from both @richgalloway and @yeahnah, try something like this:

| streamstats reset_on_change=true count as Real_Status by status,JobName

| reverse

| streamstats reset_on_change=true max(Real_Status) as conseq_status by status,JobName

| where status="FAIL" AND conseq_status>1
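
For anyone wondering why the `reverse` step matters, here is a rough Python emulation of the same three steps: a resetting count, a reversal, and a resetting running max. It is only a sketch under assumptions: `run_count` and `run_max` are hypothetical helpers, the data is a single job taken from the question, and the final flip back to the original order is my addition (in SPL a second `| reverse` would do the same).

```python
# Rows from the question: (JobName, status), in event order.
rows = [
    ("Process1", "SUCCESS"),
    ("Process1", "SUCCESS"),
    ("Process1", "FAIL"),
    ("Process1", "SUCCESS"),
    ("Process1", "FAIL"),
    ("Process1", "FAIL"),
    ("Process1", "FAIL"),
]

def run_count(keys):
    """Resetting count: restart at 1 whenever the key changes."""
    out, prev, n = [], None, 0
    for key in keys:
        n = n + 1 if key == prev else 1
        out.append(n)
        prev = key
    return out

def run_max(keys, values):
    """Resetting running max: restart whenever the key changes."""
    out, prev, m = [], None, 0
    for key, value in zip(keys, values):
        m = max(m, value) if key == prev else value
        out.append(m)
        prev = key
    return out

# Step 1: forward pass, number each event within its run.
real_status = run_count(rows)

# Step 2: reverse, so the last event of each run (the largest count) comes first.
rev_rows = rows[::-1]
rev_status = real_status[::-1]

# Step 3: the running max now spreads the run length to every event in the run.
conseq_status = run_max(rev_rows, rev_status)

# Step 4: keep FAIL events whose run has length 2 or more, then restore event order.
result = [
    (job, status, rs)
    for (job, status), rs, cs in zip(rev_rows, rev_status, conseq_status)
    if status == "FAIL" and cs > 1
][::-1]

print(result)
```

After the reversal, the first event seen in each run is the one with the highest count, so the running max labels every event in the run with the full run length. That is what lets the first failure of the run (count 1) pass the filter while the isolated failure does not.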

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Building on solutions from both @richgalloway and @yeahnah , try something like this

| streamstats reset_on_change=true count as Real_Status by status,JobName

| reverse

| streamstats reset_on_change=true max(Real_Status) as conseq_status by status,JobName

| where status="FAIL" AND conseq_status>1- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Add an eventstats to find the maximum value of Real_Status, then filter out events whose maximum is < 2.

Base_SPL..

| streamstats reset_on_change=true count as Real_Status by status,JobName

| eventstats max(Real_Status) as conseq_status by status,JobName

| where conseq_status > 1

If this reply helps you, Karma would be appreciated.


We are still missing the first event in the series of consecutive failures.


I see what I did wrong. I've fixed my answer.



It's only a consecutive failure once the second occurrence happens, so the first occurrence needs to be captured and expanded out as a result row again.

Extending on @richgalloway's better solution, something like this should work...

| streamstats reset_on_change=true count as Real_Status by status,JobName

| streamstats values(Real_Status) as status_values max(Real_Status) as conseq_status by status,JobName

| where status="FAIL" AND conseq_status>=2

| eval status_count=mvcount(status_values)

,status_values=if(status_count=2, status_values, null())

| mvexpand status_values

| eval Real_Status=if(status_count=2, status_values, Real_Status)

| table JobName status Real_Status


Hi @gvk_us

This run-anywhere example should meet your requirement...

| makeresults | eval _raw="JobName,status

Process1,SUCCESS

Process1,SUCCESS

Process1,FAIL

Process1,SUCCESS

Process1,SUCCESS

Process1,FAIL

Process1,FAIL

Process1,FAIL"

| multikv forceheader=1

| table JobName,status

| streamstats window=2 count as Real_Status BY status JobName

| where status="FAIL" AND Real_Status>=2

Hope that helps


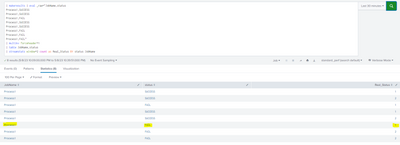

This solution is missing the 1st event of the consecutive events, as highlighted.