How to group events based on certain value?

I want to group certain values within a certain time frame, let's say 10 minutes. The values are just fail or success.

Grouping these events within the 10 minutes wasn't a problem, but it seems Splunk just puts all the values together without considering time, so I can't see which value was first or last. For example, I want to see the first 4 fail events and then create a trigger if the 5th value is success.

Which command should I be using? I tried transaction with stats or cluster, etc.

In short, group the events within a time frame if they meet a certain criterion:

the first 4 events are fail and the 5th event is success

Like this (if you have a problem with reset_after):

index=* sourcetype=linux_secure tag=authentication action="failure" OR action="success"

| reverse

| streamstats count(eval(match(action,"success"))) AS sessionID BY user

| stats count AS action_count first(action) AS last_action range(_time) AS duration first(_raw) AS final_success last(_raw) AS first_failure BY user sessionID

| eval action_count = action_count - if((last_action="success"), 1, 0)

| search action_count>=4 AND last_action="success"

This way gives more context, too; if you get rid of the AND last_action="success" part you will see those that are still under attack without a successful login.

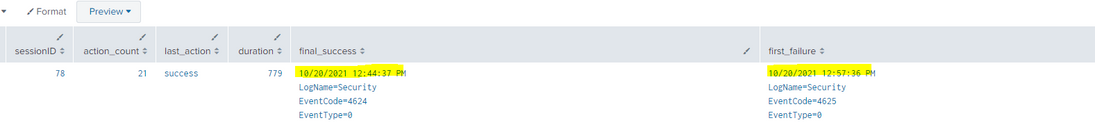

@woodcock I ran your query on my Splunk instance, and it shows the "First Failure" time to be later than the "Final Success" time (see the screenshot).

If the search is for multiple failed logins followed by a successful login, how can the success event's timestamp be before the failure? I hope that makes sense.

I played a lot yesterday with your last solution, and it seems I have version 6.3.3, so the reset_after option probably isn't supported. I will try this solution.

index=* sourcetype=linux_secure tag=authentication action="failure" OR action="success" | bucket span=5m _time

| streamstats count(eval(match(result_login,"success"))) AS sessionID BY user

| stats count AS action_count first(result_login) AS last_action range(_time) AS duration first(_raw) AS final_success last(_raw) AS first_failure BY user sessionID

| eval action_count = action_count - if((last_action="success"), 1, 0)

| search action_count>=4 AND last_action="success" AND sessionID=1

I have removed "reverse"; otherwise it looks at the events from the latest date to the earliest. I have added "bucket span=5m _time" to look for events within a 5-minute span.

I also added sessionID=1; otherwise the query keeps counting failures after the success event until it sees a second success, or even more.

The query looks very good, but I still have to test it extensively. I am just wondering if the bucket span is fine.

So far it looks good. Thank you very much for your input, highly appreciated.
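On the bucket span question: as far as I know, bucket (an alias of bin) snaps each _time down to a fixed span boundary rather than measuring a sliding 5-minute window, so two events only 2 minutes apart can end up in different buckets. A minimal Python sketch of that rounding, using hypothetical timestamps expressed as seconds since midnight (the function name and values are illustrative only, not Splunk internals):

```python
def bucket(seconds, span_seconds=300):
    """Snap a timestamp down to the start of its fixed-width bucket."""
    return seconds - (seconds % span_seconds)


# Hypothetical events at 13:04:00 and 13:06:00 (seconds since midnight).
t1 = 13 * 3600 + 4 * 60
t2 = 13 * 3600 + 6 * 60

same_bucket = bucket(t1) == bucket(t2)   # fixed 5-minute boundaries
within_five_minutes = (t2 - t1) <= 300   # sliding 5-minute window

print(bucket(t1), bucket(t2), same_bucket, within_five_minutes)
```

Here the two events are 2 minutes apart, yet they fall on either side of the 13:05 boundary, which is worth keeping in mind when testing the span.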

You mustn't remove the reverse; if you do then the success event will be associated with the incorrect group of failure events.

The problem with the reverse command is that _time runs backwards, so the query calculates backwards. For example: first failed login at 13:10, second failed login at 13:09, third login at 13:08.

When testing, I look at the raw events and compare them to the search results, and it works very well, so I am surprised you say it won't work properly.

Are you pulling in the events using |inputcsv or |dbxquery or something like that? For "normal" searches Splunk ALWAYS returns the NEWEST event first (on top), and we need to walk through them with the OLDEST event first (thus the need for reverse).

This solution doesn't work quite right. It does see the first (fail) and last (success) messages, but the count is not correct. For example, it counts 4 in total, but within that total I see 2 successes and 2 failures; it doesn't see the sequence of the first 4 failures.

You are quite correct; it is counting the "success" event, too. I have corrected the answer to fix this off-by-one error.

It seems that the query looks for failures and successes in the time range, but it calculates backwards. For example: first event on day 1 at 1500 hours, next event on day 2 at 1400 hours, etc.

It counts the time backwards.

What about the time frame? Let's say you want to look within 10 minutes; should I add: | bucket span=10m _time | ?

Are you saying that if the time between login attempts is longer than 10 minutes, it should be considered a new session/attempt-group?

Like this:

index=* sourcetype=linux_secure tag=authentication action="failure" OR action="success"

| reverse

| streamstats sum(eval(match(action,"failure"))) AS action_count reset_after="("match(action,\"success\")")" BY user

| where match(action,"success") AND action_count>=4

Make sure to keep the escape characters for the double quotes exactly as shown.
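For readers who want to see the counting logic outside SPL, here is a rough Python sketch of what the search above is meant to do: walk the events oldest-first, keep a per-user running count of failures, and reset that count after each success. It is not how streamstats is implemented; the function name, the tuple layout, and the sample events are all made up for illustration.

```python
from collections import defaultdict


def failures_then_success(events, min_failures=4):
    """Return (user, failure_count) for each success preceded by enough failures.

    `events` holds (time, user, action) tuples, hypothetical stand-ins for
    the _time, user and action fields in the search.
    """
    running = defaultdict(int)  # per-user failures since the last success
    hits = []
    # Sorting oldest-first plays the role of `| reverse`.
    for _time, user, action in sorted(events):
        if action == "failure":
            running[user] += 1
        elif action == "success":
            if running[user] >= min_failures:
                hits.append((user, running[user]))
            running[user] = 0  # same idea as reset_after on a success
    return hits


events = [
    (1, "alice", "failure"),
    (2, "alice", "failure"),
    (3, "bob", "failure"),
    (4, "alice", "failure"),
    (5, "alice", "failure"),
    (6, "alice", "success"),
    (7, "bob", "success"),
    (8, "alice", "failure"),
    (9, "alice", "success"),
]
print(failures_then_success(events))
```

Only alice's first success qualifies here: it follows four failures, while bob's success and alice's second success each follow a single failure.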

That query looks good, but I am getting an error, even though the streamstats command looks fine:

index=* action=failure OR action=success

| streamstats sum(eval(match(action,"failure"))) AS action_count reset_after="("match(action,\"success\")")" BY user

| where match(action,"success") AND action_count>=4

error:

Error in 'streamstats' command: The argument 'reset_after=(match(action,"success"))' is invalid.

I was just doing this on a demo system on v6.5.0 and it worked just fine like this:

index=* sourcetype=linux_secure

| reverse

| streamstats sum(eval(match(action,"failure"))) AS action_count reset_after="("match(action,\"success\")")" BY user

| where match(action,"success") AND action_count>=4

Do note that I forgot the very important reverse command (I will fix the original answer), so maybe reset_after is not in your version. I will post a different answer.

A shot in the dark..

your base search earliest=-10m@m latest=@m | stats list(status) as status

This will give a list of status values in the order they are seen in Splunk (reverse chronological). You can then check individual elements using the mvindex(status,N) function. Use N=-1 for the last element, N=-2 for the second to last, and so on; N=0 is the first element and N=1 the second.
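The index convention described above (0 for the first element, -1 for the last) matches Python list indexing, so the check can be sketched in Python. This assumes the list really is newest-first and that only the five most recent events matter; the function name and the "fail"/"success" values are illustrative.

```python
def four_fails_then_success(statuses_newest_first):
    """Check the five newest statuses for four fails followed by a success."""
    if len(statuses_newest_first) < 5:
        return False
    newest = statuses_newest_first[0]            # like mvindex(status, 0)
    previous_four = statuses_newest_first[1:5]   # like mvindex(status, 1, 4)
    return newest == "success" and all(s == "fail" for s in previous_four)


# Newest event first, as the list would arrive in reverse chronological order.
print(four_fails_then_success(["success", "fail", "fail", "fail", "fail"]))
print(four_fails_then_success(["fail", "success", "fail", "fail", "fail"]))
```

Because the newest event sits at index 0, the success has to be at the front of the list and the four failures right behind it.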