Are you a member of the Splunk Community?

- Find Answers

- :

- Using Splunk

- :

- Splunk Search

- :

- Re: How to extract fields from a CSV file that has...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi There!

I have an issue with a field extraction. I have a Windows CSV file, that has several fields that have commas in the fields itself, forcing it to break at the wrong position.

Is there any clever way I could fix this? Unfortunately I'm not in the position to edit the source.

Here's an example of the so-called-csv:

"ssrv0052,true,,,,,,,unix,,,""Sunday, February 7, 2016 8:05:15 PM CET"",Integratie Service Manager : DS_Integratie Service Manager _A02 - SM9 Linux-Unix-Windows Servers - Full,,IPv4,,,,,,,,,,,,false,,,5de7125b74af5305076f03648a900aea,false,""Sunday, February 7, 2016 8:05:15 PM CET"",""Sunday, February 7, 2016 8:05:15 PM CET"",,ssrv0052,,,false,,,,,,,,,,,,[server],,,,,,,,,,,,,,,Integratie Service Manager : DS_Integratie Service Manager _A02 - SM9 Linux-Unix-Windows Servers - Full,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,"

Take a close look at the date field. It shows the issue I have.

Thank you in advance!

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Well, if you can't change the source and get it cleaned up (and you should probably still try to do that), you'll have to do some cleanup using props/transforms. Try this:

props.conf

[dirtycsv]

SEDCMD-cleanupMess = s/^\"//g s/\"$//g s/\"\"/"/g

REPORT-cleanFields = cleancsv_fields

SHOULD_LINEMERGE=false

NO_BINARY_CHECK = true

transforms.conf

[cleancsv_fields]

DELIMS = ","

FIELDS = f01,f02,f03,f04,f05,f06,f07,f08,f09,f10,f11,f12,f13,f14,f15,f16,f17,f18,f19,f20,f21,f22,f23,f24,f25,f26,f27,f28,f29,f30,f31,f32,f33,f34,f35,f36,f37,f38,f39,f40

Change your FIELDS list to the field names you want to assign to each of the field names in the - now structurally cleaned up - csv file and you should be on a happier path.

The SEDCMD above contains a space-separated list of three SED replacements:

- Remove double quote at beginning of line

- Remove double quote at end of line

- Replace double-double quotes around timestamp fields with single double quote

There may be a more efficient way to do this with RegEx, but this should work. The most efficient way would be to clean up the source before it is indexed into Splunk.... 😉

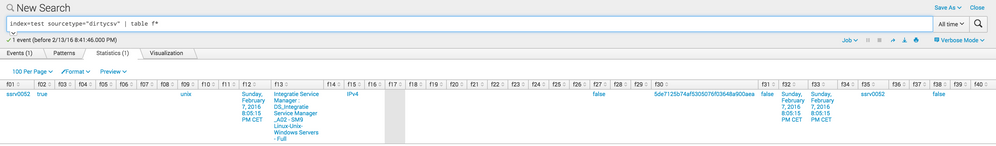

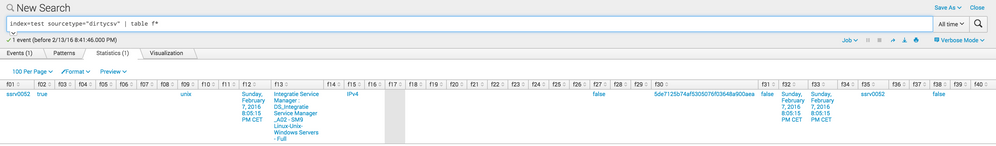

I tested this on my local 6.3.x install:

props/transforms need to be on your first parsing tier, either indexer or heavy forwarder, not on universal forwarder.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Well, if you can't change the source and get it cleaned up (and you should probably still try to do that), you'll have to do some cleanup using props/transforms. Try this:

props.conf

[dirtycsv]

SEDCMD-cleanupMess = s/^\"//g s/\"$//g s/\"\"/"/g

REPORT-cleanFields = cleancsv_fields

SHOULD_LINEMERGE=false

NO_BINARY_CHECK = true

transforms.conf

[cleancsv_fields]

DELIMS = ","

FIELDS = f01,f02,f03,f04,f05,f06,f07,f08,f09,f10,f11,f12,f13,f14,f15,f16,f17,f18,f19,f20,f21,f22,f23,f24,f25,f26,f27,f28,f29,f30,f31,f32,f33,f34,f35,f36,f37,f38,f39,f40

Change your FIELDS list to the field names you want to assign to each of the field names in the - now structurally cleaned up - csv file and you should be on a happier path.

The SEDCMD above contains a space-separated list of three SED replacements:

- Remove double quote at beginning of line

- Remove double quote at end of line

- Replace double-double quotes around timestamp fields with single double quote

There may be a more efficient way to do this with RegEx, but this should work. The most efficient way would be to clean up the source before it is indexed into Splunk.... 😉

I tested this on my local 6.3.x install:

props/transforms need to be on your first parsing tier, either indexer or heavy forwarder, not on universal forwarder.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Wow ssievert,

thanx for the effort you put in! I'm gonna try this as soon as possible, and report back!

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thx ssievert, you helped me out here!

Works like a charm.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi all, let me rephrase my question:

I have a data input I cannot modify by the source. It was supposed to be a csv, but doesn't really comply.

This is an example line/event from the input:

"ssrv0052,true,,,,,,,unix,,,""Sunday, February 7, 2016 8:05:15 PM CET"",Integratie Service Manager : DS_Integratie Service Manager _A02 - SM9 Linux-Unix-Windows Servers - Full,,IPv4,,,,,,,,,,,,false,,,5de7125b74af5305076f03648a900aea,false,""Sunday, February 7, 2016 8:05:15 PM CET"",""Sunday, February 7, 2016 8:05:15 PM CET"",,ssrv0052,,,false,,,,,,,,,,,,[server],,,,,,,,,,,,,,,Integratie Service Manager : DS_Integratie Service Manager _A02 - SM9 Linux-Unix-Windows Servers - Full,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,"

I'm affraid my only resort for extracting the fields is to use a regex, because the input is devided in 9 blocks within quotes. However, the data contains 40+ fields. These are the blocks I can distinguish in the data:

"ssrv0052,true,,,,,,,unix,,,"

"Sunday, February 7, 2016 8:05:15 PM CET"

",Integratie Service Manager : DS_Integratie Service Manager _A02 - SM9 Linux-Unix-Windows Servers - Full,,IPv4,,,,,,,,,,,,false,,,5de7125b74af5305076f03648a900aea,false,"

"Sunday, February 7, 2016 8:05:15 PM CET"

","

"Sunday, February 7, 2016 8:05:15 PM CET"

",,ssrv0052,,,false,,,,,,,,,,,,[server],,,,,,,,,,,,,,,Integratie Service Manager : DS_Integratie Service Manager _A02 - SM9 Linux-Unix-Windows Servers - Full,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,"

Who can help me out?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Looks like the values that have commas in it are quoted, so you shouldn't have that issue. Specifying the list of fields as they occur in the file configured with FIELDS= in props.conf or a header row in the file itself and CHECK_FOR_HEADER=true should work without issues.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

For the date field you are right, but looking at the original data, I can distinguish 9 "blocks" that are set within quotes:

"ssrv0052,true,,,,,,,unix,,,"

"Sunday, February 7, 2016 8:05:15 PM CET"

",Integratie Service Manager : DS_Integratie Service Manager _A02 - SM9 Linux-Unix-Windows Servers - Full,,IPv4,,,,,,,,,,,,false,,,5de7125b74af5305076f03648a900aea,false,"

"Sunday, February 7, 2016 8:05:15 PM CET"

","

"Sunday, February 7, 2016 8:05:15 PM CET"

",,ssrv0052,,,false,,,,,,,,,,,,[server],,,,,,,,,,,,,,,Integratie Service Manager : DS_Integratie Service Manager _A02 - SM9 Linux-Unix-Windows Servers - Full,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,"

If I understand you correct, that would mean that I create 9 pretty useless fields, right?

What I really would like is a way to distinguish all original fields, any thoughts on that?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Can you provide us with your regular expression that is breaking? Are you trying to create a field for each piece of text between the comas?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm sorry, I misread your question. I haven't created a regex yet, not the first thing I should put up on my resume 🙂

I tried extracting by delimiter in the gui until now. Also see my reply to ssievert that naming the fields in props.conf doesn't lead to the desired fields. Any help appreciated!

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Most certainly, brace yourself:

[Computer]:Display Label,[Computer]:Allow CI Update,[Computer]:BiosAssetTag,[Computer]:BiosDate,[Computer]:BiosSerialNumber,[Computer]:BiosSource,[Computer]:BiosUuid,[Computer]:BiosVersion,[Computer]:CI Type,[Computer]:CalculatedLocation,[Computer]:ChassisType,[Computer]:Create Time,[Computer]:Created By,[Computer]:DefaultGatewayIpAddress,[Computer]:DefaultGatewayIpAddressType,[Computer]:Description,[Computer]:DiscoveredContact,[Computer]:DiscoveredDescription,[Computer]:DiscoveredLocation,[Computer]:DiscoveredModel,[Computer]:DiscoveredOsName,[Computer]:DiscoveredOsVendor,[Computer]:DiscoveredOsVersion,[Computer]:DiscoveredVendor,[Computer]:DnsServers,[Computer]:DomainName,[Computer]:Enable Aging,[Computer]:ExtendedNodeFamily,[Computer]:ExtendedOsFamily,[Computer]:Global Id,[Computer]:Is Candidate For Deletion,[Computer]:Last Access Time,[Computer]:LastModifiedTime,[Computer]:MemorySize,[Computer]:Name,[Computer]:NetBiosName,[Computer]:Node Boot Time,[Computer]:Node Is Complete,[Computer]:Node Is Route,[Computer]:Node Is Virtual,[Computer]:Node Key,[Computer]:Node NNM UID,[Computer]:Node Operating System Installation type,[Computer]:Node Operating System Release,[Computer]:Node Operating System accuracy,[Computer]:Node Server Type,[Computer]:Node is Desktop,[Computer]:NodeFamily,[Computer]:NodeModel,[Computer]:NodeRole,[Computer]:Note,[Computer]:OS Architecture,[Computer]:Origin,[Computer]:OsDescription,[Computer]:OsFamily,[Computer]:OsVendor,[Computer]:PAE Enabled,[Computer]:PrimaryDnsName,[Computer]:ProcessorFamily,[Computer]:SerialNumber,[Computer]:SnmpSysName,[Computer]:SwapMemorySize,[Computer]:SysObjecttId,[Computer]:UdUniqueId,[Computer]:Updated By,[Computer]:User Label,[Computer]:Vendor,[RunningSoftware]:Display Label,[RunningSoftware]:Allow CI Update,[RunningSoftware]:CI Type,[RunningSoftware]:Create Time,[RunningSoftware]:Created By,[RunningSoftware]:Description,[RunningSoftware]:Enable Aging,[RunningSoftware]:Global Id,[RunningSoftware]:Is Candidate For Deletion,[RunningSoftware]:Last Access Time,[RunningSoftware]:LastModifiedTime,[RunningSoftware]:Name,[RunningSoftware]:Note,[RunningSoftware]:Origin,[RunningSoftware]:Updated By,[RunningSoftware]:User Label,[RunningSoftware]:Vendor,[RunningSoftware]:Application Category,[RunningSoftware]:Application IP,[RunningSoftware]:Application IP Routing Domain,[RunningSoftware]:Application IP Type,[RunningSoftware]:Application Installed Path,[RunningSoftware]:Application Listening Port Number,[RunningSoftware]:Application Timeout,[RunningSoftware]:Application Username,[RunningSoftware]:Application Version Description,[RunningSoftware]:Container name,[RunningSoftware]:DiscoveredProductName,[RunningSoftware]:ProductName,[RunningSoftware]:StartupTime,[RunningSoftware]:Version

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This looks like the CSV file, can you provide us with the regular expression that is parsing out the fields?