How to edit my search to display my results in a trendline?

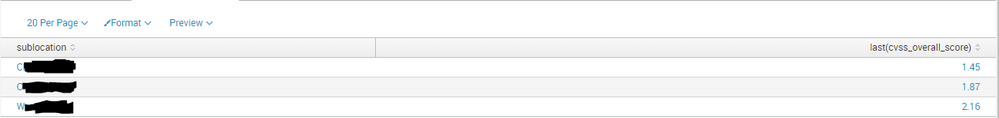

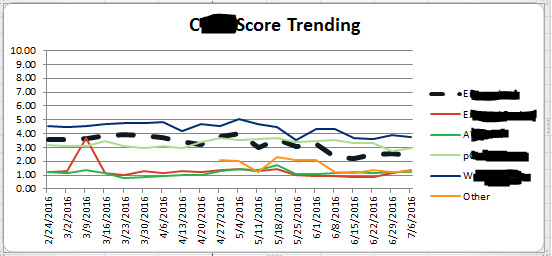

I'm trying to graph this same type of trendline (2nd Screenshot) in Splunk with daily results from 12pm-12pm. I'm using the following search which gives me my results per 24 hours (1st screenshot) but I'm unable to find the trendline command to give me results like in the 2nd screenshot:

index=#### sourcetype=#####

| lookup csirt_asset_list ip OUTPUT sublocation

| search hasBeenMitigated=0 sublocation=*

| stats dc(ip) as Total1 by sublocation

| join [ search index=#### sourcetype=##### pluginID<1000000 baseScore>0

| lookup csirt_asset_list ip OUTPUT sublocation

| search hasBeenMitigated=0 sublocation=*

| stats dc(ip) as Total2 by sublocation]

| join [search index=#### sourcetype=##### pluginID<1000000 baseScore>0

| lookup csirt_asset_list ip OUTPUT sublocation

| search hasBeenMitigated=0 sublocation=*

| stats count as counted by baseScore, sublocation

| fields + sublocation, baseScore, counted

| sort -baseScore

| lookup weight_lookup baseScore OUTPUT wmultiplier

| eval aaa=(counted * wmultiplier)

| eventstats sum(aaa) as test1, sum(counted) as test2

| eval bbb=(test1 / test2)

| eval bbb=round(bbb,2)]

| eval cvss_overall_score=bbb*(Total2/Total1)

| stats last(cvss_overall_score) by sublocation

Thanks for your help.

@jhampton3rd - Were you able to test out DalJeanis' solution? Did it work? If yes, please don't forget to resolve this post by clicking on "Accept". If you still need more help, please provide a comment with some feedback. Thanks!

I'll be trying these suggestions tomorrow when I get back to the office. Thanks for everyone's input. Was away for a couple of weeks.

I spent a long time analyzing your query. Good thing that the issue was not a rattlesnake, because I would probably be dead by now. 😉

Basically, the issue is that you don't have any _time associated with your summary results, so there's nothing there to timechart. If you want the results for past days, you're going to have to work a time factor into your query that extracts the data. You've probably had it windowed to a single day's data when you run it.

Here's a first crack at that. Notice that I've made each subquery extremely explicit about what it sends back to match against the original records.

index=#### sourcetype=##### hasBeenMitigated=0

| lookup csirt_asset_list ip OUTPUT sublocation

| search sublocation=*

| eval mydate=relative_time(_time,"-0d@d")

| stats dc(ip) as Total1 by sublocation mydate

| join

[ search index=#### sourcetype=##### hasBeenMitigated=0 pluginID<1000000 baseScore>0

| eval mydate=relative_time(_time,"-0d@d")

| fields + ip mydate

| lookup csirt_asset_list ip OUTPUT sublocation

| search sublocation=*

| stats dc(ip) as Total2 by sublocation mydate

| fields + sublocation mydate Total2

]

| join

[search index=#### sourcetype=##### hasBeenMitigated=0 pluginID<1000000 baseScore>0

| eval mydate=relative_time(_time,"-0d@d")

| fields + ip baseScore mydate

| lookup csirt_asset_list ip OUTPUT sublocation

| search sublocation=*

| stats count as counted by baseScore, sublocation, mydate

| fields + sublocation, baseScore, counted, mydate

| sort 0 -baseScore

| lookup weight_lookup baseScore OUTPUT wmultiplier

| eval aaa=(counted * wmultiplier)

| eventstats sum(aaa) as test1, sum(counted) as test2 by mydate

| eval bbb=(test1 / test2)

| eval bbb=round(bbb,2)

| fields + sublocation mydate bbb

]

| eval cvss_overall_score=bbb*(Total2/Total1)

| stats last(cvss_overall_score) as cvss_overall_score by sublocation mydate

| eval _time = mydate

If I understood the original query correctly, the last() was always operating against a single summary value, so first() or avg() would have returned the same result. If so, that is still the case here.

Edited to use sort 0 rather than sort, in case the subsearch returns more records than the default sort limit (10,000).
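The query above buckets events on calendar days, while the question asks for daily results from 12pm to 12pm. As a rough illustration of how such a bucket could be derived, here is a small Python sketch (not SPL). The function name `noon_bucket` and the sample timestamps are hypothetical; the idea is to shift each timestamp back 12 hours, snap it to midnight, then shift it forward 12 hours again.

```python
from datetime import datetime, timedelta

def noon_bucket(ts: datetime) -> datetime:
    """Return the 12:00 start of the noon-to-noon window that contains ts."""
    shifted = ts - timedelta(hours=12)            # move noon onto midnight
    midnight = shifted.replace(hour=0, minute=0, second=0, microsecond=0)
    return midnight + timedelta(hours=12)         # move the bucket start back to noon

# Hypothetical event times, for illustration only.
events = [
    datetime(2017, 3, 1, 11, 59),   # just before noon: belongs to the previous window
    datetime(2017, 3, 1, 12, 0),    # exactly noon: starts a new window
    datetime(2017, 3, 2, 9, 30),    # next morning: still in the window opened on Mar 1
]
buckets = [noon_bucket(e) for e in events]
```

In SPL, the same shift could presumably be written as `eval mydate=relative_time(_time - 43200, "@d") + 43200` in place of the calendar-day expression, though that is an untested assumption about how it would fit into this particular search.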

On an ongoing basis, it might be better to take those subqueries and send their daily results out to a csv file, reading the file in every day and appending just one day's new calculations.
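The append-one-day-at-a-time idea can be sketched outside Splunk as follows. This is a minimal Python illustration, not the Splunk mechanism itself; the helper names `append_daily_results` and `read_history` and the column names are assumptions chosen to mirror the fields in the query.

```python
import csv
import os

# Assumed column layout for the history file (hypothetical).
FIELDS = ("mydate", "sublocation", "cvss_overall_score")

def append_daily_results(path, rows, fieldnames=FIELDS):
    """Append one day's rows to the CSV, writing the header only for a new file."""
    new_file = not os.path.exists(path) or os.path.getsize(path) == 0
    with open(path, "a", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames)
        if new_file:
            writer.writeheader()
        writer.writerows(rows)

def read_history(path):
    """Read back every stored day so the full series can be charted."""
    with open(path, newline="") as f:
        return list(csv.DictReader(f))
```

Each scheduled run would call `append_daily_results` with only that day's scores, and the chart would be drawn from `read_history`, so the expensive subqueries never have to be rerun over past days.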

Honestly, if (as I suspect) bbb is a single value for each day, then at the end of the second subquery you should replace everything from | eventstats on with

| stats sum(aaa) as test1, sum(counted) as test2 by mydate

| eval bbb=round((test1/test2),2)

| fields + mydate, bbb

]
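As a worked example of the arithmetic, the following Python sketch computes bbb for one day as the weighted average sum(counted * wmultiplier) / sum(counted), then applies cvss_overall_score = bbb * (Total2 / Total1) for one sublocation. All numbers, including the weights, are made up for illustration and do not come from the real weight_lookup table.

```python
def weighted_score(rows):
    """rows: (counted, wmultiplier) pairs for a single day; returns bbb."""
    test1 = sum(counted * w for counted, w in rows)   # sum(aaa)
    test2 = sum(counted for counted, _ in rows)       # sum(counted)
    return round(test1 / test2, 2)

def cvss_overall_score(bbb, total2, total1):
    """Scale the day's weighted score by the share of affected hosts."""
    return bbb * (total2 / total1)

# Hypothetical day: three baseScore groups with invented weights.
rows = [(10, 1.0), (5, 2.0), (5, 4.0)]
bbb = weighted_score(rows)                              # (10 + 10 + 20) / 20 = 2.0
score = cvss_overall_score(bbb, total2=30, total1=60)   # 2.0 * 0.5 = 1.0
```

Because bbb depends only on the day, every sublocation on that day is multiplied by the same value, and only the Total2/Total1 ratio varies between sublocations.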

Thanks DalJeanis,

I think I'm going to try sending the results to a .csv file. I believe this will be the easiest approach. Just trying to get that to work since this will be the first time I've done this. Thanks for the suggestions though. Still wasn't able to get the query to produce a trendline.

So, if I understand your code, hasBeenMitigated is a field on the events, not the lookup table. If so, I'd move that condition up into each initial search, rather than running lookups in the search and subsearches on records that you don't care about and are going to discard.

It also seems to me that the second join is going to end up with a single value for bbb, which the subsequent eval statement will then apply to every single record. Have I interpreted that correctly?