- Re: Generating _events_ in search

Hello there.

I was wondering... is there any way to generate _events_ in search?

I mean, I know of the makeresults command of course but it generates stats results, not events per se.

Is there any way to generate events at search time, for example to test parsing rules?

Something like (pseudocode):

| <generate_my_events> | eval _raw="blah blah" | eval source="syslog" | eval sourcetype="whatever:syslog"



It's possible to append makeresults to an event search so that it generates events instead of a stats table, using this syntax:

index=dummy earliest=-1s

| append [| makeresults count=8935 | eval _time=('_time' - (random() % 86400))]

After that you can play with the number of events and the time range (here, a 24-hour backfill).

You can improve the events content with some random data before closing the subsearch:

| eval ip=((((("10." . (((random() % 4) * 10) + 10)) . ".") . ((random() % 16) + 17)) . ".") . ((random() % 16) + 33))

| eval _spacelist="Lungdanum Londres Londra Londar Landan Londin Lonn London Paris Parigi Paras Lutetia Parais Paras Pari Paries Pariis Pariisi Parijs Ba-le Parisium New-York Berlin Washington Kathmandu"

| makemv delim=" " _spacelist

| eval city=mvindex(_spacelist,((random() % mvcount(_spacelist)) - 1))

| eval _dashlist=" problem- issue- situation- operation- intervention-"

| makemv delim="-" _dashlist

| eval operation=mvindex(_dashlist,((random() % mvcount(_dashlist)) - 1))

And end up with:

| eval _raw=_time.operation." in ".city." from ".ip

- Nothing is indexed.

- Events are generated on the fly.

- You can create your own lists with or without a lookup.

- You can add that to a dashboard to make it more interactive.
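Put together, the fragments above might look like the following single search. This is only a sketch under a few assumptions: `index=dummy` is taken from the post and is expected to return nothing, the city list is shortened, and the list lookups are simplified (no leading spaces in the list values, no `- 1` on the index, since `random() % mvcount(...)` already yields a valid zero-based index), with an explicit space concatenated into `_raw` instead.

```spl
index=dummy earliest=-1s
| append
    [| makeresults count=8935
     | eval _time=_time - (random() % 86400)
     | eval ip="10." . (((random() % 4) * 10) + 10) . "." . ((random() % 16) + 17) . "." . ((random() % 16) + 33)
     | eval _spacelist="London Paris Berlin Washington Kathmandu"
     | makemv delim=" " _spacelist
     | eval city=mvindex(_spacelist, random() % mvcount(_spacelist))
     | eval _dashlist="problem-issue-situation-operation-intervention"
     | makemv delim="-" _dashlist
     | eval operation=mvindex(_dashlist, random() % mvcount(_dashlist))
     | eval _raw=_time . " " . operation . " in " . city . " from " . ip]
```

Changing `count` adjusts the volume, and changing `86400` (seconds) adjusts how far back the timestamps are spread.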


Now that's a neat trick!

It does indeed seem to generate events instead of a stats table.

Unfortunately, those events are not parsed.

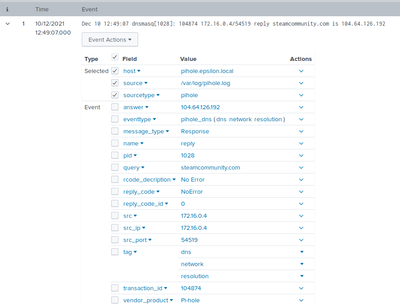

For example - the same event as a result from the normal search:

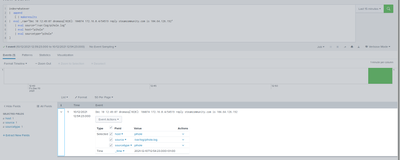

And the same event re-created with makeresults:

Of course I wouldn't expect index-time extractions to work but apparently search-time transforms are not applied either.


If you want it indexed and parsed, you can make it a scheduled saved search that runs every 5 minutes, with an extra

| outputcsv append=true tobeindexed.csv

Then ensure you monitor that file and you can define whatever parsing settings you want.
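As a rough sketch of what such a scheduled search might contain: the `event` field name and the message text below are made up for illustration, and only the final `outputcsv append=true tobeindexed.csv` step comes from the post.

```spl
| makeresults count=100
| eval _time=_time - (random() % 300)
| eval event=strftime(_time, "%Y-%m-%d %H:%M:%S") . " operation in London from 10.10.17.33"
| table event
| outputcsv append=true tobeindexed.csv
```

As far as I know, `outputcsv` writes into `$SPLUNK_HOME/var/run/splunk/csv` on the search head, so that is where the file monitor would need to point. The output is CSV, so the parsing settings may have to deal with a header line and quoting.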

Personally, I use a dashboard to generate events in either a log format or a JSON format, and I add a filter that randomly reduces activity by 50% during night hours and by 20% on weekends.

I can generate thousands of events in a blip, export them as a file, and test my parsing settings easily.

For more advanced types and inputs, I use SA_eventgen.


gentimes is another event-generating command, particularly useful if you want time-based dummy data.
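For illustration, a minimal sketch of `gentimes` producing one row per hour over the last seven days. The `_raw` text is a made-up placeholder; `starttime` is one of the fields `gentimes` itself emits.

```spl
| gentimes start=-7 increment=1h
| eval _time=starttime
| eval _raw=strftime(_time, "%Y-%m-%d %H:%M:%S") . " dummy event"
```

Copying `starttime` into `_time` gives each generated row its own timestamp, which is convenient for feeding `timechart` or similar time-based commands.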


Use makeresults to generate events then use eval commands to add the desired fields to those events.
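A minimal sketch of that suggestion, reusing the placeholder values from the original question (the `count=5` is arbitrary):

```spl
| makeresults count=5
| eval _raw="blah blah", source="syslog", sourcetype="whatever:syslog"
```

Each row gets a `_time` from `makeresults`, and the `eval` fills in the other fields.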

If this reply helps you, Karma would be appreciated.


Tried that 🙂 That was my first obvious choice.

It generates the results as stats, not as events.

As I said, the point is mostly to check parsing without needing an external event source.

But I just thought of something else 🙂

I still can't generate the event "on the fly" but I can generate it with makeresults and then write it to a test index with collect. It doesn't give me much flexibility in the host/time/source area but those are indexed fields anyway. But sourcetype can be specified with collect so I think I'm good.
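A sketch of that makeresults-plus-collect approach might look like this. The index name `test_parsing` is hypothetical and has to exist already; the `_raw` text and sourcetype are the placeholders from the question.

```spl
| makeresults count=3
| eval _raw="blah blah"
| collect index=test_parsing sourcetype="whatever:syslog"
```

Adding `testmode=true` to `collect` should let you preview the results without writing anything to the index. It is also worth checking the `collect` documentation on license usage, since overriding the default `stash` sourcetype may count against the license.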