Are you a member of the Splunk Community?

- Find Answers

- :

- Using Splunk

- :

- Splunk Search

- :

- Re: Duration of all events without time overlap in...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello everyone, beginning on Splunk and asking for your help

I've got something like this in my transaction :

Event 1 :

9:00:00 Start and 11:00:00 Stop

Event 2 :

10:00:00 Start and 11:30:00 Stop

Event 3 :

13:00:00 Start and 14:00:00 Stop

Event 4 :

13:20:00 Start and 13:40:00 Stop

I want to determine the duration of how long were my events combined ON START during the day.

This means we need AT LEAST ONE transaction on START for the duration to grow.

In our case :

9:00 until 11:30 and 13:00 until 14:00 = 3 hours and 30 minutes in total.

So I would like to get 3.5 hours as a result when I have something like what I just showed.

I hope this is not too confusing

Looking forward to your answers

Thanks

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

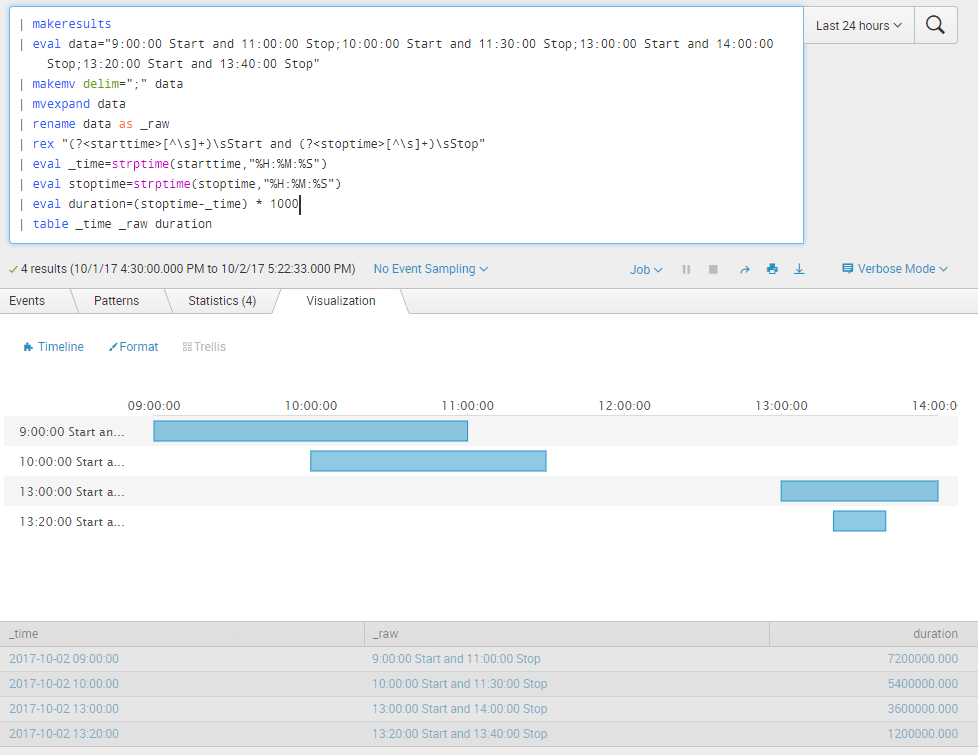

Based on the sample data you can try out the following run anywhere search. Commands till | rename data as _raw created dummy data as per the question. Splunk rex command extracts the starttime and endtime. If there is something in the data to uniquely identify event as well, then the same should be used. I have used _raw data itself as event since the information was not present.

Use strptime()function to convert HH:MM:SS to epoch time. Calculate duration as the difference between stoptime and starttime to get duration as seconds and then multiple by 1000 before feeding to Timeline custom visualization

| makeresults

| eval data="9:00:00 Start and 11:00:00 Stop;10:00:00 Start and 11:30:00 Stop;13:00:00 Start and 14:00:00 Stop;13:20:00 Start and 13:40:00 Stop"

| makemv delim=";" data

| mvexpand data

| rename data as _raw

| rex "(?<starttime>[^\s]+)\sStart and (?<stoptime>[^\s]+)\sStop"

| eval starttime=strptime(starttime,"%H:%M:%S")

| eval stoptime=strptime(stoptime,"%H:%M:%S")

| eval duration=(stoptime-starttime) * 1000

| table starttime _raw duration

PS: While the query works for start and stop time within a single day, it would not work it stop time spanned to the next day or the day after. It is better to log date-time to handle such scenarios rather than handling in the query.

| makeresults | eval message= "Happy Splunking!!!"

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Based on the sample data you can try out the following run anywhere search. Commands till | rename data as _raw created dummy data as per the question. Splunk rex command extracts the starttime and endtime. If there is something in the data to uniquely identify event as well, then the same should be used. I have used _raw data itself as event since the information was not present.

Use strptime()function to convert HH:MM:SS to epoch time. Calculate duration as the difference between stoptime and starttime to get duration as seconds and then multiple by 1000 before feeding to Timeline custom visualization

| makeresults

| eval data="9:00:00 Start and 11:00:00 Stop;10:00:00 Start and 11:30:00 Stop;13:00:00 Start and 14:00:00 Stop;13:20:00 Start and 13:40:00 Stop"

| makemv delim=";" data

| mvexpand data

| rename data as _raw

| rex "(?<starttime>[^\s]+)\sStart and (?<stoptime>[^\s]+)\sStop"

| eval starttime=strptime(starttime,"%H:%M:%S")

| eval stoptime=strptime(stoptime,"%H:%M:%S")

| eval duration=(stoptime-starttime) * 1000

| table starttime _raw duration

PS: While the query works for start and stop time within a single day, it would not work it stop time spanned to the next day or the day after. It is better to log date-time to handle such scenarios rather than handling in the query.

| makeresults | eval message= "Happy Splunking!!!"

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Nilay,

I do have a same requirement of calculating downtime duration withing overlaping events. As per below data, downtime start at ( 09:05:41.031) and ends at (09:14:16.802).

Basically I want to skip those UP events if any other Service goes down before it.

Could you please help to build the query?

2019-03-27 09:05:41.031 Service_1 DOWN

2019-03-27 09:05:43.783 Service_2 DOWN

2019-03-27 09:06:13.332 Service_3 DOWN

2019-03-27 09:07:32.118 Service_1 UP

2019-03-27 09:07:34.742 Service_1 DOWN

2019-03-27 09:07:40.743 Service_2 UP

2019-03-27 09:07:41.594 Service_1 UP

2019-03-27 09:07:45.288 Service_1 DOWN

2019-03-27 09:07:51.441 Service_1 UP

2019-03-27 09:08:33.786 Service_1 DOWN

2019-03-27 09:09:22.265 Service_1 UP

2019-03-27 09:14:10.797 Service_4 DOWN

2019-03-27 09:15:39.382 Service_3 UP

2019-03-27 09:14:16.802 Service_4 UP

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello friends,

Sorry for the delay, I was absent from work for 3 weeks but I'm back at it now!

I'm really thankful for your answers, and testing it atm!

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Also, I strongly suspect that whatever black magic that this app uses could be hijacked for this purpose:

https://splunkbase.splunk.com/app/3120/

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You can use the concurrency command to do this. Let's assume that _time is set from Stop (this is the best thing to do; fix it if you used Start) and that you are OK with 15-minute granularity. Run this search for any even multiple of 15-minutes:

| makeresults

| addinfo

| eval info_min_time=strftime(info_min_time, "%m/%d/%y:%H:%M:%S")

| eval info_max_time=strftime(info_max_time, "%m/%d/%y:%H:%M:%S")

| eval span="15m"

| eval duration=15*60

| map [search Your Base Search Here

| eval Start=strptime(Start, "%H:%M:%S")

| eval Stop=strptime(Stop, "%H:%M:%S")

| eval duration = Stop - Start

| appendpipe [|gentimes start=$info_min_time$ end=$info_max_time$ increment=$span$ | table starttime | rename starttime AS _time | eval duration=$duration$ | eval KeepMe="YES"]

| concurrency duration=duration start=Start

| search KeepMe="YES"

| stats count(eval(concurrency>1)) AS concurrency

| eval onSeconds = $duration$ * concurrency]

I wrote this without testing so there may be a bug or 2 there but the concept should work.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I am still unable to get this to work. It never identifies any concurrent durations.

anything you can see wrong here? These would be events ranging between 15 minutes and possibly many hours.

index=pd "log_entries{}.incident.status"=resolved "log_entries{}.incident.priority.name"=P1

| rename log_entries{}.* as *

| dedup incident.incident_number

| eval Start=strptime('incident.created_at',"%Y-%m-%dT%H:%M:%SZ")

| eval Stop=strptime('incident.last_status_change_at', "%Y-%m-%dT%H:%M:%SZ")

| eval duration = Stop - Start

| eval _time=Stop

| appendpipe

[| stats min(_time) as mintime max(_time) as maxtime

| rename COMMENT as "set your duration here"

| eval duration=900

| eval mintime= duration*floor(mintime/duration)

| eval Start=mvrange(mintime,maxtime+duration,duration)

| table Start duration

| mvexpand Start

| eval _time=Start

| eval KeepMe=duration

]

| concurrency duration=duration start=Start

| search isnotnull(KeepMe)

| stats count(eval(concurrency>1)) AS concurrency max(KeepMe) as duration

| eval onSeconds = duration * concurrency

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If it is broken, don't worry; this is so crazy that @DalJeanis will not be able to resist testing it. He will let us know if it works or not. He grades all of my homework.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@woodcock - Looking at a partial credit grade here. Add this into the gentimes subsubsearch

| eval Start=_time

And then it works as advertised.

lines 8-10 I'd probably just pseudocode, since they are so heavily dependent on the results of the underlying search.

[search Your Base Search Here giving _time Stop Start duration

where Start and Stop are in epoch time and duration is in seconds, and Stop = _time

... and, once I got finish whittling, by changing the gentimes to mvrange based on the min and max values for _time, then addinfo from the outer query became unnecessary so the map could be dropped, leaving this...

search Your Base Search Here giving _time Stop Start duration

where Start and Stop are in epoch time and duration is in seconds, and Stop = _time

| appendpipe

[| stats min(_time) as mintime max(_time) as maxtime

| rename COMMENT as "set your duration here"

| eval duration=900

| eval mintime= duration*floor(mintime/duration)

| eval Start=mvrange(mintime,maxtime+duration,duration)

| table Start duration

| mvexpand Start

| eval _time=Start

| eval KeepMe=duration

]

| concurrency duration=duration start=Start

| search isnotnull(KeepMe)

| stats count(eval(concurrency>1)) AS concurrency max(KeepMe) as duration

| eval onSeconds = duration * concurrency

...which, after retaining the duration figure through Keepme, beats my prior version for elegance.

Therefore, this one gets a team grade of B+.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Here's one way.

Let's suppose you want to measure time in 5 minute increments. (300 seconds)

Your query that gets start time and stop time (both in epoch) for each event

| table starttime stoptime

| rename COMMENT as "Set your span increment here"

| eval myspan=300

| rename COMMENT as "this creates a separate record for each time span that an event is running"

| eval mytimes=mvrange(myspan*floor(starttime/myspan), myspan*floor(endtime/myspan)+1, myspan)

| mvexpand mytimes

| eval _time = mytimes

| rename COMMENT as "the first stats sums up the number of running events by timespan"

| stats count as running max(myspan) as myspan by _time

| rename COMMENT as "the second stats counts how many there were for each day"

| bin _time span=1d

| stats count as numspans max(myspan) as myspan by _time

| eval duration=numspans*myspan

| table _time duration

This creates some test data for you.

| makeresults

| eval thetimes="1504753200,1504757703 1504756820,1504757100 1504753800,1504756400 1504758200,1504758703 1504787400,1504792620 1504785600,1504790020"

| makemv thetimes

| mvexpand thetimes

| makemv delim="," thetimes

| eval starttime=mvindex(thetimes,0), endtime=mvindex(thetimes,1)

| table starttime endtime