Multiple stats file from summary index

Hello Splunkers!!

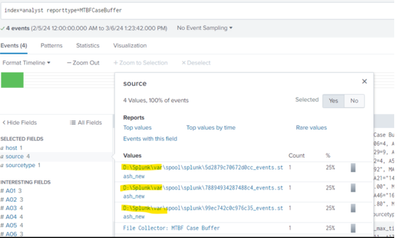

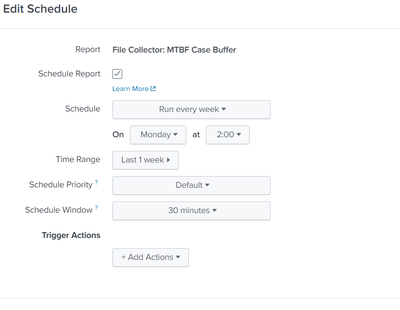

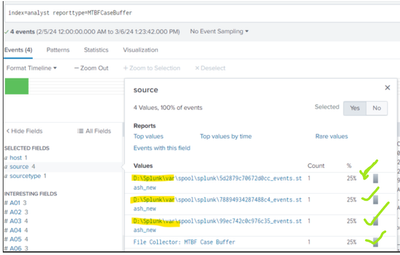

My report runs every week and writes its results to the summary index (index=analyst). As the screenshot below shows, several stash files are created for this particular report, whereas other reports do not produce multiple stash files.

Report with multiple stash files.

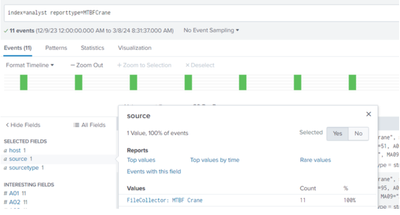

Report with no duplicate stash files.

Please help me with this.

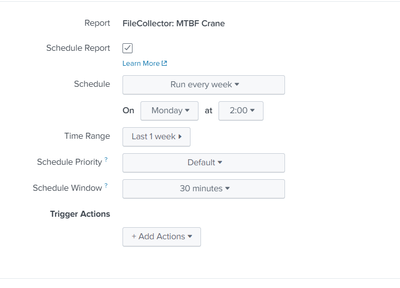

It is not clear whether there is an issue - to me it looks like the reports that were run on Feb 29th were done manually / ad hoc to back-fill the summary index for the earlier weeks before the schedule was set up and running correctly.

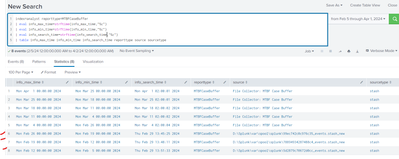

I investigated further and found that the info_search_time is the same for all the stash files. Does this have any significance?
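For anyone wanting to reproduce this check, a minimal sketch of a search that groups the summary events by source and by the time the generating search ran. It assumes the summary events carry the usual `info_search_time` and `search_name` fields; the 90-day window and the `search_ran_at`, `first_event` and `last_event` names are illustrative choices, not taken from the thread.

```spl
index=analyst earliest=-90d
| eval search_ran_at=strftime(info_search_time, "%Y-%m-%d %H:%M:%S")
| stats count min(_time) AS first_event max(_time) AS last_event BY source search_name search_ran_at
| convert ctime(first_event) ctime(last_event)
```

If several sources share one `search_ran_at` value but cover different event time ranges, that would be consistent with the searches having been launched together in a single session rather than on separate weekly schedule runs.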

What is the issue?

Stash files are used by Splunk to serialise the events so that they can be indexed.

The source can be overridden in the collect command.
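As a rough illustration of that override, the sketch below sets an explicit `source` on `collect` so the summary events are labelled with a readable name instead of a spool file path. The base search (`index=main sourcetype=access_combined`) and the source name `weekly_host_counts` are hypothetical placeholders; only `index=analyst` comes from this thread.

```spl
index=main sourcetype=access_combined earliest=-7d@d latest=@d
| stats count BY host
| collect index=analyst source="weekly_host_counts"
```

Adding `testmode=true` to `collect` should let you preview the results without writing anything to the summary index.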

These are from two different reports - if you are interested, you should look at the settings for those reports to see the differences in how they are sent to the summary index.

As for the times: where they are almost identical, this is likely to be due to a cron job, which potentially comes from a Unix-based system, whereas the sources with more widely varying times look like they come from a Windows-based system, which doesn't usually have cron.

Both saved searches run at the same time. In your view, is this causing the issue?

@ITWhisperer Is it possible to check from the _audit index who ran the ad hoc search that back-filled the summary index?

@ITWhisperer

What caused the creation of these "D:\Splunk\var\spool\splunk\99ec742c0c976c35_events.stash_new" files? I expected the source to be the name of the report rather than a spool file.

Are stash spool files created when a saved search is run ad hoc or as a backfill, but not when it runs on its schedule?

The stash files are usually created by the collect command.

Depending on your retention settings, you may be able to find out who ran the report from your _audit index.
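A minimal sketch of such an audit lookup, assuming the ad hoc search string contained an explicit `collect` and that the audit events are still within retention. The 30-day window is a placeholder; narrow it to the day the backfill appears to have run.

```spl
index=_audit action=search info=granted search="*collect*" earliest=-30d
| table _time user savedsearch_name search_id search
| sort _time
```

As a rule of thumb, a `search_id` that starts with `scheduler_` points to a scheduled run, while a plain numeric one usually indicates an ad hoc run, so the `user` on those rows is the person to ask.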

Manual runs of the search that collect into the summary index create those stash files. This is unrelated to the occurrence of duplicate events. The events are split equally across all sources (25% each), as you can see below. Is that correct?

These are consistent with the info_search_time graphic you shared earlier - is that what you are asking?

@ITWhisperer I think the answer you posted earlier is the most suitable one for my question:

"it looks like the reports that were run on Feb 29th were done manually / ad hoc to back-fill the summary index for the earlier weeks before the schedule was set up and running correctly."