no line breaks in CSV log file

Using Splunk 7.2.1.

Hello, I'm ingesting an iotop I/O log that's in CSV format (using a forwarder to send the log to the indexer).

Here's what the log looks like:

09:24:53, 1709, root, 59.43 K/s, 0.00 B/s, 0.06, 0.00, sshd:

09:24:54, 20152, root, 0.00 B/s, 11.88 K/s, 0.00, 0.00, splunkd-p8089

09:24:57, 168, root, 0.00 B/s, 27.75 K/s, 0.00, 0.08,

09:25:01, 223, root, 932.67 K/s, 0.00 B/s, 0.16, 1.12,

09:25:01, 389, root, 1095.39 K/s, 0.00 B/s, 0.27, 0.78, NetworkManager

09:25:01, 388, polkitd, 1543.86 K/s, 0.00 B/s, 0.11, 0.35, polkitd--no-debug

09:25:01, 1, root, 1928.83 K/s, 0.00 B/s, 0.24, 0.27, systemd--switched-root--system--deserialize

09:25:01, 365, root, 694.54 K/s, 0.00 B/s, 0.17, 0.21,

09:25:01, 730, root, 388.94 K/s, 0.00 B/s, 0.04, 0.15, rsyslogd-n

09:25:01, 305, root, 130.97 K/s, 3.97 K/s, 0.00, 0.07,

09:25:01, 366, dbus, 746.13 K/s, 0.00 B/s, 0.12, 0.06, dbus-daemon--system--address=systemd:--nofork--nopidfile

09:25:01, 731, root, 174.63 K/s, 7.94 K/s, 0.02, 0.06, rsyslogd-n[rs:main

09:25:01, 402, root, 464.35 K/s, 0.00 B/s, 0.64, 0.02, NetworkManager--no-daemon

09:25:01, 361, polkitd, 202.41 K/s, 0.00 B/s, 0.16, 0.01, polkitd

09:25:01, 396, root, 182.56 K/s, 0.00 B/s, 0.03, 0.01, crond

09:25:02, 1, root, 39.67 K/s, 0.00 B/s, 0.06, 0.00, systemd--switched-root--system--deserialize

09:25:02, 31444, root, 31.74 K/s, 0.00 B/s, 0.01, 0.00, sshd:

In Splunk, each event ends up containing several consecutive lines; the log is never broken into one event per line.

I tried playing around with /opt/splunkforwarder/etc/apps/myapp/local/props.conf.

I added both a regex and LINE_BREAKER, but it doesn't line-break this simple CSV log:

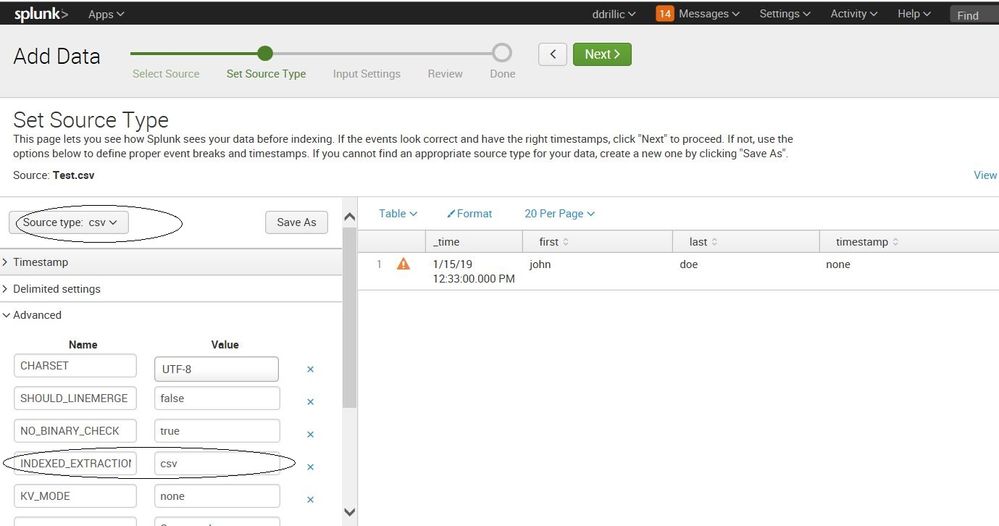

[iotop]

INDEXED_EXTRACTIONS = CSV

CHARSET = AUTO

KV_MODE = none

SHOULD_LINEMERGE = false

NO_BINARY_CHECK = true

LINE_BREAKER = ([\r\n]+)

pulldown_type = true

Still can't get it to parse the log correctly. Am I missing something? Thanks.

I ran an error check:

index="_internal" log_level=WARN OR log_level=ERROR

and it turns out it was spitting out errors about failed timestamp parsing.

I fixed it by adding a props.conf on my indexers at /opt/splunk/etc/system/local/props.conf and restarting the Splunk master; events are coming in OK now.

DATETIME_CONFIG=CURRENT

[iotop]

LINE_BREAKER=([\r\n]+)

SHOULD_LINEMERGE=false

NO_BINARY_CHECK=true

DATETIME_CONFIG=CURRENT

#pulldown_type = 1
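
For anyone curious what the LINE_BREAKER pattern above does, here is a minimal Python sketch that only mimics the effect outside Splunk. The function name `break_events` is hypothetical, and the sketch assumes that the text matched by the first capture group is dropped between events, which is how props.conf describes LINE_BREAKER. It does not model timestamp handling.

```python
import re

# Same pattern as the LINE_BREAKER setting in the stanza above.
LINE_BREAKER = re.compile(r"([\r\n]+)")


def break_events(raw):
    """Split raw text into one event per line (illustration only, not Splunk code)."""
    # With one capture group, re.split returns [text, separator, text, ...],
    # so every second element is event text and the separators are skipped.
    parts = LINE_BREAKER.split(raw)
    return [part for part in parts[::2] if part.strip()]


# Three lines taken from the sample log in the question.
sample = (
    "09:24:53, 1709, root, 59.43 K/s, 0.00 B/s, 0.06, 0.00, sshd:\n"
    "09:24:54, 20152, root, 0.00 B/s, 11.88 K/s, 0.00, 0.00, splunkd-p8089\r\n"
    "\n"
    "09:24:57, 168, root, 0.00 B/s, 27.75 K/s, 0.00, 0.08,\n"
)

events = break_events(sample)
for event in events:
    print(repr(event))
```

Runs of `\r` and `\n`, including blank lines, count as a single break, so each log line becomes its own event.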

What version of Splunk are you using?

I am using 7.1.1, and when I manually upload the file there is an option to break the events at every line. It is on the "Set Source Type" page.

Give it a try.

When I try to ingest your sample data, I see only one problem:

When you set INDEXED_EXTRACTIONS = CSV, Splunk takes the first line in your file as the header for automatic field extraction.

I removed that line and restarted the forwarder, but the data still comes in bulked together:

[iotop]

LINE_BREAKER=([\r\n]+)

SHOULD_LINEMERGE=false

NO_BINARY_CHECK=true

pulldown_type = 1

You need INDEXED_EXTRACTIONS = CSV, and the CSV file needs a header line with the CSV field names.
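
One way to get such a header line is to add it when the log file is first written. Below is a minimal Python sketch of that idea. The column names in `HEADER` are my assumption based on the eight fields visible in the sample log, not something given in the thread, and `with_header` is a hypothetical helper.

```python
# Assumed names for the eight comma-separated fields in the sample log.
HEADER = "time,pid,user,disk_read,disk_write,swapin,io,command"


def with_header(log_text, header=HEADER):
    """Return log_text with the header as its first line, adding it only if missing."""
    lines = log_text.splitlines()
    if lines and lines[0].strip() == header:
        return log_text  # header already present, leave the text unchanged
    return header + "\n" + log_text


# Two lines taken from the sample log in the question.
sample = (
    "09:24:53, 1709, root, 59.43 K/s, 0.00 B/s, 0.06, 0.00, sshd:\n"
    "09:24:54, 20152, root, 0.00 B/s, 11.88 K/s, 0.00, 0.00, splunkd-p8089\n"
)

print(with_header(sample))
```

Changing the start of a file the forwarder already monitors may cause it to be read again, so it is probably safer to write the header before the file is first picked up.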

The indexer is doing the parsing of your events, so that's the right place for your props.conf 🙂

Maybe reread this section: https://docs.splunk.com/Documentation/Splunk/7.2.3/Indexer/Howindexingworks