
How to import some columns from a CSV

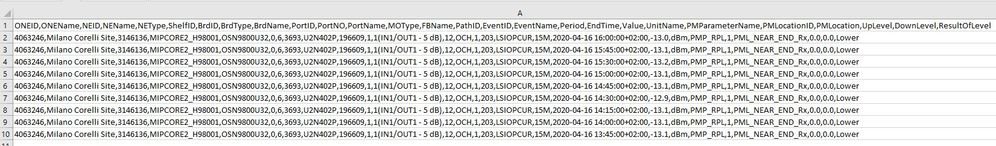

I have a CSV file to import via Add Data > Monitor.

I would like to import only some of the columns (not all of them) before indexing.

Is this possible?

Thanks

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

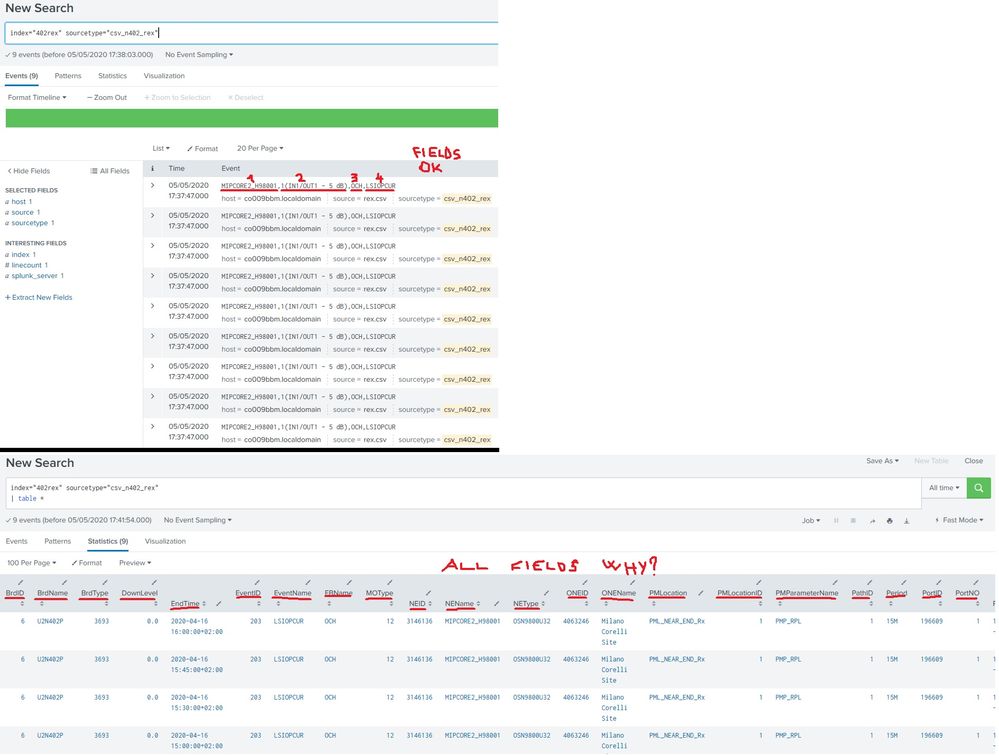

I've created a new CSV to run a test, and put this in props.conf:

    [csv_n402_rex]
    BREAK_ONLY_BEFORE_DATE =
    DATETIME_CONFIG =
    FIELD_DELIMITER = ,
    INDEXED_EXTRACTIONS = csv
    KV_MODE = none
    LINE_BREAKER = ([\r\n]+)
    NO_BINARY_CHECK = true
    SEDCMD-rex = s/([^,]+).([^,]+).([^,]+).([^,]+).([^,]+).([^,]+).([^,]+).([^,]+).([^,]+).([^,]+).([^,]+).([^,]+).([^,]+).([^,]+).([^,]+).([^,]+).([^,]+).([^,]+).([^,]+).([^,]+).([^,]+).([^,]+).([^,]+).([^,]+).([^,]+).([^,]+).*/\4,\12,\14,\17\n/
    SHOULD_LINEMERGE = false
    category = Structured
    description = Comma-separated value format. Set header and other settings in "Delimited Settings"
    disabled = false
    pulldown_type = 1

In search it works, but when I add `| table *` to the search, it shows me all the fields. Why?

I suppose the regex only affects the view, so I'm still indexing all the fields.
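To see what SEDCMD-rex does to the text of one event, it can be replayed with Python's `re.sub`. This is only an approximation of Splunk's sed handling (Python's regex engine stands in for Splunk's), and it assumes a row of 26 comma-separated columns, one per `([^,]+)` group in the expression; the `v1`..`v26` values are made up.

```python
import re

def build_pattern(n_groups: int) -> str:
    # Same shape as SEDCMD-rex: n "([^,]+)" groups joined by "." plus a trailing ".*".
    # Each "." consumes the comma between two columns.
    return ".".join([r"([^,]+)"] * n_groups) + r".*"

# Keep columns 4, 12, 14 and 17, as in the props.conf above.
# \g<N> is Python's unambiguous spelling of the \N backreference.
REPL = r"\g<4>,\g<12>,\g<14>,\g<17>\n"

def apply_sedcmd(raw: str, n_groups: int = 26) -> str:
    # Assumption: 26 groups/columns. count=1 mirrors sed without the g flag.
    return re.sub(build_pattern(n_groups), REPL, raw, count=1)

line = ",".join(f"v{i}" for i in range(1, 27))  # hypothetical row: v1,v2,...,v26
print(repr(apply_sedcmd(line)))  # 'v4,v12,v14,v17\n'
```

Only the event text is rewritten here; a line too short to match the 26 groups is returned unchanged.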


Because INDEXED_EXTRACTIONS = csv is applied before SEDCMD-rex.

`| table *` displays all extracted fields.
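The reasoning can be illustrated with a toy model in Python. It is not Splunk's implementation; it only assumes the ordering stated in this reply: the CSV columns become fields first, and the SEDCMD-style substitution rewrites the raw text afterwards. The header, sample row, and regex are hypothetical.

```python
import csv
import io
import re

def index_event(header: str, raw: str, sed_pattern: str, sed_repl: str):
    """Toy model of the ordering described above, not Splunk's real pipeline."""
    # Step 1 (INDEXED_EXTRACTIONS = csv): the CSV line is split into named fields.
    names = next(csv.reader(io.StringIO(header)))
    values = next(csv.reader(io.StringIO(raw)))
    fields = dict(zip(names, values))
    # Step 2 (SEDCMD): the substitution rewrites only the raw text, afterwards.
    new_raw = re.sub(sed_pattern, sed_repl, raw, count=1)
    return new_raw, fields

# Hypothetical sample: drop columns f1 and f2 from the raw event.
header = "_time,f1,f2,f3"
raw = "2024-01-01 00:00:00,a,b,c"
new_raw, fields = index_event(header, raw, r"^([^,]*),[^,]*,[^,]*,", r"\1,")

print(new_raw)         # 2024-01-01 00:00:00,c
print(sorted(fields))  # ['_time', 'f1', 'f2', 'f3']
```

In this model the trimmed raw text and the full set of fields coexist, which is consistent with `| table *` still listing every column.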


So I'm indexing all the fields?


Yes, I guess so.


I tried to reverse the order as you said, but the order of the settings seems to be set automatically, as in the props.conf file above.


How about transforms.conf?


It's not present; I'll try to configure it.


Do this in props.conf:

    [YourSourcetypeHere]
    SEDCMD-trim_raw = s/([^,]+),(?:[^,]+,){2}(.*$)/\1,\2/

For proof, try this:

    | makeresults
    | fields - _time
    | eval _raw="_time,f1,f2,f3,f4,f5,f6,f7,f8,f9,f10"
    | rex mode=sed "s/([^,]+),(?:[^,]+,){2}(.*$)/\1,\2/"
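The same substitution can be checked outside Splunk with Python's `re.sub`, assuming Python's regex engine treats this pattern the way Splunk's sed does for plain ASCII lines. Note that the replacement needs a comma between the two groups: with `\1\2` the first value and the fourth value run together. The data row is hypothetical.

```python
import re

# Keep column 1, drop columns 2 and 3, keep the rest.
PATTERN = r"([^,]+),(?:[^,]+,){2}(.*$)"

def trim(raw: str, repl: str = r"\1,\2") -> str:
    # count=1: only the first match per event, like sed without the g flag.
    return re.sub(PATTERN, repl, raw, count=1)

header = "_time,f1,f2,f3,f4,f5,f6,f7,f8,f9,f10"
row = "2024-05-01 10:00:00,a,b,c,d"  # hypothetical event

print(trim(header))           # _time,f3,f4,f5,f6,f7,f8,f9,f10
print(trim(row))              # 2024-05-01 10:00:00,c,d
print(trim(header, r"\1\2"))  # _timef3,f4,f5,f6,f7,f8,f9,f10 (comma lost)
```

Because the header line goes through the same substitution, it keeps the same columns as the data rows.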


My rule on CSVs is this: if the file does NOT contain a timestamp, it should NOT be indexed (do not use Add Data); instead, it should be uploaded as a lookup. If you must index this data, use SEDCMD to skip (erase) columns in your data as it is indexed:

https://docs.splunk.com/Documentation/SplunkCloud/6.6.0/Data/Anonymizedata


Hi splunk6161,

I haven't tried this, but you could delete the columns you don't want to index using a SEDCMD setting.

For example, if you have a CSV like this:

    field1,field2,field3,field4,field5,field6
    aaa,bbb,ccc,ddd,eee,fff

and you don't want to index field4, you could add this to your props.conf stanza:

    [your_sourcetype]
    SEDCMD-alter = s/^((?:[^,]*,){3})[^,]*,/\1/

Try it

Bye.

Giuseppe
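A SEDCMD for this needs capture groups and a backreference, because `[^,]` matches only a single character and a character class written in the replacement is copied as literal text. The sketch below checks one such expression, `^((?:[^,]*,){3})[^,]*,` replaced by `\1`, on the sample rows with Python's `re.sub`; Python's regex engine standing in for Splunk's is an assumption.

```python
import re

# Capture the first three columns (with their commas) and drop the fourth.
PATTERN = r"^((?:[^,]*,){3})[^,]*,"

def drop_field4(raw: str) -> str:
    return re.sub(PATTERN, r"\1", raw, count=1)

print(drop_field4("field1,field2,field3,field4,field5,field6"))  # field1,field2,field3,field5,field6
print(drop_field4("aaa,bbb,ccc,ddd,eee,fff"))                    # aaa,bbb,ccc,eee,fff
```

In props.conf the same expression would be written as `SEDCMD-alter = s/^((?:[^,]*,){3})[^,]*,/\1/`; removing the column from both the header and the data keeps them aligned.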


It doesn't work.

I have 10 columns plus a "_time" column as the first column.

I would like to keep the first column, skip the second and the third, and keep the rest.

Is this correct for that scenario?

SEDCMD-alter=s/[^,],[^,],[^,],[^,],[^,],[^,],[^,],[^,],[^,],[^,],[^,]/[^,],,,[^,],[^,],[^,],[^,],[^,],[^,],[^,],[^,]/g

Thanks
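Replaying the expression from this post with Python's `re.sub` (an approximation of Splunk's sed) shows why nothing happens: each `[^,]` matches exactly one character, so the pattern cannot match a line whose fields are longer than one character, and the event is left unchanged. Even when it does match, the replacement writes the text `[^,]` literally. The single-character sample row is hypothetical.

```python
import re

# Pattern and replacement copied from the SEDCMD-alter line above.
PATTERN = "[^,],[^,],[^,],[^,],[^,],[^,],[^,],[^,],[^,],[^,],[^,]"
REPL = "[^,],,,[^,],[^,],[^,],[^,],[^,],[^,],[^,],[^,]"

def apply(raw: str) -> str:
    # re.sub replaces every match, like the g flag.
    return re.sub(PATTERN, REPL, raw)

header = "_time,f1,f2,f3,f4,f5,f6,f7,f8,f9,f10"
print(apply(header) == header)          # True: no match, line unchanged
print(apply("a,b,c,d,e,f,g,h,i,j,k"))   # the literal REPL text, not the kept values
```

A working version uses capture groups and backreferences, as in `s/([^,]+),(?:[^,]+,){2}(.*$)/\1,\2/`.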