
Why am I losing events when neither the cold path usage nor the max age limit is being reached?

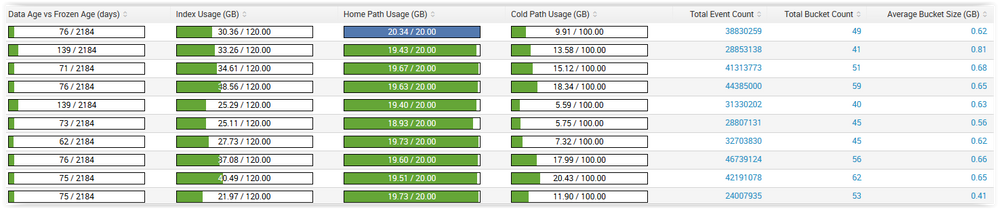

I have an index I'm using to backfill a large amount of data. While tracking the event count by source, I'm seeing Splunk throw away events by the millions, seemingly at random: I'll note the event count for one of my sources, check again 5 minutes later, and the number is over a million lower. None of the configured limits should be getting hit. The only one being hit is the warm (home) path limit, but that should simply roll buckets into the much larger allotment I gave to cold, and cold isn't close to full. Yet events are being thrown out left and right.

What can I look into here? I have trouble trusting the integrity of my data when event counts go backwards even though the cold path isn't close to full and the data age limit isn't close to being hit either.
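For reference, here is a sketch of one way to take the per-source event counts described above. It assumes the index is named `my_backfill_index`, which is a placeholder and not the real name. `tstats` counts events in the buckets that currently exist, so running it a few minutes apart and comparing the totals shows whether counts drop between runs.

```spl
| tstats count where index=my_backfill_index by source
| sort - count
```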


Try a search like:

```
index=_internal `indexerhosts` sourcetype=splunkd "BucketMover - will attempt to freeze"
| rex field=bkt "(rb_|db_)(?P<newestDataInBucket>\d+)_(?P<oldestDataInBucket>\d+)"
| eval newestDataInBucket=strftime(newestDataInBucket, "%+"), oldestDataInBucket = strftime(oldestDataInBucket, "%+")
| eval message=coalesce(message,event_message)
| table message, oldestDataInBucket, newestDataInBucket
```

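Borrowed from the Alerts for Splunk Admins app (savedsearches.conf on GitHub). `indexerhosts` is a search macro; if it isn't defined in your environment, replace it with a filter on your indexer hosts.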


It really does seem like it's throwing out everything on the cold path. My home path usage hovers between 19 and 20 GB (20 GB is the warm limit), but the cold path on several indexers shows 0 / 100, as if everything had been removed from cold. There should be plenty of actual free space on the cold path drive of each indexer (several TB free on each); I was being quite conservative with the 100 GB cold limit.
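One way to confirm what each indexer actually holds per bucket state is `dbinspect`. As before, `my_backfill_index` is a placeholder for the real index name. If cold really is empty while warm sits at its limit, the `cold` rows here will be missing or near zero as well.

```spl
| dbinspect index=my_backfill_index
| stats count AS buckets, sum(sizeOnDiskMB) AS sizeOnDiskMB, sum(eventCount) AS events BY splunk_server, state
| sort splunk_server, state
```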


As @gjanders mentioned, look at the search results and see what they say. You can add this to the end of the search to pull out the reason:

```
| rex field=message "\'\sbecause\s(?<why>.*+)" | table why
```
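A sketch that combines the two searches and summarizes freezes per indexer and reason. The second `rex` assumes the text after "because" begins with the name of the setting that triggered the freeze. That is an assumption about the message format, so check the `example` column to confirm it.

```spl
index=_internal sourcetype=splunkd "BucketMover - will attempt to freeze"
| eval message=coalesce(message, event_message)
| rex field=message "\'\sbecause\s(?<why>.*+)"
| rex field=why "^(?<reason>[A-Za-z_.]+)"
| stats count AS freezes, latest(why) AS example BY host, reason
```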

Also, having plenty of storage doesn't necessarily mean it's mounted where the index paths are configured to point.

It's worth double-checking.
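To see where each indexer resolves the index paths, and which limits are actually in effect, the index settings can be read over REST. `my_backfill_index` is a placeholder. `maxTotalDataSizeMB` is worth a look because it caps the whole index (hot, warm and cold together), independently of the per-path limits.

```spl
| rest splunk_server=* count=0 /services/data/indexes
| search title=my_backfill_index
| table splunk_server, title, homePath_expanded, coldPath_expanded, currentDBSizeMB, maxTotalDataSizeMB, frozenTimePeriodInSecs
```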


@gjanders I got about 540 results from that search. What do they imply?


Narrow it down to the index you mentioned and see if you still get results. The results imply that buckets are being frozen, and each message should say why.
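A sketch of the search narrowed to one index. It assumes the bucket path in `candidate` contains the index's directory name (by default the index name); `my_backfill_index` is a placeholder.

```spl
index=_internal sourcetype=splunkd "BucketMover - will attempt to freeze" candidate=*my_backfill_index*
| eval message=coalesce(message, event_message)
| table _time, host, candidate, message
```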


Is "candidate" the field I can use to narrow down by index? If so, it shows that none of these freezes occurred on that index.


Interesting. From memory, the candidate field is the bucket to be frozen.

Perhaps try a more generic search for "BucketMover" and the index name and see if anything comes up?
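A broader sketch that returns every BucketMover message mentioning the index, not only freeze attempts. `my_backfill_index` is a placeholder, and matching it as a plain search term assumes the name appears in the bucket path or message text.

```spl
index=_internal sourcetype=splunkd component=BucketMover my_backfill_index
| eval message=coalesce(message, event_message)
| table _time, host, log_level, message
```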


Do you have volume settings? How much free disk space do you have overall on your indexers?
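If indexes.conf defines `[volume:...]` stanzas with `maxVolumeDataSizeMB`, that cap applies to everything stored on the volume, so buckets on it can be frozen once the volume reaches its cap even if each index is under its own limits. For raw disk space per indexer, here is a sketch using the partitions-space REST endpoint. As far as I know, `capacity` and `free` are reported in MB, which is what the conversion below assumes.

```spl
| rest splunk_server=* /services/server/status/partitions-space
| eval capacityGB=round(capacity/1024, 1), freeGB=round(free/1024, 1)
| table splunk_server, mount_point, fs_type, capacityGB, freeGB
```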