
Splunk duplicates events from a custom-made JSON event file

What I'm doing: I process data on my own, then format all the information as JSON and append it to the end of a file that Splunk indexes.

The problem: instead of indexing only the information added since the file was last updated, Splunk indexes the whole file again as if it were completely new, which gives me duplicate events.

I tried appending the information without altering what was already in the file, but that doesn't seem to help. What would you do so that only the new information gets indexed?
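The thread doesn't show how the file is written, so the following is a minimal Python sketch under two assumptions: that the script currently rebuilds the file (for example, loading a JSON array, adding an item, and writing the whole array back), and that writing one JSON object per line is acceptable for this data. Splunk's file monitor remembers how far into a file it has read and keeps a checksum of data it has already seen; if a rewrite changes those bytes, Splunk can treat the file as new and index it again from the start. Opening the file in append mode only ever adds bytes at the end. The helper names `append_event`, `append_to_json_array`, and `is_pure_append` are hypothetical.

```python
import json
import os


def append_event(path, event):
    """Append one event as a single line of JSON (newline-delimited JSON).

    Mode "a" only adds bytes at the end of the file, so data that
    Splunk has already read is never modified.
    """
    line = json.dumps(event, separators=(",", ":"))  # compact: one event per line
    with open(path, "a", encoding="utf-8") as f:
        f.write(line + "\n")


def append_to_json_array(path, event):
    """Anti-pattern, shown for contrast (hypothetical): keep every event in
    one JSON array and rewrite the whole file. The closing "]" moves on
    every write, so bytes that were already on disk change."""
    events = []
    if os.path.exists(path):
        with open(path, encoding="utf-8") as f:
            events = json.load(f)
    events.append(event)
    with open(path, "w", encoding="utf-8") as f:  # "w" truncates and rewrites
        json.dump(events, f)


def is_pure_append(before: bytes, after: bytes) -> bool:
    """True if `after` equals `before` plus new bytes at the end, i.e.
    nothing that was previously on disk was altered."""
    return len(after) > len(before) and after.startswith(before)
```

Comparing the file's bytes before and after a write with `is_pure_append` is a quick way to confirm the writer never touches earlier data; with the array-rewrite pattern it returns `False`, because the old closing bracket is overwritten.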


Hi @Vyber90,

I thought you were seeing duplicate fields under Interesting Fields, but you are actually seeing duplicate raw events. Could you please share the inputs.conf stanza for that JSON file?


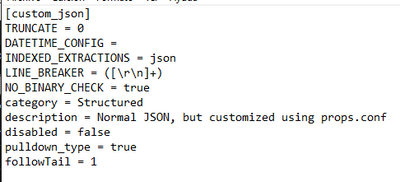

Here you go:

To avoid changing the built-in JSON sourcetype, I created this new sourcetype. The only lines I added manually are TRUNCATE and followTail (the latter was suggested as a solution in this thread).


Can you add the following setting to your stanza in inputs.conf?

followTail = 1

Ref: Splunk Answers

Check the pros and cons of followTail here: inputs.conf

If you find my solution/debugging steps fruitful, an upvote would be appreciated.


Hi @Vyber90,

If you are parsing the JSON yourself, you should disable Splunk's automatic key-value (KV) extraction.

You can disable it by adding KV_MODE = none to your sourcetype stanza in props.conf:

[your_sourcetype]

KV_MODE = none


I'm sorry to say it doesn't work for me (I put that line in a new sourcetype stanza in props.conf, which I believe is where it goes).

I added that line, and today I appended new information after the last event in the JSON file. As described in the question, instead of 7 events (the 6 existing ones plus the new one), I now have 13 (the 6 existing events, 6 duplicates of them, and the new event).

By the way, I don't know whether this is obvious, so I'll mention it.

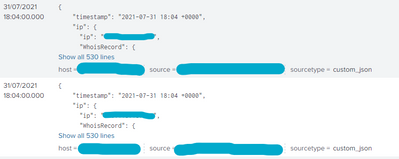

Duplicate events would look like this:

The duplicated events are exact copies: they contain the same information, the same timestamps, and the same number of lines, and dedup even filters them out.
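To narrow down whether the copies exist in the file itself or come from Splunk re-reading it, one option is to count identical events in the file on disk. The sketch below is a hypothetical Python helper, assuming the file holds JSON objects either back to back (one per line or pretty-printed across several lines) or inside a single top-level array. If it reports no duplicates while Splunk shows 13 events, the duplication most likely happened at ingestion rather than in the writer.

```python
import json
from collections import Counter


def iter_json_values(text):
    """Yield every top-level JSON value in `text` (hypothetical helper).

    Handles objects written back to back, whether one per line or
    pretty-printed, and expands a top-level array into its items.
    """
    decoder = json.JSONDecoder()
    pos = 0
    n = len(text)
    while True:
        # raw_decode does not skip leading whitespace, so do it here
        while pos < n and text[pos].isspace():
            pos += 1
        if pos >= n:
            return
        value, end = decoder.raw_decode(text[pos:])
        pos += end
        if isinstance(value, list):
            yield from value
        else:
            yield value


def count_duplicates(text):
    """Return {canonical_event: count} for events that appear more than once.

    Events are compared after re-serializing with sorted keys, so key
    order and whitespace differences do not hide a duplicate.
    """
    counts = Counter(json.dumps(v, sort_keys=True) for v in iter_json_values(text))
    return {k: c for k, c in counts.items() if c > 1}
```

For example, `count_duplicates(open("events.json", encoding="utf-8").read())`, where `events.json` is a placeholder for the monitored file, returns an empty dict when the file contains no repeated events.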