
Help needed with Props.conf

Hello,

Can someone please help me with props.conf for the log below?

Timestamp Process TID Area Category EventID Level Message Correlation

03/23/2023 06:10:20.73 w1wp.exe (0x12G8) 0x1F8D SharePoint Foundation Authentication Authorization ag6al Medium OAuth app principal Name=i:0i.t|ms.sp.ext|92d4232b-12w3-57d5-b038-a2c108d5dd18@9a211ce9-5e5a-4dab-8256-6748538485fc, IsAppOnlyRequest=True, UserIdentityName=0i.t|00000003-0110-0gg1-ce00-000000000000|app@sharepoint, ClaimsCount=11 0dd5c32e-121d-adcd-9284-75f41116e8c5

03/23/2023 07:11:27.53 w2wp.exe (0x17F8) 0x1Z74 SharePoint Foundation General af8sw Medium ListRootFolderUrl=/tops/ops/abcd/Forms, CAML query: <View Scope="RecursiveAll"><Query><Where><Eq><FieldRef Name="ID" /><Value Type="Counter">21170</Value></Eq></Where></Query><ViewFields><FieldRef Name="Position_x0010_3" /><FieldRef Name="ID" /></ViewFields><RowLimit Paged="TRUE">1</RowLimit></View> 0dd9b01e-002d-adcd-b28b-91e7hg71fdd9

03/23/2023 09:11:27.73 w8wp.exe (0x25F0) 0x1E9C SharePoint Foundation App Management tempo Medium AppMngMinDb: Got SubscriptionId 0c6554b-12d0-400e-91c6-2bd25af4be5b from partion key. SubscriptionId 00000000-1111-1110-0000-000000444400 is in the SPServiceContext. 0mm9b62e-202d-adcd-9277-75f41666e8c0

03/23/2023 08:45:27.73 w0wp.exe (0x17F8) 0x1V4C SharePoint Foundation App Management tempo Medium AppMngMinDb: Executing query: dbo.proc_AM_GetAppPrincipalPerms on Legacy db with context subId: 00990000-0440-0100-0000-004444400000 and compositeKeyId: 0c98423b-34d0-438m-91c6-2ac25av4ce5d 0dd9b21e-111d-adcd-3333-7111111c0

Thanks


What have you tried so far? What separates each field?

If this reply helps you, Karma would be appreciated.


Hi @richgalloway,

I tried the following props.conf:

TIME_FORMAT =%m/%d/%Y %H:%M:%S.%2N

TIME_PREFIX = ^

LINE_BREAKER = ([\r\n]+)

SHOULD_LINEMERGE = false

I am getting the following error for the header line:

"failed to parse timestamp, defaulting to file modtime". How can I fix this?


Hi @Roy_9,

I don't think you can use a %N width other than the documented ones:

| Variable | Description |
|---|---|
| %N | The number of subsecond digits. The default is %9N. You can specify %3N = milliseconds, %6N = microseconds, %9N = nanoseconds. |

Hope this helps you, have a good day,

Fabrizio


Hello @LRF / @richgalloway

It isn't working even after changing the time format to %N or %3N. I am seeing the following error:

Could not use strptime to parse timestamp from "Timestamp process TID Area".

Any help would be highly appreciated.


Hi @Roy_9,

My bad, I didn't understand where you were having the issue.

Splunk gives you that error because the header line doesn't contain a timestamp, so there is nothing it can interpret.

To prevent this, remove the header with SEDCMD (in props.conf), or combine props.conf and transforms.conf to discard that specific line.
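As a rough illustration of why only the header line fails, the Python sketch below uses `datetime.strptime` as a stand-in for Splunk's timestamp parser (an analogy, not Splunk's actual code). Python's `%f` accepts one to six fractional digits, so it plays the role of the subsecond specifier here. The event line is a shortened sample line from the question: it parses, while the header has no date in it, so the function returns `None`.

```python
from datetime import datetime

# Python stand-in for TIME_FORMAT = %m/%d/%Y %H:%M:%S.%<n>N
# (%f accepts 1 to 6 fractional digits, so ".73" is read as 730000 microseconds)
PY_FORMAT = "%m/%d/%Y %H:%M:%S.%f"

def parse_leading_timestamp(line):
    """Parse the first two whitespace-separated tokens as a timestamp.

    Returns a datetime, or None when the line has no timestamp (like the header).
    """
    candidate = " ".join(line.split()[:2])
    try:
        return datetime.strptime(candidate, PY_FORMAT)
    except ValueError:
        return None

header = "Timestamp Process TID Area Category EventID Level Message Correlation"
event = "03/23/2023 06:10:20.73 w1wp.exe (0x12G8) 0x1F8D SharePoint Foundation"

print(parse_leading_timestamp(header))  # None
print(parse_leading_timestamp(event))   # 2023-03-23 06:10:20.730000
```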

Hope this helps you, have a nice day,

Fabrizio


Thank you for the info. Can you help me with the SEDCMD setting to remove the header? I don't know how to use it in props.conf.


Place the following in the props.conf file:

SEDCMD-removeheader = s/^Timestamp\sProcess\sTID\sArea\sCategory\sEventID\sLevel\sMessage\sCorrelation.*//g

The general syntax, as explained in the props.conf documentation:

* replace - s/regex/replacement/flags
* regex is a perl regular expression (optionally containing capturing groups).
* replacement is a string to replace the regex match. Use \n for back references, where "n" is a single digit.
* flags can be either: g to replace all matches, or a number to replace a specified match.

Hope this helps, have a nice day,

Fabrizio
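The SEDCMD expression in the post above can be sanity-checked outside Splunk. This Python sketch applies the same regex with `re.sub` and an empty replacement, which approximates `s/regex//g` for this case (Python's `re` engine is not identical to Splunk's, so treat it only as a quick check). Note that the header text becomes an empty string: the line itself is still there.

```python
import re

# Same pattern as SEDCMD-removeheader, used with an empty replacement
HEADER_RE = re.compile(
    r"^Timestamp\sProcess\sTID\sArea\sCategory\sEventID\sLevel\sMessage\sCorrelation.*"
)

def remove_header(event_text):
    # count=0 replaces every match, like the "g" flag
    return HEADER_RE.sub("", event_text, count=0)

header = "Timestamp Process TID Area Category EventID Level Message Correlation"
event = "03/23/2023 06:10:20.73 w1wp.exe (0x12G8) 0x1F8D SharePoint Foundation"

print(repr(remove_header(header)))    # ''
print(remove_header(event) == event)  # True
```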


Thank you so much, @LRF. Just a quick question:

What if we want to make these events structured, so that each field shows its own value? For example, under Process the process value should be displayed, under TID the TID value should be displayed, and the same for Area, Category, EventID, Level, Message, and Correlation.


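With your dataset, it is difficult to use INDEXED_EXTRACTIONS or anything similar in props.conf: as posted, the fields appear to be separated by spaces, but some values (for example "SharePoint Foundation" and "App Management") contain spaces themselves.

For reference:

INDEXED_EXTRACTIONS = <CSV|TSV|PSV|W3C|JSON|HEC>

Personally, I would find a pattern that parses the logs at search time and create a field extraction for each field.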
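Such a pattern could look like the Python sketch below, checked against one of the sample lines from the question. It rests on assumptions not stated in the thread: that Area values come from a known list (only "SharePoint Foundation" appears in the sample) and that Level is one of a known set of SharePoint ULS levels (only "Medium" appears in the sample). Both `KNOWN_AREAS` and `KNOWN_LEVELS` are hypothetical and would need adjusting to the real data. Anchoring on those lists is what lets the space-containing Category value be separated from EventID. The same idea could be ported to a search-time `rex` or `EXTRACT-` regex in Splunk, where named groups are usually written `(?<name>...)` rather than Python's `(?P<name>...)`.

```python
import re

# Hypothetical lists: only "SharePoint Foundation" and "Medium" appear in the sample.
# Extend them to match the Area and Level values in your real logs.
KNOWN_AREAS = ["SharePoint Foundation"]
KNOWN_LEVELS = ["Unexpected", "Monitorable", "High", "Medium", "Verbose", "VerboseEx"]

ULS_RE = re.compile(
    r"^(?P<timestamp>\d{2}/\d{2}/\d{4} \d{2}:\d{2}:\d{2}\.\d+) "
    r"(?P<process>\S+ \(\w+\)) "
    r"(?P<tid>\S+) "
    r"(?P<area>" + "|".join(map(re.escape, KNOWN_AREAS)) + r") "
    # Category may contain spaces: match lazily up to "<EventID> <Level> "
    r"(?P<category>.+?) "
    r"(?P<eventid>\S+) "
    r"(?P<level>" + "|".join(KNOWN_LEVELS) + r") "
    # Message is free text; Correlation is the last token on the line
    r"(?P<message>.*) "
    r"(?P<correlation>\S+)$"
)

def extract_fields(line):
    """Return a dict of named fields, or None if the line doesn't match (e.g. the header)."""
    match = ULS_RE.match(line)
    return match.groupdict() if match else None

sample = (
    "03/23/2023 09:11:27.73 w8wp.exe (0x25F0) 0x1E9C SharePoint Foundation App Management "
    "tempo Medium AppMngMinDb: Got SubscriptionId 0c6554b-12d0-400e-91c6-2bd25af4be5b from "
    "partion key. SubscriptionId 00000000-1111-1110-0000-000000444400 is in the "
    "SPServiceContext. 0mm9b62e-202d-adcd-9277-75f41666e8c0"
)
fields = extract_fields(sample)
print(fields["category"], "|", fields["eventid"], "|", fields["level"])
# App Management | tempo | Medium
```

The header line does not start with a date, so `extract_fields` returns `None` for it, which is another way to leave it out of the structured results.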

Hope this helps, have a nice day,

Fabrizio


I just applied the following to props.conf, but I am still seeing the same error; the header is not being removed.

SEDCMD-removeheader

Thanks


Hi @Roy_9 ,

have you specified the entire configuration?

SEDCMD-removeheader = s/^Timestamp\sProcess\sTID\sArea\sCategory\sEventID\sLevel\sMessage\sCorrelation.*//g

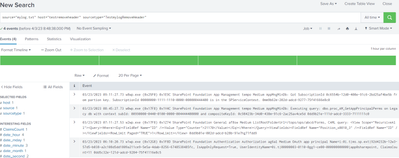

I tested it in the Add data preview and the header gets correctly removed as you can see below:

Also, header removal won't be applied to already ingested data

Hope this helps you, have a nice day,

Fabrizio


Yeah, for some reason the header is not getting removed. I am testing it manually by uploading the file through the Add Data section of the UI, so it is not already indexed.

If you don't mind, could you paste your props.conf stanza here so that I can compare it with mine and see if something is missing?


Note that SEDCMD replaces text - it doesn't delete events. So, in the end, you may still end up with an (empty) event with no timestamp.

To delete the header, use a transform or Ingest Action that routes the header to nullQueue.

props.conf:

[mysourcetype]

TRANSFORMS-noheader = removeHeader

transforms.conf:

[removeHeader]

REGEX = ^Timestamp

DEST_KEY = queue

FORMAT = nullQueue
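To make the difference described above concrete, here is a rough Python model of the two approaches (a simulation only, not how Splunk's pipeline is implemented). SEDCMD rewrites the text of each event, so the header survives as an empty event, while the nullQueue transform drops any event whose text matches `^Timestamp`. The event lines are shortened samples from the question.

```python
import re

# Pattern from SEDCMD-removeheader and REGEX from the removeHeader transform
SED_RE = re.compile(
    r"^Timestamp\sProcess\sTID\sArea\sCategory\sEventID\sLevel\sMessage\sCorrelation.*"
)
NULLQUEUE_RE = re.compile(r"^Timestamp")

events = [
    "Timestamp Process TID Area Category EventID Level Message Correlation",
    "03/23/2023 06:10:20.73 w1wp.exe (0x12G8) 0x1F8D SharePoint Foundation",
    "03/23/2023 07:11:27.53 w2wp.exe (0x17F8) 0x1Z74 SharePoint Foundation",
]

def apply_sedcmd(evts):
    # SEDCMD-style: rewrite the text of every event; no event is removed
    return [SED_RE.sub("", e) for e in evts]

def apply_nullqueue(evts):
    # nullQueue-style: any event whose text matches REGEX is discarded
    return [e for e in evts if not NULLQUEUE_RE.search(e)]

sed_result = apply_sedcmd(events)
print(len(sed_result), repr(sed_result[0]))  # 3 ''
print(len(apply_nullqueue(events)))          # 2
```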



Hi @richgalloway ,

I agree with the transforms/props solution.

I further tested the SEDCMD solution and saw that (at least on my instance) is working as expected.

Is there any known mechanism that automatically remove that empty line?

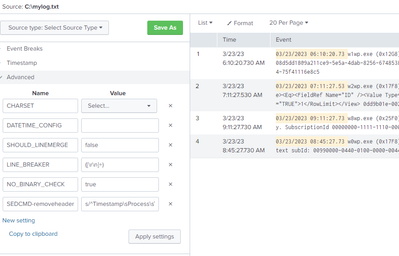

that's my sourcetype:

[TestmylogRemoveHeader]

DATETIME_CONFIG =

LINE_BREAKER = ([\r\n]+)

NO_BINARY_CHECK = true

SEDCMD-removeheader = s/^Timestamp\sProcess\sTID\sArea\sCategory\sEventID\sLevel\sMessage\sCorrelation.*//g

SHOULD_LINEMERGE = false

category = Custom

pulldown_type = true

Produced result:

Any suggestion is highly appreciated! I'll eventually use the props/transforms as preferred method for these cases.

Have a nice day!

Fabrizio


I agree with @LRF. The problem is that the log header line doesn't have a timestamp (and doesn't need one). You can ignore the error or, as suggested, remove the header.
