Join the Conversation

- Find Answers

- :

- Splunk Administration

- :

- Getting Data In

- :

- Re: Getting duplicate record after uploading json ...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

I have uploaded a json file to splunk and using spath command to get output, but the output shows two rows for a single record.

The json file sample is:

[

{

"id": 707860,

"name": "Hurzuf",

"country": "UA",

"timestamp":"2017-09-02T06:44:14,799 MDT",

"coord": {

"lon": 34.283333,

"lat": 44.549999

},

"ports":[

{

"port": 1,

"utilization": 140,

"error": {

"tx": 1000.00,

"rx": 500

}

},

{

"port": 2,

"utilization": 110,

"error": {

"tx": 1002.00,

"rx": 420

}

}

]

},

{

"id": 519188,

"name": "Novinki",

"country": "RU",

"timestamp":"2017-09-03T06:50:14,799 MDT",

"coord": {

"lon": 37.666668,

"lat": 55.683334

},

"ports":[

{

"port": 1,

"utilization": 120,

"error": {

"tx": 1020.00,

"rx": 400

}

},

{

"port": 2,

"utilization": 120,

"error": {

"tx": 1002.00,

"rx": 400

}

}

]

}]

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @sawgata12345,

I think your each event contain multiple records.And each record has it's own timestamp country name id fields moreover ports field also contain multiple values like port: 1 & port:2. So you have to write a search which can maintain relation of each field of port and relative id.

Your Sample Event:

[{

"id": 707860,

"name": "Hurzuf",

"country": "UA",

"timestamp": "2017-09-02T06:44:14,799 MDT",

"coord": {

"lon": 34.283333,

"lat": 44.549999

},

"ports": [{

"port": 1,

"utilization": 140,

"error": {

"tx": 1000.00,

"rx": 500

}

},

{

"port": 2,

"utilization": 110,

"error": {

"tx": 1002.00,

"rx": 420

}

}]

},

{

"id": 519188,

"name": "Novinki",

"country": "RU",

"timestamp": "2017-09-03T06:50:14,799 MDT",

"coord": {

"lon": 37.666668,

"lat": 55.683334

},

"ports": [{

"port": 1,

"utilization": 120,

"error": {

"tx": 1020.00,

"rx": 400

}

},

{

"port": 2,

"utilization": 120,

"error": {

"tx": 1002.00,

"rx": 400

}

}]

}]

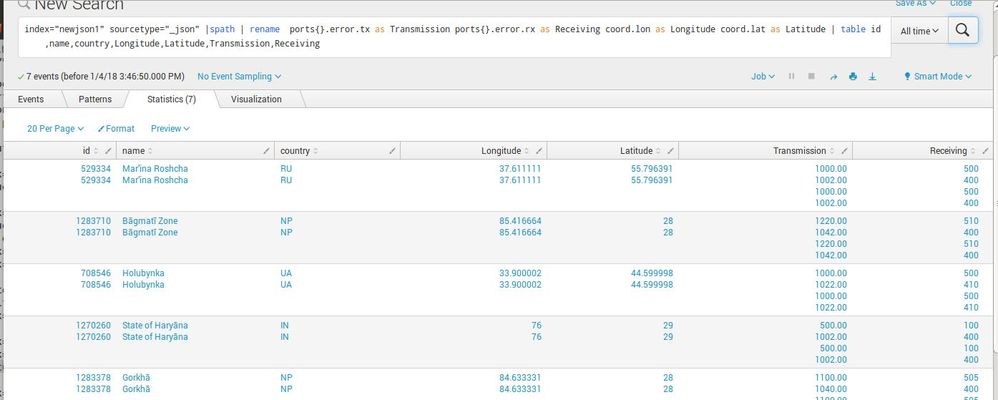

Can you please try below search:

index="newjson1" sourcetype="_json"

| spath {} output=even

| stats count by even

| eval _raw=even

| spath

| rename coord.lat as coord_lat coord.lon as coord_lon ports{}.error.rx as ports_error_rx ports{}.error.tx as ports_error_tx ports{}.port as ports_port ports{}.utilization as ports_utilization

| eval tempField=mvzip(mvzip(mvzip(ports_error_tx,ports_error_rx),ports_port),ports_utilization)

| stats count by tempField timestamp country name id

| eval ports_error_tx=mvindex(split(tempField,","),0), ports_error_rx=mvindex(split(tempField,","),1),ports_port=mvindex(split(tempField,","),2), ports_utilization=mvindex(split(tempField,","),3) | sort timestamp id

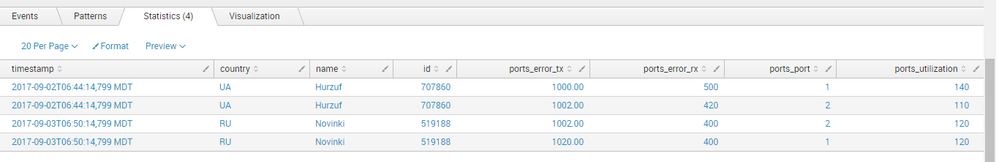

| table timestamp country name id ports_error_tx ports_error_rx ports_port ports_utilization

My Sample Search:

| makeresults

| eval _raw="[ { \"id\": 707860, \"name\": \"Hurzuf\", \"country\": \"UA\", \"timestamp\":\"2017-09-02T06:44:14,799 MDT\", \"coord\": { \"lon\": 34.283333, \"lat\": 44.549999 }, \"ports\":[ { \"port\": 1, \"utilization\": 140, \"error\": { \"tx\": 1000.00, \"rx\": 500 } }, { \"port\": 2, \"utilization\": 110, \"error\": { \"tx\": 1002.00, \"rx\": 420 } } ] }, { \"id\": 519188, \"name\": \"Novinki\", \"country\": \"RU\", \"timestamp\":\"2017-09-03T06:50:14,799 MDT\", \"coord\": { \"lon\": 37.666668, \"lat\": 55.683334 }, \"ports\":[ { \"port\": 1, \"utilization\": 120, \"error\": { \"tx\": 1020.00, \"rx\": 400 } }, { \"port\": 2, \"utilization\": 120, \"error\": { \"tx\": 1002.00, \"rx\": 400 } } ] }]"

| spath {} output=even

| stats count by even

| eval _raw=even

| spath

| rename coord.lat as coord_lat coord.lon as coord_lon ports{}.error.rx as ports_error_rx ports{}.error.tx as ports_error_tx ports{}.port as ports_port ports{}.utilization as ports_utilization

| eval tempField=mvzip(mvzip(mvzip(ports_error_tx,ports_error_rx),ports_port),ports_utilization)

| stats count by tempField timestamp country name id

| eval ports_error_tx=mvindex(split(tempField,","),0), ports_error_rx=mvindex(split(tempField,","),1),ports_port=mvindex(split(tempField,","),2), ports_utilization=mvindex(split(tempField,","),3) | sort timestamp id

| table timestamp country name id ports_error_tx ports_error_rx ports_port ports_utilization

Happy Splunking

Thanks

Kamlesh

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @sawgata12345,

I think your each event contain multiple records.And each record has it's own timestamp country name id fields moreover ports field also contain multiple values like port: 1 & port:2. So you have to write a search which can maintain relation of each field of port and relative id.

Your Sample Event:

[{

"id": 707860,

"name": "Hurzuf",

"country": "UA",

"timestamp": "2017-09-02T06:44:14,799 MDT",

"coord": {

"lon": 34.283333,

"lat": 44.549999

},

"ports": [{

"port": 1,

"utilization": 140,

"error": {

"tx": 1000.00,

"rx": 500

}

},

{

"port": 2,

"utilization": 110,

"error": {

"tx": 1002.00,

"rx": 420

}

}]

},

{

"id": 519188,

"name": "Novinki",

"country": "RU",

"timestamp": "2017-09-03T06:50:14,799 MDT",

"coord": {

"lon": 37.666668,

"lat": 55.683334

},

"ports": [{

"port": 1,

"utilization": 120,

"error": {

"tx": 1020.00,

"rx": 400

}

},

{

"port": 2,

"utilization": 120,

"error": {

"tx": 1002.00,

"rx": 400

}

}]

}]

Can you please try below search:

index="newjson1" sourcetype="_json"

| spath {} output=even

| stats count by even

| eval _raw=even

| spath

| rename coord.lat as coord_lat coord.lon as coord_lon ports{}.error.rx as ports_error_rx ports{}.error.tx as ports_error_tx ports{}.port as ports_port ports{}.utilization as ports_utilization

| eval tempField=mvzip(mvzip(mvzip(ports_error_tx,ports_error_rx),ports_port),ports_utilization)

| stats count by tempField timestamp country name id

| eval ports_error_tx=mvindex(split(tempField,","),0), ports_error_rx=mvindex(split(tempField,","),1),ports_port=mvindex(split(tempField,","),2), ports_utilization=mvindex(split(tempField,","),3) | sort timestamp id

| table timestamp country name id ports_error_tx ports_error_rx ports_port ports_utilization

My Sample Search:

| makeresults

| eval _raw="[ { \"id\": 707860, \"name\": \"Hurzuf\", \"country\": \"UA\", \"timestamp\":\"2017-09-02T06:44:14,799 MDT\", \"coord\": { \"lon\": 34.283333, \"lat\": 44.549999 }, \"ports\":[ { \"port\": 1, \"utilization\": 140, \"error\": { \"tx\": 1000.00, \"rx\": 500 } }, { \"port\": 2, \"utilization\": 110, \"error\": { \"tx\": 1002.00, \"rx\": 420 } } ] }, { \"id\": 519188, \"name\": \"Novinki\", \"country\": \"RU\", \"timestamp\":\"2017-09-03T06:50:14,799 MDT\", \"coord\": { \"lon\": 37.666668, \"lat\": 55.683334 }, \"ports\":[ { \"port\": 1, \"utilization\": 120, \"error\": { \"tx\": 1020.00, \"rx\": 400 } }, { \"port\": 2, \"utilization\": 120, \"error\": { \"tx\": 1002.00, \"rx\": 400 } } ] }]"

| spath {} output=even

| stats count by even

| eval _raw=even

| spath

| rename coord.lat as coord_lat coord.lon as coord_lon ports{}.error.rx as ports_error_rx ports{}.error.tx as ports_error_tx ports{}.port as ports_port ports{}.utilization as ports_utilization

| eval tempField=mvzip(mvzip(mvzip(ports_error_tx,ports_error_rx),ports_port),ports_utilization)

| stats count by tempField timestamp country name id

| eval ports_error_tx=mvindex(split(tempField,","),0), ports_error_rx=mvindex(split(tempField,","),1),ports_port=mvindex(split(tempField,","),2), ports_utilization=mvindex(split(tempField,","),3) | sort timestamp id

| table timestamp country name id ports_error_tx ports_error_rx ports_port ports_utilization

Happy Splunking

Thanks

Kamlesh

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@kamlesh

This query as you suggested gives me "No result" after executing.

what is this _raw=even(this "_raw" according to your query you have stored the whole json content but for me the data is already uploaded and indexed)

index="newjson1" sourcetype="_json"

| spath {} output=even

| stats count by even

| eval _raw=even

| spath

| rename coord.lat as coord_lat coord.lon as coord_lon ports{}.error.rx as ports_error_rx ports{}.error.tx as ports_error_tx ports{}.port as ports_port ports{}.utilization as ports_utilization

| eval tempField=mvzip(mvzip(mvzip(ports_error_tx,ports_error_rx),ports_port),ports_utilization)

| stats count by tempField timestamp country name id

| eval ports_error_tx=mvindex(split(tempField,","),0), ports_error_rx=mvindex(split(tempField,","),1),ports_port=mvindex(split(tempField,","),2), ports_utilization=mvindex(split(tempField,","),3) | sort timestamp id

| table timestamp country name id ports_error_tx ports_error_rx ports_port ports_utilization

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @sawgata12345,

| eval _raw=even

| spath

I'm overwriting _raw with new generated event from even field. So next statement spath will execute as per expectation. This is because your given event contains multiple records.

Have you tried to execute this search in parts?

like ..

1)

index="newjson1" sourcetype="_json"

| spath {} output=even

2)

index="newjson1" sourcetype="_json"

| spath {} output=even

| stats count by even

3)

index="newjson1" sourcetype="_json"

| spath {} output=even

| stats count by even

| eval _raw=even

| spath

Can you please execute above searches and give me output?

Thanks

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @sawgata12345,

If you have indexed file twice and if it is possible to clean index then clean it and while indexing new file add crcSalt in inputs.conf so that splunk won't index duplicate file.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@nikita_p

i cleared the index and uploaded fresh data and added crcSalt in inputs.conf. Even then the rows are coming twice. This could be because of the multiple port details per json event. But in that case if 50 port details then the row gets repeated 50 times. Its not good right?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Have you uploaded files twice ? Maybe previously as well ? Have you tried this query index="newjson1" sourcetype="_json" | dedup _raw| spath ..... ?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@harsmarvania57

I cleaned the index and uploaded the file once fresh, but even then its same. Even dedup _raw before the |spath also giving the same result. Is it because of multiple port details in each single json event?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Found the issue, remove | spath from your query and it will display only single value not duplicate.