
Re: Visualization of COVID-19 with splunk


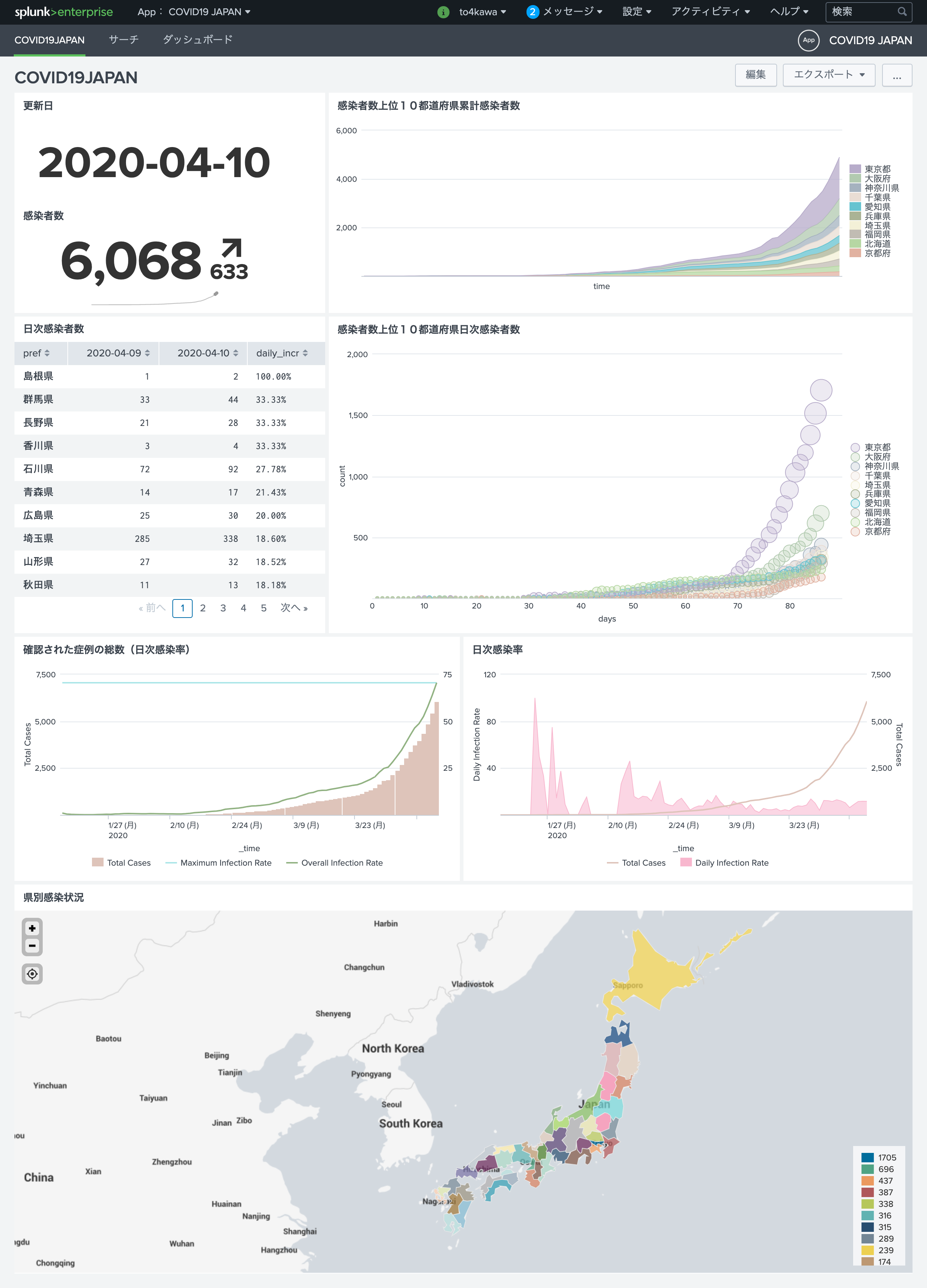

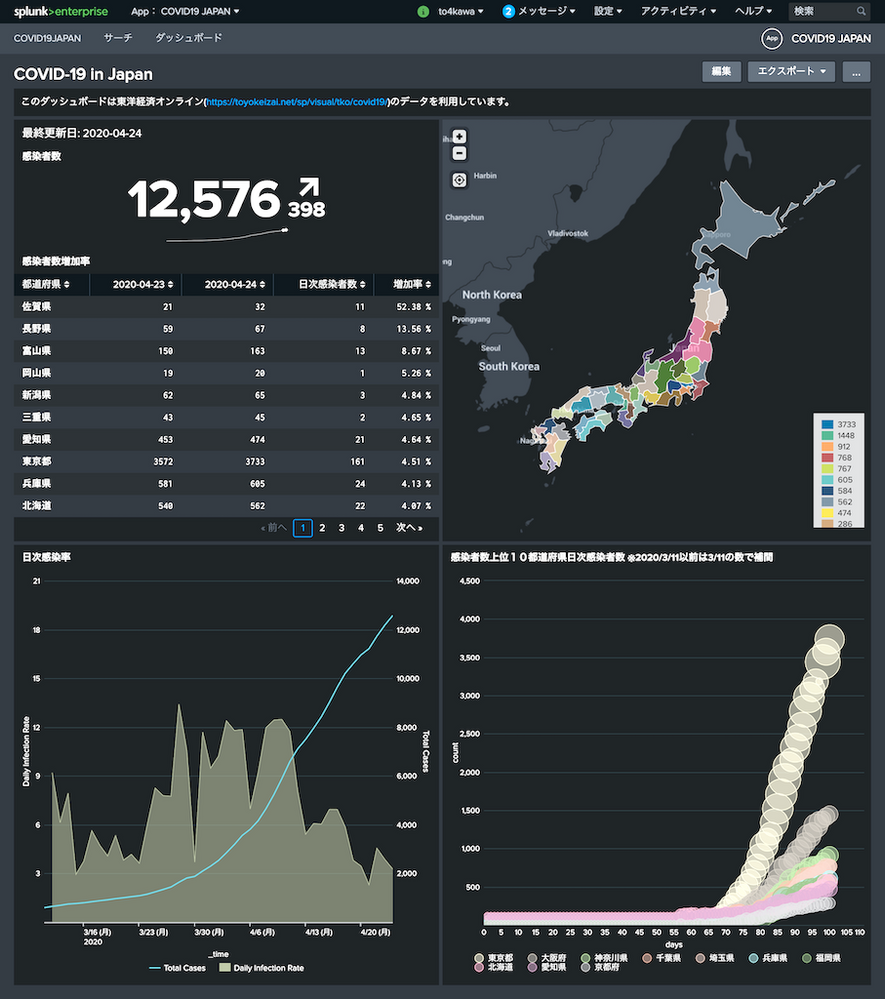

If you want to analyze COVID-19 data, you need to create or collect the data.

- If your country is the United States or China: use the Johns Hopkins data at https://github.com/CSSEGISandData/COVID-19

- If your country is any other country: D.I.Y. (do it yourself).
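The Johns Hopkins time series are wide CSVs: one row per province/state and one column per date. A minimal sketch of summing one country's rows into a daily series, assuming the `Province/State,Country/Region,Lat,Long,<dates>` header layout with `m/d/yy` dates; the function name and sample rows are made up for illustration.

```python
import csv
import io
from datetime import date, datetime

# Made-up rows in the assumed JHU time-series layout:
# Province/State, Country/Region, Lat, Long, then one column per date (m/d/yy).
SAMPLE = """Province/State,Country/Region,Lat,Long,3/1/20,3/2/20
A,China,0,0,10,12
B,China,0,0,3,4
,Italy,0,0,5,7
"""


def country_series(csv_text: str, country: str) -> list[tuple[date, int]]:
    """Sum all province/state rows of one country into (date, count) pairs."""
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader)
    # Columns after Lat/Long are dates such as "3/1/20"
    dates = [datetime.strptime(d, "%m/%d/%y").date() for d in header[4:]]
    totals = [0] * len(dates)
    for row in reader:
        if len(row) > 1 and row[1] == country:
            for i, value in enumerate(row[4:]):
                totals[i] += int(value or 0)  # treat empty cells as 0
    return list(zip(dates, totals))


print(country_series(SAMPLE, "China"))
```

Countries with provinces (China, the US) collapse to one national series this way; for other countries there is only one row, which is why regional maps need other data.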

It would be nice to have geographic data as well (e.g. geo_us_states).

- If your country is the United States: Splunk already has it (geo_us_states).

- If your country is any other country: D.I.Y.

Does anyone know the procedure?

- I don't know. I asked a question here, but in the end I had to do almost all of it myself.

If you just want to know the current situation, there are other good sites.

If you want to predict what the future will be from the current situation, you can use Splunk.

If you want to search and show data for your country, here is a list of information.

If you have any questions, leave a comment and I will respond.

Thank you


I would love to collaborate on a Japan dashboard @to4kawa. Please reach out to me on Slack and let's see what we can work on together!


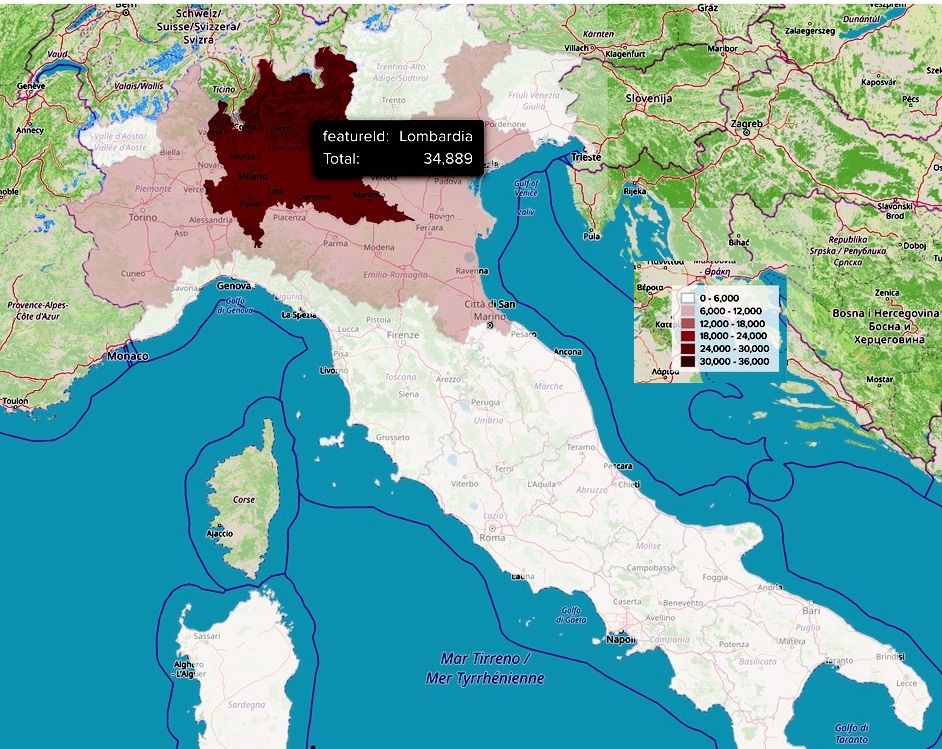

Italy_region.csv:

featureId,abbreviation
Abruzzo,ABR
Apulia,PUG
Basilicata,BAS
Calabria,CAL
Campania,CAM
Emilia-Romagna,EMR
Friuli-Venezia Giulia,FVG
Lazio,LAZ
Liguria,LIG
Lombardia,LOM
Marche,MAR
Molise,MOL
Piemonte,PIE
Sardegna,SAR
Sicily,SIC
Toscana,TOS
Trentino-Alto Adige,TN
Umbria,UMB
Valle d'Aosta,VDA
Veneto,VEN

Italy region map data (GADM 3.6 shapefile): https://biogeo.ucdavis.edu/data/gadm3.6/shp/gadm36_ITA_shp.zip

| inputlookup geo_Italy
| fieldsummary
| table field values
| where field!="geom"

| field | values |
| --- | --- |
| count | [{"value":"0","count":20}] |
| featureCollection | [{"value":"geo_Italy","count":20}] |
| featureId | [{"value":"Abruzzo","count":1},{"value":"Apulia","count":1},{"value":"Basilicata","count":1},{"value":"Calabria","count":1},{"value":"Campania","count":1},{"value":"Emilia-Romagna","count":1},{"value":"Friuli-Venezia Giulia","count":1},{"value":"Lazio","count":1},{"value":"Liguria","count":1},{"value":"Lombardia","count":1},{"value":"Marche","count":1},{"value":"Molise","count":1},{"value":"Piemonte","count":1},{"value":"Sardegna","count":1},{"value":"Sicily","count":1},{"value":"Toscana","count":1},{"value":"Trentino-Alto Adige","count":1},{"value":"Umbria","count":1},{"value":"Valle d'Aosta","count":1},{"value":"Veneto","count":1}] |
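`geom geo_Italy` can only draw a region when the featureId coming out of Italy_region.csv is spelled exactly as in geo_Italy (for example "Sicily", not "Sicilia"). A small cross-check sketch, assuming you paste the featureId `values` JSON from the fieldsummary output above; the function name and the "Sicilia" row are hypothetical.

```python
import csv
import io
import json


def compare_feature_ids(region_csv: str, fieldsummary_values: str) -> tuple[set[str], set[str]]:
    """Return (ids only in the CSV, ids only in the geo lookup)."""
    # fieldsummary prints featureId values as a JSON list of {"value": ..., "count": ...}
    in_geo = {item["value"] for item in json.loads(fieldsummary_values)}
    in_csv = {row["featureId"] for row in csv.DictReader(io.StringIO(region_csv))}
    return in_csv - in_geo, in_geo - in_csv


# Made-up example: "Sicilia" in the CSV would not match "Sicily" in geo_Italy
csv_text = "featureId,abbreviation\nAbruzzo,ABR\nSicilia,SIC\n"
geo_json = '[{"value":"Abruzzo","count":1},{"value":"Sicily","count":1}]'
print(compare_feature_ids(csv_text, geo_json))
```

Both returned sets should be empty before you build the map.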

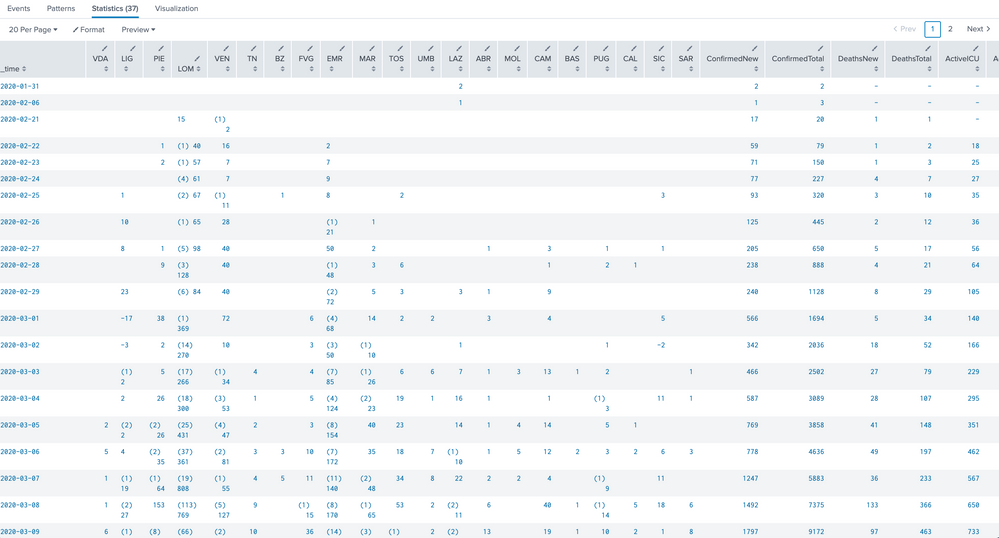

https://en.wikipedia.org/wiki/2020_coronavirus_pandemic_in_Italy

Italy data: I scraped this web page and made a CSV from it.

| inputlookup italy_covid19.csv
| eval _time=strptime(Date,"%F")
| table _time VDA LIG PIE LOM VEN TN BZ FVG EMR MAR TOS UMB LAZ ABR MOL CAM BAS PUG CAL SIC SAR Confirmed* Deaths* Active*

My script, wiki_get.py, fetches the web data:

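# wiki_get.py: scrape the regional case table from Wikipedia into a CSV
from io import StringIO
import pandas as pd
import requests
from bs4 import BeautifulSoup
url = 'https://en.wikipedia.org/wiki/2020_coronavirus_pandemic_in_Italy'
res = requests.get(url)
res.raise_for_status()  # stop early on an HTTP error
soup = BeautifulSoup(res.content, "lxml")
# The regional table is a collapsible wikitable; match on its class attribute
data = soup.find_all('table', class_="wikitable mw-collapsible")
# Parse the first matching table (pandas 2.1+ deprecates literal HTML strings, hence StringIO)
df = pd.read_html(StringIO(str(data)), keep_default_na=False)[0]
# Keep the first 28 columns: Date, 21 region columns, then national totals
df = df.iloc[:, 0:28]
df.columns = ['Date','VDA','LIG','PIE','LOM','VEN','TN','BZ','FVG','EMR','MAR','TOS','UMB','LAZ','ABR','MOL','CAM','BAS','PUG','CAL','SIC','SAR','ConfirmedNew','ConfirmedTotal','DeathsNew','DeathsTotal','ActiveICU','ActiveTotal']
# Keep only the per-day rows (Date starts with 2020)
daf = df[df['Date'].str.contains('^2020')]
# Upload this file to Splunk as a lookup (the searches here call it italy_covid19.csv)
daf.to_csv('Splunk_COVID19_Italy.csv', index=False)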
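The test query that follows strips parenthesised numbers and thousands separators with `replace()` before summing. If you prefer to clean the data in wiki_get.py instead, a minimal helper using the same regular expression could look like this (the name `clean_count` is hypothetical, and treating non-numeric cells as 0 is an assumption).

```python
import re

# Same idea as the SPL replace() calls: drop "(123)"-style annotations, then commas
_PAREN_NUMBER = re.compile(r"\(\d*\)")


def clean_count(cell: str) -> int:
    """Turn a scraped cell such as '1,234(56)' into 1234; non-numeric cells become 0."""
    text = _PAREN_NUMBER.sub("", cell).replace(",", "").strip()
    return int(text) if re.fullmatch(r"[0-9]+", text) else 0


print([clean_count(c) for c in ["1,234(56)", "17", "", "n/a"]])
```

Apply it to each region column with `Series.map`, e.g. `daf[col].astype(str).map(clean_count)`; a 0 for an empty cell does not change a sum.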

My test query:

| inputlookup italy_covid19.csv
| eval _time=strptime(Date,"%F")
| table _time VDA LIG PIE LOM VEN TN BZ FVG EMR MAR TOS UMB LAZ ABR MOL CAM BAS PUG CAL SIC SAR
| foreach VDA LIG PIE LOM VEN TN BZ FVG EMR MAR TOS UMB LAZ ABR MOL CAM BAS PUG CAL SIC SAR [eval <<FIELD>>=replace('<<FIELD>>',"\(\d*\)","")
| eval <<FIELD>>=replace('<<FIELD>>',"\,","")]
| transpose 0 header_field=_time column_name=abbreviation
| addtotals
| table Total abbreviation
| lookup italy_region.csv abbreviation OUTPUT featureId
| table Total featureId
| geom geo_Italy
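The transpose / addtotals / lookup / geom chain sums each region over all dates, attaches a featureId, and draws the map. A plain-Python sketch of the same logic (function name hypothetical, counts made up) makes one gap visible: Italy_region.csv has a row for TN but none for BZ, so the BZ total gets no featureId and geom cannot draw it.

```python
import csv
import io


def region_totals(rows: list[dict[str, int]], region_csv: str) -> tuple[dict[str, int], list[str]]:
    """Sum each region over all dates and key the totals by featureId.

    rows: one dict per date, {abbreviation: cleaned count}.
    Returns (totals by featureId, abbreviations with no row in the region CSV).
    """
    to_feature = {r["abbreviation"]: r["featureId"]
                  for r in csv.DictReader(io.StringIO(region_csv))}
    totals: dict[str, int] = {}
    for row in rows:  # like addtotals after the transpose: one sum per region
        for abbr, value in row.items():
            totals[abbr] = totals.get(abbr, 0) + value
    by_feature: dict[str, int] = {}
    unmapped = []
    for abbr, total in totals.items():
        if abbr in to_feature:  # what `lookup ... OUTPUT featureId` does
            feature = to_feature[abbr]
            by_feature[feature] = by_feature.get(feature, 0) + total
        else:
            unmapped.append(abbr)  # no featureId, so it never reaches the map
    return by_feature, sorted(unmapped)


region_csv = "featureId,abbreviation\nLombardia,LOM\nTrentino-Alto Adige,TN\n"
rows = [{"LOM": 5, "TN": 1, "BZ": 2}, {"LOM": 6, "TN": 3, "BZ": 4}]  # made-up counts
print(region_totals(rows, region_csv))
```

Trentino-Alto Adige is made up of the provinces of Trento (TN) and Bolzano (BZ). One option is to add a `Trentino-Alto Adige,BZ` row to the CSV (the sketch then adds both into one region) and, in SPL, run `stats sum(Total) AS Total BY featureId` before `geom`.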


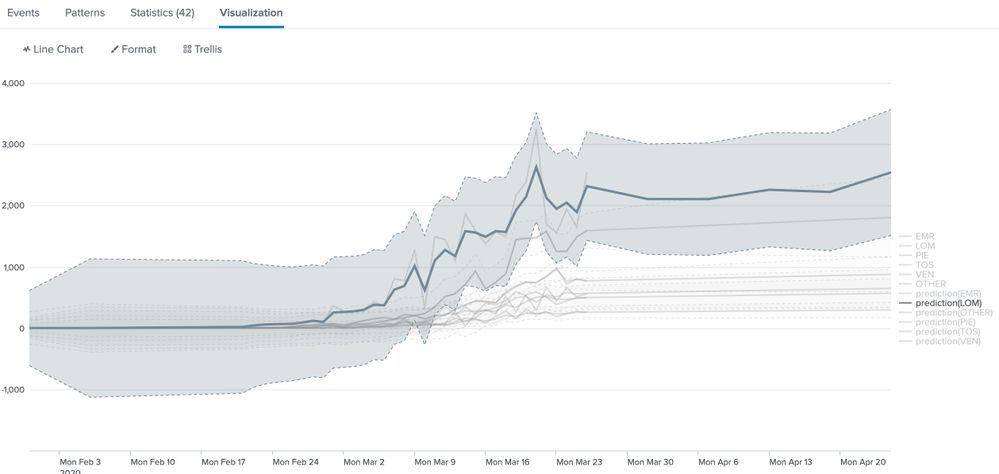

|makeresults
| eval _raw=" _time EMR LOM PIE TOS VEN OTHER
2020-01-31 2
2020-02-06 1
2020-02-21 15 2
2020-02-22 2 40 1 16
2020-02-23 7 57 2 7
2020-02-24 9 61 7
2020-02-25 8 67 2 11 5
2020-02-26 21 65 28 11
2020-02-27 50 98 1 40 16
2020-02-28 48 128 9 6 40 7
2020-02-29 72 84 3 40 41
2020-03-01 68 369 38 2 72 34
2020-03-02 50 270 2 10 15
2020-03-03 85 266 5 6 34 70
2020-03-04 124 300 26 19 53 65
2020-03-05 154 431 26 23 47 88
2020-03-06 172 361 35 18 81 111
2020-03-07 140 808 64 34 55 146
2020-03-08 170 769 153 53 127 220
2020-03-09 206 1280 42 74 205
2020-03-10 147 322 103 56 112 237
2020-03-11 206 1489 48 56 167 347
2020-03-12 208 1445 79 44 361 514
2020-03-13 316 1095 260 106 211 559
2020-03-14 381 1865 33 160 342 716
2020-03-15 449 1587 238 151 235 930
2020-03-16 429 1377 405 85 301 636
2020-03-17 409 1571 381 187 231 747
2020-03-18 594 1493 444 277 510 889
2020-03-19 689 2171 591 152 270 1449
2020-03-20 754 2380 529 311 547 1465
2020-03-21 737 3251 291 219 586 1473
2020-03-22 850 1691 668 265 505 1581
2020-03-23 980 1555 441 184 383 1246
2020-03-24 719 1942 654 238 443 1253
2020-03-25 800 1643 509 273 494 1491
2020-03-26 762 2543 560 254 493 1591"
| multikv forceheader=1
| eval _time=strptime(trim(time),"%F")
| table _time EMR LOM PIE TOS VEN OTHER
| foreach * [eval <<FIELD>> = if(match('<<FIELD>>',"\d+"),<<FIELD>>,null()) | fillnull <<FIELD>>]
| predict EMR LOM PIE TOS VEN OTHER

You can also try this with the Machine Learning Toolkit (MLTK) app.


- .shp to .kml:

- KML to geo lookup: https://docs.splunk.com/Documentation/Splunk/latest/Knowledge/Configuregeospatiallookups

- XPath: https://docs.splunk.com/Documentation/Splunk/latest/SearchReference/Xpath

A KML file may need an XPath expression to select the feature name.


pandas in Python:

- Renaming DataFrame columns: https://cmdlinetips.com/2018/03/how-to-change-column-names-and-row-indexes-in-pandas/

- Web scraping (read_html): https://pandas.pydata.org/pandas-docs/stable/user_guide/io.html#io-read-html

- grep-like row filtering: https://stackoverflow.com/questions/12625650/pandas-grep-like-function

- My script: https://github.com/to4kawa/Splunk_COVID19_Italy/blob/master/wiki_get.py
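The renaming and grep-like filtering steps from the links above can be tried without touching the network. A minimal sketch on a made-up DataFrame standing in for what `pd.read_html()` might return; the function name `keep_dated_rows` is hypothetical.

```python
import pandas as pd


def keep_dated_rows(df: pd.DataFrame, names: list[str]) -> pd.DataFrame:
    """Rename the first len(names) columns and keep rows whose Date starts with 2020."""
    out = df.iloc[:, : len(names)].copy()
    out.columns = names  # positional rename, as in wiki_get.py
    # grep-like row filter: a regex anchored with ^ on the Date column
    mask = out["Date"].astype(str).str.contains(r"^2020", regex=True)
    return out[mask]


# Made-up stand-in for a scraped table, including one non-date row
raw = pd.DataFrame([["2020-02-21", "1", "2"], ["Total", "1", "2"], ["2020-02-22", "3", "4"]])
print(keep_dated_rows(raw, ["Date", "LOM", "VEN"]))
```

The `.copy()` keeps the rename from touching the caller's frame.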


Scraping web pages with Splunk: https://www.splunk.com/en_us/blog/tips-and-tricks/splunking-web-pages.html

For Italy, the Wikipedia data is good.


Shapes of each country: https://gadm.org/download_country_v3.html

Only the .shp files are needed from the GADM data; Splunk could not accept GADM's KML and KMZ files.

Use the GDAL command-line tools or QGIS to convert .shp to .kml:

ogr2ogr -f "KML" '../geo_italy.kml' gadm36_ITA_1.shp

Then you can create the geo lookup with this XPath:

/Placemark/ExtendedData/SchemaData/SimpleData[@name='NAME_1']
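The ogr2ogr KML output stores each shapefile attribute as a `SimpleData` element under `ExtendedData/SchemaData` in every `Placemark`, which is what the XPath above selects; in Splunk that XPath goes in the lookup's `feature_id_element` setting in transforms.conf. Before uploading, a sketch to list the names that path will pick up, so you can compare them with featureId in Italy_region.csv. It assumes the KML 2.2 namespace ogr2ogr writes; the sample KML and the function name are made up.

```python
import xml.etree.ElementTree as ET

KML_NS = {"kml": "http://www.opengis.net/kml/2.2"}


def placemark_names(kml_text: str, field: str = "NAME_1") -> list:
    """Return the SimpleData value for `field` in each Placemark (None if absent)."""
    root = ET.fromstring(kml_text)
    names = []
    for placemark in root.iter(f"{{{KML_NS['kml']}}}Placemark"):
        # Same path as the lookup XPath, relative to the Placemark and namespaced
        node = placemark.find(
            f"kml:ExtendedData/kml:SchemaData/kml:SimpleData[@name='{field}']", KML_NS
        )
        names.append(node.text if node is not None else None)
    return names


SAMPLE = """<kml xmlns="http://www.opengis.net/kml/2.2"><Document><Folder>
<Placemark><ExtendedData><SchemaData schemaUrl="#gadm36_ITA_1">
<SimpleData name="GID_0">ITA</SimpleData>
<SimpleData name="NAME_1">Abruzzo</SimpleData>
</SchemaData></ExtendedData></Placemark>
</Folder></Document></kml>"""
print(placemark_names(SAMPLE))
```

For the real file, read '../geo_italy.kml' into a string and pass it instead of SAMPLE.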


KML for Germany, Austria, and Switzerland


There is an app for that. See https://splunkbase.splunk.com/app/4925/.

If this reply helps you, Karma would be appreciated.


Thank you, @richgalloway.

For China and the USA, the original data includes states or provinces; for other countries it doesn't.

There is no official geo_japan lookup.

Do other countries have both a geo lookup and data? I don't know.


I wish they would make the code for https://covid-19.splunkforgood.com/coronavirus__covid_19_ public.

And add a second page showing the data relative to each country's overall population.
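Showing the data relative to population is a per-capita normalisation: cases × 100,000 / population. A minimal sketch with hypothetical inputs (not real statistics); real population figures would have to come from a separate source joined on the country name, and the function name is made up.

```python
def per_100k(cases: dict[str, int], population: dict[str, int]) -> dict[str, float]:
    """Cases per 100,000 inhabitants for every country that has a population figure."""
    return {
        country: count * 100_000 / population[country]
        for country, count in cases.items()
        if population.get(country, 0) > 0  # skip missing or zero populations
    }


# Hypothetical inputs, not real statistics
print(per_100k({"A": 50, "B": 30, "C": 9}, {"A": 1_000_000, "B": 200_000}))
```

Countries without a population figure are dropped rather than shown as zero, so a missing join does not look like "no cases".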

thx

afx


Thank you, @afx.

I checked the queries; they are the same as in corona_virus.xml.

Maybe the dashboard was made with the Splunk Dashboards App (beta).


@afx

I made a Splunk dashboard; please see this link:

https://translate.google.co.jp/translate?hl=ja&sl=ja&tl=en&u=https%3A%2F%2Fqiita.com%2Ftoshikawa%2Fi...