Testing out the OpenTelemetry Collector With raw Data

This blog post is part of an ongoing series on OpenTelemetry.

The OpenTelemetry project is the second most active project of the Cloud Native Computing Foundation (CNCF), after Kubernetes. The CNCF is part of the Linux Foundation and, besides OpenTelemetry, also hosts Kubernetes, Jaeger, Prometheus, and Helm, among others.

OpenTelemetry defines a model to represent traces, metrics, and logs. Using this model, it orchestrates libraries in different programming languages to allow folks to collect this data. Just as important, the project delivers an executable named the OpenTelemetry Collector, which receives, processes, and exports data as a pipeline.

The OpenTelemetry Collector uses a component-based architecture, which allows folks to devise their own distribution by picking and choosing which components they want to support. At Splunk, we manage our distribution of the OpenTelemetry Collector in an open-source repository. The repository contains our configuration and hardening parameters as well as examples.

This blog post will walk you through using the Splunk HEC raw endpoint to send data to the OpenTelemetry Collector.

WARNING: WE ARE DISCUSSING A CURRENTLY UNSUPPORTED CONFIGURATION. When sending data to Splunk Enterprise, we currently only support use of the OpenTelemetry Collector in Kubernetes environments. As always, use of the Collector is fully supported to send data to Splunk Observability Cloud.

When Splunk announced the HTTP Event Collector (HEC), it created a way for folks to send structured data using a JSON payload describing an event taking place in their infrastructure. Typically, events contain the timestamp, source, sourcetype, and of course the payload of the event. Optionally, customers can define the index of the event so it is routed properly.
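To make the structured format concrete, here is a minimal Python sketch of such an event envelope. The function name build_hec_event and the sample values are ours, chosen for illustration; the field names (time, source, sourcetype, index, event) are the usual HEC event keys.

```python
import json
import time


def build_hec_event(message, source, sourcetype, index=None, timestamp=None):
    """Wrap a message in a HEC-style JSON envelope (illustrative helper)."""
    event = {
        # Epoch seconds; defaults to the current time when not provided.
        "time": time.time() if timestamp is None else timestamp,
        "source": source,
        "sourcetype": sourcetype,
        "event": message,
    }
    # The index is optional: only include it when the caller wants routing.
    if index is not None:
        event["index"] = index
    return json.dumps(event)


if __name__ == "__main__":
    print(build_hec_event("user logged in", "output", "demo", index="logs"))
```

With the raw endpoint described next, none of this wrapping is needed: the request body is the message itself.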

Sometimes though, you don’t have the luxury of encapsulating data. For those cases, Splunk HEC supports sending data to a specific path (/services/collector/raw), where the content of the request body is the raw message.

The OpenTelemetry Collector supports a simplified version of the raw endpoint, with the ability to accept data from POST requests to the /services/collector/raw path.

This is supported out of the box when you define a Splunk HEC receiver endpoint. Here is how you’d typically define such a receiver in your configuration file:

    receivers:
      splunk_hec:

In our case, we want to give it a sensible name. So let’s call it splunk_hec/raw:

    receivers:
      splunk_hec/raw:

From this receiver, we will send the data to a Splunk Enterprise instance. Let’s create that pipeline, and in particular its exporter:

    # Pipelines are declared under the top-level service key.
    service:
      pipelines:
        logs:
          receivers: [splunk_hec/raw]
          processors: [batch]
          exporters: [splunk_hec/logs]

    exporters:
      splunk_hec/logs:
        token: "00000000-0000-0000-0000-0000000000000"
        endpoint: "https://splunk:8088/services/collector"
        source: "output"
        index: "logs"
        max_connections: 20
        disable_compression: false
        timeout: 10s
        insecure_skip_verify: true

This exporter defines the configuration settings of a Splunk HEC endpoint. More documentation and examples are available in the OpenTelemetry Collector Contrib GitHub repository. This particular exporter sends data to the logs index, under the source “output”, to a Splunk instance reachable at the hostname splunk, with a HEC token that is just a set of zeroes.
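To picture what these settings amount to on the wire, here is a rough Python sketch of a request that a HEC client could build from them. It is not the exporter's actual implementation: the helper name build_export_request is hypothetical, and we only assume the standard HEC conventions of an "Authorization: Splunk <token>" header and a JSON event body.

```python
import json
import urllib.request

# Values copied from the exporter configuration above.
EXPORTER_SETTINGS = {
    "token": "00000000-0000-0000-0000-0000000000000",
    "endpoint": "https://splunk:8088/services/collector",
    "source": "output",
    "index": "logs",
}


def build_export_request(settings, message):
    """Build (but do not send) a HEC request for one message."""
    body = json.dumps(
        {
            "event": message,
            "source": settings["source"],
            "index": settings["index"],
        }
    ).encode("utf-8")
    return urllib.request.Request(
        settings["endpoint"],
        data=body,
        method="POST",
        headers={
            # HEC authenticates with the token in the Authorization header.
            "Authorization": "Splunk " + settings["token"],
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    request = build_export_request(EXPORTER_SETTINGS, "your message here")
    print(request.get_method(), request.full_url)
```

The request is only constructed here, never sent, so the sketch can be run without a Splunk instance.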

This mirrors the setup from our blog post on log ingestion. As in that post, we set up the Splunk Enterprise instance so it’s ready to accept data. We want to send data periodically to the collector over HTTP, so we are going to use curl to send simple messages to our instance.

Here is what a simple curl invocation would look like:

curl -XPOST -k localhost:8088/services/collector/raw -d "your message here"

- -XPOST tells curl to use the HTTP POST method.

- -k skips TLS certificate verification. It only matters when the endpoint is served over HTTPS; here we’re targeting localhost.

- localhost:8088/services/collector/raw is the URL of the endpoint published by the collector.

- -d "your message here" is the data being sent as the request body, which will be considered the log message.
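If you would rather send the message from a program than from curl, the same request can be sketched with Python's standard library. The helper names build_raw_request and send_raw are ours, and the sketch assumes the collector is reachable over plain HTTP, so there is no equivalent of -k.

```python
import urllib.request


def build_raw_request(base_url, message):
    """Build a POST to the raw endpoint with the message as the body."""
    return urllib.request.Request(
        base_url.rstrip("/") + "/services/collector/raw",
        data=message.encode("utf-8"),
        method="POST",
    )


def send_raw(base_url, message, timeout=10):
    """Send the message and return the HTTP status code."""
    request = build_raw_request(base_url, message)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


if __name__ == "__main__":
    # Assumes a collector is listening locally, as in the curl example.
    print(send_raw("http://localhost:8088", "your message here"))
```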

In our example, we use Docker Compose to create a curl service to periodically send messages to the collector. Here is the curl service:

    sender:
      image: ubuntu:latest
      command: 'bash -c "apt update && apt install curl -y && while true; do curl -XPOST -k otelcollector:8088/services/collector/raw -d \"$$(date) new message\"; sleep 5; done"'
      container_name: curl
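The loop in that command is simple enough to sketch in Python as well, in case you want to swap the curl container for a small script. This is an illustration, not part of the example repository: the function names are ours, the timestamp is ISO-formatted rather than the output of date, and the otelcollector hostname is the one used in the service above.

```python
import datetime
import time
import urllib.request

# Same target as the curl service: the collector's raw HEC endpoint.
RAW_URL = "http://otelcollector:8088/services/collector/raw"


def make_message(now=None):
    """Return a timestamped message, e.g. '2023-11-14T22:13:20 new message'."""
    now = datetime.datetime.now() if now is None else now
    return now.isoformat(timespec="seconds") + " new message"


def run_sender(url=RAW_URL, interval_seconds=5, iterations=None):
    """Post a message every interval_seconds; loop forever if iterations is None."""
    sent = 0
    while iterations is None or sent < iterations:
        request = urllib.request.Request(
            url, data=make_message().encode("utf-8"), method="POST"
        )
        try:
            urllib.request.urlopen(request, timeout=10).close()
        except OSError as error:
            # The collector may not be up yet; report and keep trying.
            print("send failed:", error)
        sent += 1
        time.sleep(interval_seconds)


if __name__ == "__main__":
    run_sender()
```

Catching OSError covers connection failures and HTTP errors alike, which keeps the sender alive while the collector is still starting.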

Our Docker Compose also runs the collector and Splunk Enterprise, similarly to our log ingestion example.

We have put this all together into an example that lives in Splunk’s OpenTelemetry Collector GitHub repository.

To run this example, you will need at least 4 gigabytes of RAM, as well as git and Docker Desktop installed.

First, check out the repository using git clone:

git clone https://github.com/signalfx/splunk-otel-collector.git

Using a terminal window, navigate to the folder examples/otel-logs-splunk-raw-hec.

Type:

docker-compose up

This will start the OpenTelemetry Collector, our curl sender generating messages, and Splunk Enterprise.

Your terminal will display information as Splunk starts. Eventually, Splunk will display the same information as in our last blog post to let us know it is ready.

Now, you can open your web browser and navigate to http://localhost:18000. You can log in as admin/changeme.

You will be met with a few prompts as this is a new Splunk instance. Make sure to read and acknowledge them, and open the default search application.

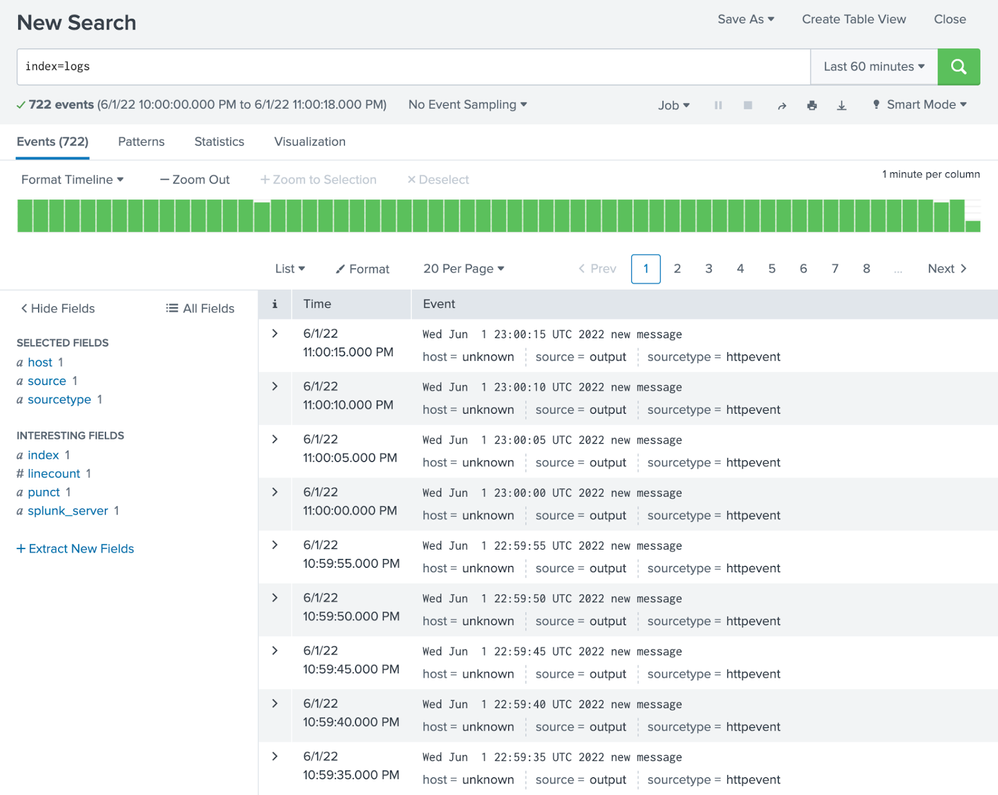

Now, we can interrogate our logs index. Enter the search index=logs to see the logs sent to Splunk.

To send your very own message and see it appear, use the curl command below:

curl -XPOST -k localhost:18088/services/collector/raw -d "your message here"

When you have finished exploring this example, you can press Ctrl+C to exit from Docker Compose. Thank you for following along! This concludes our raw log collection example. We have used the OpenTelemetry Collector to successfully ingest raw data and forward it to our Splunk Enterprise instance.

If you found this example interesting, feel free to star the repository! Just click the star icon in the top right corner. Any ideas or comments? Please open an issue on the repository.

— Antoine Toulme, Senior Engineering Manager, Blockchain & DLT
