AWS TA - S3 Generic input. Insane Memory Usage!

I have an interesting problem with the AWS TA, specifically with an S3 input, that I can't work out.

I am collecting CloudTrail logs from an S3 bucket (without SQS, because the existing environment was preconfigured). I am using a Generic S3 input, but I have limited the collection window to events written to S3 in roughly the last week.
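Conceptually, a collection window like this means skipping any object whose last-modified time falls before a cutoff. The following is a minimal sketch of that idea, not the TA's actual code: `within_window` is a hypothetical helper, and the listing entries are assumed to carry the `Key` and `LastModified` fields that S3's ListObjectsV2 returns.

```python
from datetime import datetime, timedelta, timezone
from typing import Iterable, Iterator


def within_window(objects: Iterable[dict], now: datetime, days: int = 7) -> Iterator[str]:
    """Yield the keys of objects modified within the last `days` days.

    Each object is assumed to look like a ListObjectsV2 entry:
    {"Key": str, "LastModified": timezone-aware datetime}.
    """
    cutoff = now - timedelta(days=days)
    for obj in objects:
        if obj["LastModified"] >= cutoff:
            yield obj["Key"]


if __name__ == "__main__":
    now = datetime(2020, 4, 20, tzinfo=timezone.utc)
    listing = [
        {"Key": "AWSLogs/old.json.gz", "LastModified": now - timedelta(days=30)},
        {"Key": "AWSLogs/new.json.gz", "LastModified": now - timedelta(days=2)},
    ]
    print(list(within_window(listing, now)))  # ['AWSLogs/new.json.gz']
```

Even with a filter like this, every object in the bucket still has to be listed, because ListObjectsV2 offers no server-side filter on modification time; the window only avoids fetching the older objects.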

As an aside, the bucket already has a lifecycle policy and holds only 90 days of logs.

I am running the TA on a heavy forwarder (HF) EC2 instance with the necessary IAM roles. Data is collected; however, there is a significant delay between logs being written to S3 and their collection and indexing.

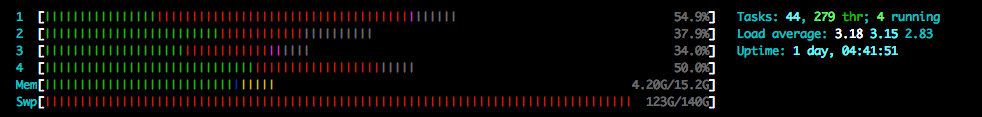

After some investigation, I discovered that the HF is chewing through its entire swap disk, while physical (well, virtual) memory usage peaks at about 4 GB of the total available.

I have been debugging this issue on a variety of EC2 instance types (c5.4xlarge, c5.2xlarge and c5.xlarge), and the system's memory and core count have no impact on the behaviour.

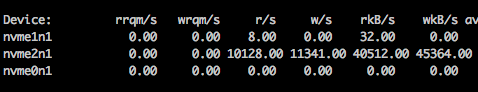

The swap file on the / volume was heavily written to, which in turn ate the volume's available IOPS. Once those were depleted, iowait climbed and eventually the system became unresponsive.
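The iowait climb can be measured directly from the kernel's CPU counters. Below is a minimal sketch (the helper names are illustrative) that computes the iowait share between two samples of `/proc/stat`, assuming the field order documented in proc(5): user, nice, system, idle, iowait, irq, softirq, steal, guest, guest_nice.

```python
from typing import List


def parse_cpu_line(stat_text: str) -> List[int]:
    """Return the aggregate 'cpu' counters (clock ticks) from /proc/stat text."""
    for line in stat_text.splitlines():
        fields = line.split()
        if fields and fields[0] == "cpu":
            return [int(v) for v in fields[1:]]
    raise ValueError("no aggregate cpu line found")


def iowait_percent(before: List[int], after: List[int]) -> float:
    """Percentage of CPU time spent in iowait between two samples."""
    # Only the first eight fields (user .. steal) form the total;
    # guest and guest_nice are already included in user and nice.
    deltas = [a - b for a, b in zip(after[:8], before[:8])]
    total = sum(deltas)
    return 100.0 * deltas[4] / total if total else 0.0
```

On a live host, read `/proc/stat` twice, about a second apart, and pass both parsed samples to `iowait_percent`.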

To check whether the process simply needed a large amount of virtual memory for the initial 'read', I moved the instance to an m5d, which provides an ephemeral 140 GB SSD. I used that SSD as a dedicated swap device because it is not IOPS-limited (except by the underlying hardware). As predicted, this stopped the IOPS depletion and prevented the iowait condition.
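To confirm which device is actually absorbing the swap traffic, per-area usage can be read from `/proc/swaps`. This sketch assumes the usual five-column layout (Filename, Type, Size, Used, Priority, with sizes in KiB); `parse_swaps` is an illustrative name.

```python
from typing import Dict, List, Union


def parse_swaps(text: str) -> List[Dict[str, Union[str, int]]]:
    """Parse /proc/swaps text into one dict per swap area (sizes in KiB)."""
    areas: List[Dict[str, Union[str, int]]] = []
    for line in text.splitlines()[1:]:  # skip the column header line
        fields = line.split()
        if len(fields) < 5:
            continue
        name, kind, size, used, priority = fields[:5]
        areas.append({
            "name": name,
            "type": kind,
            "size_kib": int(size),
            "used_kib": int(used),
            "priority": int(priority),
        })
    return areas
```

The kernel fills higher-priority swap areas first, so giving the dedicated device a higher priority than any swap file on the root volume (for example with `swapon -p`) keeps paging off the IOPS-limited volume.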

The box has a steady load average of about 3 (yes, I know it only has 4 cores) but is otherwise quite happy.

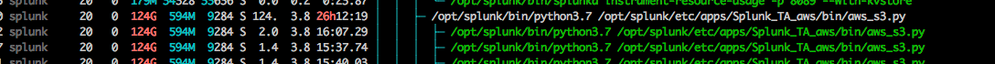

However, the S3 Python process has consumed 124 GB of virtual memory, of which 123 GB is in swap.
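One way to separate address space that is merely reserved from pages actually pushed out to swap is to read the process's `/proc/<pid>/status`. This sketch assumes the standard `VmSize`, `VmRSS` and `VmSwap` lines (values in kB); the PID of the S3 input's Python process would have to be looked up separately, for example with `ps`.

```python
from typing import Dict

# Lines of /proc/<pid>/status this sketch reads; values are reported in kB.
FIELDS = ("VmSize", "VmRSS", "VmSwap")


def memory_summary(status_text: str) -> Dict[str, int]:
    """Return VmSize (virtual), VmRSS (resident) and VmSwap (swapped out) in kB."""
    summary: Dict[str, int] = {}
    for line in status_text.splitlines():
        key, _, rest = line.partition(":")
        if key in FIELDS:
            summary[key] = int(rest.split()[0])  # e.g. "\t 2097152 kB" -> 2097152
    return summary
```

A `VmSwap` value close to `VmSize`, as in the 123 GB of 124 GB described above, indicates that the memory really was written out to swap rather than only reserved.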

I have never seen anything like this before with this TA across many deployments on AWS.

The TA's log file reports nothing untoward, and while CloudTrail logs are getting in, they take 2-4 hours to arrive rather than the 30 minutes configured in the input.

I dumped the /swap partition with strings, and I can see that it contains data from the CloudTrail log files read from S3. My present assumption is that the entire bucket's log files are being read into swap, multiple times over, since the bucket holds only 7-8 GB of logs. It seems that once swap is full, the oldest logs are evicted from swap, and only then does the HF process them and send them to the indexers.

Side note: if I run the HF without swap, it gets OOM-killed and restarts, and no crash log is generated. Even so, physical memory usage never exceeds 3 GB.

Does anyone have any ideas what could be up?

---

An update on this:

This turned out to be related to my recent post about memory allocation under systemd.

I managed to work around the problem by giving the host a large, fast swap disk, but the crux of the issue was the restrictive memory limit in the systemd unit file.

Fixing the root cause eradicated the issue!
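Since the fix was the memory limit in the systemd unit file, it can be useful to check what limit a unit actually sets. This is a hypothetical helper, not part of Splunk or systemd: it scans unit-file text for `MemoryMax=` or the older `MemoryLimit=` directive in the `[Service]` section and converts the value to bytes, treating the K, M, G and T suffixes as base 1024, as systemd does. It ignores percentages, fractional values and drop-in overrides, so `systemctl show` on the live unit remains the authoritative check.

```python
from typing import Optional

SUFFIXES = {"K": 1024, "M": 1024 ** 2, "G": 1024 ** 3, "T": 1024 ** 4}
DIRECTIVES = ("MemoryMax", "MemoryLimit")


def parse_size(value: str) -> Optional[int]:
    """Convert a systemd size such as '100G' to bytes; empty or 'infinity' means no limit."""
    value = value.strip()
    if not value or value == "infinity":
        return None
    if value[-1] in SUFFIXES:
        return int(value[:-1]) * SUFFIXES[value[-1]]
    return int(value)


def memory_limit(unit_text: str) -> Optional[int]:
    """Return the last MemoryMax=/MemoryLimit= value in [Service], in bytes (None if unset)."""
    limit = None
    section = ""
    for raw in unit_text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", ";")):
            continue  # skip blank lines and comments
        if line.startswith("[") and line.endswith("]"):
            section = line
            continue
        key, sep, value = line.partition("=")
        if section == "[Service]" and sep and key.strip() in DIRECTIVES:
            limit = parse_size(value)
    return limit
```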

---

Hi @PavelP

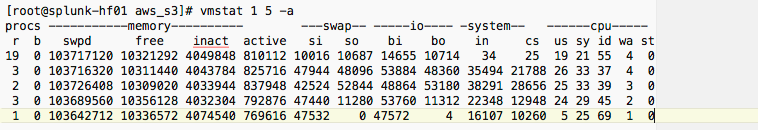

Thanks for your suggestions; see the vmstat and iostat results.

As you can see, this is real swapping (vmstat output formatted for clarity).

In iostat, the highest I/O is on the dedicated swap device, nvme2n1.
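Picking the busiest device out of `iostat -x` output can be automated. This sketch (the helper name is illustrative) locates the `%util` column by name, since column order differs between sysstat versions, and returns the values from the last report in the input.

```python
from typing import Dict, Optional


def util_by_device(iostat_text: str) -> Dict[str, float]:
    """Map device name to %util from `iostat -x` output (later reports overwrite earlier ones)."""
    result: Dict[str, float] = {}
    util_idx: Optional[int] = None
    for line in iostat_text.splitlines():
        fields = line.split()
        if not fields:
            util_idx = None  # a blank line ends the current device table
            continue
        if fields[0].rstrip(":") == "Device":  # header is "Device" or "Device:"
            util_idx = fields.index("%util")
            continue
        if util_idx is not None and len(fields) > util_idx:
            result[fields[0]] = float(fields[util_idx])
    return result
```

The first iostat report covers the time since boot, so run it with an interval (as in `iostat -xd 1`) and rely on the later reports.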

I'm not worried about the load average; I was just acknowledging that the system in the screenshot is below the suggested minimum spec. The behaviour is exactly the same on a 16-core, 64 GB instance.

Thanks for the note on vmtouch - I had not come across that tool before!

A new discovery: the checkpoint file the TA writes is now over 800 MB. This is utter madness, because the bucket being processed holds only a few thousand files, and a quick look inside the checkpoint file shows many thousands of repeated bucket names. Something very strange is clearly happening in this Python input process.
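To quantify the repeated bucket names, occurrences can be counted without reading the whole 800 MB file into memory. This generic sketch treats the checkpoint file as an opaque byte stream, since its internal format is not described here; `count_occurrences` is an illustrative helper, and the bucket names in the demo are placeholders.

```python
import io
from typing import BinaryIO


def count_occurrences(stream: BinaryIO, needle: bytes, chunk_size: int = 1 << 20) -> int:
    """Count non-overlapping occurrences of `needle`, reading `stream` in chunks.

    Only the unmatched tail that could still start a match is carried into the
    next round, so memory use stays around chunk_size + len(needle) bytes.
    """
    if not needle:
        raise ValueError("needle must not be empty")
    count = 0
    buf = b""
    while True:
        chunk = stream.read(chunk_size)
        buf += chunk
        pos = 0
        while True:
            idx = buf.find(needle, pos)
            if idx == -1:
                break
            count += 1
            pos = idx + len(needle)
        if not chunk:  # end of stream: the remaining buffer has been scanned
            return count
        # Keep only bytes after the last match that could begin a boundary-spanning match.
        buf = buf[max(pos, len(buf) - len(needle) + 1):]


if __name__ == "__main__":
    demo = io.BytesIO(b"bucket-a,bucket-a,bucket-b")
    print(count_occurrences(demo, b"bucket-a", chunk_size=4))  # 2
```

Against the real file, open it in binary mode and pass the file object together with the bucket name encoded as bytes; comparing the count with the number of objects in the bucket gives a rough sense of how much the name is being repeated.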

It was remiss of me not to post version info in the original question: this is Splunk Enterprise 8.0.3 with version 5.0 of the TA (the latest).

It's a fresh deployment, so there are no legacy inputs from an upgrade.

I am close to writing this one up as a bug and throwing it over to support.

---

I cannot see the screenshots; I'm getting a 403:

https://answers.splunk.com/storage/temp/288600-screen-shot-2020-04-20-at-185854.png

https://answers.splunk.com/storage/temp/288601-screen-shot-2020-04-20-at-182553.png

---

Hello @nickhills,

Just to be sure we are not confusing virtual memory allocation with active swapping (paging): do you actually see active swapping? Check with vmstat, or with iostat (part of the sysstat package):

`vmstat 1`

`iostat -xd 1`

If there is no sustained active swapping (constantly many MB/s in the si/so columns of vmstat, or %util near 99-100% in the iostat output), there is no need to worry.
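The check described above can be scripted against captured vmstat output taken with an interval (for example `vmstat 1`). In this sketch the `si` and `so` columns (swap-in and swap-out, in KiB/s with vmstat's default units) are located from the header row, and the first sample is dropped because it is an average since boot. The 1024 KiB/s threshold and the majority rule in `actively_swapping` are arbitrary assumptions, not a Splunk or kernel guideline.

```python
from typing import List, Optional, Tuple


def swap_rates(vmstat_text: str) -> List[Tuple[int, int]]:
    """Return (si, so) pairs from `vmstat <delay>` output, skipping the since-boot first row."""
    si_idx: Optional[int] = None
    so_idx: Optional[int] = None
    rows: List[Tuple[int, int]] = []
    for line in vmstat_text.splitlines():
        fields = line.split()
        if "si" in fields and "so" in fields:  # column header row
            si_idx, so_idx = fields.index("si"), fields.index("so")
            continue
        if si_idx is not None and fields and all(f.isdigit() for f in fields):
            rows.append((int(fields[si_idx]), int(fields[so_idx])))
    return rows[1:]


def actively_swapping(samples: List[Tuple[int, int]], threshold_kib: int = 1024) -> bool:
    """Hypothetical rule: most interval samples move more than `threshold_kib` KiB/s."""
    busy = sum(1 for si, so in samples if si + so > threshold_kib)
    return bool(samples) and busy > len(samples) / 2
```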

It is better to use vmtouch (https://hoytech.com/vmtouch/) and lsof than strings to check which files are mapped into the virtual memory space, because strings will also show you stale bytes from previous use (Linux does not zero the space after use).
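In the same spirit as lsof, the mappings of a running process can be read straight from `/proc/<pid>/maps`, which lists each region with its backing file, if any. This sketch (the helper name is illustrative) totals the address-space size per backing path and groups unnamed regions as anonymous memory. The sizes are reserved address space, not resident or swapped memory.

```python
from collections import defaultdict
from typing import Dict


def mapped_sizes(maps_text: str) -> Dict[str, int]:
    """Sum mapping sizes in bytes per backing path from /proc/<pid>/maps text.

    Mappings without a pathname are grouped under '[anonymous]'.
    """
    sizes: Dict[str, int] = defaultdict(int)
    for line in maps_text.splitlines():
        # address perms offset dev inode [pathname]; maxsplit keeps paths with spaces intact
        parts = line.split(maxsplit=5)
        if len(parts) < 5:
            continue
        start, end = (int(x, 16) for x in parts[0].split("-"))
        path = parts[5].strip() if len(parts) == 6 else "[anonymous]"
        sizes[path] += end - start
    return dict(sizes)
```

Large `[anonymous]` or `[heap]` totals point at data the process allocated itself rather than at mapped files.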

As for what triggered the OOM killer: you should see "cannot allocate memory" or a similar message in dmesg, /var/log/messages, or journalctl. Splunk cannot write a crash log because it cannot allocate memory in the first place.
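Kernel OOM-killer reports can be pulled out of text captured from dmesg or `journalctl -k`. This sketch searches for the kernel's "Killed process <pid> (<name>)" wording rather than the "cannot allocate memory" message; that wording is an assumption and varies between kernel versions. It also flags the memory-cgroup variant, which matters because a service-level memory limit can trigger the OOM killer even while the host still has free RAM.

```python
import re
from typing import List, Tuple

# Assumed wording of the kernel's OOM-killer report ("... Killed process <pid> (<comm>) ...").
# The surrounding text differs between kernel versions, so verify against real logs.
KILLED = re.compile(r"Killed process (\d+) \(([^)]*)\)")


def oom_kills(log_text: str) -> List[Tuple[int, str]]:
    """Return (pid, command name) for every OOM-killer kill found in the log text."""
    return [(int(pid), name) for pid, name in KILLED.findall(log_text)]


def cgroup_limited(log_text: str) -> bool:
    """True if the log contains the kernel's memory-cgroup OOM message."""
    return "Memory cgroup out of memory" in log_text
```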

The load average is still OK (below 4, the CPU count). If the box were swapping heavily, you would see it in double digits.
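The comparison above, load average against CPU count, is easy to compute. A minimal sketch using only the standard library; `load_per_cpu` is an illustrative name. Values above 1.0 mean more tasks are runnable, or on Linux stuck in uninterruptible I/O wait, than there are CPUs, which is why heavy swapping tends to push the load average up.

```python
import os
from typing import Optional, Sequence


def load_per_cpu(load: Optional[Sequence[float]] = None, cpus: Optional[int] = None) -> float:
    """1-minute load average per CPU; reads live values (Unix only) when none are given."""
    one_minute = (load if load is not None else os.getloadavg())[0]
    cpu_count = cpus if cpus is not None else (os.cpu_count() or 1)
    return one_minute / cpu_count
```

For the box in the question (a load of about 3 on 4 cores), `load_per_cpu((3.0, 2.9, 2.8), 4)` returns 0.75.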

I haven't worked with Splunk_TA_aws yet, so I cannot check why it tries to allocate so much memory.

If it is actively swapping, I would call Splunk support.

---

Just to note: this is a dedicated HF for AWS. It is not running any searches, and the other AWS inputs all work fine and in a timely fashion. This issue is confined to the Generic S3 task (and this is the only one).

I have seen this doc: https://docs.splunk.com/Documentation/Splunk/7.2.4/Troubleshooting/Troubleshootmemoryusage but, as described above, this issue is not related to searches, or even to the splunkd process.