
1 day 24 hours behind


I have an interesting and weird issue with the Event Hub input. One of my Event Hubs is consistently almost exactly 24 hours behind. I created a capture to explore the data, and the data is in the Event Hub with current timestamps.

Even if I create a new input, the add-on seems to immediately grab all the data, but only up to 24 hours (1 day) ago. Sometimes it falls even further behind, so a 24-hour range in the time picker shows "No results found." Could it be miscounting the timestamp or date? Maybe a time zone issue? This is the only Event Hub doing this.

Is there a way to debug this and see exactly which events are coming in, and when?
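One way to see when events arrive is to compare each event's timestamp with the time Splunk indexed it; Splunk keeps these as `_time` and `_indextime`. The sketch below assumes you have exported those two epoch values per event (for example from a search) and computes the lag in hours. The helper name `lag_report` and the 23-hour threshold are hypothetical, chosen only to flag the roughly one-day delay described here.

```python
from statistics import median

# Hypothetical helper: each pair is (event_time, index_time) in epoch seconds,
# e.g. exported from Splunk's _time and _indextime fields.
def lag_report(pairs, threshold_hours=23.0):
    """Return (median lag in hours, number of events lagging >= threshold)."""
    lags = [(indexed - event) / 3600.0 for event, indexed in pairs]
    late = sum(1 for lag in lags if lag >= threshold_hours)
    return median(lags), late

if __name__ == "__main__":
    sample = [
        (1_589_900_000, 1_589_986_400),  # indexed 24 h after the event
        (1_589_900_060, 1_589_986_500),  # indexed just over 24 h after the event
        (1_589_986_000, 1_589_986_030),  # indexed 30 s after the event
    ]
    med, late = lag_report(sample)
    print(f"median lag: {med:.2f} h, events >= 23 h late: {late}")
```

A median lag close to 24 hours across many events points at ingestion falling behind rather than at a handful of mis-stamped events.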


We found the answer.

Don't go big, go small!

Because there was so much data coming in, a large batch size simply buffered events in memory until the input crashed. Decreasing the batch size lets the add-on finish each batch and write it to disk. We also lowered the polling interval, so once one batch completes, a new one starts almost immediately.

We also increased the number of partitions to 20 (which meant creating a new Event Hub) and the thread count to 20, so the add-on has plenty of threads to bring in many small batches very quickly.
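The "many small batches" idea can be sketched with Python's `concurrent.futures`: one worker thread per partition, each taking a small, bounded batch and writing it before fetching the next, so each thread only holds one batch at a time. This is not the add-on's code. Partitions are modeled as in-memory lists, and `drain_partition`, `drain_all`, `write_batch`, and `batch_size` are hypothetical stand-ins for the Event Hub receive call and the write to Splunk.

```python
from concurrent.futures import ThreadPoolExecutor
import threading

def drain_partition(events, batch_size, write_batch):
    """Read one partition in small batches, writing each batch before
    fetching the next, so only one batch per thread is in flight."""
    offset = 0
    while True:
        batch = events[offset:offset + batch_size]  # stand-in for a receive call
        if not batch:
            return offset  # total events written for this partition
        write_batch(batch)  # stand-in for writing to Splunk
        offset += len(batch)

def drain_all(partitions, batch_size=100):
    """Drain every partition concurrently, one thread per partition."""
    sink = []
    lock = threading.Lock()

    def write_batch(batch):
        with lock:  # the sink is shared by all worker threads
            sink.extend(batch)

    # max_workers matches the partition count (the 1:1 setup from the thread).
    with ThreadPoolExecutor(max_workers=len(partitions)) as pool:
        counts = list(pool.map(
            lambda events: drain_partition(events, batch_size, write_batch),
            partitions,
        ))
    return counts, sink
```

With 20 partitions, `drain_all(parts, batch_size=100)` starts 20 workers; a smaller `batch_size` lowers how much each worker holds at once, at the cost of more round trips per partition.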

So, the moral of the story? Bigger is not always better.


We have version 3.0.1 of the add-on installed (https://splunkbase.splunk.com/app/3757), but I don't see an option to set threads in the UI. Version 3.1.0 is out, but the release notes say Event Hub is deprecated.

What am I missing? Thanks.




By default, Event Hub generates data in the UTC time zone, so if your time zone is behind UTC, you will probably run into this issue. To fix it, set the time zone of your Splunk environment to UTC. Alternatively, use the TZ setting in props.conf so the data is indexed in the correct time zone.

https://docs.splunk.com/Documentation/Splunk/latest/Admin/Propsconf
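To see what a time zone mismatch does in practice, the sketch below takes a wall-clock timestamp that was written in UTC and measures how far the event moves if that same wall-clock time is read in another zone. The fixed UTC-4 offset (US Eastern daylight time) and the helper name `misread_shift_hours` are example assumptions; the point is that the shift equals the zone's UTC offset, which a TZ setting of UTC in props.conf for that data is meant to remove.

```python
from datetime import datetime, timedelta, timezone

def misread_shift_hours(wall_clock, assumed_tz):
    """Hours between the true instant (wall_clock read as UTC) and the
    instant obtained by reading the same wall-clock time in assumed_tz."""
    true_instant = wall_clock.replace(tzinfo=timezone.utc)
    misread_instant = wall_clock.replace(tzinfo=assumed_tz)
    return (misread_instant - true_instant).total_seconds() / 3600

# Example: a UTC timestamp taken from the add-on logs in this thread,
# misread as UTC-4 (hypothetical local zone).
stamp = datetime(2020, 5, 20, 1, 19, 46)
edt = timezone(timedelta(hours=-4))
print(misread_shift_hours(stamp, edt))  # 4.0
```

A positive result means the event is placed later on the timeline than it actually happened; in either direction the shift is the size of the UTC offset.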


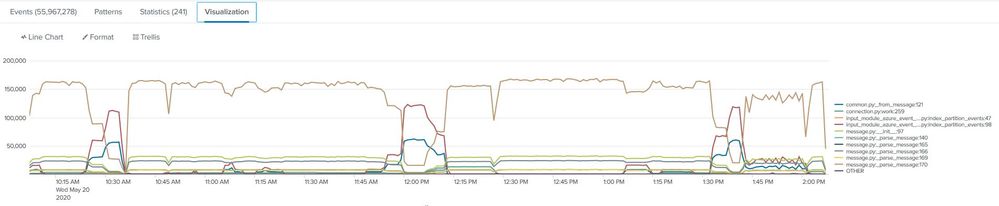

Thank you for the response. However, that is not the issue, although I did think it was for a while. What is happening is that the data is coming in so fast that the add-on cannot keep up. The Event Hub receives about 40 GB of data per day.

So we created a new hub with 20 partitions and 20 DTUs, then set the add-on to 20 threads to match the 1:1 recommendation.
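For a sense of scale, the sketch below turns the reported 40 GB/day into an average rate, overall and per partition. It assumes decimal units (1 GB = 10^9 bytes) and traffic spread evenly over the day and across the 20 partitions; real traffic is burstier, so peak rates will be higher. The helper name `average_rates` is hypothetical.

```python
SECONDS_PER_DAY = 24 * 60 * 60

def average_rates(bytes_per_day, partitions):
    """Average ingest rate in bytes/s overall and per partition,
    assuming an even spread over the day and across partitions."""
    overall = bytes_per_day / SECONDS_PER_DAY
    return overall, overall / partitions

overall, per_partition = average_rates(40e9, 20)
print(f"overall: {overall / 1e6:.2f} MB/s, per partition: {per_partition / 1e3:.1f} kB/s")
```

That works out to about 0.46 MB/s overall, or roughly 23 kB/s per partition, sustained around the clock, so any period where the input stalls builds a backlog quickly.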

It still could not keep up; in fact, it generated errors. So I disabled and re-enabled the input, and overnight the ingest volume jumped sharply and it finally caught up.

I suspect it was gathering data, holding it in memory, and only then writing it to disk. A setting to control how much it holds before sending to Splunk would be a nice enhancement.

I've now increased the Heavy Forwarder in Azure to 32 vCPUs. That took about 20 minutes, so the data is currently running about 30 minutes behind. I'm hoping it will catch up.

I would like to try using HEC (HTTP Event Collector) for this. Do you know how to send data from Event Hub to Splunk through HEC?
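As a starting point for the HEC question, the sketch below only builds (it does not send) an HTTP Event Collector request with the Python standard library. The host, token, and sourcetype are placeholders; `/services/collector/event` on port 8088 and the `Authorization: Splunk <token>` header are HEC's usual defaults, but check your own HEC configuration. Reading events out of the Event Hub is not shown, and `build_hec_request` is a hypothetical helper.

```python
import json
import urllib.request

def build_hec_request(host, token, events, sourcetype="eventhub:example"):
    """Build a POST to Splunk HEC with one JSON object per event.
    events is an iterable of (epoch_seconds, payload) pairs;
    sourcetype is a placeholder name."""
    body = "\n".join(
        json.dumps({"time": ts, "sourcetype": sourcetype, "event": payload})
        for ts, payload in events
    ).encode("utf-8")
    return urllib.request.Request(
        url=f"https://{host}:8088/services/collector/event",
        data=body,
        headers={"Authorization": f"Splunk {token}",
                 "Content-Type": "application/json"},
        method="POST",
    )
```

To actually send the batch, pass the request to `urllib.request.urlopen`. Stacking several event objects in one body, as here, keeps the number of HTTP round trips down when volume is high.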

```text
2020-05-20 01:19:46,481 WARNING pid=8830 tid=ThreadPoolExecutor-0_1 file=connection.py:work:255 | ConnectionClose(u'ErrorCodes.UnknownError: Connection in an unexpected error state.',)
2020-05-20 01:19:46,471 INFO pid=8830 tid=ThreadPoolExecutor-0_1 file=cbs_auth.py:handle_token:143 | CBS error occured on connection 'EHConsumer-bef1b46f-78de3574eb81-partition1'.
2020-05-20 01:19:46,462 INFO pid=8830 tid=ThreadPoolExecutor-0_1 file=connection.py:_state_changed:181 | Connection with ID 'EHConsumer-bef1b46f-78de3574eb81-partition1' unexpectedly in an error state. Closing: False, Error: None
2020-05-20 01:19:46,447 INFO pid=8830 tid=ThreadPoolExecutor-0_1 file=cbs_auth.py:handle_token:143 | 'Error in write_outgoing_bytes.' ('/data/src/vendor/azure-uamqp-c/deps/azure-c-shared-utility/adapters/tlsio_openssl.c':'tlsio_openssl_send':1374)
2020-05-20 01:19:46,443 INFO pid=8830 tid=ThreadPoolExecutor-0_1 file=cbs_auth.py:handle_token:143 | 'Error in xio_send.' ('/data/src/vendor/azure-uamqp-c/deps/azure-c-shared-utility/adapters/tlsio_openssl.c':'write_outgoing_bytes':641)
```
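To debug from the add-on's own logs, the sketch below parses lines in the format shown here and tallies them by level and source file, which makes bursts of connection errors easy to spot. The regular expression is inferred from these sample lines only and may not match every line the add-on writes; `LOG_LINE` and `tally` are hypothetical names.

```python
import re
from collections import Counter

# Pattern inferred from the sample lines in this thread:
# "<date> <time>,<ms> <LEVEL> pid=<n> tid=<thread> file=<file>:<func>:<line> | <message>"
LOG_LINE = re.compile(
    r"^(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3}) "
    r"(?P<level>[A-Z]+) pid=(?P<pid>\d+) tid=(?P<tid>\S+) "
    r"file=(?P<file>[^:]+):(?P<func>[^:]+):(?P<lineno>\d+) \| (?P<msg>.*)$"
)

def tally(lines):
    """Count parsed log lines by (level, file); unparsed lines are skipped."""
    counts = Counter()
    for line in lines:
        m = LOG_LINE.match(line.strip())
        if m:
            counts[(m["level"], m["file"])] += 1
    return counts
```

Feeding it the lines above gives one WARNING from `connection.py` and several INFO lines from `cbs_auth.py` and `connection.py`, all on the same thread within a fraction of a second, which matches a single connection dropping.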


Hi,

Have you found a solution for this? In my case, we are not getting logs from the Event Hub, and in the internal logs I intermittently see the error messages below. I am using the current version of the Microsoft Cloud Services add-on (4.1.1).