Are you a member of the Splunk Community?

- Find Answers

- :

- Using Splunk

- :

- Other Using Splunk

- :

- Alerting

- :

- Re: How to search and alert on anomalies/spikes in...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

How to search and alert on anomalies/spikes in multiple fields simultaneously?

I've searched around, but failed to come up with an answer to the problem I'm facing:

My data set contains events that correspond to different categories. I'd like to find the counts of these events for each category per day, and then pick out spikes in each of these counts (a spike could be defined as three times the 5-point moving average).

Here's an example search that picks out the number of events for all categories [the category field is bg]:

index=bg_data earliest=-1y bg="*" | timechart span=1d count(start_time) as num_events by bg

And here's an example search that detects spikes for a single category at a time:

index=bg_data earliest=-1y bg="test_bg" | timechart span=1d count(start_time) as num_events | trendline sma5(num_events) as moving_avg | eval spike=if(num_events > 3 * moving_avg, 500, 0) | search spike>0

I've tried using join, or map, and other search tricks, but I have yet to find a way of combining the above 2 searches. What I'd like is a way to set up an alert that fires each time a spike is detected for the previous 24 hours of events, and a table that displays all spikes. For example, it might be something like:

_time | category_a_spike | category_b_spike | category_c_spike

day 1 | ...

day 2 | ...

day 3 | ...

where "category_x_spike" is the value of the spike for that particular category.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@rgag : the by clause is your friend. Stay in stats-land, then xyseries/timechart only if appropriate.

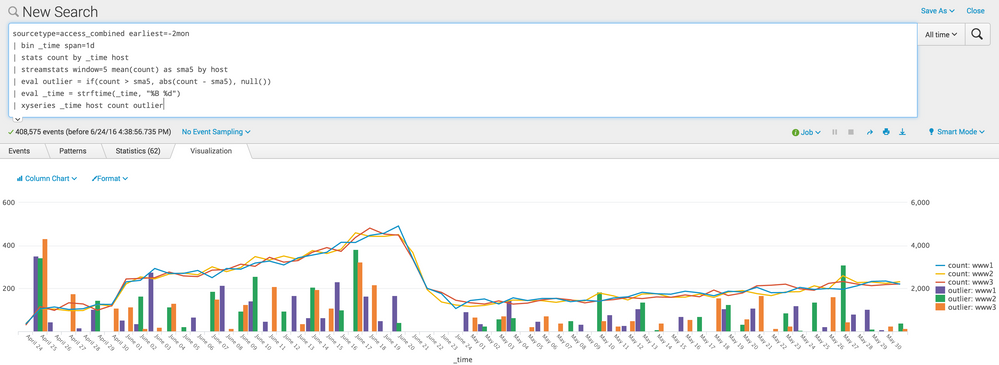

Here is a similar example.

I have three hosts, ww1, ww2, and ww3. I'd like to see if any day count is over the 5 day moving average, right? So, instead of using timechart, which turns my host values into fields (to make it chartable), I'm going to stay within stats. However, that requires that I bin up _time myself:

sourcetype=access_combined

| bin _time span=1d

| stats count by host _time

This gives us our basic timechart, albeit row-centric and currently not chartable. That is ok. Thats what we want. Next, use streamstats instead of trendline. You were using the sma function in your example, so we'll just do the same with streamstats.

| streamstats window=5 mean(count) as sma5 by host

Crucial bit is the by host at the end. Now we get per-host moving averages. We're nearly done.

Let's make an "outlier" field. We can set the outlier to a value to something more informative than just a boolean "1", e.g., the difference from the threshold, that is, how big of a spike:

| eval outlier = if(count > (3 * sma5), count - (3 * sma5), null())

At this point, we can do a little cleaning up of the _time field, since it won't look pretty when we chart.

| eval _time = strftime(_time, "%b %d")

Lastly, we use xyseries to make the whole thing chartable.

| xyseries _time host count outlier

Here is a screenshot of an example, where, for the sake of demonstration, I made the outlier detection very sensitive (whenever the count exceeds the moving average). I also set the counts to be overlays on their own axis whereas the outliers are the default visualization type, a column.

So to get your alert for a spike over the past 24 hours, you'd also want to search on more than 24 hours so the first data point has a stable moving average, and throwaway the first few points:

index=bg_data earliest=-30h bg="*"

| bin _time span=1h

| stats count(start_time) as num_events by bg

| streamstats window=5 mean(num_events) as moving_avg by bg

| eval spike=if(num_events > (3 * moving_avg), 500, 0)

| where _time > relative_time(now(), "-24h") AND spike > 0

And your second search for the table of counts per bg,

index=bg_data earliest=-30h bg="*"

| bin _time span=1h

| stats count(start_time) as num_events by bg

| streamstats window=5 mean(num_events) as moving_avg by bg

| eval spike=if(num_events > (3 * moving_avg), 1, 0)

| where _time > relative_time(now(), "-24h") AND spike > 0

| timechart sum(spike) as spike_count by bg

^ Those likely have some mistakes in them. but the gist is there, well, at least for what I would do 🙂

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

wish I could give three upvotes for the awesome answer.

But, WAIT, does | streamstats window=5 mean(count) as sma5 by host work?

I just did some testing, and on 6.4 that combination of terms seems to calculate against only the subset of the last 5 records that were on the same host. Here's the test code that made me conclude that...

| makeresults count=6| streamstats count as thecount | eval _time=_time+thecount, test="test1"| eval junk=thecount%2| streamstats count as mycount by junk window=2

| append

[| makeresults count=6| streamstats count as thecount | eval _time=_time+thecount+10, test="test2"| eval junk=thecount%2| sort 0 junk _time| streamstats count as mycount by junk window=2 ]

| table _time test thecount junk mycount

| sort 0 _time

Ah, you have established an implicit order in the | stats by host _time. Got it. So some of my prior code may have worked accidentally because immediately prior I got the order right in the stats command. It will only fail when working with unsorted raw data.

... and, I found that global=true gives me the results I was expecting from windowed streamstats.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

So what's the correct search?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@DalJeanis sorting is crucial - it can be extra confusing with streamstats in particular since normal event searches return events in reverse chronological order whereas things like timechart will sort for you in chronological order.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm attempting to do they same thing and have tried the above search however my 'num_events' and 'moving_avg' are showing the same number. I'm not sure why it's not showing the averages.

index="my_data" earliest=-10day

|bin _time span=1h

|stats count(EventId) as num_events by UserName

|streamstats window=1 mean(num_events) as moving_avg by UserName

| eval spike=if(num_events > (3 * moving_avg), 1, 0)

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You told it window=1, so it's averaging one event. You also added up ALL the events for each user, rather than adding them up by day.

Change to ...

index="my_data" earliest=-10d@d

| bin _time span=1h

| stats count(EventId) as num_events by UserName _time

| streamstats window=5 mean(num_events) as moving_avg by UserName

| eval spike=if(num_events > (3 * moving_avg), 1, 0)

| where _time > relative_time(now(), "-6d@d")

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This seems to have done the trick however for some reason both my Table and Graph are doing some grouping of items into an "OTHER" group as appose to have each UserName a distinct value.

index="my_data" earliest=-10d@d

| bucket _time span=1h

| stats count(EventId) as num_events by UserName _time

| streamstats window=5 mean(num_events) as moving_avg by UserName

| eval spike=if(num_events > (4 * moving_avg),1,0)

|timechart sum(spike) as spike_count by UserName

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Fixed this last issue by modifying the last line to:

|timechart sum(spike) as spike_count by UserName useother=0