Why are my access_logs inconsistent?

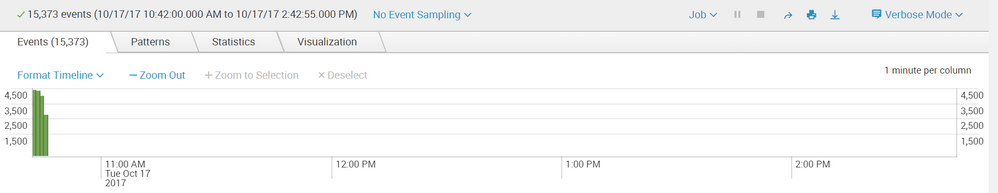

My access_logs files are not being pulled consistently. There are large gaps between log pulls.

The logs are being updated on the server (the file timestamps confirm this), but not all of them are being pulled. Which source files get pulled is inconsistent: on some occasions all the expected source files are pulled, and on others only 1-2 of them are (all from the same path).

I attached an image as well (for visual reference).

By the way, our inputs.conf file has a very basic setup:

[monitor:path*.log]

sourcetype = log

index = name

Any help would be greatly appreciated.

Hi,

Applications normally see more traffic during working hours, when employees and/or customers are actually using them and the related infrastructure. I can see from your screenshot that the peaks in your log retrieval do fall roughly within normal working hours. October 14 and 15 are a Saturday and a Sunday, so it makes sense that there is less activity on those days.

If this doesn't explain the behavior, perhaps check the index time lag of the logs, as explained in the following thread.

https://answers.splunk.com/answers/48731/determining-logging-lag-and-device-feed-monitoring.html
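For reference, index time lag is just the difference between when an event was indexed and the timestamp on the event itself (in Splunk terms, `_indextime - _time`). The snippet below is a minimal sketch of that arithmetic on exported events, not something from the linked thread; the function name `lag_by_source` and the `(source, event_time, index_time)` tuple layout are assumptions made for illustration.

```python
from collections import defaultdict


def lag_by_source(events):
    """Summarize index time lag per source.

    `events` is an iterable of (source, event_time, index_time) tuples,
    with both times given as epoch seconds (hypothetical layout).
    """
    lags = defaultdict(list)
    for source, event_time, index_time in events:
        # Lag is how long after the event's own timestamp it was indexed.
        lags[source].append(index_time - event_time)
    return {
        source: {"avg": sum(values) / len(values), "max": max(values)}
        for source, values in lags.items()
    }
```

A consistently low lag for every source would suggest the data arrives promptly whenever it is written, while a large or erratic lag for some sources would point toward the collection side.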

As @hettervi has said, this seems normal. You don't have gaps, you just have less data during certain hours. The screenshot that you provided shows data at all hours across the time period of the search. It is probably just the nature of the business days, as he has suggested.

@cpetterborg + @hettervi thank you both for your answers. I think the screenshot I initially attached was not the best example, so I have attached another one (screenshot-4) that illustrates the problem better. I will look into the thread you linked, @hettervi.

Thanks again

Have you checked the index time lag? I linked to another discussion on index time lag in my original answer. If the index time lag of your logs is consistent and low, it is probably your logging system that is inconsistent, not Splunk. It might be worth checking.

I would look at whether or not new data is actually being sent to Splunk. There are a couple of things I'd look at.

- restart the forwarder - probably not the case, but it is a quick thing to do

- check the rotation of the log files, and whether the forwarder is waiting for data beyond the read pointer it has stored for the file. If logrotate didn't do something that it should have, or the CRC of the first 256 bytes is unchanged, the forwarder will think the file is the same one that was there before the rotation, and it will wait for new data beyond the pointer in the fishbucket.

Gaps in the data are not going to come from the forwarder pausing for a short time and then picking up again later without filling in the data from the period in between. Splunk wants to finish the job it started. So my guess would be something along the lines of the second item above.
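To see why a check on only the first 256 bytes can mistake a freshly rotated file for the old one, here is a minimal sketch. It is an illustration under stated assumptions, not Splunk's actual implementation: `zlib.crc32` stands in for whatever checksum the forwarder uses, and the names `head_crc` and `looks_like_same_file` are hypothetical.

```python
import os
import tempfile
import zlib

HEAD_BYTES = 256  # length of the file head compared, as described above


def head_crc(path, length=HEAD_BYTES):
    """Return a CRC32 of the first `length` bytes of the file at `path`."""
    with open(path, "rb") as f:
        return zlib.crc32(f.read(length))


def looks_like_same_file(old_path, new_path):
    """True when both files start with identical bytes, so a head-only
    check cannot tell them apart (stand-in for the forwarder's check)."""
    return head_crc(old_path) == head_crc(new_path)


if __name__ == "__main__":
    # Six copies of a 56-byte header line: 336 bytes, longer than HEAD_BYTES.
    header = b"#Fields: date time c-ip cs-method cs-uri-stem sc-status\n" * 6
    with tempfile.TemporaryDirectory() as d:
        rotated = os.path.join(d, "access.log.1")
        fresh = os.path.join(d, "access.log")
        with open(rotated, "wb") as f:
            f.write(header + b"old events\n")
        with open(fresh, "wb") as f:
            f.write(header + b"new events\n")
        # Both files share the same long header, so the head CRCs match.
        print(looks_like_same_file(rotated, fresh))
```

If your access logs all begin with the same long header, running this against two of the real files would show whether they are indistinguishable by their first 256 bytes.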

![alt text](/t5/image/serverpage/image-id/3680iC4C41D08C751B30A/image-size/large?v=v2&px=999)