What's the delay between last event written to disk and now()?

All our cyber alerts are now based on the last five minutes of indexed data, so we wondered about the potential delay between an event being written to disk and the current time.

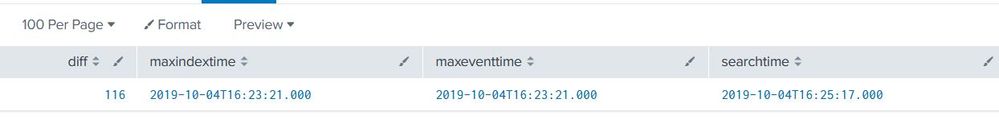

Running the following -

| tstats max(_indextime) as last_event max(_time) as etime

| eval diff = now()-last_event

| eval searchtime= strftime(now(),"%Y-%m-%dT%H:%M:%S.%Q")

| eval maxindextime= strftime(last_event, "%Y-%m-%dT%H:%M:%S.%Q")

| eval maxeventtime= strftime(etime, "%Y-%m-%dT%H:%M:%S.%Q")

| table diff maxindextime maxeventtime searchtime

For diff we see values ranging from 2 to 120. These are all in seconds, right?

Does it make sense?

| eval lag=(now()-_indextime)

You can take the avg of the lag field. I think this will give you what you're looking for.

When I run index=* | eval lag=( now() - _indextime ) the lag makes sense: a few seconds.

However, things look different when we run this:

index=* | eval lag=( now() - _indextime )

| stats avg(lag)

While the query runs, avg(lag) starts low, at a couple of seconds, and then climbs steadily to 260 and above. Why is that?

Go figure out how now() behaves when the query runs for a couple of minutes.

A developer on the team said the following -

When we look at the query, we are calculating the time difference between now() (the time the search started) and _indextime (the time the data was indexed into Splunk) for all events in the past 15 minutes. The now() value is fixed, while _indextime falls anywhere from zero to 15 minutes before it. When we take that difference for every event and average it, the average keeps increasing as the search takes in events that were indexed further back from now().

Does it make sense?
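To make the developer's explanation concrete, here is a rough Python sketch of the same arithmetic. It is not Splunk code. It assumes one event indexed per second over a 15-minute window and assumes the search reads the newest events first. The NOW and WINDOW values and the running_avg_lag helper are made up for illustration.

```python
# Hypothetical numbers: a 15-minute window with one event indexed per second.
NOW = 1_000_000      # stands in for now(), fixed when the search starts (epoch seconds)
WINDOW = 15 * 60     # search window in seconds


def running_avg_lag(now, window):
    """Yield the running average of (now - indextime), reading newest events first."""
    total = 0
    for count, age in enumerate(range(1, window + 1), start=1):
        indextime = now - age       # event indexed `age` seconds before the search began
        total += now - indextime    # same arithmetic as eval lag = now() - _indextime
        yield total / count


averages = list(running_avg_lag(NOW, WINDOW))

# Running average after 1 event, after 60 events, and after the full window.
print(averages[0], averages[59], averages[-1])
```

Under these assumptions the running average only goes up as older events are folded in, and it ends near half the window length. That matches a displayed avg(lag) that starts at a few seconds and keeps growing.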

That's exactly what's happening. You can use "_indextime-_time" instead, which would keep your avg down.
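A small Python sketch of why the two differences behave so differently, again as plain arithmetic rather than Splunk code. The event list is invented: every event is assumed to be indexed 3 seconds after it happened, with events spread across roughly the last 15 minutes.

```python
from statistics import mean

NOW = 1_000_000  # stands in for now(), fixed at search start (epoch seconds)

# Hypothetical events as (event time, index time) pairs. Each one was indexed
# 3 seconds after it occurred; they are spread over roughly 15 minutes.
events = [(NOW - age, NOW - age + 3) for age in range(3, 900, 60)]

# now() - _indextime: how long ago each event was indexed (grows with the window).
age_since_indexing = mean(NOW - indextime for _, indextime in events)

# _indextime - _time: how long each event took to get indexed (independent of now()).
indexing_latency = mean(indextime - etime for etime, indextime in events)

print(age_since_indexing, indexing_latency)
```

With these made-up events the first average is 420 seconds, while the second stays at the 3-second indexing delay no matter how wide the search window is.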

The funny thing is that we went through a similar case with @adonio just two weeks ago, in the thread "How to determine what causes the unevenness of the indexing rate across the indexers?"

After adding where index=<index name>, the diff is a couple of seconds most of the time.

Thank you @adonio and @sandeepmakkena.