- Using timechart command isn't working for renaming...

Hello,

When using timechart without a BY this works.

index IN (idx)

AND host IN (server)

AND source IN (ssl_access_log)

AND sourcetype=access_combined

AND method IN (GET,POST)

AND file="confirm.jsp"

AND date_hour>=6 AND date_hour<=22 latest=+1d@d

| eval certsFiled=case(file="confirm.jsp","1")

| timechart count span=2min

| timewrap d series=short

| where _time >= relative_time(now(), "@d+6h+55min") AND _time <= relative_time(now(), "@d+22h")

| eval colname0 = strftime(relative_time(now(), "@d"),"%D-%a")

| eval colname1 = strftime(relative_time(now(), "-d@d"), "%D-%a")

| eval colname2 = strftime(relative_time(now(), "-2d@d"), "%D-%a")

| eval {colname0} = s0

| eval {colname1} = s1

| eval {colname2} = s2

| fields - s* col*

However, once I add the BY clause, the renaming logic no longer works.

index IN (idx) sourcetype IN (ssl_access_log)

AND date_hour>=17 AND date_hour<=20 Exception OR MQException earliest=-7d@d latest=+1d@d

| rex "\s(?<exception>[a-zA-Z\.]+Exception)[:\s]"

| search exception=*

| eval exception=case(exception="MQException","mqX",

exception="com.ibm.mq.MQException","mqXibm")

| timechart count span=1m BY exception

| timewrap d series=short

| where _time >= relative_time(now(), "@d+17h") AND _time <= relative_time(now(), "@d+20h")

| eval colname0 = strftime(relative_time(now(), "@d"),"%D-%a")

| eval colname1 = strftime(relative_time(now(), "-d@d"), "%D-%a")

| eval colname2 = strftime(relative_time(now(), "-2d@d"), "%D-%a")

| eval {colname0} = s0

| eval {colname1} = s1

| eval {colname2} = s2

| fields - s* col*

This includes many more days (colname) and exceptions (removed for brevity).

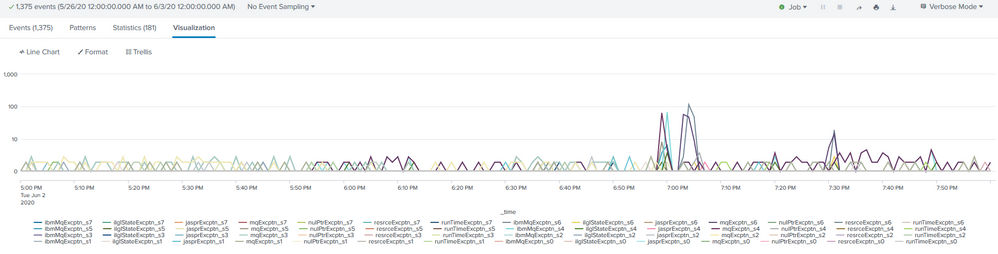

UPDATE: Here is the chart without renaming.

Instead of ibmMqExcptn_s7 it should read Mon 5/25/20 ibmMqExcptn. _s6 would be Tue; _s5 would be Wed; etc.

Thanks and God bless,

Genesius


I am not totally sure what you want to finally see in the visualisation. However, once you use a split BY clause, the field names are derived from your data, so the normal way to handle this situation is to use foreach.

So, I would do something like

| eval exception="X_".exception

| timechart count span=1m BY exception

| timewrap d series=short

| where _time >= relative_time(now(), "@d+17h") AND _time <= relative_time(now(), "@d+20h")

| foreach X_* [ eval s=replace("<<MATCHSTR>>", ".*_s(\d+)$", "\1"), f=replace("<<MATCHSTR>>", "([^_]*)_s.*", "\1"), fmt=printf("-%dd@d", s), col=f.strftime(relative_time(_time, fmt), ":%D-%a"), {col}='<<FIELD>>' ]

| fields - X_* s f fmt col

That will give you columns named with the original exception name and date, for as many exceptions and series as you have. What this logic is doing is:

- Making the exception field names unique by prefixing the exception value with X_ (use whatever you expect to be unique), so that foreach X_* matches only those fields

- Getting the series number from the _sNN suffix of the timewrapped field name into new field s

- Extracting the exception name (the part before the _sNN suffix) into new field f

- Building the relative time modifier for that series (for example -2d@d for s2) into new field fmt

- Creating the new desired column name (the exception name plus the date computed from _time and fmt) into field col

- Finally, creating a field named after the value of col that holds the original value, and removing the original X_ fields

Note, if you change the prefix X_ then the foreach command needs to change to reflect your chosen prefix.
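To watch the steps above in isolation, the same foreach can be run against a single synthetic row. This is only a sketch: the field names X_mqX_s0, X_mqX_s1 and X_mqXibm_s0 and their values are made up to imitate what timechart plus timewrap would produce, and makeresults stamps the row with the current time, so the resulting column names depend on the day you run it.

```spl
| makeresults
| eval X_mqX_s0=4, X_mqX_s1=7, X_mqXibm_s0=1
| foreach X_* [ eval s=replace("<<MATCHSTR>>", ".*_s(\d+)$", "\1"), f=replace("<<MATCHSTR>>", "([^_]*)_s.*", "\1"), fmt=printf("-%dd@d", s), col=f.strftime(relative_time(_time, fmt), ":%D-%a"), {col}='<<FIELD>>' ]
| fields - X_* s f fmt col
```

The result should be one row with three columns: two prefixed with mqX (today's and yesterday's date) and one prefixed with mqXibm (today's date). Dropping the final fields command leaves s, f, fmt and col visible, which shows the intermediate values from the last field that foreach processed.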


Wow! @bowesmana that is something else! Thank you.

Now I wish I understood the solution. 🙂

I've used foreach in a previous d/b, but it was a copy-N-paste job. Since then, I've Googled "foreach Splunk" and the returns were less than helpful. Do you know of any resources that explain this in greater detail? I think once I have a grasp of this command I will be able to produce more informative d/b's for my clients.

For instance, if I understood this command fully I could swap the date and the exception. I'll test some changes when I have the time.

Thanks again and God bless.

Stay safe and healthy, you and yours,

Genesius


I've not found much good documentation on foreach, but have learnt mainly through experimentation.

However, you can do neat things, for example if you have fields such as

X_A_nnn

X_A_zzz

X_B_nnn

X_B_zzz

you can do interesting things like

| makeresults

| eval X_A_nnn=1

| eval X_A_zzz=2

| eval X_B_nnn=21

| eval X_B_zzz=22

| foreach X_*_* [ eval newField_<<MATCHSEG2>>_<<MATCHSEG1>>='<<FIELD>>', tmpField_<<MATCHSTR>>="<<MATCHSTR>>" ]

which really gives you powerful field processing functionality. What it then does is encourage you to use standardised field naming conventions, so that foreach becomes useful.
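A simpler starting point, if the tokens above are too much at once, is to use only <<FIELD>>. The sketch below reuses the same made-up X_ fields and adds them up; the total field is an assumption introduced purely for illustration.

```spl
| makeresults
| eval total=0, X_A_nnn=1, X_A_zzz=2, X_B_nnn=21, X_B_zzz=22
| foreach X_* [ eval total=total + '<<FIELD>>' ]
```

foreach runs the bracketed eval once per matching field, substituting the field name for <<FIELD>> each time, so total ends up as 46 here. The single quotes make eval treat the substituted name as a field reference rather than a string.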

Have fun with it


The by clause does not rename fields. It groups results by the specified field(s).

You changed more than just timechart. Have you verified the additional lines did not introduce an error?

If this reply helps you, Karma would be appreciated.


@richgalloway

I haven't seen any errors.

I know the BY clause is not renaming. What I mean is that after adding the BY exception clause, the number of columns increased from 3 to 21+, and each column name (s0..s2) is now prefixed with the exception name (mqX, mqIbmX).

What we want to be displayed is mqX_Tues-5/26/20, mqIbmX_Wed-5/27/20, etc.

Is there a field name of the columns generated in the timechart/timewrap which we can reference with rename or replace or eval {} ?

Thanks and God bless,

Genesius


Adding by exception will create a column for each possible value of the exception field.

You may be able to use the foreach command to accomplish the task.

| foreach mq* [ eval {<<FIELD>>} = strftime(relative_time(now(), "@d"),"%D-%a") ]

Examine the outputs of the two timestamp commands to see exactly what is available to you.
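One way to examine that output, sketched here against the search from the question, is to append fieldsummary after timewrap and list only the field names. The index and sourcetype values are the placeholders used in the question, not real names.

```spl
index IN (idx) sourcetype IN (ssl_access_log) Exception OR MQException earliest=-7d@d latest=+1d@d
| rex "\s(?<exception>[a-zA-Z\.]+Exception)[:\s]"
| search exception=*
| timechart count span=1m BY exception
| timewrap d series=short
| fieldsummary
| table field
```

Each row of the result is one column name produced by timechart and timewrap, which makes it easier to see what pattern a rename or foreach wildcard would have to match.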

If this reply helps you, Karma would be appreciated.


My bad. I should have written (reversed).

What we want to be displayed is Tues-5/26/20:mqX; Wed-5/27/20:mqIbmX, etc.

Thanks and God bless,

Genesius
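For the reversed order, the foreach solution given earlier in this thread could probably be adapted by moving f to the end of the col expression and reordering the strftime format. This is an untested sketch on synthetic fields (X_mqX_s0 and X_mqXibm_s1 and their values are made up), not a confirmed answer.

```spl
| makeresults
| eval X_mqX_s0=4, X_mqXibm_s1=1
| foreach X_* [ eval s=replace("<<MATCHSTR>>", ".*_s(\d+)$", "\1"), f=replace("<<MATCHSTR>>", "([^_]*)_s.*", "\1"), fmt=printf("-%dd@d", s), col=strftime(relative_time(_time, fmt), "%a-%D:").f, {col}='<<FIELD>>' ]
| fields - X_* s f fmt col
```

Note that %a yields a three-letter day abbreviation and %D a zero-padded date, so the columns would look like Tue-05/26/20:mqX rather than Tues-5/26/20:mqX.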