- Splunk Answers

- :

- Using Splunk

- :

- Splunk Search

- :

- Update output count as percent

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I am trying to generate a list of the percentages of response codes by resultCode by app.

A simplified version of events are:

appName=app1, resultCode=500

appName=app1, resultCode=500

appName=app1, resultCode=404

appName=app2, resultCode=404

...

If I do

<initial search that returns the above events> | stats count by appName resultCode

it gets me very close to what I am trying to do and outputs something like this to the Statistics tab:

| appName | resultCode | count |

| app1 | 500 | 25 |

| app1 | 404 | 10 |

| app1 | 200 | 100 |

| app2 | 500 | 14 |

I need to take this one step further, and have an output that instead of showing the count by resultCode, will instead show the percentage each resultCode comprises by appName.

The ideal result is:

| appName | 200 | 404 | 500 |

| app1 | 90 | 2 | 8 |

| app2 | 85 | 10 | 5 |

| ... |

This is ideal, but even if the result was

app1, 200, 90

app1, 404, 2

app1, 500, 8

...

(where the columns are appName, resultCode, and percentage (based on the count of events by code for an app over all events for the app)

I can get a count of events by appName in a separate query to be able to get to the total, but I am just not finding how to use that specific appName's total used for each of the specific app error values all together.

I'm missing how to do

| stats count by appName as appTotal

| stats count by appName resultCode as appResult

| eval resultPerc=rount((appResult*100)/appTotal, 2)

and have that show in a table in a way that can be clearly displayed.

Thanks for any ideas on what I might be missing here would be appreciated!

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I think this SPL should do what you are asking.

<base_search>

| stats

count as count

by appName, resultCode

| eventstats

sum(count) as app_total_count

by appName

| eval

pct_of_total=round(('count'/'app_total_count')*100, 3)

| chart

sum(pct_of_total) as pct_of_total

over appName

by resultCode

| fillnull value=0.000

Using dummy data on my local instance I was able to get the output to look like this.

where each cell value represents the percentage of events that occurred with that status code for each app.

For a full overview of how I simulated locally you can reference this code.

| makeresults

| eval

appName="app1",

resultCode=500

| append

[

| makeresults

| eval

appName="app1",

resultCode=500

]

| append

[

| makeresults

| eval

appName="app1",

resultCode=split("404|404|200|404|500|404|200", "|")

]

| append

[

| makeresults

| eval

appName="app2",

resultCode=split("200|200|404|200", "|")

]

| append

[

| makeresults

| eval

appName="app3",

resultCode=split("404|200|200|200|500|404|200", "|")

]

| mvexpand resultCode

``` below is the relevant SPL ```

| stats

count as count

by appName, resultCode

| eventstats

sum(count) as app_total_count

by appName

| eval

pct_of_total=round(('count'/'app_total_count')*100, 3)

| chart

values(pct_of_total) as pct_of_total

over appName

by resultCode

| fillnull value=0.000

Edit:

And to use this at scale (more that 10 unique status_codes) you would need to add a limit=<number> parameter to the chart command, otherwise it will populate another values named "OTHER" after 10 unique values are transformed.

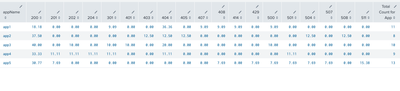

This is what a bigger table would look like

Notice there is also another field at the right of the table to give more context at the total count of status codes seen for each application so that percentages can be inferred to a total count if desired.

Code to do this would look something like this. (Added comments for each line to add detail)

<base_search>

``` simple count of events for each unique combo of appName/resultCode values ```

| stats

count as count

by appName, resultCode

``` sum up the counts accross each unique appName ```

| eventstats

sum(count) as app_total_count

by appName

``` add aditional rows as a Total count for each unique appName value (This is optional since this step is just providing more context to the final table) ```

| appendpipe

[

| stats

sum(count) as count

by appName

| eval

resultCode="Total",

app_total_count='count'

]

``` calculate percentage of occurrence for a particular resultCode across each unique appName

with the exception of the Total rows, for these we want to just carry over the total app count (for context)

```

| eval

pct_of_total=if(

'resultCode'=="Total",

'count',

round(('count'/'app_total_count')*100, 2)

)

```

This chart command builds a sort contingency table, using the derived 'pct_of_total' values and putting them in there respective cell when

appName values are in the left-most column and using the values from resultCode as the table header.

Note: If a specific resultCode that showed for one app that did not show for another, then charting this way will leave a blank slot in that cell.

To get around this we will use a fillnull command in the next step of the search.

Adding the limit=<number> parameter sets your upper limit of the number of columns to generate for each unique resultCode value. This defaults to 10 if not specified and for resultCode values exceeding the top 10 will fall into the bucket "OTHER" and be tabled that way.

Example: If you only want to see the top 3 resultCode values in the table and dont want an additional column "OTHER", you have the option to also set 'useother=false' along with 'limit=3'

```

| chart limit=100 useother=true

sum(pct_of_total)

over appName,

by resultCode

``` renaming the "Total" column name to something more descriptive ```

| rename

Total as "Total Count for App"

``` fill any blank cells with 0 as this is the implied percentage if it is null ```

| fillnull value=0.00

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I think this SPL should do what you are asking.

<base_search>

| stats

count as count

by appName, resultCode

| eventstats

sum(count) as app_total_count

by appName

| eval

pct_of_total=round(('count'/'app_total_count')*100, 3)

| chart

sum(pct_of_total) as pct_of_total

over appName

by resultCode

| fillnull value=0.000

Using dummy data on my local instance I was able to get the output to look like this.

where each cell value represents the percentage of events that occurred with that status code for each app.

For a full overview of how I simulated locally you can reference this code.

| makeresults

| eval

appName="app1",

resultCode=500

| append

[

| makeresults

| eval

appName="app1",

resultCode=500

]

| append

[

| makeresults

| eval

appName="app1",

resultCode=split("404|404|200|404|500|404|200", "|")

]

| append

[

| makeresults

| eval

appName="app2",

resultCode=split("200|200|404|200", "|")

]

| append

[

| makeresults

| eval

appName="app3",

resultCode=split("404|200|200|200|500|404|200", "|")

]

| mvexpand resultCode

``` below is the relevant SPL ```

| stats

count as count

by appName, resultCode

| eventstats

sum(count) as app_total_count

by appName

| eval

pct_of_total=round(('count'/'app_total_count')*100, 3)

| chart

values(pct_of_total) as pct_of_total

over appName

by resultCode

| fillnull value=0.000

Edit:

And to use this at scale (more that 10 unique status_codes) you would need to add a limit=<number> parameter to the chart command, otherwise it will populate another values named "OTHER" after 10 unique values are transformed.

This is what a bigger table would look like

Notice there is also another field at the right of the table to give more context at the total count of status codes seen for each application so that percentages can be inferred to a total count if desired.

Code to do this would look something like this. (Added comments for each line to add detail)

<base_search>

``` simple count of events for each unique combo of appName/resultCode values ```

| stats

count as count

by appName, resultCode

``` sum up the counts accross each unique appName ```

| eventstats

sum(count) as app_total_count

by appName

``` add aditional rows as a Total count for each unique appName value (This is optional since this step is just providing more context to the final table) ```

| appendpipe

[

| stats

sum(count) as count

by appName

| eval

resultCode="Total",

app_total_count='count'

]

``` calculate percentage of occurrence for a particular resultCode across each unique appName

with the exception of the Total rows, for these we want to just carry over the total app count (for context)

```

| eval

pct_of_total=if(

'resultCode'=="Total",

'count',

round(('count'/'app_total_count')*100, 2)

)

```

This chart command builds a sort contingency table, using the derived 'pct_of_total' values and putting them in there respective cell when

appName values are in the left-most column and using the values from resultCode as the table header.

Note: If a specific resultCode that showed for one app that did not show for another, then charting this way will leave a blank slot in that cell.

To get around this we will use a fillnull command in the next step of the search.

Adding the limit=<number> parameter sets your upper limit of the number of columns to generate for each unique resultCode value. This defaults to 10 if not specified and for resultCode values exceeding the top 10 will fall into the bucket "OTHER" and be tabled that way.

Example: If you only want to see the top 3 resultCode values in the table and dont want an additional column "OTHER", you have the option to also set 'useother=false' along with 'limit=3'

```

| chart limit=100 useother=true

sum(pct_of_total)

over appName,

by resultCode

``` renaming the "Total" column name to something more descriptive ```

| rename

Total as "Total Count for App"

``` fill any blank cells with 0 as this is the implied percentage if it is null ```

| fillnull value=0.00

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks!!! That is EXACTLY what I was trying to do and just was not getting. The solution makes complete sense and is cleaner than I expected. Thanks for taking the time to give all the information/help!

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @xxxxxxxxxxxxxx ,

try the chart command (https://docs.splunk.com/Documentation/Splunk/9.1.2/SearchReference/Chart😞

<initial search that returns the above events>

| chart count OVER appName BY resultCodeCiao.

Giuseppe

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hey Giuseppe, thanks for the thought. I did try the chart command. The way you have it does show it more cleanly then I had in my initial question, but I still have the same question/problem of how I can calculate and show the values as the percentages. Everything I do with just chart is still showing the counts and not percents.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi

have you look at eventstats with chart?

r. Ismo

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hey, thanks for the feedback. Yes, I tried stats/eventstats. I can get the counts to show, but I don't see how I can get the query to count up the total per app, then use that to calculate the percent of each error per app. Everything I try, like the snips I showed in my question just don't seem to get me closer to being able to show percentages.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@dtburrows3 already shows you how you could combine those together.

1st count total with event stats and then calculate and present with chart.

Usually these will remember better when you need to learn those by yourself without just getting the correct answer 😉

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Generaly yes, If we were to just copypasta the correct solution here then it may have a hard time sticking for them.

I have found that learning by worked examples with detailed explanation for doing things a certain way tends to stick well.

(Provided that the questioner is actually interested in learning and improving their Splunk skills)

So hopefully the OP can takeaway some additional knowledge that can be applied elsewhere on their Splunk journey.

Also at the same time, they do not have to stress about meeting job deadlines trying to figure out the nudges in the correct direction.

I'm new here on the forums and don't quite know the etiquette yet.

Just trying to spread Splunk knowledge in a manner that I feel would be the most beneficial for me if I were posting a question here.

Happy Splunking!