Trouble getting a very basic search to work - Is th...

I'm using Cloudflare's Logpush feature to get log events into a Splunk index. The log events are all JSON, but apparently many records are sent in a single push. My problem is that if I search for "field=value", it only matches if the field is in the first record. If I search for just the value, the record is found, but I need Splunk to recognize the fields.

I attached a screenshot of the search results when I searched for just "60286da6ca69eb29". I want to find that record via "RayID=60286da6ca69eb29". Really, I just want that one record, which starts with the RayID field and ends with "WorkerSubrequest". If I search for "RayID=60286dc754aac4e8" (the first record), it matches the way I would expect.

So, the question is:

Is there a way to get just that portion of the log entry (i.e., just the portion that has "RayID=60286da6ca69eb29"), or is that impossible because of the way the records are put into Splunk?
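As a minimal sketch of what the question is after, assuming each push is newline-delimited JSON (one object per line, which a later reply from Cloudflare confirms), the Python below splits a raw push on newlines, parses each line as its own record, and returns only the one whose RayID matches. The helper name `find_record` and the sample payload are hypothetical; only the RayID values and the WorkerSubrequest field name come from this post.

```python
import json
from typing import Optional


def find_record(raw_payload: str, ray_id: str) -> Optional[dict]:
    """Return the JSON record whose RayID equals ray_id, or None.

    Assumes one JSON object per line (newline-delimited JSON);
    blank lines are skipped.
    """
    for line in raw_payload.splitlines():
        line = line.strip()
        if not line:
            continue
        record = json.loads(line)
        if record.get("RayID") == ray_id:
            return record
    return None


# Hypothetical two-record push; only the RayID values come from the post.
payload = (
    '{"RayID":"60286dc754aac4e8","WorkerSubrequest":false}\n'
    '{"RayID":"60286da6ca69eb29","WorkerSubrequest":true}\n'
)
print(find_record(payload, "60286da6ca69eb29"))
```

Inside Splunk, the equivalent fix is to have each line indexed as its own event, so that search-time field extraction applies to every record rather than only the first.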

As I see it, that event-breaking setting isn't remotely close. It should be something like

`([\r\n]+)\{"RayID"`

assuming each event starts with `{"RayID`.

If this reply helps you, Karma would be appreciated.
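Per the LINE_BREAKER description in props.conf.spec, wherever the regex matches, the start of the first capturing group ends the previous event, the end of that group starts the next event, and the group's own text is discarded. With `([\r\n]+)\{"RayID"`, the newlines are dropped and `{"RayID"` stays at the start of the next event. The Python sketch below approximates only that rule (it ignores line merging, truncation, and timestamp handling) to show how a two-record push would be split; the function name and sample data are hypothetical.

```python
import re
from typing import List


def split_like_line_breaker(raw: str, line_breaker: str) -> List[str]:
    """Approximate Splunk LINE_BREAKER semantics (illustration only).

    Wherever the pattern matches, the start of capture group 1 ends the
    previous event, the end of group 1 starts the next event, and the
    group-1 text itself is discarded.
    """
    pattern = re.compile(line_breaker)
    events = []
    start = 0
    for match in pattern.finditer(raw):
        events.append(raw[start:match.start(1)])
        start = match.end(1)
    events.append(raw[start:])
    # Drop empty fragments, e.g. one produced by a trailing newline.
    return [e for e in events if e]


payload = (
    '{"RayID":"60286dc754aac4e8","WorkerSubrequest":false}\n'
    '{"RayID":"60286da6ca69eb29","WorkerSubrequest":true}\n'
)
for event in split_like_line_breaker(payload, r'([\r\n]+)\{"RayID"'):
    print(event.rstrip())
```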

The problem with the search is caused by improper onboarding of the data. Even if many records are sent in a single push, Splunk should have no problem treating them as separate events if the properties are set correctly. If you share the props.conf stanza for that sourcetype, someone here should be able to help fix it.
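For illustration, a stanza of the kind this thread converges on could be rendered as below. The Python uses the standard-library configparser only to print props.conf-style text; the stanza name `cloudflare:json` is a hypothetical placeholder for whatever sourcetype your input assigns, and `KV_MODE = json` (search-time JSON extraction) is an assumption rather than something stated in the thread. `SHOULD_LINEMERGE = false` keeps Splunk from re-merging the lines that LINE_BREAKER separates.

```python
import configparser
import io


def render_props_stanza(sourcetype: str, line_breaker: str) -> str:
    """Render a props.conf-style stanza as text (hypothetical values)."""
    # interpolation=None so regex characters and '%' are kept literally.
    config = configparser.ConfigParser(interpolation=None)
    # Preserve upper-case setting names; configparser lower-cases keys by default.
    config.optionxform = str
    config[sourcetype] = {
        # Break events only where LINE_BREAKER matches; no re-merging afterwards.
        "SHOULD_LINEMERGE": "false",
        "LINE_BREAKER": line_breaker,
        # Extract JSON fields at search time (assumption for this sketch).
        "KV_MODE": "json",
    }
    buf = io.StringIO()
    config.write(buf)
    return buf.getvalue()


print(render_props_stanza("cloudflare:json", r"([\r\n]+)"))
```

In practice the resulting settings would go in the sourcetype's props.conf or be entered through the source type editor in Splunk Web; as noted later in the thread, on Splunk Cloud they take some time to propagate and apply only to newly indexed data.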

Keep in mind I am new to Splunk, but I definitely see what you are saying. It's crazy that the sourcetype from Cloudflare is so wrong (this is what comes with the Cloudflare App). Having said that, it appears to me that the basic `([\r\n]+)` should suffice, since as far as I can tell from the output, there is a newline at the end of each event.

I tried that, and your suggestion, but neither seemed to work. Is there anything else I need to do after changing the regular expression in the sourcetype for it to take effect? Or does it take some time before the change is reflected in the index? And yes, I am looking at data that was imported after I made the change.

I want to add a comment about the Cloudflare App. The LINE_BREAKER setting in the app was designed assuming that JSON logs start with either Timestamp or CacheCacheStatus. But since Cloudflare log settings can be customized, these assumptions do not always hold, so be careful when you update your Cloudflare log settings on AWS. Also, if it works, please keep the LINE_BREAKER that @richgalloway suggested; a plain newline may not be enough.

@scelikok I asked the product manager (I work for Cloudflare), and he confirmed that there will always be a newline character between log entries and that the order of the fields is never guaranteed, so there is no reason to try to match on a field after the newline character.
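To illustrate why anchoring on a field name is fragile when field order is not guaranteed, this Python sketch applies the same approximation of LINE_BREAKER semantics (group 1 discarded, events split at its boundaries) to a hypothetical push whose second record happens to begin with ClientIP instead of RayID. The helper `split_events` is a hypothetical name, defined here so the example stands on its own; the IP addresses are from the 192.0.2.0/24 documentation range.

```python
import json
import re
from typing import List


def split_events(raw: str, line_breaker: str) -> List[str]:
    """Approximate LINE_BREAKER: group 1 is discarded and marks the boundary."""
    events, start = [], 0
    for match in re.finditer(line_breaker, raw):
        events.append(raw[start:match.start(1)])
        start = match.end(1)
    events.append(raw[start:])
    # Drop empty fragments, e.g. one produced by a trailing newline.
    return [e for e in events if e]


# Hypothetical push: the second record happens to start with ClientIP.
raw = (
    '{"RayID":"a1","ClientIP":"192.0.2.1"}\n'
    '{"ClientIP":"192.0.2.2","RayID":"b2"}\n'
)
anchored = split_events(raw, r'([\r\n]+)\{"RayID"')
plain = split_events(raw, r'([\r\n]+)')
print(len(anchored), len(plain))  # 1 2

# With the plain newline breaker, every event is a standalone JSON object.
for event in plain:
    print(json.loads(event)["RayID"])
```

The anchored pattern finds no boundary, so both records land in one event, which is consistent with the symptom in the original question; the plain newline pattern yields one parseable JSON object per event.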

@kcantrel, thank you for the information. 👍

Once you change the settings in a sourcetype, it takes time for them to propagate to the rest of the instances.

The settings only affect new data. Events already indexed will not change.

Thanks for the info. Can you quantify "takes time"? It's been over an hour since I made a change that should have fixed the problem, but I'm not seeing it. And, yes, I am looking at new data coming in. Thanks!

"Takes time" is intentionally vague since Splunk Cloud doesn't say how long it will take. I would say an hour is long enough, though.

Okay, so something else must be causing my existing HEC to not use the updated sourcetype. I created a new HEC, started using it, and got the desired results. I'm going to stick with the basic "each event is on a separate line" logic, since as far as I have seen that has been the case, and trying to decide what should come after the "{" is non-deterministic.

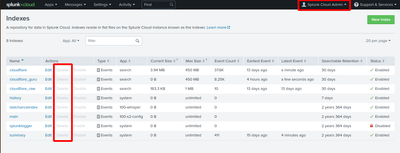
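If you want to exercise a new HEC token independently of Logpush, one option is to post a small newline-delimited test payload to the HEC raw endpoint (`/services/collector/raw`) and then search to see whether each line became its own event. The Python sketch below only builds the request with the standard library and does not send it. The base URL, token, index, and sourcetype are placeholders (Splunk Cloud stacks have their own HEC URL), the helper name is hypothetical, and passing sourcetype and index as query parameters is an assumption to check against the HEC documentation for your Splunk version.

```python
import urllib.parse
import urllib.request


def build_hec_raw_request(base_url: str, token: str, sourcetype: str,
                          index: str, payload: str) -> urllib.request.Request:
    """Build (but do not send) a POST to the HEC raw endpoint."""
    query = urllib.parse.urlencode({"sourcetype": sourcetype, "index": index})
    url = f"{base_url.rstrip('/')}/services/collector/raw?{query}"
    return urllib.request.Request(
        url,
        data=payload.encode("utf-8"),
        # HEC expects the token in an "Authorization: Splunk <token>" header.
        headers={"Authorization": f"Splunk {token}"},
        method="POST",
    )


# Placeholder values; substitute your own HEC URL, token, index, sourcetype.
req = build_hec_raw_request(
    "https://hec.example.com:8088",
    "00000000-0000-0000-0000-000000000000",
    "cloudflare:json",
    "cloudflare_raw",
    '{"RayID":"test-1"}\n{"RayID":"test-2"}\n',
)
print(req.get_method(), req.full_url)
```

Calling `urllib.request.urlopen(req)` would actually send it.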

My final question: how can I delete an index? I created a new user and gave it all the roles, including "can_delete", but when I go to the index page, the "delete" link is greyed out.

You can delete an index in Splunk Cloud from the Settings->Indexes screen. Anyone with the sc_admin role should be able to do it.

The can_delete role/privilege controls use of the delete command in SPL. That command hides events from searches, but it doesn't actually delete anything.

I've logged in as sc_admin, which of course has the sc_admin role, but when I go to the Settings->Indexes screen, this is what I see:

Some indexes cannot be deleted. That includes the standard indexes (main, _*, etc.) and those provided by Splunk Cloud. They can be identified by the "app" column: "system" marks the built-ins, and "100*" marks those provided by Splunk Cloud.

The others should be deletable unless they were configured by the default/indexes.conf file in an app.

Yes, I understand all that, but if you look at the screenshot, you can see that ALL of my indexes are greyed out. That includes the system ones and the ones I created (e.g., cloudflare_raw).

Bro, can you share your setup with me? I'm going through the same problem. Did you set indexed extractions to JSON, and did you put `([\r\n]+)\{"ClientIP"` in the line breaker? Mine indexes several clients in the same package, and I can't search for one if it's not the first.

This thread is more than 2 years old with an accepted solution. For better chances at getting an answer, please post a new question.

If you created it using the UI then you should be able to delete it using the UI. Consider opening a support case.
