
Transaction command over a large dataset


Hi all,

Hoping someone can give me some pointers on how to solve this problem:

I run a transaction command over the last two weeks of data, which returns about 20,000 events. For about 85 percent of them, transaction combines the events perfectly.

For the rest, however, there are still duplicate IDs, meaning that the transaction command has not combined them properly.

I think this is caused by the memory limits in limits.conf. These could be increased, but it seems there should be smarter options.

For example, appending new events to an existing lookup with a transaction command, if that is possible.

Or perhaps there is a better way of combining the information without using transaction at all.

The difficulty with this dataset is that a transaction can span the entire two weeks, so I cannot restrict it with maxspan. Setting maxevents does not improve performance either, because the size of the transactions varies a lot.
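The "append to an existing lookup" idea can be sketched without transaction at all: aggregate only the new events per ID and merge them into the rows already stored. The Python below is a minimal model of that merge logic under stated assumptions, not Splunk code; `merge_into_lookup`, `existing` and `new_events` are hypothetical names, and each event is assumed to be a dict with an ID field. In SPL the comparable pattern would presumably be a stats over only the new time range, followed by appending the stored lookup (inputlookup has an `append=true` option), a second stats by the ID, and outputlookup.

```python
from collections import defaultdict


def merge_into_lookup(existing, new_events, key="ID_NR", mv_fields=("SYSMODTIME",)):
    """Merge newly arrived events into per-ID rows that were stored earlier.

    existing:   dict mapping ID -> {field: iterable of values} (the old lookup)
    new_events: iterable of dicts, one per raw event (hypothetical layout)
    Multivalue fields are unioned, mirroring stats values() (distinct values only).
    """
    merged = defaultdict(lambda: defaultdict(set))
    # Start from the rows already stored in the lookup.
    for id_value, row in existing.items():
        for field, values in row.items():
            merged[id_value][field].update(values)
    # Fold in the new events. Only the ID decides which row an event joins,
    # so there is no time window (maxspan) and nothing gets evicted.
    for event in new_events:
        row = merged[event[key]]  # creates the row even if no mv field is present
        for field in mv_fields:
            if field in event:
                row[field].add(event[field])
    # Return plain dicts with sorted value lists, ready to be written back.
    return {
        id_value: {field: sorted(values) for field, values in row.items()}
        for id_value, row in merged.items()
    }
```

Because the merge only keys on the ID, re-running it on each new batch gives the same result as aggregating the whole period at once, as long as the aggregation is a set union like values().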

Cheers,

Roelof

The minimal search:

index= sourcetype= earliest=@d-14d

| fields ...

| transaction keeporphans=True keepevicted=True

| outputlookup .csv

This is the full minimal search ^

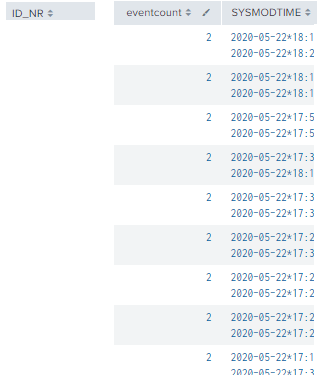

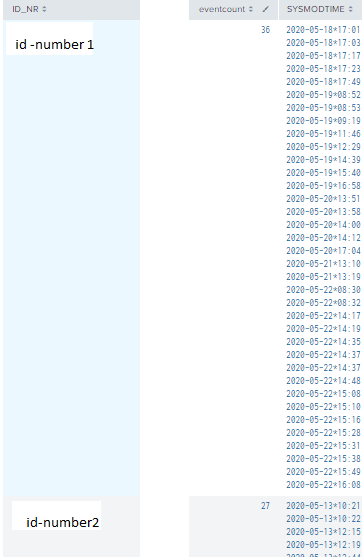

The two images above show example snippets from the correctly combined dataset.

(The ID number has been removed; it is just an integer on which the transaction is performed.)

SYSMODTIME is a multivalue field, and there are a couple more multivalue fields in the complete dataset.


index= sourcetype= earliest=@d-14d

| stats count as eventcount values(SYSMODTIME) as SYSMODTIME by ID_NR

| outputlookup .csv

If your SYSMODTIME values are already in order, you can use values() rather than list().

You still need to keep limits.conf in mind.
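For readers less familiar with stats, the Python below models what that stats line computes: one row per ID_NR with an event count and the distinct SYSMODTIME values. It illustrates the semantics only and is not Splunk code; the function names are hypothetical. values() returns distinct values in lexicographic order, while list() keeps every value in input order, duplicates included (and list() is capped, by default at 100 values, via list_maxsize in limits.conf). That is why values() is a safe substitute only when the values sort into the order you want.

```python
from collections import defaultdict


def stats_by_id(events, key="ID_NR", field="SYSMODTIME"):
    """Model of: stats count as eventcount values(field) as field by key."""
    counts = defaultdict(int)
    values = defaultdict(set)
    for event in events:
        k = event[key]
        counts[k] += 1  # count as eventcount
        if field in event:
            values[k].add(str(event[field]))  # values(): distinct values only
    # values() hands back its distinct values in lexicographic (string) order.
    return {k: {"eventcount": counts[k], field: sorted(values[k])} for k in counts}


def list_by_id(events, key="ID_NR", field="SYSMODTIME"):
    """Model of list(field) by key: every value, in input order, duplicates kept."""
    out = defaultdict(list)
    for event in events:
        if field in event:
            out[event[key]].append(str(event[field]))
    return dict(out)
```

Since timestamps like "2020-01-01 12:00" sort lexicographically in chronological order, values() keeps them in time order here; that is the condition behind "if your SYSMODTIME is in order".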


Thanks for your feedback, but I need four multivalue fields, and I don't see how stats could do that. Ideally, I would also like to keep all the single-value fields for each unique id_number, which are 20 more fields.


stats can aggregate many fields at once.

To control the order, use sort, or append a sort key to the values with eval (using the . concatenation operator), and then use stats values().
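One reading of the suggestion above: because values() sorts lexicographically, you can make that sort follow time by using eval to prepend a sortable key (for example the zero-padded epoch time) to each value, aggregate with values(), and strip the key off afterwards. The Python below models that idea only; the `|` separator, the padding width, the function name and the STATUS field are assumptions for illustration, not part of the thread.

```python
def ordered_values(events, key="ID_NR", field="SYSMODTIME", time_field="_time",
                   width=12, sep="|"):
    """Model of 'prefix with a sort key, then values()': per-key values in time order."""
    prefixed = {}
    for event in events:
        if field not in event:
            continue
        # eval step (hypothetical): zero-padded epoch + separator + value, so that
        # string (lexicographic) order matches chronological order.
        sortable = f"{int(event[time_field]):0{width}d}{sep}{event[field]}"
        prefixed.setdefault(event[key], set()).add(sortable)
    result = {}
    for k, vals in prefixed.items():
        # values() step: distinct strings in lexicographic order.
        ordered = sorted(vals)
        # Clean-up step: drop the sort key again, keeping only the value.
        result[k] = [v.split(sep, 1)[1] for v in ordered]
    return result
```

A side effect worth knowing: because the time is part of the string, the same value recorded at two different times is kept twice, whereas plain values() would collapse it.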


This seems to work well, to4kawa, thanks! Here is an example of what the search looks like now:

|stats count values(SYSMODTIME) as SYSMODTIME values(mvfield2) as mvfield2 values(mv3) as etc values(singlevaluefield) by id_number

|eval test=mvindex(SYSMODTIME,1)

This produces a table with one row per unique id_number, containing multiple multivalue fields as well as the single-value fields.
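As a rough model of that search: a single stats pass can carry any number of fields, each aggregated by values() into a multivalue field (or a single value when only one distinct value exists), and mvindex() then picks one element by zero-based position, so mvindex(SYSMODTIME,1) is the second value and a negative index counts from the end. The Python below only illustrates those semantics; apart from SYSMODTIME and id_number, the field names are placeholders, as in the post, and the function names are hypothetical.

```python
def stats_values_by(events, key, fields):
    """Model of: stats count values(f1) as f1 values(f2) as f2 ... by key."""
    counts, seen = {}, {}
    for event in events:
        k = event[key]
        counts[k] = counts.get(k, 0) + 1
        bucket = seen.setdefault(k, {f: set() for f in fields})
        for f in fields:
            if f in event:
                bucket[f].add(str(event[f]))
    # values(): distinct values in lexicographic order. A one-element list stands
    # in for a single-value field, an empty list for a null field.
    return {
        k: {"count": counts[k], **{f: sorted(bucket[f]) for f in fields}}
        for k, bucket in seen.items()
    }


def mvindex(values, index):
    """Model of two-argument mvindex(): zero-based, negative counts from the end,
    out of range gives None (null)."""
    if -len(values) <= index < len(values):
        return values[index]
    return None
```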


Your minimal search unfortunately lost a lot of code when it was posted.

In general, transaction is not the best tool in most of the cases where it is used. If you explain the use case, what the underlying data looks like, and what you get as the result of the transaction, we might be able to give you a code model that works 100% of the time and uses less machine time as well.

The Splunk soup model is what to aim for here, but without even pseudocode for your search, we can't narrow it down much.
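A minimal sketch of the soup idea, assuming the usual description of the pattern: pull all relevant events into one search instead of using join or transaction, normalize the key with eval (for example coalesce() when sources name the key differently), and let a single stats by that key combine everything. The Python below models that pattern only; the two key names, the status field and the function name are hypothetical.

```python
def soup(events, key_aliases=("ID_NR", "id_number"), fields=("SYSMODTIME", "status")):
    """Model of: (search A) OR (search B) | eval key=coalesce(...) | stats values(...) by key."""
    combined = {}
    for event in events:
        # eval key=coalesce(ID_NR, id_number): the first non-null alias wins.
        key = next((event[a] for a in key_aliases if event.get(a) is not None), None)
        if key is None:
            continue  # stats ... by key drops events where the key is null
        row = combined.setdefault(key, {f: set() for f in fields})
        for f in fields:
            if event.get(f) is not None:
                row[f].add(str(event[f]))
    # One row per key, each field as sorted distinct values (as values() would give).
    return {k: {f: sorted(v) for f, v in row.items()} for k, row in combined.items()}
```

The design point is that the grouping cost grows with the number of distinct keys rather than with how far apart in time the related events are, which is the constraint that made maxspan unusable here.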


Hi @DalJeanis,

What is the Splunk soup model?

I searched Google, but I can't find anything related.

https://answers.splunk.com/answers/561130/how-to-join-two-tables-where-the-key-is-named-diff.html

Is this it?


I've edited the original question to include some sample data; I hope this provides enough information.