Join the Conversation

- Find Answers

- :

- Using Splunk

- :

- Splunk Search

- :

- Max/Min/Avg TPS

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi all,

I have this query:

| timechart span=1s count AS TPS

| eventstats max(TPS) as MaxPeakTPS

| stats avg(TPS) as avgTPS first(peakTPS) as peakTPS first(peakTime) as peakTime

| fieldformat peakTime=strftime(peakTime,"%x %X")

This currently outputs Max TPS when Max TPS took place as well as the AVG TPS. I was wondering if it's possible to also display Min TPS and when that took place?

TIA

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I assume that there is a typos in your MaxPeakTPS in the eventstats command and your use of peakTPS in the following stats and also the use of peakTime, which does not exist as a field.

You can do this

| timechart span=1s count AS TPS

``` Calculate min and max TPS ```

| eventstats max(TPS) as max_TPS min(TPS) as min_TPS

``` Now work out average TPS, actual min and max TPS and then the first

occurrence of the min/max TPS ```

| stats avg(TPS) as avgTPS values(*_TPS) as *_TPS

min(eval(if(TPS=max_TPS, _time, null()))) as maxTime

min(eval(if(TPS=min_TPS, _time, null()))) as minTime

| fieldformat maxTime=strftime(maxTime,"%x %X")

| fieldformat minTime=strftime(minTime,"%x %X")The min(eval... statements just look for the first _time when TPS is either min or max to get the earliest time when these occurred.

Note the use of field naming conventions min_TPS/max_TPS that allows the use of wildcards in the stats.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I assume that there is a typos in your MaxPeakTPS in the eventstats command and your use of peakTPS in the following stats and also the use of peakTime, which does not exist as a field.

You can do this

| timechart span=1s count AS TPS

``` Calculate min and max TPS ```

| eventstats max(TPS) as max_TPS min(TPS) as min_TPS

``` Now work out average TPS, actual min and max TPS and then the first

occurrence of the min/max TPS ```

| stats avg(TPS) as avgTPS values(*_TPS) as *_TPS

min(eval(if(TPS=max_TPS, _time, null()))) as maxTime

min(eval(if(TPS=min_TPS, _time, null()))) as minTime

| fieldformat maxTime=strftime(maxTime,"%x %X")

| fieldformat minTime=strftime(minTime,"%x %X")The min(eval... statements just look for the first _time when TPS is either min or max to get the earliest time when these occurred.

Note the use of field naming conventions min_TPS/max_TPS that allows the use of wildcards in the stats.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

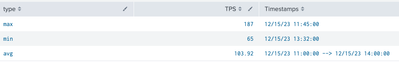

Tried this out and came back with this. Format might be a little different than what you asked for but I think tells the same story.

| bucket span=1m _time

| stats

count as TPS

by _time

| eventstats

min(TPS) as min_TPS,

max(TPS) as max_TPS

| foreach *_TPS

[

| eval

<<MATCHSTR>>_TPS_epoch=if(

'TPS'=='<<MATCHSTR>>_TPS',

mvappend(

'<<MATCHSTR>>_TPS_epoch',

'_time'

),

'<<MATCHSTR>>_TPS_epoch'

)

]

| stats

avg(TPS) as avg_TPS,

first(*_TPS) as *_TPS,

first(*_TPS_epoch) as *_TPS_epoch

| eval

avg_TPS=round('avg_TPS', 2)

| foreach *_TPS_epoch

[

| eval

<<MATCHSTR>>_TPS_timestamps=case(

mvcount('<<FIELD>>')==1, strftime('<<FIELD>>', "%x %X"),

mvcount('<<FIELD>>')>1, mvmap('<<FIELD>>', strftime('<<FIELD>>', "%x %X"))

),

<<MATCHSTR>>_TPS_json=json_object(

"type", "<<MATCHSTR>>",

"TPS", '<<MATCHSTR>>_TPS',

"Timestamps", '<<MATCHSTR>>_TPS_timestamps'

),

combined_TPS_json=mvappend(

'combined_TPS_json',

'<<MATCHSTR>>_TPS_json'

)

]

| fields + combined_TPS_json, avg_TPS

| addinfo

| eval

search_time_window_end=strftime(info_max_time, "%x %X"),

search_time_window_start=strftime(info_min_time, "%x %X"),

avg_TPS_time_window='search_time_window_start'." --> ".'search_time_window_end'

| eval

combined_TPS_json=mvappend(

'combined_TPS_json',

json_object(

"type", "avg",

"TPS", 'avg_TPS',

"Timestamps", 'avg_TPS_time_window'

)

)

| mvexpand combined_TPS_json

| fromjson combined_TPS_json

| fields - combined_TPS_json

| fields + type, TPS, Timestamps

Output should look something like this.

You should also be able to change the time bucket span form 1m back to 1s since that is how it was setup in your initial query.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @WanLohnston you can try something like this :

| timechart span=1d count(myfield) as nb_myfield | eventstats min(myfield) as min_fields max(myfield) as max_fields avg(myfield) as moy_fields

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi!

Thanks for taking the time, sadly this didn't work out for me.

Ideally if I can keep the same format of:

| timechart span=1s count AS TPS

| eventstats max(TPS) as peakTPS

| eval peakTime=if(peakTPS==TPS,_time,null())

| stats avg(TPS) as avgTPS first(peakTPS) as peakTPS first(peakTime) as peakTime

| fieldformat peakTime=strftime(peakTime,"%x %X")

With the addition of a couple lines for Min TPS and when it took place that would be ideal.