Re: Match Common Values from Multiline Fields from...

Hello, thank you for taking the time to read and consider my question.

I'm trying to integrate a .json file containing a list of suspicious domains into a scheduled search that compares that data with a field containing the destination URLs of web traffic.

I've already designated an index and sourcetype for the suspicious URLs. The list will be updated daily, so it is not a static file with predictable or constant values.

What I'm looking to do now is basically ingest the dest_hostname field from the web traffic as well as the bad_domain field from the .json file, and find any values the two fields have in common.

Here's an example of the data and what I would like to accomplish:

| dest_hostname | bad_domain | matched_url |
|---|---|---|
| facebook.com | reddit.com | amazon.com |
| amazon.com | amazon.com | splunk.com |
| google.com | splunk.com | |
| splunk.com | nfl.com | |
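Put another way, the matched_url column is just the set of values that appear in both fields. As a rough illustration only, here is the intended result as a set intersection in Python. Plain lists stand in for the two Splunk fields, and the values are copied from the table above.

```python
# Values copied from the example table; in Splunk these would be field values
# coming from two different sources, not Python lists.
dest_hostname = ["facebook.com", "amazon.com", "google.com", "splunk.com"]
bad_domain = ["reddit.com", "amazon.com", "splunk.com", "nfl.com"]


def matched_urls(dest, bad):
    """Return the values present in both lists, sorted for stable output."""
    return sorted(set(dest) & set(bad))


print(matched_urls(dest_hostname, bad_domain))  # ['amazon.com', 'splunk.com']
```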

Once again, thank you for taking the time to read this, and any ideas or solutions would be greatly appreciated!

---


Hello @cfloquet

Try the map command here. First, table both fields you want to compare, say bad_domain and dest_hostname, from their respective files (I used .csv files).

Then the map command passes in the bad_domain field as $bad_domain$, looks for a match in the dest_hostname field of "desthostnames.csv", and displays the matching result.

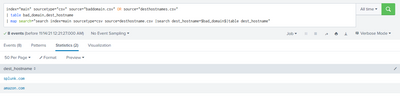

index="main" sourcetype="csv" (source="baddomain.csv" OR source="desthostnames.csv")

| table bad_domain,dest_hostname

| map search="search index=main sourcetype=csv source=desthostnames.csv | search dest_hostname=$bad_domain$ | table dest_hostname"

Note: The map command consumes more resources and takes longer to run, because it starts a separate search for every input row.
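To see why map gets expensive, here is a toy Python model (not Splunk code) that only counts how many searches each approach starts. Map launches one search per input row, on top of the search that produced the rows. A subsearch-based filter needs one subsearch plus one main search. The lists reuse the example values from the question and are purely illustrative.

```python
dest_hostnames = ["facebook.com", "amazon.com", "google.com", "splunk.com"]
bad_domains = ["reddit.com", "amazon.com", "splunk.com", "nfl.com"]


def map_style(bad, events):
    """Model of map: the outer search plus one new search per input row."""
    searches = 1  # the search that produced the rows fed into map
    hits = []
    for value in bad:
        searches += 1  # map starts a separate search for this row
        hits.extend(e for e in events if e == value)
    return hits, searches


def subsearch_style(bad, events):
    """Model of a subsearch: collect the values once, then filter in one pass."""
    wanted = set(bad)  # the subsearch runs once
    hits = [e for e in events if e in wanted]  # the main search runs once
    return hits, 2


print(map_style(bad_domains, dest_hostnames))        # (['amazon.com', 'splunk.com'], 5)
print(subsearch_style(bad_domains, dest_hostnames))  # (['amazon.com', 'splunk.com'], 2)
```

With N input rows, the map variant starts N + 1 searches, while the subsearch variant stays at two regardless of N.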

If this helps, please give an upvote 🙂

Happy Splunking 🙂

---

Argh! No.

Don't use map if you can avoid it, especially for big sets of results to iterate over.

Map spawns a separate search for every single result, so it's very heavy on the Splunk environment (especially in terms of the number of spawned searches).

If I understand the OP correctly, he simply wants to dynamically build a set of conditions based on the results of a remote service query, saved into an index as a set of events.

You can simply use a straight subsearch, which will by default be rendered into an OR-ed set of conditions:

<your base search conditions> [ search <your_feed_index> | rename bad_domain as dest_hostname | table dest_hostname ]
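Roughly speaking, the subsearch's result rows are turned into a search string. Each row becomes a parenthesised group, and the groups are OR-ed together. The Python function below is a simplified model of that rendering, written for illustration only. Splunk's exact spacing and quoting may differ, and the feed values are hypothetical.

```python
def render_subsearch(rows):
    """Simplified model of how subsearch rows become search conditions.

    Fields inside one row are AND-ed; separate rows are OR-ed.
    """
    clauses = []
    for row in rows:
        terms = " AND ".join(f'{field}="{value}"' for field, value in sorted(row.items()))
        clauses.append(f"( {terms} )")
    return "( " + " OR ".join(clauses) + " )"


# Hypothetical feed rows, then the equivalent of: rename bad_domain as dest_hostname
feed_rows = [{"bad_domain": "amazon.com"}, {"bad_domain": "splunk.com"}]
renamed = [{"dest_hostname": row["bad_domain"]} for row in feed_rows]

print(render_subsearch(renamed))
# ( ( dest_hostname="amazon.com" ) OR ( dest_hostname="splunk.com" ) )
```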

I'd probably "accelerate" it by creating a lookup and defining a scheduled search that populates the lookup from the feed-supplied index. That way you don't have to run an active search across your indexers every time; you just read the lookup with | inputlookup instead.
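The lookup idea can be modelled as two separate steps. An infrequent job snapshots the feed into a CSV file, and the frequent search only reads that file. In Splunk, the first step would typically be a scheduled search ending in | outputlookup, and the second would read the lookup (for example via | inputlookup, as suggested above). The Python below merely imitates that data flow with an in-memory CSV, and the users and hostnames are hypothetical.

```python
import csv
import io


def write_lookup(feed_values):
    """Stand-in for the scheduled search: dedup the feed and write it as CSV."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=["dest_hostname"])
    writer.writeheader()
    for value in sorted(set(feed_values)):
        writer.writerow({"dest_hostname": value})
    return buf.getvalue()


def read_lookup(csv_text):
    """Stand-in for reading the lookup: load the column into a set."""
    return {row["dest_hostname"] for row in csv.DictReader(io.StringIO(csv_text))}


# Hypothetical feed (with a duplicate) and hypothetical web traffic events.
lookup_csv = write_lookup(["amazon.com", "splunk.com", "amazon.com"])
bad = read_lookup(lookup_csv)
events = [
    {"dest_hostname": "amazon.com", "user": "alice"},
    {"dest_hostname": "google.com", "user": "bob"},
]
hits = [e for e in events if e["dest_hostname"] in bad]
print(hits)  # [{'dest_hostname': 'amazon.com', 'user': 'alice'}]
```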

---

Thank you both for your responses; however, I am still having some trouble with this search.

I not only need to match the values between the two multivalue fields, but I also need to combine that result with the existing data from the traffic logs so I can find the source IP, user, time, etc. of the matched event.

index=pan earliest=-10m@m latest=now vsys_name=Browser | dedup dest_ip

| append [ search index=threats earliest=-24h@h latest=now | rename ipv4{} as bad_ip | mvexpand bad_ip | dedup bad_ip ]

| eval ipv4List = mvappend(dest_ip,bad_ip)

| stats count by ipv4List

| search count>1

The search above is what I currently have working. It returns IPs found in both lists, but I don't see any way to return the fields associated with each of these addresses in dest_ip without running another subsearch using join to get that information, which is obviously less than ideal from a resource utilization standpoint.
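The count>1 trick works because each side is deduplicated first, so a value can only reach a count of 2 by appearing in both lists. A small Python model of that logic (hypothetical IPs, not Splunk code) also shows its limitation. The output keeps only the IP values, which is why the other pan fields such as user or _time are lost.

```python
from collections import Counter

# Hypothetical values; pan_dest_ips contains a repeat, as real traffic would.
pan_dest_ips = ["1.1.1.1", "172.168.1.1", "8.8.8.8", "10.0.5.1", "1.1.1.1"]
threat_ips = ["10.0.5.1", "1.1.1.1"]


def common_by_count(left, right):
    """Mimic dedup on both sides, then 'stats count by value | search count>1'."""
    counts = Counter(set(left)) + Counter(set(right))
    return sorted(value for value, n in counts.items() if n > 1)


print(common_by_count(pan_dest_ips, threat_ips))  # ['1.1.1.1', '10.0.5.1']
```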

I know that I am making this harder on myself than needed, but I am very new to SPL, so any syntax that would fully encompass what I am trying to accomplish would be greatly appreciated and rewarded with karma.

Any ideas or advice are not taken for granted, and thank you in advance.

---

I'm still a bit confused what you're trying to achieve 🙂

Can you please post a sample event(s) from both indexes and the desired result of your search?

---

@PickleRick thank you for your quick response, and I apologize for my lack of clarity.

Here is what I am trying to accomplish in a bit more detail:

index="pan" will have fields like: user, dest_ip, _time, bytes_in, source_ip, etc.

index="threat" has only one field: "ipv4{}"

I would like to combine these searches into one, where every 10-15 minutes or so it looks at strictly the dest_ip field in the "pan" index, as well as the "ipv4{}" field from the "threat" index, and finds matches between those two fields, and then returns those matches AS WELL AS the corresponding fields from index="pan" such as user, _time, bytes_in, etc.

For example:

Within index="pan":

| dest_ip | user | _time |
|---|---|---|
| 1.1.1.1 | santa | 4pm |
| 172.168.1.1 | tom | 2pm |
| 8.8.8.8 | daniel | 1pm |
| 10.0.5.1 | peter | 4am |

Within index="threat":

| ipv4{} |
|---|
| 10.0.5.1 |
| 1.1.1.1 |

Would return a table that looks like this:

| dest_ip | user | _time |
|---|---|---|
| 1.1.1.1 | santa | 4pm |
| 10.0.5.1 | peter | 4am |
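In other words, the desired result is a filter rather than an aggregation. Whole pan events are kept whenever their dest_ip appears in the threat list, so user and _time come along automatically. Here it is modelled in Python with the sample rows above (lists of dicts standing in for events; an illustration, not Splunk code):

```python
pan_events = [
    {"dest_ip": "1.1.1.1", "user": "santa", "_time": "4pm"},
    {"dest_ip": "172.168.1.1", "user": "tom", "_time": "2pm"},
    {"dest_ip": "8.8.8.8", "user": "daniel", "_time": "1pm"},
    {"dest_ip": "10.0.5.1", "user": "peter", "_time": "4am"},
]
threat_ips = {"10.0.5.1", "1.1.1.1"}  # the ipv4{} values


def filter_by_threats(events, bad_ips):
    """Keep complete events whose dest_ip is in the threat set."""
    return [event for event in events if event["dest_ip"] in bad_ips]


for event in filter_by_threats(pan_events, threat_ips):
    print(event["dest_ip"], event["user"], event["_time"])
# 1.1.1.1 santa 4pm
# 10.0.5.1 peter 4am
```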

I think this is much simpler than I am currently making it in my head, as it seems like a common search that many admins would need to implement. Once again, any help or guidance would be very much appreciated and showered with karma!

Thanks in advance

---

OK. If I understand correctly, the most straightforward solution would be simply:

index=pan [ search index=threat earliest=-24h | rename ipv4{} as dest_ip | table dest_ip ]

Check if this is what you need. If so, we'll think about whether we can get rid of the subsearch 🙂

---

@PickleRick

I have since found what I believe is the correct syntax for what you suggested:

index=pan [ search index=threat earliest=-24h@h latest=now | rename ipv4{} as dest_ip | mvexpand dest_ip | dedup dest_ip ] | table dest_ip

However, this search returns no results, even though both the dest_ip field in the pan index and the ipv4{} field in the threat index are populated with data.

Any ideas for where to go from here? It could be that I'm just barely missing something.

---

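OK. Since your ipv4{} is apparently a multivalue field, you might indeed need to do the expansion (dedup is not strictly needed, but it tidies up the results of the subsearch).

First, you moved the | table dest_ip out of the subsearch into the main search. That's wrong. In the main search it only limits the results of the whole search to just this field. Inside the subsearch it makes sure dest_ip is the only field returned as a condition. Without it, the other fields of the threat events (such as sourcetype) most likely end up in the conditions too, and events in index=pan won't match them.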
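One way to picture why the placement of table matters: each subsearch row becomes a group of field=value conditions that an event must satisfy all at once. The Python model below assumes, for illustration, that without table dest_ip the rows still carry a default field like sourcetype from the threat index. The sourcetype names are hypothetical.

```python
def event_matches(event, rows):
    """An event matches if it satisfies every field=value pair of at least one row
    (rows are OR-ed, fields within a row are AND-ed)."""
    return any(all(event.get(k) == v for k, v in row.items()) for row in rows)


# Hypothetical pan event and sourcetype names, for illustration only.
pan_event = {"dest_ip": "1.1.1.1", "user": "santa", "sourcetype": "pan:traffic"}

# table dest_ip inside the subsearch: only dest_ip is returned.
rows_with_table = [{"dest_ip": "1.1.1.1"}]
# table dest_ip moved outside: other fields of the threat events come along.
rows_without_table = [{"dest_ip": "1.1.1.1", "sourcetype": "threat:feed"}]

print(event_matches(pan_event, rows_with_table))     # True
print(event_matches(pan_event, rows_without_table))  # False
```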

To debug, you can try two things.

First, make sure your subsearch produces proper results. Just search for:

index=threat earliest=-24h@h latest=now | rename ipv4{} as dest_ip | mvexpand dest_ip | dedup dest_ip | table dest_ip

You should get a table of dest_ip values as a result. If you don't, there's something wrong with the subsearch.
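For reference, the rename | mvexpand | dedup | table chain can be modelled in a few lines of Python. Each multivalue ipv4{} entry is split into one row per value, repeats are dropped (keeping the first occurrence), and only a dest_ip field is kept. The threat events here are hypothetical; 203.0.113.7 is a documentation address.

```python
threat_events = [
    {"ipv4{}": ["10.0.5.1", "1.1.1.1"]},
    {"ipv4{}": ["1.1.1.1", "203.0.113.7"]},
]


def expand_and_dedup(events, src="ipv4{}", dst="dest_ip"):
    """Model of: rename src as dst | mvexpand dst | dedup dst | table dst."""
    seen = set()
    rows = []
    for event in events:
        for value in event.get(src, []):  # mvexpand: one row per value
            if value not in seen:  # dedup: keep the first occurrence only
                seen.add(value)
                rows.append({dst: value})  # table: keep just this one field
    return rows


print(expand_and_dedup(threat_events))
# [{'dest_ip': '10.0.5.1'}, {'dest_ip': '1.1.1.1'}, {'dest_ip': '203.0.113.7'}]
```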

If you do get proper results, try the base search on its own with some values you know are present in your data:

index=pan ( dest_ip=1.2.3.4 OR dest_ip=3.4.5.6 OR dest_ip=5.6.7.8)

and so on. As I said, replace the IP values with values from your own events. If you're not finding anything, then either your time range is wrong, the IPs are wrong, or your field is named differently after all.

---

@PickleRick thank you for your thorough and detailed response.

In terms of ruling out potential issues, I have confirmed that running the following search returns the correct results for undesirable IPv4 addresses from index=threat:

index=threat earliest=-24h@h latest=now | rename ipv4{} as dest_ip | mvexpand dest_ip | dedup dest_ip | table dest_ip

When I run the following, I get exactly the information I want, which is incredible. Thank you so much for walking me through this process:

index=pan earliest=-40m@m latest=now vsys_name=Browser

[search index=threat earliest=-24h@h latest=now | rename ipv4{} as dest_ip | mvexpand dest_ip | dedup dest_ip | table dest_ip]

However, this search is probably the lengthiest one in terms of time taken to complete (at least 90 seconds both times I have tried it, which to me is not feasible long term). Would you say the solution is to load the JSON threat list into a lookup table and access the information that way? Are CSVs generally preferred for lookup tables, or does JSON work just as well?

Thank you again for helping me with this. The search works perfectly, and even though I don't fully understand why, it's now really all about reducing the time it takes to complete. Karma earned and given!

---

The question is how long your subsearch takes. The subsearch is evaluated first, its results are pasted into the main search, and only then is the main search run.

So if your subsearch takes long to complete, it's reasonable to "export" its results to a lookup, especially if you run the main search often. Also remember that subsearches can be tricky. They are limited in the number of results they return (10,000 by default), and they can get terminated silently if they run too long (60 seconds by default), which produces incomplete results.
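The silent truncation is the dangerous part, because the search still appears to work; it just misses matches. A toy Python model (hypothetical IPs, and a deliberately tiny cap of 3 rows instead of the real limit) shows the effect:

```python
def run_subsearch(values, maxout):
    """Model of a capped subsearch: rows past the cap are dropped without an error."""
    return values[:maxout]


# Hypothetical threat list of five IPs and two pan destinations that are both on it.
threat_ips = [f"10.0.0.{i}" for i in range(1, 6)]
pan_dest_ips = ["10.0.0.5", "10.0.0.1"]

returned = set(run_subsearch(threat_ips, maxout=3))
found = [ip for ip in pan_dest_ips if ip in returned]
missed = [ip for ip in pan_dest_ips if ip not in returned]

print(found)   # ['10.0.0.1']
print(missed)  # ['10.0.0.5']
```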

And the "normal" lookups are based on csv files.

---

When I run the subsearch on its own it doesn't take painfully long, but your advice is definitely noted, and I'll likely proceed with CSV lookups rather than JSON to improve the speed and efficiency of the search.

Thank you again for all your help, I'll be sure to accept your answer as the solution for others who may have a similar issue down the road.