- Find Answers

- :

- Using Splunk

- :

- Splunk Search

- :

- List all unique keys present under certain json pa...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello Community,

I stumbled across a scenario where I have events present in the JSON format as follows

Event 1: {

"severity": "INFO",

"message": "msg",

"details": {

"key1": "val1",

"key2": "val2",

"key3": "val3"

}

}

.

.

.

Event n: {

"severity": "INFOn",

"message": "msgn",

"details": {

"key1n": "val1",

"key2n": "val2",

"key3n": "val3"

}

}

I want to list all the unique keys present under the path "details." I tried querying it using mvexpand and json_keys, but nothing seems to be working. I would greatly appreciate some assistance.

The expected output should be as follows:

uniqueKeys:

key1

key2

key3

.

.

.

key1n

key2n

key3n

Basically, I want to list down all the unique keys present under the "details" JSON path across all the events..

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hey @GaetanVP

I tried this same query using makeresult as suggested by @yuanliu , and it worked perfectly fine, I am not quite sure why it is not working with my production raw data.

due to some security reasons I may not be able to provide you raw data screenshot but it looks something like this

{

"severity": "INFO",

"time": "2023-07-09 18:53:53.930",

"Stats": {

"discrepancy" : 10

},

"discrepancyDetails": {

"record1/0": "#DEL",

"record2/1": "#DEL",

"record3": "expected => actual",

}

}

I want all unique discrepancyDetails and its count,

anyway, I was able to get it through this query

index="demo1" sourcetype="demo2"

| search discrepancyDetails AND Stats

| spath "Stats.discrepancy"

| search "Stats.discrepancy" > 0

| stats count(discrepancyDetails.*) as discrepancyDetails.*

| transpose

I have some follow up queries on this. I may create a new thread for the same.

Thanks for your prompt response, really appreciated!

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Does this work any better?

| makeresults

| eval data="{

\"severity\": \"INFO\",

\"message\": \"msg\",

\"details\": {

\"key1\": \"val1\",

\"key2\": \"val2\",

\"key3\": \"val3\"

}

}#{

\"severity\": \"INFOn\",

\"message\": \"msgn\",

\"details\": {

\"key1n\": \"val1\",

\"key2n\": \"val2\",

\"key3n\": \"val3\"

}

}"

| eval data=split(data,"#") | mvexpand data | eval _raw=data | fields - data

``` Above creates demo data. Ignore IRL" ```

| spath path=details

| eval keys=json_keys(details)

``` Strip out JSON array chars ```

| rex mode=sed field=keys "s/[\[\]\\\"]//g"

``` Separate each key into different events ```

| eval keys=split(keys,",")

| mvexpand keys

``` Get the unique key names ```

| stats values(keys) as keysIf this reply helps you, Karma would be appreciated.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

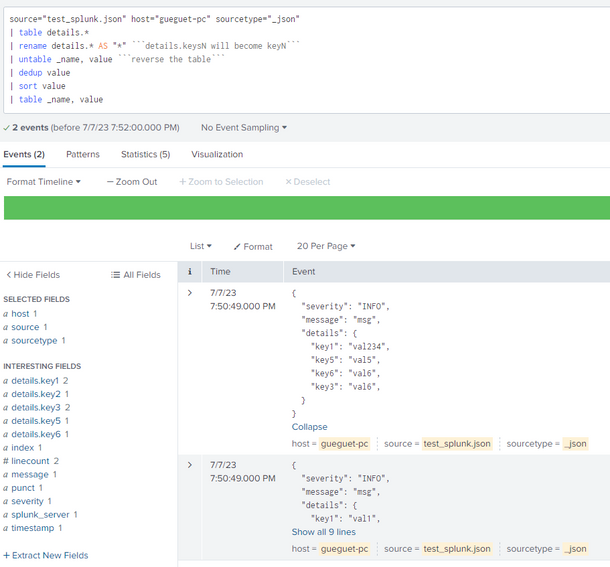

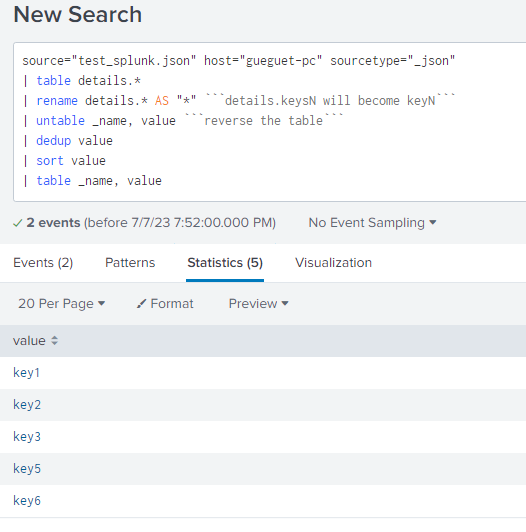

Hello @adikrhd,

You can try something like this

<<your_search_that_give_the_big_json>>

| table details.*

| rename details.* AS "*" ```details.keysN will become keyN```

| untable _name, value ```reverse the table```

| dedup value

| sort value

| table _name, valueHope it helps !

GaetanVP

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @GaetanVP ,

Appreciate your efforts here but it doesn't seem to be working,

after untable _name value, it is not returning any visible stat results

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @adikrhd,

I suppose that we don't exactly have the same structure of data, could you give a screenshot of your data (basically when you just search your logs without any transformations) ?

Mine looks like this based on your first message

I suspect that your data is not correclty ingested or maybe you have the "Event 1: " before each JSON, which we didn't take into account in all the answers on this topic I think...

Keep us updated so we can find a fix!

GaetanVP

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hey @GaetanVP

I tried this same query using makeresult as suggested by @yuanliu , and it worked perfectly fine, I am not quite sure why it is not working with my production raw data.

due to some security reasons I may not be able to provide you raw data screenshot but it looks something like this

{

"severity": "INFO",

"time": "2023-07-09 18:53:53.930",

"Stats": {

"discrepancy" : 10

},

"discrepancyDetails": {

"record1/0": "#DEL",

"record2/1": "#DEL",

"record3": "expected => actual",

}

}

I want all unique discrepancyDetails and its count,

anyway, I was able to get it through this query

index="demo1" sourcetype="demo2"

| search discrepancyDetails AND Stats

| spath "Stats.discrepancy"

| search "Stats.discrepancy" > 0

| stats count(discrepancyDetails.*) as discrepancyDetails.*

| transpose

I have some follow up queries on this. I may create a new thread for the same.

Thanks for your prompt response, really appreciated!

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Is the raw event in the same format as you illustrated? untable should work just well if it is. Here is an example with emulated data in the exact format as you illustrated:

| makeresults

| eval data = mvappend("{

\"severity\": \"INFO\",

\"message\": \"msg\",

\"details\": {

\"key1\": \"val1\",

\"key2\": \"val2\",

\"key3\": \"val3\"

}

}",

"{

\"severity\": \"INFOn\",

\"message\": \"msgn\",

\"details\": {

\"key2\": \"val2a\",

\"key1n\": \"val1\",

\"key2n\": \"val2\",

\"key3n\": \"val3\"

}

}")

| mvexpand data

| rename data AS _raw

``` data emulation above ```

| spath

| table details.*

| rename details.* AS "*" ```details.keysN will become keyN```

| untable _name, value ```reverse the table```

| dedup value

``` I don't think you asked for sort ```

| table _name, value

I get this output:

| value |

| key1 |

| key2 |

| key3 |

| key1n |

| key2n |

| key3n |

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It would help to know what query you've tried so far. Perhaps this is something different.

| makeresults

| eval _raw="{

\"severity\": \"INFO\",

\"message\": \"msg\",

\"details\": {

\"key1\": \"val1\",

\"key2\": \"val2\",

\"key3\": \"val3\"

}

}"

``` Above just sets up demo data. Delete IRL ```

| spath path=details

| eval keys=json_keys(details)If this reply helps you, Karma would be appreciated.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @richgalloway ,

Hope you are doing well.

I have already tried using the json_keys() approach, but the problem I encountered is that it returns a list of multivalue events in the result, something like this:

keys

-----------

["key1","key2","key3"]

.

.

["key1n","key2n","key3n"]

But the ask here is that we want all unique keys in a single list. I am unable to figure out how to merge these two multivalues into a single unique list.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You can use json_array_to_mv to change keys to native array, then count array members.

| spath path=details

| eval keys = json_array_to_mv(json_keys(details))

| stats values(keys) as unique_keys dc(keys) as unique_key_countThis is a data emulation that you can play with and compare with real data

| makeresults

| eval data = mvappend("{

\"severity\": \"INFO\",

\"message\": \"msg\",

\"details\": {

\"key1\": \"val1\",

\"key2\": \"val2\",

\"key3\": \"val3\"

}

}",

"{

\"severity\": \"INFOn\",

\"message\": \"msgn\",

\"details\": {

\"key2\": \"val2a\",

\"key1n\": \"val1\",

\"key2n\": \"val2\",

\"key3n\": \"val3\"

}

}")

| mvexpand data

| rename data AS _raw

``` data emulation above ```Here, I added a duplicate key "key2" in the second event to test dedup.

You can also use untable as @GaetanVP suggested.