- Re: How to write a search to convert bytes to KB, ...

Hi Splunkers

I am unable to convert a number of bytes to KB, MB, or GB depending on its size.

I have used the search:

source="F:\\Splunk_Log Files\\*"| eval volume=recv_bytes/1024/1024 | stats sum(volume) as volume by src_ip | sort - sum(volume) | head 10 | eval volume=volume."MB"

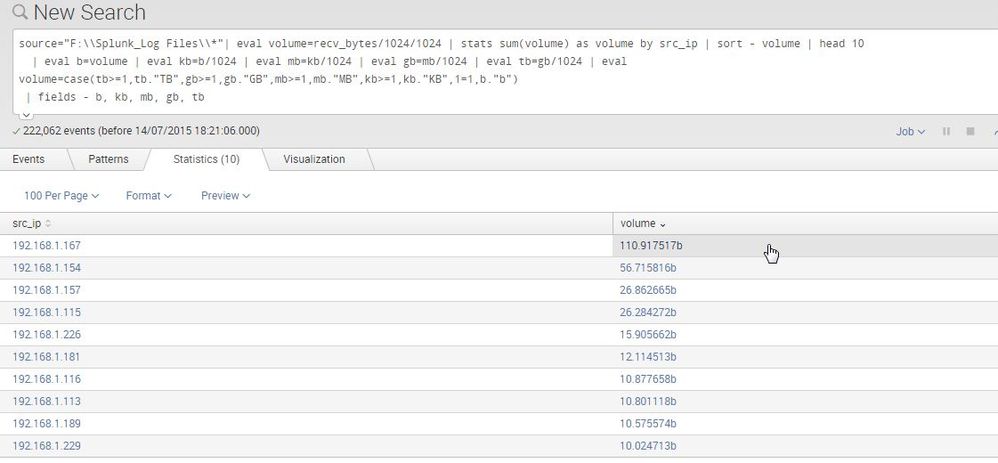

It shows results like volume=1024.123, but I want it to display like 1.12GB. Please help me write the search. Please find the attached screenshot. 😞

Thanks,

Santhosh

The question is: how do you decide which unit to use?

You can use an eval command with a case() expression to decide how to format your volume.

... < mysearch> .....

| eval volume_converted=case(

volume>=(1024*1024*1024*1024),round(volume/(1024*1024*1024*1024),0)."TB",

volume>=(1024*1024*1024),round(volume/(1024*1024*1024),0)."GB",

volume>=(1024*1024),round(volume/(1024*1024),0)."MB",

volume>=1024,round(volume/1024,0)."KB",

1=1,volume."B")

|table src_ip volume_converted

Depending on your needs, you can keep decimals when rounding, but they will be decimal fractions of the unit (for example, 1.5GB is 1GB plus 512MB).

Example:

| host | volume | volume_converted |
| --- | --- | --- |
| kiki | 662380438 | 632MB |
| kuku | 87064303 | 83MB |
| keke | 87132011 | 83MB |
| kyky | 183482081 | 175MB |
| kooo | 786 | 786B |
| kaka | 20334205142 | 19GB |
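
To see the effect of keeping decimals, here is a minimal sketch that can be pasted into a search bar on its own. It assumes a Splunk version that accepts inline triple-backtick comments; the field n and the use of pow() and true() are my own choices for illustration, and the byte counts are taken from the example rows.

```spl
| makeresults count=4
| streamstats count as n ```n is a hypothetical row counter used only to assign sample values```
| eval volume=case(n=1, 786, n=2, 87064303, n=3, 662380438, n=4, 20334205142)
| eval volume_converted=case(
    volume>=pow(1024,4), round(volume/pow(1024,4),2)." TB",
    volume>=pow(1024,3), round(volume/pow(1024,3),2)." GB",
    volume>=pow(1024,2), round(volume/pow(1024,2),2)." MB",
    volume>=1024, round(volume/1024,2)." KB",
    true(), volume." B") ```largest unit first; the first matching branch wins```
| table volume volume_converted
```

With two decimals, the 20334205142-byte row should come out at roughly 18.94 GB instead of being rounded up to 19GB.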

Thank you so much, @yannK, for supporting newbies like me 🙂

This is not supported in charts, right?

How can we do it for charts?

It will not work for charts, of course: once the unit suffix is appended, the value is a string rather than a number.

In a chart you want a consistent unit of measure, to compare apples to apples, not apples to TeraApples 🙂
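
For a chart, one way to follow that advice is to convert every row to the same unit and keep the result numeric. This is only a sketch with made-up sample data; the field names n and volume_mb and the choice of MB are assumptions, so pick whichever unit suits your data.

```spl
| makeresults count=3
| streamstats count as n
| eval src_ip="10.0.0.".n, recv_bytes=n*157286400 ```made-up sample data, multiples of 150 MB```
| stats sum(recv_bytes) as volume by src_ip
| eval volume_mb=round(volume/1024/1024,2) ```same unit on every row, still a number```
| fields src_ip volume_mb
```

Because volume_mb has no text suffix, it can be plotted directly, and the unit can go in the axis label instead.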

Also, make sure that the data in field recv_bytes is numeric. If it's not, use tonumber(recv_bytes)/1024/1024.
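
A small sketch of that check, assuming a sample value that arrives as a string; recv_num and volume_kb are hypothetical field names. tonumber() returns NULL when it cannot parse the value, so the isnull() test keeps bad rows from silently producing a result.

```spl
| makeresults
| eval recv_bytes="2048" ```sample value stored as a string```
| eval recv_num=tonumber(recv_bytes)
| eval volume_kb=if(isnull(recv_num), null(), recv_num/1024) ```only divide when the conversion worked```
```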

Hi @jeffland

Thanks, it is already in number format. It is showing as a decimal for me.

I have now added a screenshot of my requirement to the post.

Then that is not a problem. I just wanted to point out that non-numeric fields may not work as expected with mathematical operations.

source="F:\\Splunk_Log Files\\*"| eval volume=recv_bytes/1024/1024 | stats sum(volume) as volume by src_ip | sort - sum(volume) | head 10

| eval b=volume | eval kb=b/1024 | eval mb=kb/1024 | eval gb=mb/1024 | eval tb=gb/1024 | eval volume=case(tb>=1,tb."TB",gb>=1,gb."GB",mb>=1,mb."MB",kb>=1,kb."KB",1=1,b."b")

You may want to consider rounding off the values to be more legible.

Thanks @sduff_splunk

I have tried the query that you shared. It shows the values in separate columns, but that is not what I want.

There should be only one column showing the volume, in KB, MB, or GB depending on the value.

Please find the attached screenshot in the question, which I have just updated.

source="F:\\Splunk_Log Files\\*"| eval volume=recv_bytes/1024/1024 | stats sum(volume) as volume by src_ip | sort - sum(volume) | head 10

| eval b=volume | eval kb=b/1024 | eval mb=kb/1024 | eval gb=mb/1024 | eval tb=gb/1024 | eval volume=case(tb>=1,tb."TB",gb>=1,gb."GB",mb>=1,mb."MB",kb>=1,kb."KB",1=1,b."b")

| fields - b, kb, mb, gb, tb

That will remove the individual fields.

Hi @sduff_splunk, it is not showing KB, MB, or GB; instead it is displaying only "b".

Any other ideas? 😞

Here is the link to my query output.

http://answers.splunk.com/storage/attachments/46175-cyberoam%20iview%20%20screenshots%20-%20google%2...

In your query, you have eval volume=recv_bytes/1024/1024 | stats sum(volume) as volume by src_ip

Remove that, and just have | stats sum(recv_bytes) as volume by src_ip

Dividing recv_bytes by 1024 twice before the stats converts your byte count to MB, so the later unit checks run on megabytes rather than bytes and skew your results. Leave the formatting of the values until later.

So you should have:

source="F:\\Splunk_Log Files\\*" | stats sum(recv_bytes) as volume by src_ip | sort - volume | head 10

| eval b=volume | eval kb=b/1024 | eval mb=kb/1024 | eval gb=mb/1024 | eval tb=gb/1024 | eval volume=case(tb>=1,tb."TB",gb>=1,gb."GB",mb>=1,mb."MB",kb>=1,kb."KB",1=1,b."b")

| fields - b, kb, mb, gb, tb
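
To make the skew concrete, here is a small sketch with one made-up value. The field names prescaled, from_prescaled and from_bytes are hypothetical, and the two-branch if() is a cut-down stand-in for the full case() above.

```spl
| makeresults
| eval recv_bytes=524288 ```512 KB expressed in bytes (sample value)```
| eval prescaled=recv_bytes/1024/1024 ```already in MB, no longer a byte count```
| eval from_prescaled=if(prescaled/1024>=1, (prescaled/1024)."KB", prescaled."b")
| eval from_bytes=if(recv_bytes/1024>=1, (recv_bytes/1024)."KB", recv_bytes."b")
| table recv_bytes from_prescaled from_bytes
```

The pre-divided value is below 1, so it falls through to the "b" branch, while the raw byte count lands in the "KB" branch. That matches the symptom of seeing only "b" in the output.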

This could also be a solution for you.

| makeresults count=35

```THIS SECTION IS JUST CREATING SAMPLE VALUES.```

| streamstats count as digit

| eval val=pow(10,digit-1), val=val+random()%val

| foreach bytes [eval <<FIELD>>=val]

| table digit val bytes

| fieldformat val=tostring(val,"commas")

```THE FOLLOWING LINES MAY BE WHAT ACHIEVES THE FORMAT YOU ARE LOOKING FOR.```

| fieldformat bytes=printf("% 10s",printf("%.2f",round(bytes/pow(1024,if(bytes=0,0,floor(min(log(bytes,1024),10)))),2)).case(bytes=0 OR log(bytes,1024)<1,"B ", log(bytes,1024)<2,"KiB", log(bytes,1024)<3,"MiB", log(bytes,1024)<4,"GiB", log(bytes,1024)<5,"TiB", log(bytes,1024)<6,"PiB", log(bytes,1024)<7,"EiB", log(bytes,1024)<8,"ZiB", log(bytes,1024)<9,"YiB", log(bytes,1024)<10,"RiB", log(bytes,1024)<11,"QiB", 1=1, "QiB"))
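
The one-liner above is dense, so here is a shorter sketch of the same idea as I read it: take the base-1024 logarithm to find the power, then look the unit up by that power. The unit list stops at TiB, and the fields n, exp, unit and bytes_readable are my own hypothetical names. Values that are exact powers of 1024 may be sensitive to floating-point rounding in log(), so treat this as an illustration rather than a drop-in replacement.

```spl
| makeresults count=4
| streamstats count as n
| eval bytes=case(n=1, 786, n=2, 87064303, n=3, 662380438, n=4, 20334205142)
| eval exp=if(bytes<1, 0, min(floor(log(bytes,1024)), 4)) ```power of 1024, capped at TiB```
| eval unit=mvindex(split("B,KiB,MiB,GiB,TiB", ","), exp) ```zero-based lookup of the unit name```
| eval bytes_readable=round(bytes/pow(1024,exp),2)." ".unit
| table bytes bytes_readable
```

Using eval here turns the value into a string; the original uses fieldformat so that the underlying number is kept and only the display changes.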

If you can install apps, or can ask your admin to install one, the add-on Numeral system macros for Splunk lets you use the macros numeral_binary_symbol(1) or numeral_binary_symbol(2).

Example

| makeresults count=35

```THIS SECTION IS JUST CREATING SAMPLE VALUES.```

| streamstats count as digit

| eval val=pow(10,digit-1), val=val+random()%val

| foreach bytes [eval <<FIELD>>=val]

| table digit val bytes

| fieldformat val=tostring(val,"commas")

```THE FOLLOWING LINES MAY BE WHAT ACHIEVES THE FORMAT YOU ARE LOOKING FOR.```

| fieldformat bytes=printf("% 10s",`numeral_binary_symbol(bytes,2)`)

Numeral system macros for Splunk

https://splunkbase.splunk.com/app/6595

Usage:

How to convert a large number to string with expressions of long and short scales, or neither.