
- Re: How to parse and extract field from my raw dat...

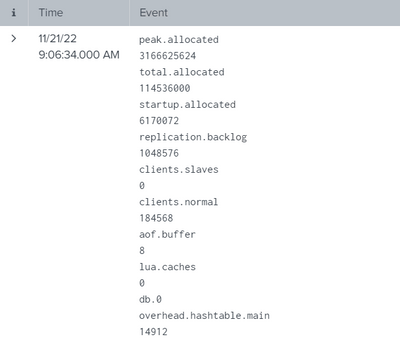

I have a log like this. How do I parse it into fields?

Is there a way to use Splunk to parse this and extract one value? If so, how?

Thank you in advance.

Regards,

Imam

Looking at that data, it appears to be field name/field value pairs separated by line feeds, so a simple mechanism in SPL is to do

| extract kvdelim="," pairdelim="\n"

which will extract the field names/values from the _raw field.

However, you should really extract these fields at ingest time, although how you do that depends on how your data is being ingested. Is the data coming in as a multi-row event? If you take a file containing a number of events, you can use the 'Add data' option to experiment with ingesting that data so that the fields are extracted automatically.
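To make the assumption behind that command concrete, here is a run-anywhere sketch on made-up data in which every line holds a field name and a value separated by a comma. The sample event is hypothetical and is not the poster's data; it only shows the shape of input that this extract call expects. The line break inside the quoted string is typed literally.

```spl
| makeresults
| eval _raw="peak.allocated,31660
total.allocated,423084723094"
| extract kvdelim="," pairdelim="\n"
```

If the real events have no comma between name and value, this call has nothing to split on, so check the _raw text before relying on it.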

The screenshot doesn't show any comma, or anything else that clearly separates a field name from a field value, which @bowesmana's method needs in order to succeed. If that is the raw log, your best bet is to go back to the developers of the code and ask for better logging. There is no excuse for writing such poorly formatted logs. If it is not the raw log, you should post the raw data (as text, anonymized as needed).

There are some workarounds if certain assumptions hold, but they will not work 100% of the time.

First, I assume that each line that contains a string like abc.efg represents a field name, and lines that do not contain this pattern are values.

| eval data=split(_raw, "
")

| mvexpand data

| rex field=data mode=sed "s/^([^\.]+)\.(.+)/\1_\2=/"

| stats list(data) as _raw

| eval _raw = mvjoin(_raw, " ")

| rex mode=sed "s/= /=/g"

| kv

This will (kinda) work until it gets to the line db.0. The next line is overhead.hashtable.main (with two dots). Is this a field name or a field value? Using the above method, you'll get something like db.0=overhead.hashtable.main=14912, which the kv (extract) command will extract into field name db_0, value overhead_hashtable_main=14912.

A less versatile method could be to assume that each "odd-numbered" line is a field name and every other line is a field value.

| rex mode=sed "s/\n/=/1"

| rex mode=sed "s/\n/=/2"

| rex mode=sed "s/\n/=/3"

...

| rex mode=sed "s/\n/=/10" ``` illustrated data seems to have 19 lines ```

| kv

Again, this kinda works until it gets to the line db.0. That part is transformed into db.0=overhead.hashtable.main, followed by 14912. Splunk will then extract field name db_0 with value overhead.hashtable.main, and field name 14912 with a null value.

Either way, these are very tortured methods. Application developers ought to do better logging than this.
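For comparison, here is a run-anywhere sketch of a third workaround, one that was not proposed above. It treats a line as a field name only when the very next line is purely numeric. That rests on an assumption the screenshot cannot confirm, namely that every real value is a number and that lines end with a plain line feed. The sample event is made up from the names and numbers mentioned in this thread, and the capture names name and value are arbitrary.

```spl
| makeresults
| eval _raw="peak.allocated
31660
total.allocated
423084723094
db.0
overhead.hashtable.main
14912"
| rex max_match=0 "(?m)^(?<name>\S+)\n(?<value>\d+)$"
| eval _raw=mvjoin(mvzip(name, value, "="), " ")
| kv
| fields - name value
```

Under those assumptions, db.0 is skipped because the line after it is not a number, so it no longer throws off the pairs that follow. It is still a workaround on top of ambiguous logging, and it silently drops any field whose value is not numeric.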

Where do I write this command: in the search, or in transforms.conf?

These are all search-time examples, so they go in the search.

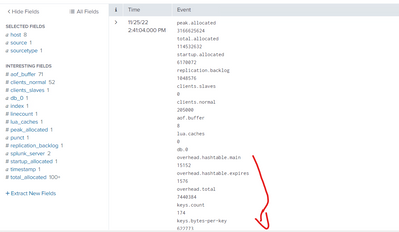

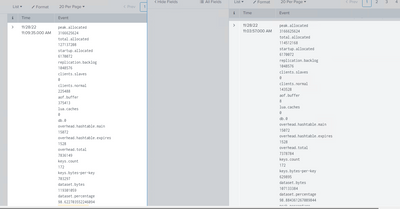

I have run the command, but some fields still haven't been extracted. What is the solution?

The problem you have is that db.0 has no 'value' following it on the next row, so no simple regular extraction will work.

Can you post the _raw view of that event?

I'm just wondering what it shows, and whether it's a multivalue field where perhaps the "value" for db.0 is a null value, in which case it may be possible to extract it.

Correct, db.0 has no value on the next row.

It is a log event; only db.0 has no value.

That is the exact problem @bowesmana and I pointed out: poorly written logs. You really need to ask the coders to write logs that leave no ambiguity and that are conducive to extraction by mechanical tools like Splunk.

My bad: reading your screenshot, I thought I saw commas. However, although you don't have commas in your data, the extract command can still split on the line feed separators, as in this example

| makeresults

| eval _raw="peak.allocated
31660
total.allocated
423084723094
c
2"

| extract kvdelim="\n" pairdelim="\n"

but it would rely on well-formatted data. In any case, this is really only a hack for reading data that has already been ingested like that.