Are you a member of the Splunk Community?

- Find Answers

- :

- Using Splunk

- :

- Splunk Search

- :

- Re: How to optimize my search involving calculated...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

How to optimize my search involving calculated search-time extracted field via lookup file

Greetings Splunkers,

I am currently running into an issue where when attempting to search against a field (let's call it "problem_field") produces atrocious runtimes for searches. This field "problem_field" is extracted search time via a lookup file in the props.conf via something like this:

LOOKUP-problem_field_lookup = problem_field_lookup problem_field_md5 AS problem_field_md5 OUTPUTNEW problem_field AS problem_field

Now I have another field that is extracted at search time (let's call this "good_field") that is extracted via transforms.conf in a regex statement.

REGEX = regexstuff(?<good_field>)regexstuff

The data that each field looks on is similar and sometimes has exactly the same field value depending on how the log event is structured for a source type, so the two fields are good to use to compare against each other on search runtimes. The example I'm using in the real world to compare searches actually goes across a group of events where the fieldvalue for good_field and problem_field is exactly the same and both get a 100% event match rate. The problem is that for a 1 day period of time, if I search the field based on problem_field="samevalue" it takes an avg of 1,800 seconds to finish, versus using good_field="samevalue" taking an avg of 20 seconds to finish. As you can see this is a horrendous discrepancy.

When I inspect the jobs, here is the type of differences I am seeing:

I am wondering are the possible factors here that are causing such a vast discrepancy in how these searches are being executed? Are fields extracted via lookups just generally this much worse on the system as far as search time extractions go? This is Splunk 6.4.0 if that helps.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I suspect that this app will give you much insight:

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Thanks for the extra detail in your comments.

I could hazard a guess at the problem, but one of the more knowledgeable Splunk peeps here should be able to confirm...

I would think that because you've got the lookup as a calculated field in props.conf, for every event that your search returns, it has to go and scan this 30k line table. That's going to be quite an IO hit from a disk perspective.

There are a couple of things you could try (on a test/dev system).

You could run your search as before, then use a join command to append the detail from the table. My thinking is that the table only needs to be read in from disk once and then all the joining is done from there.

As an example...

Let's assume that my search returns the fields:

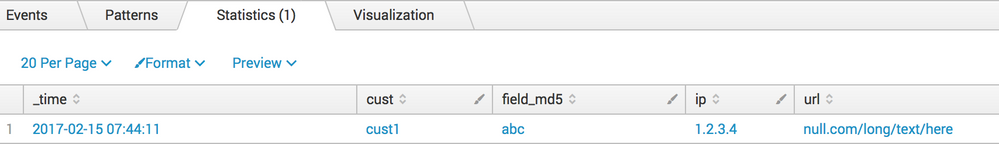

field_md5="abc", ip="1.2.3.4", cust="cust1", url="null.com/long/text/here"

And then lets take a .csv file that has:

field_md5,cust,field2,field3,field4,field5,field6,field7

abc,cust1,abc2,abc3,abc4,abc5,abc6,abc7

def,cust2,def2,def3,def4,def5,def6,def7

You can define your lookup in transforms.conf as something like:

[lookup-text]

filename = my-lookup-file.csv

Then for your search, you could use the command:

| join type=inner field_md5 [| inputlookup lookup-text]

In this command 'inner' means only keep results from the original search that have a match in the lookup table. You can use an 'outer' if you want to keep all results, even if they don't have a match.

You've got the real data to test with, but for this example, you could fake it with:

| makeresults

| eval field_md5="abc", ip="1.2.3.4", cust="cust1", url="null.com/long/text/here"

To get:

Then add the join to the search:

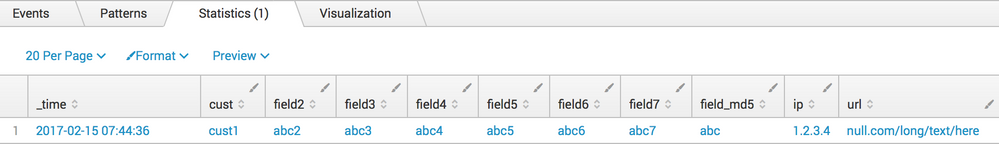

| makeresults

| eval field_md5="abc", ip="1.2.3.4", cust="cust1", url="null.com/long/text/here"

| join type=inner field_md5

[| inputlookup lookup-text]

To get:

I'd be really interested to know if that improved things for you. I'll be honest, I haven't worked with a lookup table that size.

The other thing you could investigate would be using Splunk's Key Value store as opposed to the flat csv which you have:

http://docs.splunk.com/Documentation/Splunk/latest/Admin/AboutKVstore

You may need to check if this came with 6.4, or if it is a 6.5 feature.

So, a couple of bits to try. Let us all know how you get on.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi there,

Have you got some samples that you could share?

It would be great to see some example event and maybe a few lines from your lookup file (is this something like a .csv table).

Also, how big is the lookup file (how many lines?).

Maybe there's a different way to structure some of this to make it more performant for you?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The lookup file hovers around 7 MB on average for the query I used in the screenshot. I have other queries with similar problems and those lookup files are only around 1 MB. Each are csv files.

The file is a 7 column 30,000 row csv (we hard limit it to not exceed 30k rows).

The events themselves can be variant but are typically request logs that contain standard request information like the request URL and parameters, IP address, http status code, response time/size, correlation ids, and what the lookup does is uses that info to determine which account the request belongs to.