- Find Answers

- :

- Using Splunk

- :

- Splunk Search

- :

- Re: How do we overcome the shortcomings of "bin" w...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

How do we overcome the shortcomings of "bin" when trying to find X number of events in a certain time period?

Hello all,

I've had this issue in the past but never really spent the time to find a solution as bin is usually "good enough." Take the following data set:

12:00:00 -- id = A

12:01:00 -- id = B

12:02:00 -- id = A

12:03:00 -- id = A

12:04:00 -- id = A

12:05:00 -- id = A

12:06:00 -- id = A

12:07:00 -- id = A

12:08:00 -- id = A

12:09:00 -- id = A

12:10:00 -- id = B

12:11:00 -- id = A

If I did a |bin _time span=5m | stats count by idI'd get something like:

12:00:00| A : 4 | B : 1

12:05:00| A : 5 | B : 0

12:10:00| A : 1 | B : 1

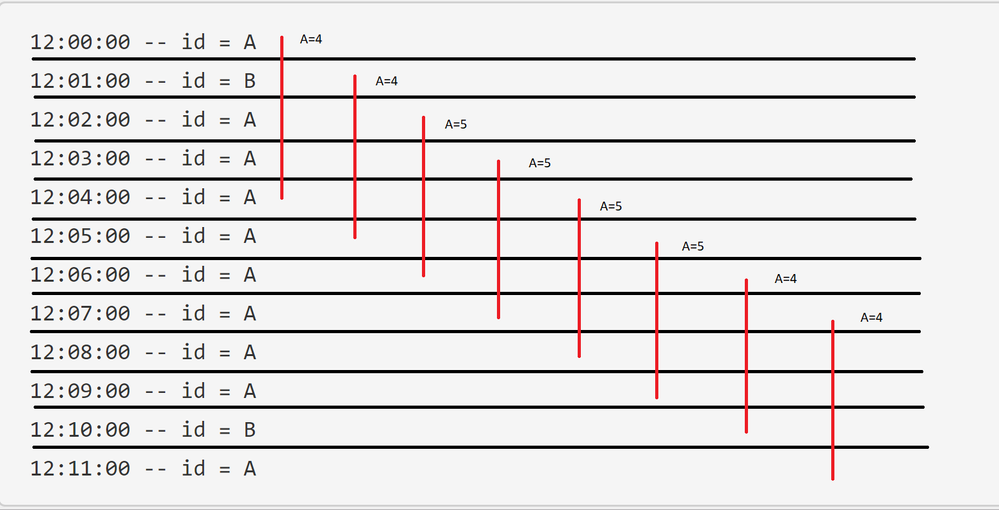

And if then wanted to find a period where I had 5 or more As I'd have 1 period. If I wanted a period with 4 or more As I'd get 2 periods. Here's a very advanced diagram to illustrate the point:

Using what I'm intending I would have 8 periods with A>=4 and 4 periods with A>=5

I've tried stuff like transaction with maxspan but that doesn't seem to work and streamstats has been giving me issues when I'm not using only one field. bin and span seem to do the job most of the time but I feel like a lot of interesting data can be missed simply because the data happens to fall across the binning period.

More severely if it the following happened and I was looking for A>=5 I would get no results!

12:00:00 -- id = B

12:01:00 -- id = B

12:02:00 -- id = B

12:03:00 -- id = A

12:04:00 -- id = A

12:05:00 -- id = A

12:06:00 -- id = A

12:07:00 -- id = A

12:08:00 -- id = B

12:09:00 -- id = B

12:10:00 -- id = B

12:11:00 -- id = B

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

| makeresults count=2

| streamstats count

| eval _time=relative_time(_time,-1*count."d@d")

| makecontinuous _time span=1min

| eval id=mvindex(split("ABC",""),random() % 3)

| table _time id

| streamstats dc(eval(id="A")) as p_test by id

| streamstats window=5 sum(p_test) as window_num count as tmp

| streamstats count(eval(tmp="5")) as session

| table _time id window_num session

| eventstats count(eval(window_num=4)) as "A>=4" count(eval(window_num=5)) as "A>=5"

From the table, time_window does not work.

Please set _time appropriately.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Streamstats seems to work the way you are thinking.

<your search> | reverse| streamstats time_window=5m count by id | table _time id count

I put the reverse in to get the events in the right order. It produces this outcome which looks like it lines up with your expectations.

_time id count

2020-01-09 12:00:00 A 1

2020-01-09 12:01:00 B 1

2020-01-09 12:02:00 A 2

2020-01-09 12:03:00 A 3

2020-01-09 12:04:00 A 4

2020-01-09 12:05:00 A 4

2020-01-09 12:06:00 A 5

2020-01-09 12:07:00 A 5

2020-01-09 12:08:00 A 5

2020-01-09 12:09:00 A 5

2020-01-09 12:10:00 B 1

2020-01-09 12:11:00 A 4

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

That works if you've filtered down to a single entity with those values, but say I have that dataset 3 or 4 times in roughly the same time period for other entities. Those events intermingle with the other data and I think streamstats ends up just resetting basically every event. At the very least I have not had any success.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It seems to work pretty consistently for me for both values, but I'm using pretty small samples (~150 events).

You may need to tweak the time_window, for the counting, but give it a try.