- How can I optimize my search to run faster?

Hello Splunkers

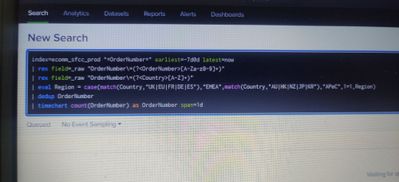

When I run the attached query, the results populate very slowly. I want to use this query to build a trend graph with the line visualization, but the graph takes a long time to render. Please recommend how I can optimize the query to get results faster. The query is in the attachment.

All @richgalloway 's points are valid. Get acquainted with the Job Inspector - it will tell you which part of the search takes the longest and how many results each part processes. It can also show you how much (and where) your search improves as you change it.

I'd say that the most important factor here is the wildcard at the beginning of the search term. Because of the way Splunk works, a leading wildcard means Splunk cannot just sift through its index files and pick a (hopefully small) subset of events for further filtering and processing. Instead it has to examine the full raw text of every event. Especially if you're looking for a rarely encountered term, that can make a huge difference.

A quick example from my home installation of Splunk Free. Let's look into my DNS server events and see how many times I asked it for github over the last 8 full days (aligned to midnight so that every run searches the same timeframe).

The search is simply

index=pihole github earliest=-8d@d latest=@d

| stats count

As a result I get a nice number of 595. If I inspect the job, I get this info:

This search has completed and has returned 1 results by scanning 595 events in 0.934 seconds

If I modify the search to have a wildcard before github

index=pihole *github earliest=-8d@d latest=@d

| stats count

I get the same result count of 595 (apparently I wasn't visiting any sites named mygithub or porngithub or something like that). But the Job Inspection log shows:

This search has completed and has returned 1 results by scanning 531,669 events in 8.086 seconds

As you can see, the search got me the same result but took almost nine times as long (8.086 s vs. 0.934 s) and had to scan nearly 900 times as many events (531,669 vs. 595)!

And the time difference was relatively small only because the whole data set being searched was relatively small and was already cached in RAM. If I were searching through a big index on a production cluster, that wildcard could mean the difference between "let's get some coffee before this search completes" and "this search will never end".

@PickleRick Thanks for sharing all this valuable information on Splunk search performance. I learned a lot from it about writing SPL queries.

I have one other issue related to search performance: I want to optimize all of my alert queries. In them I have hardcoded all the service names using "OR" clauses. The service names come from the raw data and are not extracted into any field. How can we optimize this kind of search without using multiple OR conditions?

This probably should be a new question.

If this reply helps you, Karma would be appreciated.

The query isn't bad. There are a few improvements that can be made, but it's likely that the reason for slow performance is the volume of data being searched. How large is the index and how many indexers do you have?

Some tips to improve performance:

- Make the base search (prior to the first |) as specific as possible. Consider adding source and/or sourcetype specifiers.

- Avoid leading wildcards in search strings.

- Don't extract fields that aren't used. Remove the second rex and the eval commands.

- Use timechart distinct_count() in place of dedup | timechart count().
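Put together, the tips above might look roughly like the sketch below. The original query is only visible in the screenshot, so everything specific here is a made-up placeholder and not the poster's actual data: the index `example_index`, the sourcetype `example:sourcetype`, the search term `error`, the regex, and the field name `session_id`.

```spl
index=example_index sourcetype=example:sourcetype error
| rex field=_raw "session=(?<session_id>\w+)"
| timechart span=1h distinct_count(session_id) AS unique_sessions
```

The base search names an index and a sourcetype and uses a plain term without a leading wildcard, only the one field that the chart needs is extracted, and `timechart distinct_count()` replaces the `dedup | timechart count()` pair. One caveat: `dedup session_id | timechart count` counts each value once across the whole time range, while `timechart distinct_count(session_id)` counts it once per time bucket, so the two charts can differ. Check which behavior you actually want.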

@richgalloway Thanks for your response. I will try and let you know the progress.