Join the Conversation

- Find Answers

- :

- Using Splunk

- :

- Splunk Search

- :

- Eval case statement

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Eval case statement

Hello,

I hope everything is okay.

I need your help.

I am using this spl request :

"index="bloc1rg" AND libelle IN (IN_PREC, OUT_PREC, IN_BT, OUT_BT, IN_RANG, OUT_RANG) earliest=-1mon@mon latest=-1d@d

| append [search index="bloc1rg" AND libelle IN (IN_PREC, OUT_PREC, IN_BT, OUT_BT, IN_RANG, OUT_RANG) earliest=-1mon@mon latest=-1d@d

| chart count over id_flux by libelle

| eval IN_BT_OUT_BT=IN_BT+OUT_BT

| eval IN_PREC_OUT_PREC=IN_PREC+OUT_PREC

| eval IN_RANG_OUT_RANG=IN_RANG+OUT_RANG

| where IN_BT_OUT_BT>=2

| where IN_PREC_OUT_PREC >=2

| where IN_RANG_OUT_RANG >=2

| transpose

| search column=id_flux

| transpose

| fields - "column"

| rename "row 1" as id_flux] | stats last(_time) as last_time by id_flux libelle "

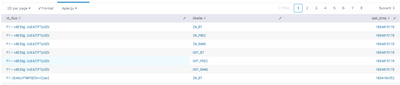

I have this results :

I can't get what I want.

Let me explain.

For a given id_flux, I'd like to have the response time defined as follows:

- out_rang time - in_rang time

- time out_prec - time in_prec

-time out_bt - time in_bt

Voilà ce que j'ai utilisé comme requête complète :

search index="bloc1rg" AND libelle IN (IN_PREC, OUT_PREC, IN_BT, OUT_BT, IN_RANG, OUT_RANG) earliest=-1mon@mon latest=-1d@d |chart count over id_flux by libelle |eval IN_BT_OUT_BT=IN_BT+OUT_BT |eval IN_PREC_OUT_PREC=IN_PREC+OUT_PREC |eval IN_PREC_OUT_PREC=IN_PREC+OUT_PREC | eval IN_RANG_OUT_RANG=IN_RANG+OUT_RANG |where IN_BT_OUT_BT>=2 |where IN_PREC_OUT_PREC >=2 |where IN_RANG_OUT_RANG >=2 |transpose |search column=id_flux |transpose |fields - "column" |rename "row 1" as id_flux] | eval sortorder=case(libelle=="IN_PREC",1,libelle=="OUT_PREC" AND statut=="KO",2,libelle=="OUT_PREC" AND statut=="OK",3,libelle=="IN_BT",4,libelle=="OUT_BT",5, libelle=="IN_RANG",6, libelle=="OUT_RANG" AND statut=="KO",7, libelle=="OUT_RANG" AND statut=="OK",8) | sort 0 sortorder |eval libelle=if(sortorder=2,"ARE", (if (sortorder=3,"AEE", (if(sortorder=7, "BAN",(if(sortorder=8, "CCO", libelle))))))) |table libelle sortorder _time |chart avg(_time) over sortorder by libelle | filldown AEE, ARE, IN_BT, IN_PREC, OUT_BT, IN_RANG, OUT_RANG |eval OK=abs(OUT_BT-IN_BT)/1000 |eval AEE=abs(AEE-IN_PREC)/1000 |eval ARE=abs(ARE-IN_PREC)/1000 |eval CCO=abs(CCO-IN_RANG) |eval BAN=abs(BAN-IN_RANG) |fields - sortorder |stats values(*) as * |table AEE ARE BAN CCO OK |transpose |rename "row 1" as "temps de traitement (s)" |rename column as "statut"

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It is rather strange to use the exact same base search in a subsearch. If nothing else, this reduces performance. It is also strange that you have to use two consecutive transpose inside the subsearch seemingly just to get a list of id_flux values. I think you are looking for appendpipe, not append.

index="bloc1rg" AND libelle IN (IN_PREC, OUT_PREC, IN_BT, OUT_BT, IN_RANG, OUT_RANG) earliest=-1mon@mon latest=-1d@d

| stats max(_time) as last_time count by id_flux libelle

| appendpipe

[chart sum(count) over id_flux by libelle]

| eventstats values(IN_*) as IN_* values(OUT_*) as OUT_* by id_flux

| search libelle=*

| eval IN_BT_OUT_BT=IN_BT+OUT_BT

| eval IN_PREC_OUT_PREC=IN_PREC+OUT_PREC

| eval IN_RANG_OUT_RANG=IN_RANG+OUT_RANG

| search IN_BT_OUT_BT>=2 AND IN_PREC_OUT_PREC >=2 AND IN_RANG_OUT_RANG >=2

``` the above is equivalent to search 1 ```In fact, appendpipe can also help you determine the response times you are looking, if I am guessing your intention correctly:

index="bloc1rg" AND libelle IN (IN_PREC, OUT_PREC, IN_BT, OUT_BT, IN_RANG, OUT_RANG) earliest=-1mon@mon latest=-1d@d

| stats max(_time) as last_time count by id_flux libelle

| appendpipe

[chart sum(count) over id_flux by libelle]

| eventstats values(IN_*) as IN_* values(OUT_*) as OUT_* by id_flux

| search libelle=*

| eval IN_BT_OUT_BT=IN_BT+OUT_BT

| eval IN_PREC_OUT_PREC=IN_PREC+OUT_PREC

| eval IN_RANG_OUT_RANG=IN_RANG+OUT_RANG

| search IN_BT_OUT_BT>=2 AND IN_PREC_OUT_PREC >=2 AND IN_RANG_OUT_RANG >=2

``` the above is equivalent to search 1 ```

| appendpipe

[chart limit=0 max(last_time) over id_flux by libelle]

| search NOT libelle=*

| fields - libelle last_time

| eval response_rang = OUT_RANG - IN_RANG

| eval response_prec = OUT_PREC - IN_PREC

| eval response_bt = OUT_BT - IN_BTFor the purpose of getting the help you wanted from this forum, complex SPL - especially with multiple transpose, only adds barrier to volunteers' understanding of your real objective. I suggest that you describe the basic data set, describe the desired outcome, and describe the logic between desired outcome and the data. Illustrate with text tables and strings (anonymize as necessary).

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@anissabnk so do you have ONE of each libelle per event, if so then how do you define response time - is it the TIME of the event, so BT time is OUT time - IN time and is there only a SINGLE one of each libelle per flux?

Try something like this

index="bloc1rg" AND libelle IN (IN_PREC, OUT_PREC, IN_BT, OUT_BT, IN_RANG, OUT_RANG) earliest=-1mon@mon latest=-1d@d

| stats max(eval(if(libelle="IN_PREC", _time, null()))) as IN_PREC_TIME

max(eval(if(libelle="OUT_PREC", _time, null()))) as OUT_PREC_TIME

max(eval(if(libelle="IN_BT", _time, null()))) as IN_BT_TIME

max(eval(if(libelle="OUT_BT", _time, null()))) as OUT_BT_TIME

max(eval(if(libelle="IN_RANG", _time, null()))) as IN_RANG_TIME

max(eval(if(libelle="OUT_RANG", _time, null()))) as OUT_RANG_TIME

by id_flux

| eval response=(OUT_PREC_TIME-IN_PREC_TIME) + (OUT_BT_TIME-IN_BT_TIME) + (OUT_RANG_TIME-IN_RANG_TIME)

| fields - *_TIME

so you are collecting all the event times for each of the event types by flux id and then just calculating the response time at the end.