Re: Date parsing incorrect

I have logs from two Unifi switches. Splunk parses the date from one just fine; for the other it gets the year wrong but parses the rest correctly. Why is this happening?

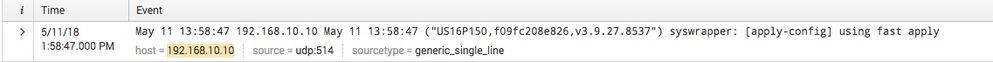

The one that works fine shows the correct date of 05/11/18:

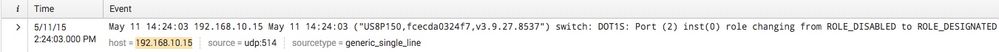

But this one does not. Notice that the year is incorrect: it shows a date of 05/11/15 instead of 05/11/18 as it should.

Since they both use the same source/sourcetype, and other hosts share this same source/sourcetype with various other date formats, I really don't want to hardcode anything unless I can also hardcode a reference to the host IP address.

Any ideas why the parsing isn't working correctly? I find it odd that it parses 192.168.10.10 correctly but then thinks 192.168.10.15 is a reference to a year.

I was able to get a quick win here by modifying the udp/514 input settings. The sourcetype was set to "generic_single_line", and setting it to "syslog" appears to have corrected this.

Thanks @xpac.
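
For anyone making the same change in a config file rather than through the UI, the fix described above might look roughly like the following inputs.conf fragment. This is only a sketch: it assumes the data arrives on a plain `[udp://514]` input, and the `connection_host = ip` line is an assumption added so that the host field carries the sender's IP address.

```ini
# inputs.conf (sketch; assumes a plain UDP input on port 514)
[udp://514]
# Previously generic_single_line; syslog reportedly fixed the year parsing
sourcetype = syslog
# Assumption: use the sender's IP address as the host field
connection_host = ip
```

Changes to inputs.conf generally need a Splunk restart to take effect, and they only affect newly indexed events.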

This behavior is perhaps due to the default value of 2000 days for the MAX_DAYS_AGO setting in props.conf.

Splunk may be interpreting the last octet of the IP address as a two-digit year. For 192.168.10.10 that would mean 2010, which is more than 2000 days ago and therefore outside the acceptable range, so it perhaps falls back to the other timestamp, the one for which it doesn't find a year. For 192.168.10.15 it would mean 2015, which is less than 2000 days ago and so gets accepted.

Anyway, you can also write the timestamp extraction config in a [source::...] stanza, or even a [host::...] stanza, so that it applies more specifically to this data source.
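
As a rough illustration of the host-scoped approach, a props.conf stanza along these lines could pin the timestamp format for just the problem switch. The event layout is an assumption here (the logs are only shown in screenshots): the sketch assumes each event starts with a classic syslog timestamp such as `May 11 10:15:30`, and that the host field holds the switch's IP address. Adjust `TIME_PREFIX` and `TIME_FORMAT` to match the real events.

```ini
# props.conf (sketch; the event layout is assumed, not taken from the thread)
[host::192.168.10.15]
# Assumption: the timestamp is the first thing in the event
TIME_PREFIX = ^
# Assumption: classic syslog style without a year, e.g. "May 11 10:15:30"
TIME_FORMAT = %b %d %H:%M:%S
# Stop scanning after the timestamp so the IP address is never considered
MAX_TIMESTAMP_LOOKAHEAD = 15
```

Limiting the lookahead is the part that would keep the last octet of the IP address from being read as a year, since Splunk stops looking for timestamp components beyond that many characters after the prefix.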

Have you configured any timestamp extraction rules for your sourcetype? Splunk recommends that you do. That way, data from all hosts will be parsed correctly. Right now there are multiple timestamps in non-standard formats in your logs, and with no explicit timestamp extraction rules set up, Splunk may get confused when extracting them automatically.

To do that, I suppose I would need to change the sourcetypes of these. I've never done much with that, but I suppose I could. "generic_single_line" likely shouldn't be used to begin with, and I should probably put these in the correct sourcetypes.

You might get an easy win by using the sourcetype syslog. Syslog often uses the old timestamp style, without year and timezone, and that can lead to bad timestamp detection unless you use the sourcetype syslog.

That would give you much more control over what you want to do with your data. There are several posts that talk about line breaking and timestamp parsing. Here are a few of them for reference:

https://answers.splunk.com/answers/654502/line-break-help-with-incoming-logging-data.html

https://answers.splunk.com/answers/37583/should-linemerge-break.html

Also, these documentation links give more details on event breaking and timestamp extraction:

https://docs.splunk.com/Documentation/Splunk/7.1.0/Data/Configureeventlinebreaking

https://docs.splunk.com/Documentation/Splunk/7.1.0/Data/Handleeventtimestamps