Are you a member of the Splunk Community?

- Find Answers

- :

- Using Splunk

- :

- Splunk Search

- :

- Count working hours after midnight

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Splunkers, hope you guys are all well.

I'm trying to do an adaptation of the search in this post (thanks to @elliotproebstel and @javiergn !)

I'm working in UTC and in my case I'm interested in counting the the hours between 1 PM and 1 AM (next day). It works great for other teams where hours are in the same day, but I'm finding the next day tricky.

This is what I have so far:

| eval start=strptime(reported_time,"%b %d %Y %H:%M:%S")

| eval end=strptime(processed_time,"%b %d %Y %H:%M:%S")

| eval minute = mvrange(0, (end - start), 60)

| mvexpand minute

| eval _time = start + minute

| eval myHour = strftime(_time,"%H")

| eval myMinute = strftime(_time,"%H")

| eval myDay = strftime(_time,"%A")

| eval myMonth = strftime(_time,"%b")

| where myDay != "Saturday" AND myDay != "Sunday" AND myHour >= 13 AND myHour <=1

| stats count as durationInMinutes by ticket,reported_time,processed_time

| eval duration = tostring(durationInMinutes*60, "duration")

| eval SLO=if(durationInMinutes>60,"SLO Fail","SLO Achieved")

| table ticket,reported_time,processed_time,duration,SLO

| sort by - duration

I want my table to show:

ticket number, reported time (when it was reported), processed time (when it got worked by an engineer), duration (time between reported time and processed time, counting only hours between 1 PM and 1 AM next day) and whether the SLO was met or not.

Thanks for the help!!

Wheresmydata.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

I wasn't aware start and end time could span more than 1 day so I have reviewed my answer from 2016 and proposed the following for you:

| eval start_time_epoch = strptime(reported_time,"%b %d %Y %H:%M:%S")

| eval start_time_second = strftime(start_time_epoch,"%S")

| eval start_time_epoch_rounded = start_time_epoch - start_time_second

| fields - start_time_epoch, start_time_second

| eval close_time_epoch = strptime(processed_time,"%b %d %Y %H:%M:%S")

| eval close_time_second = strftime(close_time_epoch,"%S")

| eval close_time_epoch_rounded = close_time_epoch - close_time_second

| fields - close_time_epoch, close_time_second

| eval minute = mvrange(0, (close_time_epoch_rounded - start_time_epoch_rounded), 60)

| mvexpand minute

| eval _time = start_time_epoch_rounded + minute

| eval myHour = strftime(_time,"%H")

| eval myMinute = strftime(_time,"%H")

| eval myDay = strftime(_time,"%A")

| where myDay != "Saturday" AND myDay != "Sunday" AND (myHour >= 13 OR myHour < 1)

| stats count as durationInMinutes by reported_time, processed_time

| eval duration = tostring(durationInMinutes*60, "duration")

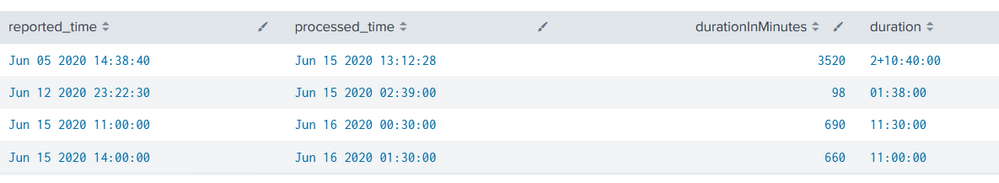

And it seems to work fine in calculating the duration up to the minute:

Let me know if that works. If not please provide examples (text always better than screenshots).

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Ok, so assuming I understood your question correctly, this is what I would do:

| eval start=strptime(reported_time,"%b %d %Y %H:%M:%S")

| eval end=strptime(processed_time,"%b %d %Y %H:%M:%S")

| eval startAt1pm = relative_time(start, "@d+13h")

| eval newStart = if(start > startAt1pm, start, startAt1pm)

| eval durationInHours = round((end - newStart)/3600)

| stats first(durationInHours) asdurationInHours by ticket, reported_time, processed_timeLines 3 to 5 are basically creating a new time field rounded to 1pm, if the actual start time is greater than 1pm then use it, otherwise use the one that starts at 1pm. Then calculate the difference in seconds between the new start and end and round it to the hour.

Let me know if that works. It should also be a lot faster than the solution I proposed in 2016 for your particular use case.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for your quick answer @javiergn but unfortunately that would not do everything I need as I don't want to count hours during the weekends and I also need the exact time (not rounded up).

For example, if ticket01 arrives at 19:10 UTC and gets processed next day at 10.05 AM, I want the result to be:

ticket01 | JUN 16 2020 19:10:00 | JUN 17 2020 10:05:00 | 05:50:00 | SLO Fail

because I would count only from 19:10 to 1 AM

Hope I explained myself clearly.

thank you again!

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Ok, based on what you described I would do something like (PLEASE DOUBLE CHECK THE SYNTAX):

| eval start=strptime(reported_time,"%b %d %Y %H:%M:%S")

| eval end=strptime(processed_time,"%b %d %Y %H:%M:%S")

| eval startAt1pm = relative_time(start, "@d+13h")

| eval endAt1am = relative_time(end, "@d+1h")

| eval newStart = if(start > startAt1pm, start, startAt1pm)

| eval newEnd = if(end < endAt1am, end, endAt1am)

| eval durationInSecs = newEnd - newStart

| eval durationHours = floor(durationInSecs/3600)

| eval durationMins = floor((durationInSecs - (durationHours*3600))/60)

| eval durationString = if(durationHours<10, "0" . toString(durationHours), toString(durationHours)) . ":" . if(durationMins<10, "0" . toString(durationMins), toString(durationMins))

| stats first(durationString) as durationString by ticket, reported_time, processed_time

If you also need seconds you can proceed with the same logic and build that into the durationString.

Let me know if that works.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @javiergn ,

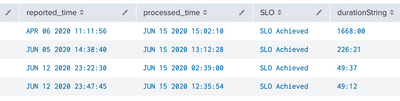

Thanks for your efforts! really appreciated. Some of the results look ok but others not really. For example look at this one (ticket on the left, omitted in the screenshot)

here's my current query:

| eval start=strptime(reported_time,"%b %d %Y %H:%M:%S")

| eval end=strptime(processed_time,"%b %d %Y %H:%M:%S")

| eval startAt1pm = relative_time(start, "@d+13h")

| eval endAt1am = relative_time(end, "@d+1h")

| eval newStart = if(start > startAt1pm, start, startAt1pm)

| eval newEnd = if(end < endAt1am, end, endAt1am)

| eval durationInSecs = newEnd - newStart

| eval durationHours = floor(durationInSecs/3600)

| eval durationMins = floor((durationInSecs - (durationHours*3600))/60)

| eval durationString = if(durationHours<10, "0" . toString(durationHours), toString(durationHours)) . ":" . if(durationMins<10, "0" . toString(durationMins), toString(durationMins)) |eval SLO=if(durationInMinutes>60,"SLO Fail","SLO Achieved")

| stats first(durationString) as durationString by ticket, reported_time, processed_time,SLO

| sort by - durationString

thank you,

Wheresmydata

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

I wasn't aware start and end time could span more than 1 day so I have reviewed my answer from 2016 and proposed the following for you:

| eval start_time_epoch = strptime(reported_time,"%b %d %Y %H:%M:%S")

| eval start_time_second = strftime(start_time_epoch,"%S")

| eval start_time_epoch_rounded = start_time_epoch - start_time_second

| fields - start_time_epoch, start_time_second

| eval close_time_epoch = strptime(processed_time,"%b %d %Y %H:%M:%S")

| eval close_time_second = strftime(close_time_epoch,"%S")

| eval close_time_epoch_rounded = close_time_epoch - close_time_second

| fields - close_time_epoch, close_time_second

| eval minute = mvrange(0, (close_time_epoch_rounded - start_time_epoch_rounded), 60)

| mvexpand minute

| eval _time = start_time_epoch_rounded + minute

| eval myHour = strftime(_time,"%H")

| eval myMinute = strftime(_time,"%H")

| eval myDay = strftime(_time,"%A")

| where myDay != "Saturday" AND myDay != "Sunday" AND (myHour >= 13 OR myHour < 1)

| stats count as durationInMinutes by reported_time, processed_time

| eval duration = tostring(durationInMinutes*60, "duration")

And it seems to work fine in calculating the duration up to the minute:

Let me know if that works. If not please provide examples (text always better than screenshots).

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @javiergn , I think we're almost there!

I checked many of the entries and they seem to be all ok - but found one that cannot get my head around. Look at the data below:

| ticket | reported_time | processed_time | duration | SLO | Comments |

| ticket01 | JUN 07 2020 11:21:43 | JUN 09 2020 19:54:52 | 19:54:00 | SLO Failed | Jun 7th was a Sunday. Counting 12h for Monday + 6h54m on Monday should be 18:54 |

| ticket02 | JUN 01 2020 15:23:13 | JUN 02 2020 11:28:46 | 09:37:00 | SLO Failed | Jun 1st Monday. This one is correct! |

| ticket03 | JUN 15 2020 15:51:27 | JUN 16 2020 13:12:35 | 09:21:00 | SLO Failed | Jun 15th Monday. This one is correct! |

| ticket04 | JUN 08 2020 22:46:51 | JUN 09 2020 19:55:32 | 09:09:00 | SLO Failed | Jun 9th Monday. This one is correct! |

| ticket05 | JUN 01 2020 18:17:04 | JUN 02 2020 11:28:49 | 06:43:00 | SLO Failed | Jun 1st Monday. This one is correct! |

| ticket06 | JUN 17 2020 18:34:51 | JUN 18 2020 00:25:11 | 05:51:00 | SLO Failed | Jun 17th Wednesday. This one is correct! |

| ticket07 | JUN 15 2020 19:24:19 | JUN 16 2020 13:12:27 | 05:48:00 | SLO Failed | Jun 15th Wednesday. This one is correct! |

| ticket08 | JUN 17 2020 18:56:50 | JUN 18 2020 00:26:17 | 05:30:00 | SLO Failed | Jun 17th Wednesday. This one is correct! |

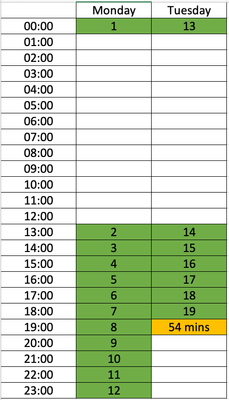

I just can't understand why ticket01 has 1 extra hour than expected - am I counting the hours wrong?

Sunday - no hours counted there

Monday: 12 hours (from 13 to 1)

Tuesday: from 13 to 19:54 = 6h 54m

Total = 12h + 6h 54m = 18h 54 m .... why is the duration showing 19:54?

Thanks so much! you're amazing 🙂

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

I think Monday is correct too. Look:

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

A ha!!! I see what happened, I was missing that 1 hour of Monday from 0 to 1 AM!

That makes sense now 🙂 THANK YOU!!!!!!!!!

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Wheresmydata ,

First of all thank you for referencing one of my answers from ages ago. I probably need to review what I did first ha ha.

In any case, while I'm reviewing your question, could edit the post and format the table accordingly as it is very hard to read otherwise?

Thanks,

J