- Find Answers

- :

- Using Splunk

- :

- Splunk Search

- :

- Calculate the session duration by the same field's...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark Topic

- Subscribe to Topic

- Mute Topic

- Printer Friendly Page

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

RAWDATA:

| user_name | machine_name | event_name | logon_time |

| user1 | machine1 | logon | 12/9/2021 7:20 |

| user1 | machine1 | logout | 12/9/2021 7:22 |

| user1 | machine1 | logon | 12/9/2021 8:20 |

| user1 | machine1 | logout | 12/9/2021 8:22 |

From the above Data, I am trying to retrieve the individual session duration for each user and machine and put it in a chart.

I have the query which renders the aggregate of the session for machine and user. .

| user_name | machine_name | event_name | logon_time | logout_time | session_duration |

| user1 | machine1 | logon | 12/9/2021 7:20 | 12/9/2021 7:22 | 1:01:51 |

However, I am trying to retireve the session duration for each login that happened anytime.

Desired result is :

| user_name | machine_name | event_name | logon_time | logout_time | Sesssion_duration |

| user1 | machine1 | logon | 12/9/2021 7:20 | 12/9/2021 7:22 | 0:01:51 |

| user2 | machine1 | logon | 12/9/2021 8:20 | 12/9/2021 8:22 | 0:01:51 |

Could someone pls help me correct my query to get the each session by logon and logout events.

TIA

my query :

index=foobar

sourcetype=foo source=bar

| dedup event_time | table machine_name, user_name, event_name, event_time

| streamstats current=f last(event_time) as logout_time by machine_name

| table , machine_name, user_name, event_name, event_time, logout_time

| where event_name="LOGON" and logout_time!=""

| eval type=typeof(logout_time) |eval logon_time=event_time

| convert timeformat="%Y-%m-%d %H:%M:%S" mktime(logon_time) as assigned_at

| convert timeformat="%Y-%m-%d %H:%M:%S" mktime(logout_time) as released_at

| eval session_duration=(released_at-assigned_at)

| eval session_duration=tostring(session_duration, "duration")

| table user_type, user_name, site_id, machine_name, event_name, logon_time, logout_time, session_duration

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi

here is a one way to do it

| makeresults

| eval _raw = "user_name machine_name event_name logon_time

user1 machine1 logon 12/9/2021 7:20

user1 machine1 logoff 12/9/2021 7:22

user1 machine1 logon 12/9/2021 8:20

user1 machine1 logoff 12/9/2021 8:22"

| multikv forceheader=1

| eval _time = strptime(logon_time, "%m/%d/%Y %H:%M")

```| reverse```

| fields - _raw linecount

| eval login_time = if (event_name == "logon", logon_time, null()), logout_time = if (event_name == "logoff", logon_time, null())

| transaction endswith=(event_name=logon) startswith=(event_name=logoff) user_name machine_name

```| transaction startswith=(event_name=logon) endswith=(event_name=logoff) user_name machine_name```

| eval session_duration = tostring (duration, "duration")

| rename login_time as logon_time

| table user_name machine_name event_name logon_time logout_time session_durationYou should notice "reverse + startswith/endswith" (in comments) pair which should set by the order of event in your search.

r. Ismo

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Great, That worked like a charm!! Thank you @isoutamo and @yuanliu for your time and help.

However, I see session duration is coming in as a string. So I used below to show it in a chart using bucket command so I see no of machines logged for certain period of times like in span on 5min, 30min etc..

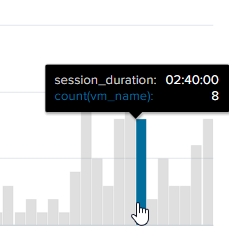

eval session_duration=strptime(session_duration, "%H:%M:%S")| bucket session_duration span=60m | chart count(vm_name) over session_duration

Te first eval command convert session_duration to epoch. so the cart shows epoc time which is not readable.

So I added fieldformat session_duration=strftime(session_duration, "%H:%M:%S") after te bucket command. But the data looks incorrect. For eg.,

2021-12-10 13:29:35 2021-12-10 13:28:42 00:00:00

but expected to be:

2021-12-10 13:29:35 2021-12-10 13:28:42 00:00:53

Any clue?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

When you are using bucket/bin command to time field it change values in that field based on you span values. In your case it means that all time values have only hour part left as you are using span=60m (1h). For that reason you cannot calculate duration which is less than 1h and even then the duration will always be multiply of 60min.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@isoutamo Thank you so much for replying and your time.

So the bucket command puts all the results in groups of span value. which does not give us the accurate chart atleast in my case. I am trying to group the results based on span. If span is selected as 60 mins,

So, can you suggest a best way to show the graph with buckets

No of VM logged in for 1-5min intervals when span = 5mins.

So the chart will show 100 vms were logged and had session_duration between 1min and 5 mins

200 machines were logged in and had session_duration between 5 to10 mins, 15 to 20 mins etc..

When span is set to 1h,

Nof VMs logged in for 1h-2h, 2hrs to 3hrs etc..

eval session_duration=strptime(session_duration, "%H:%M:%S")| bucket session_duration $span_tok$ | fieldformat session_duration=strftime(session_duration, "%H:%M:%S")| chart count(vm_name) over session_duration

Also, when you click on the chart, froom the above query, it leads to the search command appended with with "search session_duration="00:02:40"" and the results yield no data although we have results shown with that duration as 00:02:40 when run without the condition " search session_duration="00:02:40""

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Error in 'transaction' command: This search requires events to be in descending time order, but the preceding search does not guarantee time-ordered events.

I got the above error when trying to use the below query to use my search results into makeresults feed.

my base query will yield very messy data like

machine1 logged on at 12

machine 10 logged out at 10:01 and so on.

| makeresults | append [ search index=foo sourcetype=bar source=foobar |

table event_name, machine_name, user_name, user_type, _time| sort _time | eval _time = strptime(_time, "%m/%d/%Y %H:%M")

```| reverse```

| fields - _raw linecount

| eval login_time = if (event_name == "logon", _time, null()), logout_time = if (event_name == "logoff", _time, null())

| transaction endswith=(event_name=logon) startswith=(event_name=logoff) user_name machine_name

```| transaction startswith=(event_name=logon) endswith=(event_name=logoff) user_name machine_name```

| eval session_duration = tostring (duration, "duration")

| rename login_time as logon_time

| table user_name machine_name event_name logon_time logout_time session_duration]

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

so if I use like this, how can i assign the fields to the _raw var so I can process it like you suggested?

index=foo sourcetype=bar source=foobar [ | makeresults | .......

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Try not to be distracted by makeresults. Think of the entire section before the blank line as your raw search that gives you event list. If Splunk data is ingested "naturally", most likely that raw search result will be in reverse time order so the event order warning should not occur. If your data contain out-of-order events, you'll have to sort first - which can be very expensive.

Effectively, you will be doing

| eval _time = strptime(event_time, "%m/%d/%y %H:%M")

| transaction user_name machine_name startswith=eval(event_name=="logon") endswith=eval(event_name=="logout")

| eval duration=tostring(duration, "duration")after your raw search (and possible | sort - _time).

About transactions and transaction are good reads for this.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Your query looks super cool. how do i replace the info in raw data with my quer results?

| eval _raw = "user_name machine_name event_name logon_time

user1 machine1 logon 12/9/2021 7:20

user1 machine1 logoff 12/9/2021 7:22

user1 machine1 logon 12/9/2021 8:20

user1 machine1 logoff 12/9/2021 18:22"

here instead of the raw data, can I replace my query?

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@isoutamo Thanks for your reply. you have used actual data in make results ? Not sure if thats the best for my case as the data comes in dynamically to splunk for the DB Connect.

I have managed to get the individual events as desired by using mv commands.

| eval zipped=mvzip(mvzip(vm_name,event_name),event_time) | mvexpand zipped | eval data=split(zipped,",")

|eval vm_name=mvindex(data,0) | eval action=if((mvindex(data,1) like "Logon"), "logon", "logout") | eval assign_time=if((mvindex(data,1) like "logon"), mvindex(data,2), "NA")|eval release_time=if((mvindex(data,1) like "logout"), mvindex(data,2), "NA") | streamstats window=1 current=f values(release_time) as machine_release_time by vm_name, user_name | streamstats window=1 current=f values(release_time) as machine_release_time by vm_name, user_name | where assign_time !="NA" OR machine_release_time!="NA" OR machine_release_time!=" " | where action="logon" | convert timeformat="%Y-%m-%d %H:%M:%S" mktime(assign_time) as assign_time_epoch | convert timeformat="%Y-%m-%d %H:%M:%S" mktime(machine_release_time) as machine_release_time_epoch |eval session_time=(machine_release_time_epoch-assign_time_epoch) | eval session_duration=tostring(session_time, "duration")

However, now I m stuck to put this in a chart using bucket command. The span doesnt work as expected.

my session_duration is of type string and it has values like 00.37.35, 02:45:30 and so on.

I am trying to put the chart using buckets so I can see machines logged on for 1-5 mins, 30-1h, >2 hrs and so on.

any google search for string to date and use in chart leads me to strptime and it doesnt work as expected. because it converts to epoch and the time is unreadable in chart visualization, any way I can show the graph with HH:MM:SS with buckets with different span like 5min , 30m 2h etc..

TIA

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi

As you said in your question that this first table was your raw data, I just regenerate it as example with "|makeresults ....". In real life you should replace that part until "|eval login_time = ..." with your base SPL which generate that first "raw data" table.

I suppose that you can replace your SPL from "|eval type..." with my example without that example data setting up.

r. Ismo

BTW. it's more efficient to use fields instead of table (at least) as long as you have only streaming commands in your SPL. Then those are executed in each search peers instead of transfer events first into search head and then do that stuff! Also aways filter first and then do another stuff.

- Mark as New

- Bookmark Message

- Subscribe to Message

- Mute Message

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi

here is a one way to do it

| makeresults

| eval _raw = "user_name machine_name event_name logon_time

user1 machine1 logon 12/9/2021 7:20

user1 machine1 logoff 12/9/2021 7:22

user1 machine1 logon 12/9/2021 8:20

user1 machine1 logoff 12/9/2021 8:22"

| multikv forceheader=1

| eval _time = strptime(logon_time, "%m/%d/%Y %H:%M")

```| reverse```

| fields - _raw linecount

| eval login_time = if (event_name == "logon", logon_time, null()), logout_time = if (event_name == "logoff", logon_time, null())

| transaction endswith=(event_name=logon) startswith=(event_name=logoff) user_name machine_name

```| transaction startswith=(event_name=logon) endswith=(event_name=logoff) user_name machine_name```

| eval session_duration = tostring (duration, "duration")

| rename login_time as logon_time

| table user_name machine_name event_name logon_time logout_time session_durationYou should notice "reverse + startswith/endswith" (in comments) pair which should set by the order of event in your search.

r. Ismo