Why are we receiving this ingestion latency error after updating to 8.2.1?

So we just updated to 8.2.1 and we are now getting an Ingestion Latency error…

How do we correct it? Here is what the link says and then we have an option to view the last 50 messages...

Ingestion Latency

- Root Cause(s):

- Events from tracker.log have not been seen for the last 6529 seconds, which is more than the red threshold (210 seconds). This typically occurs when indexing or forwarding are falling behind or are blocked.

- Events from tracker.log are delayed for 9658 seconds, which is more than the red threshold (180 seconds). This typically occurs when indexing or forwarding are falling behind or are blocked.

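The batch:// stanza for tracker.log* in the messages below suggests that tracker.log files are dropped into the spool directory and picked up by a batch input, which removes each file once it has been indexed. Assuming that behavior and a Linux/Unix install under /opt/splunk (the Windows paths in this post would need a different tool), one quick check is whether tracker.log files are piling up there. Files older than a few minutes would point to the batch input not keeping up, which is consistent with the root causes above. The function name `stale_tracker_files` and the 5-minute default are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: list tracker.log* files that have been sitting in the
# spool directory for more than N minutes. Assumes tracker.log is consumed by
# a batch:// input that removes files once indexed, so old files here suggest
# the input is falling behind. Default paths are assumptions; adjust to taste.
stale_tracker_files() {
    spool_dir="${1:-${SPLUNK_HOME:-/opt/splunk}/var/spool/splunk}"
    minutes="${2:-5}"
    # -mmin +N: last modified more than N minutes ago (GNU and BSD find)
    find "$spool_dir" -type f -name 'tracker.log*' -mmin +"$minutes" -print
}
```

For example, `stale_tracker_files /opt/splunk/var/spool/splunk 5` would be expected to print nothing on a healthy instance, under the assumption above.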

Here are some examples of what is shown as the messages:

- 07-01-2021 09:28:52.276 -0500 INFO TailingProcessor [66180 MainTailingThread] - Adding watch on path: C:\Program Files\Splunk\var\spool\splunk.

- 07-01-2021 09:28:52.276 -0500 INFO TailingProcessor [66180 MainTailingThread] - Adding watch on path: C:\Program Files\Splunk\var\run\splunk\search_telemetry.

- 07-01-2021 09:28:52.276 -0500 INFO TailingProcessor [66180 MainTailingThread] - Adding watch on path: C:\Program Files\Splunk\var\log\watchdog.

- 07-01-2021 09:28:52.276 -0500 INFO TailingProcessor [66180 MainTailingThread] - Adding watch on path: C:\Program Files\Splunk\var\log\splunk.

- 07-01-2021 09:28:52.276 -0500 INFO TailingProcessor [66180 MainTailingThread] - Adding watch on path: C:\Program Files\Splunk\var\log\introspection.

- 07-01-2021 09:28:52.275 -0500 INFO TailingProcessor [66180 MainTailingThread] - Adding watch on path: C:\Program Files\Splunk\etc\splunk.version.

- 07-01-2021 09:28:52.269 -0500 INFO TailingProcessor [66180 MainTailingThread] - Adding watch on path: C:\Program Files\CrushFTP9\CrushFTP.log.

- 07-01-2021 09:28:52.268 -0500 INFO TailingProcessor [66180 MainTailingThread] - Parsing configuration stanza: monitor://$SPLUNK_HOME\var\log\watchdog\watchdog.log*.

- 07-01-2021 09:28:52.267 -0500 INFO TailingProcessor [66180 MainTailingThread] - Parsing configuration stanza: monitor://$SPLUNK_HOME\var\log\splunk\splunk_instrumentation_cloud.log*.

- 07-01-2021 09:28:52.267 -0500 INFO TailingProcessor [66180 MainTailingThread] - Parsing configuration stanza: monitor://$SPLUNK_HOME\var\log\splunk\license_usage_summary.log.

- 07-01-2021 09:28:52.267 -0500 INFO TailingProcessor [66180 MainTailingThread] - Parsing configuration stanza: monitor://$SPLUNK_HOME\var\log\splunk.

- 07-01-2021 09:28:52.267 -0500 INFO TailingProcessor [66180 MainTailingThread] - Parsing configuration stanza: monitor://$SPLUNK_HOME\var\log\introspection.

- 07-01-2021 09:28:52.267 -0500 INFO TailingProcessor [66180 MainTailingThread] - Parsing configuration stanza: monitor://$SPLUNK_HOME\etc\splunk.version.

- 07-01-2021 09:28:52.267 -0500 INFO TailingProcessor [66180 MainTailingThread] - Parsing configuration stanza: batch://$SPLUNK_HOME\var\spool\splunk\tracker.log*.

- 07-01-2021 09:28:52.266 -0500 INFO TailingProcessor [66180 MainTailingThread] - Parsing configuration stanza: batch://$SPLUNK_HOME\var\spool\splunk\...stash_new.

- 07-01-2021 09:28:52.266 -0500 INFO TailingProcessor [66180 MainTailingThread] - Parsing configuration stanza: batch://$SPLUNK_HOME\var\spool\splunk\...stash_hec.

- 07-01-2021 09:28:52.266 -0500 INFO TailingProcessor [66180 MainTailingThread] - Parsing configuration stanza: batch://$SPLUNK_HOME\var\spool\splunk.

- 07-01-2021 09:28:52.265 -0500 INFO TailingProcessor [66180 MainTailingThread] - Parsing configuration stanza: batch://$SPLUNK_HOME\var\run\splunk\search_telemetry\*search_telemetry.json.

- 07-01-2021 09:28:52.265 -0500 INFO TailingProcessor [66180 MainTailingThread] - TailWatcher initializing...
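To confirm from splunkd.log that the tracker.log batch stanza is being parsed at all, the TailingProcessor lines above can be filtered. A minimal sketch that reads log lines on standard input and prints each parsed stanza; the function name is hypothetical, and it assumes the message text matches the lines quoted in this post.

```shell
#!/bin/sh
# Hypothetical helper: print the input stanzas that TailingProcessor reports
# parsing, one per line, with any trailing period removed.
parsed_stanzas() {
    sed -n 's/.*Parsing configuration stanza: //p' | sed 's/\.$//'
}
```

For example: `grep TailingProcessor /opt/splunk/var/log/splunk/splunkd.log | parsed_stanzas | grep tracker`.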


I am going to reach out to support when I get a chance and will update here when I have found a solution or workaround of some sort. My OS is Linux, and the log paths and permissions look fine from my perspective as well. We upgraded over a month ago and the issue persists, but only on our indexer; our heavy forwarders are not affected.


Have you heard back from support regarding this issue? We have been running on 8.2.2 for several weeks without issue, but today noticed this on one of the search heads within the SHC.


My apologies, we actually redeployed for a separate issue we were facing so I never did contact them on this.


I am having this issue as well. Would appreciate any information you've been able to dig up.


Hi Marc,

We are facing the same issue after the 8.2.1 upgrade.

Have you already found a solution?

Greetings,

Justyna


No, I have not found a solution. However, it appears to have cleared itself.


So we thought we had it resolved. However, it is back again.

When we restart the services, we can watch it go from good to bad.

Has anyone else had luck finding an answer?


Me too. I'm looking for a solution to this ingestion latency issue.


We had this problem after upgrading to v8.2.3 and have found a solution.

After disabling the SplunkUniversalForwarder, SplunkLightForwarder and SplunkForwarder apps on splunkdev01, the system returned to normal operation. These apps were enabled on the indexer and should have been disabled by default. Also, using a universal forwarder version that is not compatible with v8.2.3 will cause ingestion latency and TailReader errors. We had some Solaris 5.1 servers (forwarders) that can no longer be upgraded, so we kept them on 8.0.5; the upgrade requires Solaris 11 or later.

The first thing I did was go to Manage Apps in the web interface and search for *forward*.

This showed the three forwarder apps I needed to disable, and I disabled them in the interface.

I also ran these commands on the indexer (Unix):

splunk disable app SplunkForwarder -auth <username>:<password>

splunk disable app SplunkLightForwarder -auth <username>:<password>

splunk disable app SplunkUniversalForwarder -auth <username>:<password>

After doing these things, the ingestion latency and TailReader errors stopped.
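The three disable commands can be wrapped in a loop. A minimal sketch, assuming the app names SplunkForwarder, SplunkLightForwarder and SplunkUniversalForwarder as listed in this post (confirm them in Manage Apps first) and a splunk binary under $SPLUNK_HOME/bin. `SPLUNK_BIN` is a hypothetical override that makes a dry run with `echo` possible. Note that passing `-auth` on the command line leaves the password in shell history and the process list.

```shell
#!/bin/sh
# Hypothetical helper: disable the bundled forwarder apps on an indexer.
# Usage: disable_forwarder_apps '<username>:<password>'
disable_forwarder_apps() {
    auth="$1"
    splunk_bin="${SPLUNK_BIN:-${SPLUNK_HOME:-/opt/splunk}/bin/splunk}"
    rc=0
    for app in SplunkForwarder SplunkLightForwarder SplunkUniversalForwarder; do
        # Keep going if one app cannot be disabled (for example, it does not
        # exist on this instance), but remember the failure.
        if ! "$splunk_bin" disable app "$app" -auth "$auth"; then
            echo "failed to disable $app" >&2
            rc=1
        fi
    done
    return "$rc"
}
```

A dry run such as `SPLUNK_BIN=echo disable_forwarder_apps '<username>:<password>'` only prints the commands it would run.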


FWIW, we just upgraded from 8.1.3 to 8.2.5 tonight, and are facing exactly these same issues.

The only difference is that these forwarder apps are already disabled on our instance.

Is there any update from Splunk support on this issue?


We upgraded from 8.7.1 to 8.2.6 and we have the same tracker.log latency issue.

Please help us SPLUNK...


Commenting on this to be notified of the solution.


FWIW, my support case is still open and I still have no answers. Many support people have told me the problem doesn't exist, so I reply with screenshots showing that it still does.

The original resolution suggested was to disable the monitoring/alerting for this service. If anyone is interested in this solution, I'm happy to post it, but as it doesn't solve the underlying issue and only stops the alert telling you the issue exists, I haven't bothered testing or implementing it myself.


Splunk support has replied and (finally) confirmed that the ingestion latency issue is a known bug affecting both on-prem and cloud customers.

Their suggested solution is to disable the monitoring or the alerts.

Note: this doesn't fix the ingestion issues (which cause indexing to be skipped and therefore data loss); it only stops warning you about them.
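Before silencing anything, it may help to see which health.conf settings drive this indicator and where each one is defined. A minimal sketch using `splunk btool ... --debug`, which prints the source file next to every setting. It assumes the indicator is configured under a `[feature:ingestion_latency]` stanza in health.conf, which is worth verifying against the default health.conf on your version. `SPLUNK_BIN` is a hypothetical override for dry runs.

```shell
#!/bin/sh
# Hypothetical helper: show the effective health.conf settings for the
# ingestion latency indicator; --debug prefixes each line with its source file.
# The stanza name feature:ingestion_latency is an assumption to verify locally.
show_ingestion_latency_config() {
    splunk_bin="${SPLUNK_BIN:-${SPLUNK_HOME:-/opt/splunk}/bin/splunk}"
    "$splunk_bin" btool health list feature:ingestion_latency --debug
}
```

Any override would normally go in a local file such as $SPLUNK_HOME/etc/system/local/health.conf rather than in the default one, and it only hides the warning.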


Hi, the same thing happens in our case. It seems to be monitoring the tracker.log file, which does not exist on any of our deployed hosts, yet we have this problem on the SH and the indexer.


Same problem here; it only appeared immediately after the 9.0 upgrade.


In our situation, the problem was actually the permissions on one particular log file. It appears that when Splunk was upgraded, the file's permissions were set to root only, so Splunk could not read it. We don't run Splunk as the root user, so we had no choice but to change the file's ownership so Splunk could read it. We are running RHEL 8.x, so "chown -R splunk:splunk /opt/splunk" did the trick. Once we restarted Splunk, the issue went away immediately.

Just as several others mentioned previously, we were only seeing the issue on our Cluster Master and not on any other Splunk application server. Hope this helps!
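Before running a recursive chown, it may be worth checking which files are actually affected. A minimal sketch, assuming the /opt/splunk path and splunk user from this post; the function name is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: list files and directories under a Splunk install that
# are NOT owned by the given user (a user name or a numeric UID).
files_not_owned_by() {
    dir="${1:-/opt/splunk}"
    owner="${2:-splunk}"
    find "$dir" ! -user "$owner" -print
}
```

If `files_not_owned_by /opt/splunk splunk` prints anything, the fix described in the post is to stop Splunk, run `chown -R splunk:splunk /opt/splunk` as root, and start Splunk again.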


We are running on a Windows platform and have made no changes to the environment (permissions or users).

We have upgraded to 9.0 and still have the issue.


FOUND A FIX!... or workaround rather and hopefully it works for you all.

I've been working tirelessly with a senior Splunk technical support engineer until midnight for the past two days to find and fix this problem. Support seems to think it is a scaling issue, as they suspect network latency and that our two indexers are overwhelmed.

This makes no sense to me, as our environment is sufficiently scaled according to the Splunk Validated Architectures for our number of users and data ingestion volume.

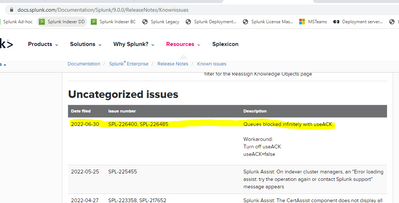

Anyway, I spotted the issue, classed as uncategorised, under the 9.0 known issues. It was only logged two weeks ago, and I'm surprised (or not really) that support failed to pick this up rather than taking me on a wild goose chase of fault finding.

Turn off the useACK setting:

useACK = false

in any outputs.conf file you can locate on the affected instances, then restart for the changes to take effect. This should stop the tracker.log errors, and data should flow through continuously again.
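To find the outputs.conf files in question on a Linux/Unix instance, here is a minimal sketch that lists every outputs.conf under $SPLUNK_HOME/etc that explicitly sets useACK to true or 1. The function name and the exact value matching are assumptions, and values inherited from defaults are not covered. Keep in mind that with useACK off, the forwarder no longer waits for the indexer to acknowledge data, so events in flight can be lost.

```shell
#!/bin/sh
# Hypothetical helper: print every outputs.conf under a directory that
# explicitly sets useACK to true (or 1). Matching is case-insensitive.
outputs_with_useack() {
    dir="${1:-${SPLUNK_HOME:-/opt/splunk}/etc}"
    find "$dir" -type f -name outputs.conf \
        -exec grep -liE '^[[:space:]]*useACK[[:space:]]*=[[:space:]]*(true|1)[[:space:]]*$' {} +
}
```

After any change, `splunk btool outputs list --debug` shows the effective useACK value and which file it comes from.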


Turning OFF ACK may cause data-loss.


So what's the verdict? Is the workaround working?