- Splunk Disk Space

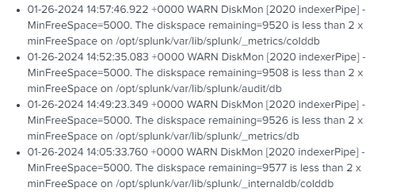

Hello everyone,

I'm currently trying to optimize Splunk's disk space and index usage.

I read about:

- Changing the parameter "Pause indexing if free disk space (in MB) falls below"

- Never modifying the indexes.conf parameters

- And some other posts from the community

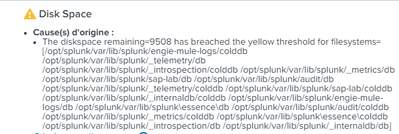

But I'm not quite sure what the solution to my problem is:

The coldToFrozenDir/Script parameters are empty.

Kind regards,

Tybe

Changing the threshold will restore indexing, but is just kicking the can down the road. As @PickleRick said, you should find out where the space is being used. It may be necessary to add storage or reduce the retention time on one or more of the larger indexes.

Make sure indexed data is on a separate mount point from the OS and from Splunk configs. That will keep a huge core dump or lookup file from blocking indexing.
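To see how close a volume is to that threshold, its free space can be compared with the configured value. A minimal sketch, assuming a POSIX `df` and the 5000 MB value mentioned in this thread; `free_mb` and `below_threshold` are hypothetical helper names, not Splunk commands:

```shell
#!/bin/sh
# Print the free space, in MB, of the filesystem that holds the given path.
# -P forces the POSIX one-line-per-filesystem format; -k reports 1 KB blocks.
free_mb() {
    df -Pk "$1" | awk 'NR==2 {print int($4 / 1024)}'
}

# Succeed (exit 0) if the free space for path $1 is below threshold $2 (in MB).
below_threshold() {
    [ "$(free_mb "$1")" -lt "$2" ]
}

# Example run against the root filesystem with the 5000 MB value from the thread.
if below_threshold / 5000; then
    echo "Less than 5000 MB free on /"
else
    echo "At least 5000 MB free on /"
fi
```

Pointing the same check at the mount that holds the indexed data shows whether that volume, rather than the OS volume, is the one running short.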

If this reply helps you, Karma would be appreciated.

Hello @PickleRick and @richgalloway 🙂

Thank you both for your answers! First, I should say this infrastructure is not mine, but now I need to optimize it. Of course I know we can't fill the disk to the brim, and I'm trying to fully understand the impact of my future actions.

@PickleRick I'm not quite sure what uses up my space since I'm not a Splunk expert yet, haha.

Here is what I found:

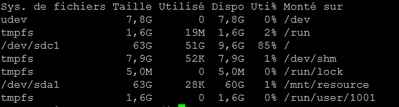

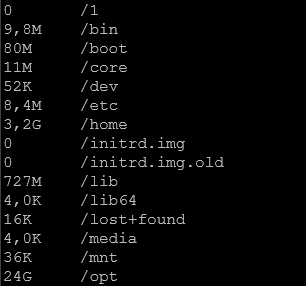

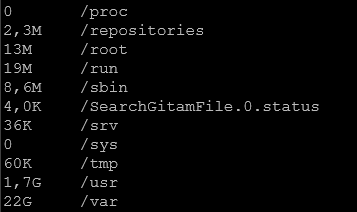

When I run the command:

sudo du -sh /*

So, do I need to reduce the retention time, or is something maybe using more space than it should?

Have a nice day, all!

1. This is not a big server.

2. You seem to have a lot of data in /var (almost as much as your whole Splunk installation). Typically, on a Splunk server, /var would only contain the last few days or weeks of logs and probably some cache from the package manager. It is up to you to diagnose what is eating up your space there.
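One way to start that diagnosis is to rank the subdirectories of /var by size. A minimal sketch, assuming a `du` that supports `-d` (GNU, BSD and BusyBox do); `largest_dirs` is a hypothetical helper name:

```shell
#!/bin/sh
# List a directory and its immediate subdirectories by size in KB, biggest first.
# $1 = directory to inspect, $2 = number of lines to show (default 10).
# -x stays on one filesystem, -k reports KB so a plain numeric sort works,
# -d 1 limits the report to one level below the starting directory.
# The first line of the output is the total for the directory itself.
largest_dirs() {
    du -xk -d 1 "$1" 2>/dev/null | sort -rn | head -n "${2:-10}"
}

# Example (may need sudo to read every directory):
#   largest_dirs /var 10
```

Running it again on the largest entry narrows things down one level at a time.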

Hi @PickleRick ,

1. You're totally right. As far as I understand, this server is for beginners' use, labs, etc.

2. That's right, but in the past I tried to clean some of those directories in my test environment and it didn't end well 😫 What is the best practice?

I thought I could change the minFreeSpace=5000 variable to 4000, for example ("Pause indexing if free disk space (in MB) falls below").

I'm reading some posts from the community, but I'm not finding a clear answer about reducing the retention time or deleting colddb... I really don't want to mess things up, and if you have a link to the documentation for my problem I'd appreciate it 😊

1. For beginners' use, it's _probably_ OK to have relatively short retention, provided you have sources that continuously supply your environment with events. Otherwise you might want to keep your data for longer so that your users have some material to search. No one can tell you what your case is. If it's an all-in-one server (which I assume it is), the index settings are in the settings menu under... surprise, surprise, "Indexes".

2. That's completely out of scope of Splunk administration as such and is a case for your Linux admins. We have no idea what is installed on your server, how it is configured, or why it is configured this way. So we can only tell you that "you have way too much here for the server's size", and you have to deal with it. And yes, removing random directories is not a very good practice.
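On the retention point: in indexes.conf, time-based retention is expressed in seconds through `frozenTimePeriodInSecs`, so a retention period chosen in days has to be converted first. With `coldToFrozenDir` and `coldToFrozenScript` both empty, as in the original question, buckets that reach that age are deleted rather than archived. A minimal sketch of the conversion; the 90-day figure is only an illustration and `days_to_secs` is a hypothetical helper name:

```shell
#!/bin/sh
# Convert a retention period in days to seconds (86400 seconds per day).
days_to_secs() {
    echo $(( $1 * 86400 ))
}

days_to_secs 90   # prints 7776000
```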

1. I already looked under the index settings, and my indexes are not occupying more than 3 GB of space.

2. I'm also aware that you can't determine what configuration I have, what is installed on it, etc. I'm just asking for some additional information about optimizing my parameters, maybe reducing tsidx files, because I think I'm forgetting something and I know I may not be aware of the best practice...

Kind regards 🙂

OK. If your overall usage of $SPLUNK_HOME differs greatly from the size of your indexes, check whether you have crash logs and core dumps lying around (you only need them if you're gonna debug your crashes with support; otherwise you can safely delete them).
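A minimal sketch of such a check. The file name patterns are assumptions (`crash-*.log` for Splunk crash logs, `core` or `core.*` for core dumps, whose real name depends on the OS settings), and `find_crash_files` is a hypothetical helper name:

```shell
#!/bin/sh
# List crash logs and core dump files under directory $1 with their sizes in KB.
# The name patterns below are assumptions; adjust them to match your system.
find_crash_files() {
    find "$1" -type f \( -name 'crash-*.log' -o -name 'core' -o -name 'core.*' \) \
        -exec du -k {} +
}

# Example, assuming Splunk is installed under /opt/splunk when SPLUNK_HOME is unset:
#   find_crash_files "${SPLUNK_HOME:-/opt/splunk}"
```

The function only lists files; reviewing the list before deleting anything keeps the cleanup deliberate.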

Edit:

It seems I must change the parameter "Pause indexing if free disk space (in MB) falls below" from 5000 to 4000, for example?

Am I right?

It's not that easy. Filling the disk to the brim is not very healthy performance-wise. It's good to leave at least a few percent of the disk space (depending on the size of the filesystem and use characteristics) free so that the data doesn't get too fragmented.

Question is - do you even know what uses up your space and do you know which of this data is important? (also - how do you manage your space and what did you base your limits on)