Rebalancing issues

I added two new indexers to our 10-indexer "cluster" (we have a replication factor of 1, so I'm using the quotes because it's really more of a simple distributed search setup; but we have a master node and we can rebalance, so it counts as a cluster ;-)) and I ran a rebalance so the data would get redistributed across the whole environment.

And now I'm a bit puzzled.

Firstly, the 2 new indexers are stressed with datamodel acceleration. Why is it so? I would understand if all indexers needed to re-accelerate the datamodel but only those two? (I wouldn't be very happy if I had to re-accelerate my TB-sized indexes but I'd understand).

I did indeed start the rebalancing around 16:30 yesterday.

Secondly, I can't really understand some of the effects of the rebalancing. It seems that even after rebalancing the indexers aren't really well balanced.

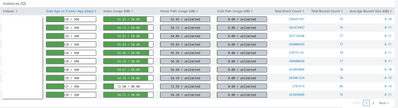

Example:

The 9th one is the new indexer. I see that it has 66 buckets so some of the buckets were moved to that server but I have no idea why the average bucket size is so low on this one.

And this is quite consistent across all the indexes - the numbers of buckets are relatively similar across the deployment but the bucket sizes on the two new indexers are way lower than on the rest.

The indexes config (and most of the indexers' config) is of course pushed from the master node so there should be no significant difference (I'll do a recheck with btool anyway).

And the third one is that I don't know why the disk usage is so inconsistent across various reporting methods.

| rest /services/data/indexes splunk_server=*in*

| stats sum(currentDBSizeMB) as totalDBSize by splunk_server

Gives me about 1.3-1.5T for the new indexers whereas df on the server shows about 4.5T of used space.

OK. I correlated it with

| dbinspect index=*

| search splunk_server=*in*

| stats sum(rawSize) sum(sizeOnDiskMB) by splunk_server

And it seems that the REST call gives the raw data size, not the summarized data size. But then again, dbinspect shows that:

1) Old indexers have around 2.2 TB of sum(rawSize) whereas new ones have around 1.3T.

2) Old indexers have 6.5TB of sum(sizeOnDiskMB), new ones - 4.5T

3) On new indexers the 4.5T is quite consistent with the usage reported by df. On old ones there is about 1T used "extra" on filesystems. Is it due to some unused but not yet deleted data? Can I identify where it's located and clean it up?
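One way to start hunting for that "extra" space is to total the file sizes under each subdirectory of the volume and compare the result with what dbinspect reports per index. The following is only a minimal sketch: the function name `usage_by_subdir` is made up for illustration, the path you pass in is whatever your own index volume is, and it sums apparent file sizes (`st_size`), which can differ somewhat from the allocated blocks that df counts.

```python
import os

def usage_by_subdir(root):
    """Return {subdir_name: total_bytes} for each immediate subdirectory of root.

    Hypothetical helper: sums apparent file sizes, not allocated disk blocks.
    """
    totals = {}
    for entry in os.scandir(root):
        if not entry.is_dir(follow_symlinks=False):
            continue
        total = 0
        for dirpath, _dirnames, filenames in os.walk(entry.path):
            for name in filenames:
                try:
                    total += os.lstat(os.path.join(dirpath, name)).st_size
                except OSError:
                    # The file disappeared while scanning (for example a bucket rolled).
                    pass
        totals[entry.name] = total
    return totals

def print_report(root):
    """Print subdirectories of root, largest first, in GiB."""
    ranked = sorted(usage_by_subdir(root).items(), key=lambda kv: kv[1], reverse=True)
    for name, size in ranked:
        print(f"{size / 1024**3:10.2f} GiB  {name}")

# Example call (the path is a placeholder, adjust it to your own volume):
# print_report("/path/to/index/volume")
```

Directories that show up here but do not correspond to anything dbinspect lists would be the first candidates to look at.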

Hi

I think that you have read this already: https://docs.splunk.com/Documentation/Splunk/8.2.4/Indexer/Rebalancethecluster

Have you set this

splunk edit cluster-config -mode manager -rebalance_threshold 0.99

before starting the rebalancing? I have noticed that the "normal" 0.95 is not enough, and I usually use 0.99.
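To show why a tighter threshold matters, here is a rough sketch. It assumes (my reading, not something confirmed in this thread) that the threshold defines a band around the average bucket count per peer, so 0.99 tolerates far less deviation than a looser value. The function `is_balanced` and the bucket counts are invented for illustration; this is not the manager's actual algorithm.

```python
def is_balanced(bucket_counts, threshold):
    """Return True if every peer's bucket count lies within the band
    [threshold * avg, (2 - threshold) * avg] around the average.

    ASSUMPTION: this band interpretation is a simplification for illustration.
    """
    avg = sum(bucket_counts) / len(bucket_counts)
    low = threshold * avg
    high = (2 - threshold) * avg
    return all(low <= count <= high for count in bucket_counts)

# Hypothetical counts: ten old peers with 80 buckets each, two new peers with 72.
counts = [80] * 10 + [72, 72]

print(is_balanced(counts, 0.90))  # loose band: this distribution already passes
print(is_balanced(counts, 0.99))  # tight band: the new peers are still too far below average
```

Under that assumption, a looser threshold lets the rebalance stop while the new peers still hold noticeably fewer buckets than the old ones.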

Then start it with

splunk rebalance cluster-data -action start -searchable true

This can take quite a long time, and you may need to run it a couple of times before it finds a balance between the nodes.

Also keep in mind that, since rebalance moves buckets and not gigabytes (my guess?), the amount of data on different nodes can still differ somewhat. Anyhow, your result should be much better than what your picture shows.
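A small sketch of that point, with made-up numbers (not taken from this environment): two peers can hold a similar number of buckets and still hold very different amounts of data if one of them received mostly small buckets.

```python
# Hypothetical bucket sizes in MB, for illustration only.
old_indexer = [750] * 70 + [40] * 10   # mostly full-size buckets plus a few small ones
new_indexer = [40] * 66                # a similar count, but only small buckets

def summarize(bucket_sizes_mb):
    """Return (bucket count, total MB, average MB per bucket)."""
    count = len(bucket_sizes_mb)
    total = sum(bucket_sizes_mb)
    return count, total, total / count

for name, buckets in (("old", old_indexer), ("new", new_indexer)):
    count, total, avg = summarize(buckets)
    print(f"{name}: {count} buckets, {total} MB total, {avg:.2f} MB average")

# Compare balance measured by count with balance measured by size.
count_ratio = len(new_indexer) / len(old_indexer)
size_ratio = sum(new_indexer) / sum(old_indexer)
print(f"count ratio {count_ratio:.2f}, size ratio {size_ratio:.2f}")
```

The count ratio looks acceptable while the size ratio is far off, which is the kind of picture a count-based balance can produce.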

One comment on using REST to get those values: it seems that from time to time REST doesn't give you correct values without restarting splunkd on the server!

Before starting, it could be a good idea to remove excess buckets first (I can't recall whether rebalance does that itself or not).

Unfortunately I cannot say anything about the datamodel acceleration.

r. Ismo

I didn't expect perfect balance (many of my indexes are quite fast-rotating, so it will soon balance itself out naturally). But as I remembered (and the article confirms), rebalancing should move whole buckets around, not just parts of them, right? That's my biggest surprise: even though the bucket count is more or less reasonable (a 30% difference I can live with), the bucket size and consequently the event count are hugely different.

Yes, it always moves whole buckets. BUT you must remember that buckets can (and usually do) have different sizes. First, is it a bucket with just raw data, or does it also contain metadata? Second, is its maximum size defined as auto or as the high-volume setting? Third, did it grow to full size, or was there some issue that closed it early and rolled it to warm "half empty"?

Yes, I can understand all that 🙂

But honestly, I wouldn't expect that out of, for example, 800 or so buckets per index (on average around 80 buckets per indexer, 10 indexers), it would migrate only the 60 or so short ones 😉

Anyway, as I said, the data is quite fast rotating, so I'd expect the indexes to balance themselves out eventually quite quickly. I was just surprised to see such "irregularity" in size distribution.