How to get events for .csv headers?

Hi,

I found similar questions but the usual solution of using HEADER_FIELD_LINE_NUMBER did not work.

My custom CSV sourcetype is working fine, except I'm getting an extra event with the column names. Splunk knows they're column names, but it's still treating them as field values, so the event has Col1=Col1, Col2=Col2, etc. The CSVs all start the same way: an identical line 1, then an identical line 2 containing the column names. After adding HEADER_FIELD_LINE_NUMBER = 2 (in props.conf on the forwarder), I'm still getting events with the column names, but now I'm ALSO getting events with just the first line. Am I missing something?

Thanks

After setting this aside until we finally upgraded Splunk, a solution has been found. After working with Splunk support for weeks, we were not able to fix it directly and concluded that the errors are due to Splunk trying to read the files before our diode software has finished writing them. The files are transferred once every 24 hours, so I created a script, run by a scheduled task, that copies the files to a different set of folders, and set up batch inputs to read and then delete the copies. All logs now come through without any extra junk.

Thanks for your help!
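The copy script itself was not posted. A minimal Python sketch of the idea described above might look like the following. The function name, the `min_age_seconds` threshold, and the `.part` temporary suffix are all assumptions made for illustration, not details from the original workaround. The sketch skips files modified recently (so a file still being written is left alone) and renames each copy into place only once it is complete.

```python
import shutil
import time
from pathlib import Path


def copy_stable_files(src_dir, staging_dir, min_age_seconds=300, now=None):
    """Copy *.csv files that have not been modified recently into staging_dir.

    Returns the names of the files that were copied.
    """
    src = Path(src_dir)
    staging = Path(staging_dir)
    staging.mkdir(parents=True, exist_ok=True)
    now = time.time() if now is None else now

    copied = []
    for path in sorted(src.glob("*.csv")):
        # Assumption: a file untouched for min_age_seconds is fully written.
        if now - path.stat().st_mtime < min_age_seconds:
            continue
        # Copy under a temporary name, then rename, so the reader of the
        # staging folder never sees a half-copied .csv file. This assumes the
        # input watching the staging folder only picks up *.csv.
        tmp = staging / (path.name + ".part")
        shutil.copy2(path, tmp)
        tmp.replace(staging / path.name)
        copied.append(path.name)
    return copied
```

A scheduled task could call `copy_stable_files` with the real source and staging folders, and a batch input with `move_policy = sinkhole` pointed at the staging folder would then read and delete the copies, as described in the post.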

@R15 Odd question for you, but did you run the fillnull command?

No, I don't believe so.

As an added note to this forum, I am having the exact same issue. Interestingly enough, like you I had HEADER_FIELD_LINE_NUMBER set (in my case it is set to 1). I have been ingesting the files for a couple of days, and for some reason today it started ingesting the headers as an event, though nothing has changed on my end.

What version of Splunk are you running? We're still on 8, but upgrading to 9 soon™.

We are running 9.1.2

I posted a workaround if you're still having this issue.

Hi @R15

Please provide all the Splunk config for this CSV file (inputs, props, transforms?) that are defined on the UF.

Use the insert/edit code button to format it nicely.

props.conf:

    [sample]
    SHOULD_LINEMERGE = false
    pulldown_type = true
    HEADER_FIELD_LINE_NUMBER = 2
    INDEXED_EXTRACTIONS = csv
    TIMESTAMP_FIELDS = TimeCreated
    KV_MODE = none
    category = Structure
    disabled = false

inputs.conf:

    [monitor://filepath]
    disabled = 0
    crcSalt = <SOURCE>
    index = index2
    sourcetype = sample

I've redacted the filepath and changed some names. I'm not currently using any transforms. This is monitoring ~250 CSVs that get replaced once a day. It works fine except for the extra events.

Thank you for your assistance.

Hi @R15

Thanks. That configuration looks good to me, so it's a bit strange.

You probably have already done this, but if you have access to the source CSV files, have you opened them with a text editor and confirmed there are no empty or blank lines at the start? That might explain why it works for some files and not others.

If you could provide a redacted example of the first three or so lines of a CSV file, then that may help.
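With around 250 files, the check suggested above could also be scripted rather than done by eye. Here is a rough Python sketch, assuming the files are UTF-8 or ASCII; the helper name `inspect_head` is made up for this example. It returns the first few lines of a file and flags a leading UTF-8 byte order mark or blank lines, either of which would shift the header line.

```python
def inspect_head(path, n=3):
    """Return the first n lines of a file as (line_number, text, notes) tuples."""
    findings = []
    with open(path, "rb") as fh:
        for number in range(1, n + 1):
            raw = fh.readline()
            if not raw:
                break  # file has fewer than n lines
            notes = []
            # A UTF-8 byte order mark is invisible in most text editors.
            if number == 1 and raw.startswith(b"\xef\xbb\xbf"):
                notes.append("UTF-8 BOM")
                raw = raw[3:]
            text = raw.decode("utf-8", errors="replace").rstrip("\r\n")
            if not text.strip():
                notes.append("blank")
            findings.append((number, text, notes))
    return findings
```

Running this over every file in the monitored folder and comparing the results would show whether any file deviates from the expected two-line preamble.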

I do have access and have looked through a few dozen of them. They all appear normal/identical for the first two lines. I can't copy and paste from that environment so I only typed the first few fields of line 2:

#TYPE System.Diagnostics.Eventing.Reader.EventLogRecord

"Message","Id","Version","Qualifiers","Level","Task"

They're all Windows event logs with the same fields.

To reiterate, before I was getting all events correctly plus an additional event containing only line 2. After adding HEADER_FIELD_LINE_NUMBER = 2, I'm still getting that additional event plus another containing only line 1.

Well, you've done everything right, as far as I can tell. It looks like it should work.

Generally, Splunk is pretty good at auto-detecting the header field line for inputs like this without specifying HEADER_FIELD_LINE_NUMBER. Another setting you could try is this:

    PREAMBLE_REGEX = ^(#TYPE| *$)

It should ignore any header lines that start with #TYPE or are blank/empty. Remove HEADER_FIELD_LINE_NUMBER and ensure the UF is restarted.
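As a quick sanity check of the pattern itself, outside Splunk, the sketch below runs it against the two sample lines quoted earlier in the thread plus an empty and a whitespace-only line. Splunk uses PCRE, so Python's `re` module is only a stand-in here, on the assumption that a pattern this simple behaves the same in both engines.

```python
import re

# Same pattern as the PREAMBLE_REGEX value suggested above.
PREAMBLE = re.compile(r"^(#TYPE| *$)")


def is_preamble(line):
    """Return True if the line would be treated as preamble by this pattern."""
    return PREAMBLE.search(line) is not None


samples = [
    "#TYPE System.Diagnostics.Eventing.Reader.EventLogRecord",
    "",
    "   ",
    '"Message","Id","Version","Qualifiers","Level","Task"',
]
for line in samples:
    print(repr(line), is_preamble(line))
```

The #TYPE line and the two empty-looking lines match, while the quoted column-name line does not, which is the behaviour the setting relies on.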

Hope that helps

@yeahnah I've added that line and removed HEADER_FIELD_LINE_NUMBER but to no effect.

You mentioned restarting the forwarder, which I haven't been doing as I don't have permissions. I've been pushing these changes from a deployment server (over which I have full access/control).

On a Splunk universal forwarder agent you'll need a restart to pick up any changes, which would help explain why this is not working.

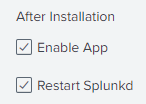

If you do not have access, the restart can still be done via the deployment server: under the Apps tab, ensure the Restart Splunkd checkbox is ticked for the deployed app.

If you have access to the _internal index, you can check for a restart of the agent with the following search:

    index=_internal sourcetype=splunkd host=<your host> My GUID

Hopefully, you'll see some positive changes once this is done.

I've checked and that box was already ticked.

I ran that search and there are events when I made changes yesterday and last week.

Are we at a dead end?

Can you confirm the version of the Splunk universal forwarder agent you are using? Maybe it is an older version and the props.conf settings do not work on it.

Hi

One way to make onboarding/debugging much easier is to install Splunk on your own laptop and then use that CSV sample with it. Just add the data with Settings -> Add Data and then change those options as needed. When you are happy with the props & transforms, copy them to your real app on the DS and then deploy them.

On macOS with Splunk 9.0.4.1, this works correctly with these settings:

    [csv]
    SHOULD_LINEMERGE=false
    LINE_BREAKER=([\r\n]+)
    NO_BINARY_CHECK=true
    CHARSET=UTF-8
    INDEXED_EXTRACTIONS=csv
    KV_MODE=none
    category=Structured
    description=Comma-separated value format. Set header and other settings in "Delimited Settings"
    disabled=false
    pulldown_type=true
    HEADER_FIELD_LINE_NUMBER=2
    MAX_DAYS_AGO=9999

MAX_DAYS_AGO is not needed; I just added it so that TimeCreated values like 11111111111 would work.

r. Ismo

I did some testing before implementing by starting to add a sample directly to an indexer, and the events preview looked good. There were no extra events.

I will push your sourcetype code and report back (I'll just replace MAX_DAYS_AGO=9999 with TIMESTAMP_FIELDS=TimeCreated).

Our forwarders are running 8.0.6

The Splunk docs indicate it all should work, but maybe the Splunk UF and Splunk Enterprise are not aligned at this version. You could try a Support ticket but Splunk would probably want a supported UF version installed to do anything.

Like @isoutamo and you said, it works fine when testing the config on Splunk Enterprise (v8.2.7 for me). The only other thing I can think of is that there is a small mistake in the config you have pushed out. Double/triple check that the sourcetype names match. Maybe try adding a new stanza entry using [source::<your file>], which has a higher precedence than sourcetype stanzas, and see if that works.

It gets pretty hard to help when you are not able to see the environment and the real configs in use. All I can say, from what I have seen, is that it should be working, so either it's a versioning issue with the Splunk UF (can it be upgraded?) or the config has a small mistake.

@yeahnah I have confirmed the forwarder and indexer are both on 8.0.6. We intend to upgrade but I'd be lucky if it's by the end of the year.

With no sourcetype or with the default csv sourcetype the events do not have proper timestamps. So I know my sourcetype is definitely being applied, and it works, it's just throwing out these junk events as well for some reason. I replaced it with the code from @isoutamo and got the same result. I will turn to support for further troubleshooting. Thank you both for your time!

Good luck and if you do find out why it's not working for you then it would be good to update this question with the answer.