
Can I use OpenTelemetry metric data as a seed for Eventgen metric data?

Hi

I am working on a project where we are ingesting OpenTelemetry metric data.

I am looking for a way to re-import metric data for testing, and I have been looking at Eventgen.

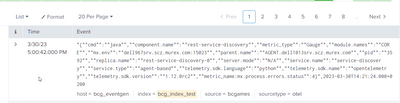

I took some metric data that I have and, using mpreview, exported it to a file (below: sample.metric4).

I followed this great video - https://www.youtube.com/watch?v=WNk6u04TrtU

_raw,_time

"{""cmd"":""python3"",""component.name"":""rtpm-probe"",""metric_type"":""Gauge"",""module.names"":""MONITORING"",""mx.env"":""dell967srv.scz.murex.com:15023"",""parent.name"":""AGENT.dell1013srv.scz.murex.com"",""pid"":""789937"",""replica.name"":""rtpm-probe-ORCH2"",""server.mode"":""N/A"",""service.name"":""monitoring"",""service.type"":""agent-based"",""telemetry.sdk.language"":""python"",""telemetry.sdk.name"":""opentelemetry"",""telemetry.sdk.version"":""1.12.0rc2"",""metric_name:mx.process.errors.status"":4}",2023-03-30T14:21:24.000+0200

Then, using Eventgen, I was able to import this data into an event index to test that it worked, and it did.

I have been trying to send it to a metric index, but no luck. I think I am close, but I just can't crack it.

This is the eventgen.conf file I have been using.

Note that I have tried multiple metric-related sourcetypes, but I don't seem to be picking the correct one. Or the mpreview format is not OK!

[sample.metric4]

mode=sample

interval = 1m

earliest = -2m

latest = now

count=-1

outputMode = metric_httpevent

index = bcg_eventgen_metrics

host = bcg_eventgen

source = bcgames

sourcetype=otel

This is the error I am getting

The metric event is not properly structured, source=bcgames, sourcetype=metrics_csv, host=bcg_eventgen, index=bcg_eventgen_metrics. Metric event data without a metric name and properly formated numerical values are invalid and cannot be indexed. Ensure the input metric data is not malformed, have one or more keys of the form "metric_name:<metric>" (e.g..."metric_name:cpu.idle") with corresponding floating point values.

3/30/2023, 5:20:02 PM

When I change eventgen.conf to send to an event index, I can see the data. I just can't figure out how to get it into a metric index. Perhaps my outputMode is not correct.

Thanks in advance for any help 🙂


Hi,

How is your otel source type defined?

Using your sample data with metric_name:mx.process.errors.status changed to mx.process.errors.status, I created a source type named otel based on the log2metrics_json source type following the process documented here: <https://docs.splunk.com/Documentation/Splunk/9.0.4/Metrics/L2MSplunkWeb>.
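If it helps, the key rename can be scripted rather than done by hand. The following is only a rough Python sketch, assuming the mpreview export is a CSV with `_raw` and `_time` columns as in your sample; the function names and the output file name are made up for illustration and are not part of Splunk or Eventgen.

```python
import csv
import json

# Prefix that mpreview puts in front of the measure name in _raw.
PREFIX = "metric_name:"


def strip_metric_prefix(raw_json: str) -> str:
    """Return the JSON event with any 'metric_name:' key prefix removed."""
    event = json.loads(raw_json)
    renamed = {
        (key[len(PREFIX):] if key.startswith(PREFIX) else key): value
        for key, value in event.items()
    }
    return json.dumps(renamed)


def rewrite_sample(src, dst) -> None:
    """Copy a _raw,_time CSV from src to dst, renaming keys inside _raw."""
    reader = csv.DictReader(src)
    writer = csv.DictWriter(dst, fieldnames=reader.fieldnames)
    writer.writeheader()
    for row in reader:
        row["_raw"] = strip_metric_prefix(row["_raw"])
        writer.writerow(row)


# Hypothetical usage (the output file name is an assumption):
# with open("sample.metric4", newline="") as src, \
#         open("sample.metric4.renamed", "w", newline="") as dst:
#     rewrite_sample(src, dst)
```

The csv module handles the doubled quotes in `_raw`, so the JSON does not need to be unescaped separately.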

For testing, I set DATETIME_CONFIG = CURRENT.

The user interface defaults to _ALLNUMS_ for measures, which includes the mx.process.errors.status and pid fields. I changed this value to mx.process.errors.status. The include and exclude lists were left empty to use all remaining fields as dimensions.
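To illustrate why pid ends up as a measure under _ALLNUMS_, here is a small Python sketch. It is not Splunk's actual logic; it simply assumes that any value that parses as a number is treated as a measure, which matches what I saw with pid. The helper names are hypothetical.

```python
def numeric_looking(event: dict) -> list:
    """List the keys whose values parse as numbers (assumed _ALLNUMS_-like behaviour)."""
    keys = []
    for key, value in event.items():
        try:
            float(value)
        except (TypeError, ValueError):
            continue  # not numeric, so it would remain a dimension
        keys.append(key)
    return keys


def split_fields(event: dict, measures: set) -> tuple:
    """Split an event into (measures, dimensions) given an explicit measure list."""
    measure_values = {k: float(v) for k, v in event.items() if k in measures}
    dimensions = {k: str(v) for k, v in event.items() if k not in measures}
    return measure_values, dimensions


# A few fields from the sample event, after the key rename.
event = {
    "cmd": "python3",
    "pid": "789937",
    "server.mode": "N/A",
    "telemetry.sdk.version": "1.12.0rc2",
    "mx.process.errors.status": 4,
}

print(numeric_looking(event))
print(split_fields(event, {"mx.process.errors.status"}))
```

With the explicit measure list, pid stays a dimension alongside the other string fields.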

Adding the data to a metrics index worked as expected.

The final configuration is below. See <https://docs.splunk.com/Documentation/Splunk/9.0.4/Admin/Transformsconf#Metrics> for more information on customizing metric transforms.

# props.conf

[otel]

DATETIME_CONFIG = CURRENT

INDEXED_EXTRACTIONS = json

LINE_BREAKER = ([\r\n]+)

METRIC-SCHEMA-TRANSFORMS = metric-schema:log2metrics_default_json

NO_BINARY_CHECK = true

category = Log to Metrics

description = JSON-formatted data. Log-to-metrics processing converts the numeric values in json keys into metric data points.

disabled = false

pulldown_type = true

#TZ = <time zone>

# transforms.conf

[metric-schema:log2metrics_default_json]

METRIC-SCHEMA-MEASURES = mx.process.errors.status